Yan Cui


Time really does fly when you’re having fun! In the blink of an eye it’s been a whole year since I left Credit Suisse to start a career making social games with IwI. All in all, it’s been a year filled with lots of hard work, lots of learning and honestly, a hell of a lot of fun! The great thing about doing something completely different is that you get to learn a new stack of technologies and tackle an altogether different set of challenges, and in the end I believe it has made me a better developer.

One of those new technologies which I’ve had to get used to is Amazon Web Services (AWS), Amazon’s cloud computing solution, and this post is an account of my personal experience of working closely with it over the past year.

By now you’ve probably heard plenty about cloud computing already (chances are one of the providers has tried to sell their service to you already!), so I won’t bore you with vague marketing taglines (well, I’ve got nothing to sell here anyway :-P) or wonderful stories of how company XYZ made a move to the cloud and is now able to serve millions more customers whilst paying peanuts to run their servers.

Well.. truth is, things aren’t always rosy and there have been several high profile disasters which resulted in companies losing credibility or, in extreme cases, their entire business.. (that’s what losing your entire database cluster does to you when you haven’t got adequate backups…). So there are new risks involved and new challenges which need to be tackled.

AWS vs other cloud solutions

All my experience with cloud computing has been with AWS, so I won’t comment on the pros and cons of other cloud solutions, but from my understanding of Microsoft’s Azure and Google’s AppEngine services, they have a very different model to that of AWS.

Azure and AppEngine’s model is best described as platform-as-a-service, where you develop your code against an SDK supplied by Microsoft or Google which allows you to make use of their respective SQL/NoSQL solutions, etc. Deployments are usually easy, and the service manages the number of instances (servers) needed to run your application at the current level of demand, whilst letting you set a max/min cap on the number of instances so that your cost doesn’t spiral out of control.

On the other hand, Amazon’s model is infrastructure-as-a-service, which is basically the same as older virtual machine technology but with lots of additional nuts and bolts such as automated provisioning, auto scaling, automated billing, etc. You have the ability to create new machine images from existing instances and use them to spawn new instances, and you can use the instances for pretty much any purpose, e.g. web servers, MySQL servers, cache clusters, etc. After all, they’re just virtual machines.

In contrast to Azure and AppEngine’s model, AWS’s model gives you more flexibility and control but at the same time requires you to do a little bit more work to get going and take on the role of an IT pro as well as a developer.

Vendor Lock-in

Possibly the biggest complaint and worry people have about migrating to the cloud is that you are locked in with a particular vendor. That’s true, but given the current state of affairs and the way things are seemingly going, I’d say fears of the likes of Microsoft, Google or Amazon going bust and their respective cloud services going up in smoke are.. well.. a little extreme.

It’s harder to move away from Azure and AppEngine given that everything you’ve coded is against a particular SDK, but with AWS’s infrastructure-as-a-service model it’s possible to migrate out of it without too much hassle. In fact, Zynga uses AWS as an incubation chamber for their new games, which allows them to monitor and learn about the hosting requirements for a new game in its turbulent early days (well, they literally go from hundreds to millions of users in a matter of days so you can imagine…) before moving the game into their own data centres once the traffic stabilises.

Pricing

In terms of pricing, there’s little that separates the three providers I’ve mentioned here. In fact, the last time I looked, the equivalent instances in Azure cost the same amount as their AWS counterparts, so clearly the market research divisions have been working hard to know exactly what their competitors have been up to :-P

Maturity

Having been released in 2006, AWS is one of the oldest cloud services out there, if not the oldest, and even then it’s still barely four years old and still a little rough around the edges (they don’t call it cutting edge for no reason I suppose). It has steadily improved both in terms of features and tooling, and there is an active community out there building supplementary tools/frameworks for it in different languages. I also find that the documentation Amazon provides is in general up-to-date and useful.

Popularity

Much to my surprise, I found out at a recent cloud computing conference that none of Microsoft, Google or Amazon made the list of top 3 cloud computing service providers. Instead, SalesForce.com made number one and I can’t remember who the other two providers are…sorry..

Services

AWS offers a whole ecosystem of different services which should cover most aspects of a given architecture, from service hosting to data storage and messaging. They even recently announced a new DNS service called Route 53!

Here’s a quick rundown of the three most important services which AWS offers:

Elastic Compute Cloud (EC2)

EC2 forms the backbone of Amazon’s cloud solution. Its key characteristics include:

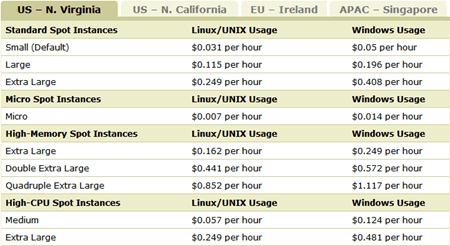

- you pay for what you use at a per instance per hour rate

- you pay for the amount of data transfers in and out of Amazon EC2 (data transfer between Amazon EC2 and other Amazon Web Services in the same region is free)

- there are a number of different OSs to choose from including Linux and Windows; Linux instances are cheaper to run as they don’t carry the license cost associated with Windows

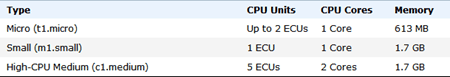

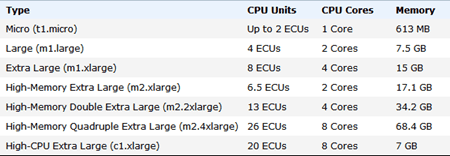

- there is a good range of different instance types to choose from, from the most basic (single CPU, 1.7 GB RAM) to high performance instances (22 GB RAM, 2 x Intel Xeon X5570, quad-core Nehalem, 2 x NVIDIA Tesla Fermi M2050 GPUs)

- a basic instance running Windows will cost you roughly $3 a day to run non-stop

- default machine images are regularly patched by Amazon

- once you’ve set up your server to be the way you want, including any server updates/patches, you can create your own AMI (Amazon Machine Image) which you can then use to bring up other identical instances

- there’s an Elastic Load Balancing service which provides load balancing capabilities at additional cost (though most of the time you’ll only need one)

- there’s a CloudWatch service which you can enable on a per instance basis to help you monitor the CPU, network in/out, etc. of your instances; this service also has its own cost

- you can use the Auto Scaling service to automatically bring up or terminate instances based on some metric, e.g. terminate 1 instance at a time if average CPU utilization across all instances is less than 50% for 5 minutes continuously, but bring up 1 new instance at a time if average CPU goes beyond 70% for 5 minutes

- you can use the instances as web servers, DB servers, Memcached cluster, etc. choice is yours

- round trips within Amazon are very, very fast, but trips out of Amazon are significantly slower, therefore the usual approach is to use Amazon SimpleDB (see below) or Amazon RDS as the database (should you need one, that is)

- the Amazon SDK is pretty solid and contains enough classes to help you write a custom monitoring/scaling service if you ever need to, but the AWS Management Console (see lower down) lets you do most basic operations anyway
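
To make the Auto Scaling rule above a bit more concrete, here’s a minimal Python sketch of the decision logic only. It is not how you configure the Auto Scaling service itself; the `ScalingRule` and `desired_change` names, the one-sample-per-minute assumption and the min/max caps are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ScalingRule:
    """Thresholds taken from the example rule; field names are illustrative."""
    scale_in_below: float = 50.0   # average CPU % below which one instance is removed
    scale_out_above: float = 70.0  # average CPU % above which one instance is added
    window: int = 5                # consecutive one-minute samples required
    min_instances: int = 1         # assumed floor
    max_instances: int = 10        # assumed cap


def desired_change(cpu_averages: Sequence[float], current: int,
                   rule: ScalingRule = ScalingRule()) -> int:
    """Return +1, -1 or 0 given fleet-wide CPU averages, one per minute, newest last."""
    if len(cpu_averages) < rule.window:
        return 0  # not enough samples to call the breach "continuous"
    recent = cpu_averages[-rule.window:]
    if all(s > rule.scale_out_above for s in recent) and current < rule.max_instances:
        return 1
    if all(s < rule.scale_in_below for s in recent) and current > rule.min_instances:
        return -1
    return 0
```

Requiring every sample in the window to breach the threshold is what stops a single noisy minute from bringing instances up or down.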

SimpleDB

Amazon’s NoSQL database. It is a non-relational, distributed, key-value data store whose key characteristics include:

- compared to traditional relational databases it has lower per-request performance: operations that would typically take 15-20ms tend to take anything between 75-100ms to complete

- in return, you get high scalability without having to do any work

- you pay for usage – how much work it takes to execute your query

- you start off with a single instance hosting your data, and instances are auto scaled up and down depending on traffic; there’s no way to change the number of database instances you start off with

- supports a SQL-like querying syntax, though still fairly limited

- for .Net, MindScape’s SimpleDB Management Tools is the best management tool we’ve used, it integrates directly into Visual Studio and at $29 a head it’s not expensive either

- performs best when traffic increases/decreases steadily; there’s a noticeable slump in response times when there’s a sudden surge of traffic, as new instances take around 10-15 mins to be ready to service requests

- data are partitioned into ‘domains’, which are equivalent to tables in a relational database

- data are non-relational; if you need a relational model then use Amazon RDS (I don’t have any experience with it, so I’m not the best person to comment on it)

- be aware of ‘eventual consistency’: data are duplicated on multiple instances after Amazon scales up your database to meet the current traffic, and synchronization is not guaranteed when you do an update, so it’s possible, though highly unlikely, to update some data then read it back straight away and get the old data back

- there are ‘consistent read’ and ‘conditional update’ mechanisms available to guard against the eventual consistency problem. If you’re developing in .Net, I suggest using the SimpleSavant client to talk to SimpleDB; it’s a fairly feature-complete ORM for SimpleDB which already supports both consistent reads and conditional updates
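
If eventual consistency sounds a bit abstract, the toy model below might help. It’s a deliberately simplified Python sketch, not the SimpleDB API: `ToyReplicatedStore`, its two-replica layout and the explicit `sync()` step are all assumptions made purely to show why a plain read can return stale data, and how a consistent read and a conditional update behave differently.

```python
class ToyReplicatedStore:
    """Toy model only: writes land on a primary copy and reach the
    secondary copy when sync() is called. Not how SimpleDB is built."""

    def __init__(self):
        self.primary = {}
        self.secondary = {}

    def put(self, key, value, expected=None):
        """Write to the primary. If `expected` is given, behave like a
        conditional update: write only if the current value matches."""
        if expected is not None and self.primary.get(key) != expected:
            return False
        self.primary[key] = value
        return True

    def get(self, key, consistent=False):
        """A consistent read is served from the primary; a plain read is
        served from the secondary, which may lag behind."""
        replica = self.primary if consistent else self.secondary
        return replica.get(key)

    def sync(self):
        """Simulate replication catching up."""
        self.secondary = dict(self.primary)


store = ToyReplicatedStore()
store.put("player:1", "score=10")
store.sync()
store.put("player:1", "score=20")                      # not yet replicated

stale = store.get("player:1")                          # old value
fresh = store.get("player:1", consistent=True)         # new value
rejected = store.put("player:1", "score=30", expected="score=10")
accepted = store.put("player:1", "score=30", expected="score=20")
```

In this model `stale` still holds the old score while `fresh` holds the new one, and the conditional update based on the stale value is rejected rather than silently overwriting a newer write.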

Simple Storage Service (S3)

Amazon’s storage service is, again, extremely scalable, and safe too: when you save a file to S3 it’s replicated across multiple nodes so you get some DR ability straight away. Many popular services such as Dropbox use it behind the scenes and it’s also the storage of choice for many image hosting services. Key characteristics include:

- you pay for data transfer in and out (data transfer between EC2 and S3 in the same region is free)

- files are stored against a key

- you create ‘buckets’ to hold your files, and each bucket has a unique URL (unique across all of Amazon, and therefore all S3 accounts)

- there’s a CloudFront service for content delivery; data is cached on the first request, which speeds up subsequent requests from the same region

- CloudBerry S3 Explorer is the best UI client we’ve used in Windows

- you can use the Amazon SDK to write your own repository layer which utilizes S3
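
As a rough idea of what such a repository layer could look like, here’s a small Python sketch. Everything in it is hypothetical (`BlobStore`, `InMemoryBlobStore`, `AssetRepository` and the key format are my own names), and the in-memory backend is only a stand-in; an S3-backed class would expose the same two methods using whichever SDK you’re on.

```python
from typing import Dict, Optional, Protocol, Tuple


class BlobStore(Protocol):
    """The minimal bucket/key surface a storage backend needs to offer."""
    def put(self, bucket: str, key: str, data: bytes) -> None: ...
    def get(self, bucket: str, key: str) -> Optional[bytes]: ...


class InMemoryBlobStore:
    """Stand-in backend for local tests; holds objects in a dict."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[str, str], bytes] = {}

    def put(self, bucket: str, key: str, data: bytes) -> None:
        self._objects[(bucket, key)] = data

    def get(self, bucket: str, key: str) -> Optional[bytes]:
        return self._objects.get((bucket, key))


class AssetRepository:
    """Application-facing layer: callers never deal with buckets or key formats."""

    def __init__(self, store: BlobStore, bucket: str) -> None:
        self._store = store
        self._bucket = bucket

    @staticmethod
    def _key(game: str, asset_name: str) -> str:
        # Hypothetical key convention: files are stored against a single string key
        return f"{game}/assets/{asset_name}"

    def save(self, game: str, asset_name: str, data: bytes) -> None:
        self._store.put(self._bucket, self._key(game, asset_name), data)

    def load(self, game: str, asset_name: str) -> Optional[bytes]:
        return self._store.get(self._bucket, self._key(game, asset_name))
```

Keeping the bucket name and key convention in one place means the rest of the application can be tested against the in-memory store and doesn’t need to change if the storage layout does.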

These are the three core services which most people use AWS for. There are other useful services which I haven’t mentioned, such as the Simple Queue Service (SQS) and Elastic MapReduce, but those are more for edge cases.

Cost

Lower cost of entry

The great thing about the pay-as-you-go pricing model for cloud computing solutions in general is that as a small start-up, or even an individual, you can have the capability to serve millions of customers right from the word go without having to invest heavily up front in infrastructure and hardware. This lowers the cost of entry and therefore the risk involved, which in turn encourages innovation.

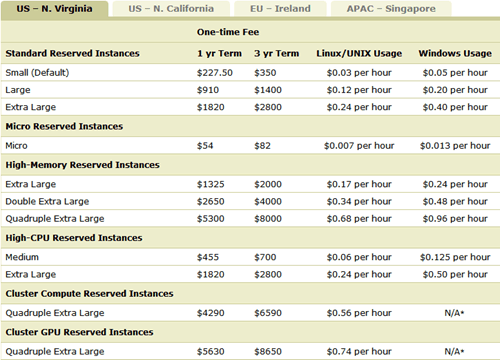

Diminishing value of renting

However, to draw an analogy with car rentals, if you only need a car occasionally it makes much more economic sense to simply rent whenever you need one, but as your needs increase there comes a point when it becomes cheaper to actually own a car outright. The same can be said of the cost of running all your services out of AWS, especially for the high performance instances required to run a database for example. See the screenshots below for some examples of the available instance types and the corresponding cost of renting by the hour:

Reserving instances

In addition to the standard ‘pay for what you use’ model, Amazon also offers discounts when you reserve an instance for 1 or 3 year terms for a one-time fee, after which the instance is reserved for you.

So one way to cut your costs is to reserve the minimum number of instances you will need to run constantly based on the minimum usage of your service and supplement them with spot instances you request dynamically to cope with spikes in traffic.
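
To get a feel for when reserving pays off, here’s a back-of-the-envelope sketch in Python. The on-demand rate is simply the roughly $3 a day figure quoted earlier for a basic Windows instance divided by 24; the reserved one-time fee and reserved hourly rate are placeholders I’ve made up, so plug in the real prices before drawing any conclusions.

```python
def break_even_hours(on_demand_hourly: float, reserved_upfront: float,
                     reserved_hourly: float) -> float:
    """Hours of running time after which reserving becomes cheaper than on-demand."""
    saving_per_hour = on_demand_hourly - reserved_hourly
    if saving_per_hour <= 0:
        raise ValueError("reserved hourly rate must be lower than the on-demand rate")
    return reserved_upfront / saving_per_hour


def total_cost(hours: float, hourly: float, upfront: float = 0.0) -> float:
    """Total spend for running one instance for `hours`."""
    return upfront + hours * hourly


ON_DEMAND_HOURLY = 3.0 / 24   # from "roughly $3 a day"
RESERVED_UPFRONT = 300.0      # placeholder, NOT a real AWS price
RESERVED_HOURLY = 0.05        # placeholder, NOT a real AWS price
HOURS_PER_YEAR = 24 * 365

hours = break_even_hours(ON_DEMAND_HOURLY, RESERVED_UPFRONT, RESERVED_HOURLY)
on_demand_year = total_cost(HOURS_PER_YEAR, ON_DEMAND_HOURLY)
reserved_year = total_cost(HOURS_PER_YEAR, RESERVED_HOURLY, RESERVED_UPFRONT)
print(f"break-even after {hours:.0f} hours")
print(f"one year on-demand: ${on_demand_year:.2f}, reserved: ${reserved_year:.2f}")
```

With these made-up numbers the reservation pays for itself after roughly 4,000 hours (about 167 days) of non-stop running, which is why it only makes sense for the instances you know you’ll keep up all the time.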

Using EC2 as a supplement

Some companies such as Zynga use a similar approach, where they run the majority of their services out of their own data centres but use EC2 instances to supplement that and help them cope with surges in traffic.

This approach, however, doesn’t apply to vendors which use a platform-as-a-service model (e.g. Azure and AppEngine) because you are more tightly locked in with the specific vendor and can’t simply run part of your service outside of their platform. For example, if you’ve developed your application to use Azure, you won’t be able to run your application out of your own servers because Azure as a platform is only provided by Microsoft.

Beware of the additional costs

When it comes to estimating your cost, it’s easy to forget about all the other small charges you incur, such as the cost of data transfers, which are fairly cheap but, depending on your usage, can easily build up and eclipse the cost of running the virtual servers. Take a flash game for example: the game and relevant assets etc. can easily amount to a few megabytes. This on its own is nothing, but multiply that by 500k, 1 million, 2 million, 10 million users, and then multiply by the number of updates/patches which require the users to download the package again, and soon you just might be looking at a rather sizable bill for your data transfers..
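
Here’s that multiplication spelled out as a quick Python sketch. The 5 MB package size, the number of downloads per user and the per-GB rate are all assumptions on my part (the rate in particular is a placeholder, not a quoted AWS price), so treat the output as an illustration of how the bill scales rather than as a forecast.

```python
def transfer_cost_usd(package_mb: float, users: int, downloads_per_user: int,
                      usd_per_gb: float) -> float:
    """Estimated outbound data transfer cost for users downloading a package."""
    total_gb = package_mb * users * downloads_per_user / 1024
    return total_gb * usd_per_gb


PACKAGE_MB = 5            # "a few megabytes", assumed
DOWNLOADS_PER_USER = 4    # initial download plus three updates, assumed
USD_PER_GB = 0.15         # placeholder rate, NOT a real AWS price

for users in (500_000, 1_000_000, 2_000_000, 10_000_000):
    cost = transfer_cost_usd(PACKAGE_MB, users, DOWNLOADS_PER_USER, USD_PER_GB)
    print(f"{users:>10,} users: ${cost:,.2f}")
```

The cost grows linearly with both the user count and the number of forced re-downloads, so every patch that invalidates the whole package multiplies the bill.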

Add to that the cost of other peripheral services such as CloudWatch or Load Balancing, etc. which are all perfectly reasonable on their own, but they all add up in the end.

It’s possible to mitigate some of these additional costs. For example, you could make use of the CloudFront service to reduce the amount of data transferred from S3 (data is cached after the first request in a given region), or better still you can architect your application so that it only loads resources at the time when they’re required, but obviously this adds to the complexity of your application and can also complicate the deployment process.

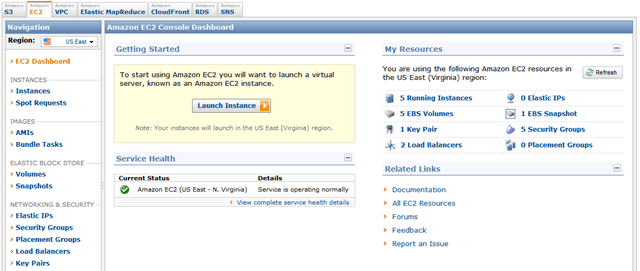

Web Console

The standard AWS Management Console (see image below) is good if unspectacular, and doesn’t cover the full range of the services Amazon provides. There are some important features missing too. For instance, in order to talk to the Auto Scaling service to adjust the scaling parameters (e.g. change the max number of instances from 10 to 5), you have to either download and use a command line tool or use the Amazon SDK, or build some UI around it to make life easier for yourself as we did.

There are other vendors such as RightScale which provide you with better tooling to help you manage/automate a lot of the work you otherwise have to do yourself, but they usually have pretty steep pricing and don’t represent great value for money for a small startup looking to get up and running cheaply. You know, the sort of folks that are attracted by cloud computing’s low cost entry point.. wait a minute…

Reliability

The well goes deep…

I read an interesting story not long ago about the Distributed DOS attack which the Anonymous collective of online protesters tried to pull off against Amazon (in protest of Amazon cutting WikiLeaks loose) after successful attempts on several other high profile sites. The attack ended in failure as the organizers admitted that Amazon was too hard a nut to crack, so clearly the well is deep enough for all of us, and then some!

Outages and Performance Drops

Over the last year there have been several outages, all of which were resolved fairly quickly, but there have also been several occasions where the performance (in terms of response time and/or number of timed-out requests) noticeably dropped for both SimpleDB and S3.

When you request a new EC2 instance, you have to specify which availability zone the instance should be spawned in, but for services such as SimpleDB you don’t have this control and new instances are always spawned in the default availability zone for your current region.

For instance, if your current region is US East (N. Virginia) the default availability zone is us-east-1a, and everyone who requests EC2 instances without changing the default availability zone will be using the same zone and is therefore likely to cause a lot of congestion in that zone and affect other services such as SimpleDB. There have even been several times when we simply weren’t able to scale up our application because the us-east-1a availability zone had no spare capacity!

It’s very important to build lots of fault tolerance into your application when you’re using AWS. You should also avoid (where possible) using the default availability zones for your EC2 instances as they tend to be the most likely to get congested.

NOTE: as I mentioned before about data transfer costs, data transfer between EC2 and other Amazon Web Services in the same region is free, so there’s no need to worry about incurring extra costs by having your EC2 instances running in a different availability zone to that of SimpleDB/S3.
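
On the fault tolerance point, one common building block is to retry a timed-out request with exponential backoff. The sketch below is a generic Python helper rather than anything from the Amazon SDK; `call_with_retries`, its defaults and the injectable `sleep` parameter are my own choices for illustration, and in real code you’d want to catch only the errors that are actually safe to retry.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(operation: Callable[[], T], max_attempts: int = 4,
                      base_delay: float = 0.2,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `operation`, retrying on any exception with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # The base delay doubles on each attempt; the random jitter keeps
            # many clients from retrying in lockstep after the same blip
            delay = base_delay * (2 ** (attempt - 1))
            sleep(delay + random.uniform(0, delay))
    raise RuntimeError("unreachable")
```

You’d wrap a single read or write in it, e.g. `call_with_retries(lambda: load_player(player_id))` where `load_player` is whatever function of yours talks to SimpleDB or S3. Passing `sleep` in as a parameter makes the helper easy to test without actually waiting.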

Bug Reporting

With AWS being a rapidly and constantly evolving platform, it’s no surprise that the odd bug has been introduced as the result of an update, e.g. for a little while no one was able to remote desktop to any instance whose public IP starts with 50, e.g. 50.0.0.0…

There is an active discussion forum where you can report any bugs you notice in the services, and Amazon employees do monitor these forums and provide helpful feedback etc. In addition to that, there’s also a service status dashboard you can use to check the current status of their services by date and by region.

Parting thoughts…

So there, a not so quick :-P high level summary of my experience with AWS over the last 12 months. To wrap things up, here are just a couple of blogs you could read regularly to find out what’s going on in the ‘cloud’:

Well, hope this helps you in some way, belated happy 2011!
