Yan Cui

I help clients go faster for less using serverless technologies.

I’ve covered the topic of using SmartThreadPool and the framework thread pool in more detail here and here. This post focuses on a more specific scenario instead: what happens when new work items are queued faster than the pool can process them?

First, let’s try to quantify the work items being queued when you do something like this:

```csharp
var threadPool = new SmartThreadPool();
var result = threadPool.QueueWorkItem(....);
```

The work item being queued is a delegate of some sort: basically a piece of code that needs to be run. Until a thread in the pool becomes available and processes it, the work item simply stays in memory as a bunch of 1s and 0s, just like everything else, together with any variables its closure has captured.

Now, if new work items are queued faster than the pool’s threads can process them, it’s easy to imagine that the memory needed to hold the pending delegates will keep climbing until the process eventually runs out of memory and an OutOfMemoryException is thrown.
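To put that intuition into rough numbers, here is a back-of-the-envelope model. The symbols are my own shorthand, not anything SmartThreadPool exposes: $\lambda$ is the rate at which work items are queued, $\mu$ the rate at which the pool completes them, $s$ the average number of bytes each pending item keeps alive (the delegate plus whatever its closure captures), $M_0$ the process’s baseline memory and $M_{\max}$ the memory available to it.

$$
M(t) \approx M_0 + (\lambda - \mu)\,t\,s \quad (\lambda > \mu),
\qquad
t_{\text{OOM}} \approx \frac{M_{\max} - M_0}{(\lambda - \mu)\,s},
\qquad
N_{\text{OOM}} = (\lambda - \mu)\,t_{\text{OOM}} \approx \frac{M_{\max} - M_0}{s}
$$

This is a simplification (it ignores GC behaviour and how the queue grows its internal storage), but it shows the two levers: a bigger gap between $\lambda$ and $\mu$ gets you to the limit sooner, while the number of items $N_{\text{OOM}}$ you can queue before hitting it depends only on how much each item holds onto. In the tests that follow, the single worker thread is asleep, so $\mu$ is effectively zero.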

Does that sound like a reasonable assumption? Let’s find out what actually happens!

Test 1 – Simple delegate

To simulate a scenario where the thread pool gets overrun by work items, I’m going to instantiate a new smart thread pool and make sure there’s only one thread in the pool at all times. Then I repeatedly queue up an action that puts the pool’s only thread to sleep for a very long time, so there are no threads left to process subsequent work items:

```csharp
using System;
using System.Threading;
using Amib.Threading; // SmartThreadPool's namespace

// create a SmartThreadPool with exactly one worker thread
var threadpool = new SmartThreadPool(new STPStartInfo
{
    MaxWorkerThreads = 1,
    MinWorkerThreads = 1,
});

var queuedItemCount = 0;
try
{
    // keep queuing new items, each of which puts the one and only
    // thread in the pool to sleep for a very long time
    while (true)
    {
        // 10,000,000 ms is roughly 2.8 hours, so the thread can't pick up
        // any more queued work items in the meantime
        threadpool.QueueWorkItem(() => Thread.Sleep(10000000));
        queuedItemCount++;
    }
}
catch (OutOfMemoryException)
{
    Console.WriteLine("OutOfMemoryException caught after queuing {0} work items", queuedItemCount);
}
```

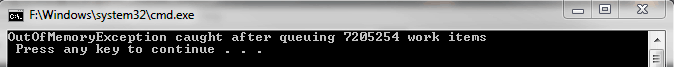

The result? As expected, the process’s memory usage went on a pretty steep climb, and within a minute it bombed out after eating up just over 1.8GB of RAM.


By that point we had managed to queue up 7,205,254 instances of the simple delegate used in this test. Keep this number in mind as we look at what happens when the closure also has to keep an expensive piece of data alive in memory.
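A quick sanity check on that number, treating the whole ~1.8GB footprint as if it were spent on queued items (which slightly overstates the true per-item cost, since it also counts the runtime’s own baseline memory):

$$
\frac{1.8 \times 10^{9}\ \text{bytes}}{7\,205\,254\ \text{items}} \approx 250\ \text{bytes per item}
\qquad \left(\approx 268\ \text{bytes if 1.8GB means } 1.8 \times 2^{30}\ \text{bytes}\right)
$$

So each pending work item costs a few hundred bytes once you add up the delegate, the pool’s per-item bookkeeping and its share of everything else the process holds on to.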

Test 2 – Delegate with very long string

For this test, I’m going to capture a 1000-character string in each queued closure, so the string objects have to stay in memory for as long as the closures do. Now let’s see what happens!

```csharp
using System;
using System.Threading;
using Amib.Threading; // SmartThreadPool's namespace

// create a SmartThreadPool with exactly one worker thread
var threadpool = new SmartThreadPool(new STPStartInfo
{
    MaxWorkerThreads = 1,
    MinWorkerThreads = 1,
});

var queuedItemCount = 0;
try
{
    // keep queuing new items, each of which puts the one and only
    // thread in the pool to sleep for a very long time
    while (true)
    {
        // allocate a fresh 1000-character string on every iteration; .NET strings
        // are UTF-16 (2 bytes per char), so that's about 2,000 bytes of character
        // data plus a small object header
        var veryLongText = new string('E', 1000);

        // capture the string in the closure so it stays alive until the work item runs
        threadpool.QueueWorkItem(() =>
        {
            Thread.Sleep(10000000);
            Console.WriteLine(veryLongText);
        });
        queuedItemCount++;
    }
}
catch (OutOfMemoryException)
{
    Console.WriteLine("OutOfMemoryException caught after queuing {0} work items", queuedItemCount);
}
```

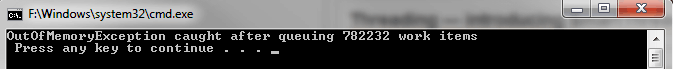

Unsurprisingly, the memory was eaten up even faster this time, and we were only able to queue 782,232 work items before running out of memory, significantly fewer than in the previous test.
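The gap between the two runs is roughly a factor of nine:

$$
\frac{7\,205\,254}{782\,232} \approx 9.2
$$

If we assume this run hit roughly the same ~1.8GB ceiling as the first one (only the item count is reported here, so that part is an assumption), each work item cost about

$$
\frac{1.8 \times 10^{9}\ \text{bytes}}{782\,232\ \text{items}} \approx 2\,300\ \text{bytes per item}
$$

Subtracting the ~250 bytes per item from the first test leaves roughly 2,050 bytes, which lines up with a 1000-character .NET string: UTF-16 stores each char in 2 bytes, giving 2,000 bytes of character data plus a small object header.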

Parting thoughts…

Besides being a fun little experiment to try out, there is a story here: one that tells of a worst-case scenario (highly unlikely, but not impossible) that’s worth keeping in the back of your mind when utilising thread pools to deal with frequent, data-intensive, blocking calls.

