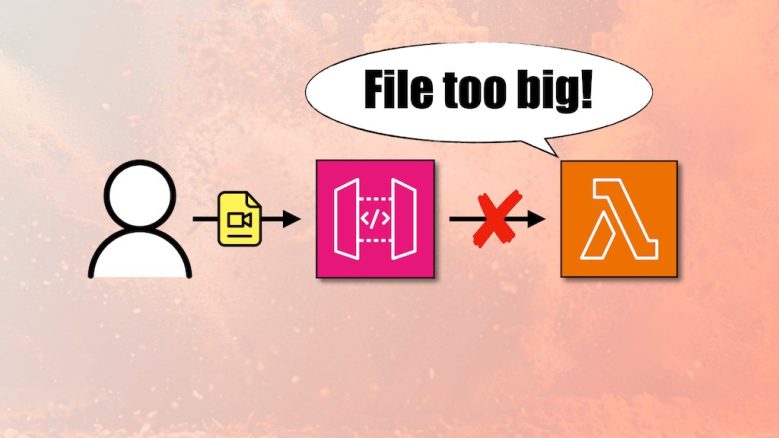

Lambda layer: not a package manager, but a deployment optimization

It’s been two years since I last wrote about Lambda layers and when you should use them. Most of the problems I discussed in that original post still stand: layers make it harder to test your functions locally. You will still need those dependencies to execute your function code locally as part of your tests. …
