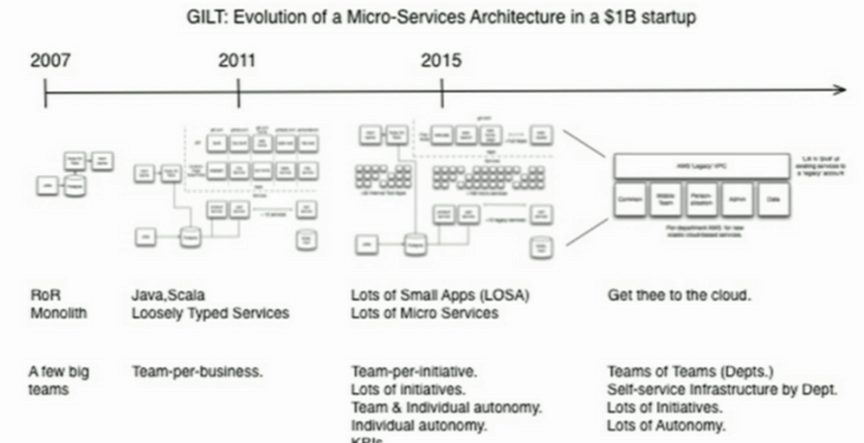

Video and slides for my talk “applying best parts of Microservices to Serverless”

Hello, just a quick note to tell you that the recording of my keynote at ServerlessDays TLV is now live! In this talk, I looked at a number of important lessons we learnt from the Microservices world and how they are still relevant to us as we move to Serverless, and we can apply past learnings and …
