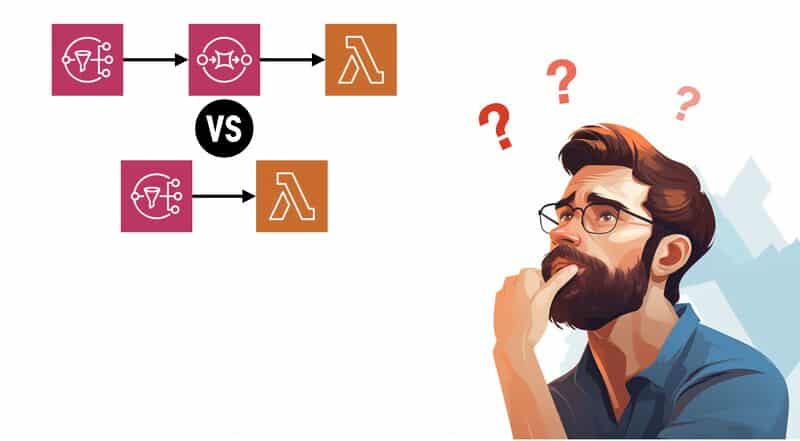

SNS to Lambda or SNS to SQS to Lambda, what are the trade-offs?

I had a really good question from one of my students at the Production-Ready Serverless workshop the other day: “I’m reacting to S3 events received via an SNS topic and debating the merits of having the Lambda triggered directly by the SNS or having an SQS queue. I can see the advantage of the queue …
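To make the two setups concrete, here is a minimal Python sketch (not from the original post) of how a handler unwraps the S3 notification in each case. The function names and sample payloads are hypothetical. The envelope shapes assume the standard SNS and SQS Lambda event formats. For the SQS path, the sketch assumes SNS raw message delivery is disabled, which is the default, unless `raw_delivery=True` is passed.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_s3_event(s3_event):
    """Yield (bucket, key) pairs from an S3 event notification payload."""
    for record in s3_event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # S3 URL-encodes object keys in notifications (e.g. spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        yield bucket, key


def handle_sns_event(event):
    """Hypothetical handler for SNS -> Lambda.

    Each SNS record carries the S3 event as a JSON string in "Message".
    """
    objects = []
    for record in event["Records"]:
        s3_event = json.loads(record["Sns"]["Message"])
        objects.extend(s3_objects_from_s3_event(s3_event))
    return objects


def handle_sqs_event(event, raw_delivery=False):
    """Hypothetical handler for SNS -> SQS -> Lambda.

    With raw message delivery off (assumed default), each SQS "body" is the
    SNS notification envelope, and its "Message" field holds the S3 event.
    With raw delivery on, the body is the S3 event itself.
    """
    objects = []
    for record in event["Records"]:
        body = json.loads(record["body"])
        s3_event = body if raw_delivery else json.loads(body["Message"])
        objects.extend(s3_objects_from_s3_event(s3_event))
    return objects
```

The only code-level difference is one extra layer of JSON decoding in the SQS path. Whether the queue's buffering and retry behaviour justifies that extra hop is the broader trade-off the question raises.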
