Yan Cui


NOTE: For an updated set of benchmarks, see the Benchmarks page.

If you have worked with JSON data in .NET, you should already be familiar with the DataContractJsonSerializer and JavaScriptSerializer classes. Both let you serialize an object to a JSON string and deserialize it back, though there are some notable differences between them.

Besides these two BCL (base class library) JSON serializers, there are popular third-party offerings such as Json.Net and the relatively new ServiceStack.Text, which also offers its own serialization format called JSV (JSON + CSV).

It is claimed that ServiceStack.Text’s JSON serializer is 3x faster than Json.Net and 3.6x faster than the BCL JSON serializers! So, naturally, I had to test it out for myself, and here’s what I found.

Assumptions/Conditions of tests

- code is compiled in release mode, with optimization options turned on

- 5 runs of the same test are performed; the top and bottom results are excluded and the remaining three results are averaged

- 100,000 instances of type SimpleObject (see below) are created, each with a different ID and Name, and then given to the serializers to serialize and deserialize

- serialization/deserialization of the objects happens sequentially in a loop (no concurrency)
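The averaging rule in the list above (five runs, drop the fastest and slowest, average the remaining three) can be sketched as follows. This is only an illustration of the arithmetic, not the code used for these tests; the `TrimmedTimer` class and its method names are hypothetical.

```csharp
using System;
using System.Diagnostics;
using System.Linq;

// Hypothetical helper, not part of the original test code.
public static class TrimmedTimer
{
    // Runs the action `runs` times and returns the elapsed milliseconds of each run.
    public static double[] TimeRuns(Action action, int runs = 5)
    {
        var timings = new double[runs];
        for (var i = 0; i < runs; i++)
        {
            var stopwatch = Stopwatch.StartNew();
            action();
            stopwatch.Stop();
            timings[i] = stopwatch.Elapsed.TotalMilliseconds;
        }
        return timings;
    }

    // Drops the single fastest and single slowest timing, then averages the rest.
    public static double TrimmedAverage(double[] timings)
    {
        if (timings.Length < 3)
            throw new ArgumentException("Need at least three timings.", nameof(timings));

        return timings.OrderBy(t => t)
                      .Skip(1)
                      .Take(timings.Length - 2)
                      .Average();
    }
}
```

For example, timings of 10, 50, 20, 30 and 40 ms give a trimmed average of 30 ms, because the 10 and 50 are discarded before averaging.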

```csharp
[DataContract]
public class SimpleObject
{
    [DataMember]
    public int Id { get; set; }

    [DataMember]
    public string Name { get; set; }
}
```
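To make concrete what a single serialize/deserialize round trip looks like, here is a minimal sketch using the BCL DataContractJsonSerializer with the SimpleObject type. The `RoundTripSketch` class and its methods are hypothetical names for illustration. Creating a new serializer on every call is an assumption made for brevity, so this may differ from how the actual test code is set up.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Json;
using System.Text;

[DataContract]
public class SimpleObject
{
    [DataMember]
    public int Id { get; set; }

    [DataMember]
    public string Name { get; set; }
}

// Hypothetical helper, not taken from the original test project.
public static class RoundTripSketch
{
    // Writes the object as JSON into a memory stream and returns it as a string.
    public static string Serialize(SimpleObject obj)
    {
        var serializer = new DataContractJsonSerializer(typeof(SimpleObject));
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, obj);
            return Encoding.UTF8.GetString(stream.ToArray());
        }
    }

    // Reads a SimpleObject back from its JSON string representation.
    public static SimpleObject Deserialize(string json)
    {
        var serializer = new DataContractJsonSerializer(typeof(SimpleObject));
        using (var stream = new MemoryStream(Encoding.UTF8.GetBytes(json)))
        {
            return (SimpleObject)serializer.ReadObject(stream);
        }
    }
}
```

A benchmark along the lines described would call `Serialize` for each of the 100,000 objects in one timed loop and `Deserialize` for each resulting string in another. Reusing one serializer instance across the loop would be a reasonable variation.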

Results

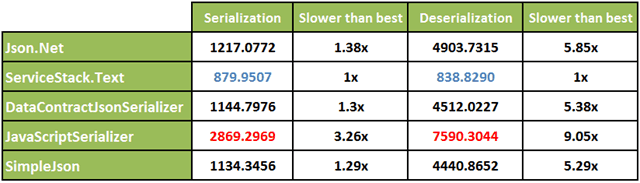

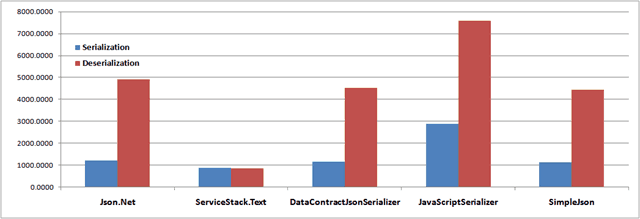

With these assumptions in mind, here are the average times (in milliseconds) I recorded for serialization and deserialization using each of five JSON serializers I tested:

Looking at these data, the ServiceStack.Text.JsonSerializer class offers the best speed for both serialization and deserialization. For serialization the gains are much more modest than those advertised, but it really comes into its own in deserialization, where the speed gains are quite impressive indeed!

Update 2011/09/12:

Turns out I had a typo in my performance test code and was using Json.Net for the SimpleJson serialization test. My bad, sorry folks… I have fixed the typo, rerun the tests, and updated the data and graph above with the correct results. As Prabir Shrestha pointed out, enabling Reflection.Emit gets you much better performance out of SimpleJson, and the new results reflect this, as the SimpleJson test was run with Reflection.Emit enabled.


Hey cool nice benchmarks :)

Note the initial speed gains were based on the Northwind DataSet benchmarks (also linked to in the ref article at: http://goo.gl/AhHUp ), which show the average performance after combining both serialization and deserialization times for each table in the Northwind database. They are similar to your benchmarks, where most of the speed gains came from deserialization. The reason for this is simple: it’s hard to make writing to an output stream any quicker, and most serializers will generally do it the same naive way.

So for comparison, your benchmarks (after combining serialization and deserialization) show ServiceStack.Text is 3.697x faster than JSON.NET.

Could you include SimpleJSON in your comparisons?

http://simplejson.codeplex.com


@Livingston – sure, I can add that to the post later on, if you’re interested though, the source code for the test is included in the examples project for my SimpleSpeedTester project:

http://simplespeedtester.codeplex.com

You should be able to put together a test yourself easily enough, I would appreciate any feedback on the tester too :-)

@Demis Bellot – that’s a very comprehensive set of benchmarks you’ve got there! Love the work you guys have done on the JSON serializer and Redis client btw :-)

@theburningmonk hey no worries thanks, glad it’s proving useful :)

@Livingston – SimpleJson included in the test as requested ;-)

oh wow…. I expected better performance than that. Thanks for adding though. I’ll check out the tester later today

Thanks for adding SimpleJson to your benchmarks.

I downloaded the source code and found that you are actually using Json.Net for the serialization test for SimpleJson.

// speed test SimpleJson

DoSpeedTest("SimpleJson", SerializeWithJsonNet, DeserializeWithSimpleJson);

SerializeWithJsonNet should be SerializeWithSimpleJson instead.

And one more thing: by default a lot of features are disabled, as we support .NET 2.0+, SL3+ and WP7 using the same code. Since SL and WP7 don’t include Reflection.Emit, we have disabled it by default. To enable it you must manually define SIMPLE_JSON_REFLECTIONEMIT as a conditional compilation symbol or uncomment line number 18.

#define SIMPLE_JSON_REFLECTIONEMIT

I had also written a benchmark app before releasing SimpleJson publicly, and it was very close to Json.Net. There are times when it actually performs better than Json.Net.

Here is the result I got after enabling Reflection.Emit for SimpleJson:

Test Group [Json.Net], Test [Serialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [964.4081] milliseconds

Test Group [Json.Net], Test [Deserialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [4175.15426666667] milliseconds

Test Group [ServiceStack.Text], Test [Serialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [597.442066666667] milliseconds

Test Group [ServiceStack.Text], Test [Deserialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [670.168766666667] milliseconds

Test Group [DataContractJsonSerializer], Test [Serialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [1152.636] milliseconds

Test Group [DataContractJsonSerializer], Test [Deserialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [3614.29796666667] milliseconds

Test Group [JavaScriptSerializer], Test [Serialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [2517.56013333333] milliseconds

Test Group [JavaScriptSerializer], Test [Deserialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [6903.22913333333] milliseconds

Test Group [SimpleJson], Test [Serialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [953.122566666667] milliseconds

Test Group [SimpleJson], Test [Deserialization] results summary:

Successes [5]

Failures [0]

Average Exec Time [3210.8124] milliseconds

all done…


Another great library worth adding to the benchmark is fastJson

@Anastasiosyal – there’s a more up-to-date post which includes fastJson, check out the benchmarks page : https://theburningmonk.com/benchmarks/

I wonder how JsonFx would stack up?

Ah, nevermind. Thanks for the code! I added JsonFx and ran it. This chart will probably look bad, but…

| Name | Serialize (ms) | Deserialize (ms) |
| --- | --- | --- |
| ServiceStack.Text | 410.0747667 | 624.4737 |
| fastJson | 814.4741667 | 823.0015333 |
| Json.Net | 919.5261667 | 1350.576233 |
| SimpleJson | 949.6640333 | 2334.040933 |
| DataContractJsonSerializer | 772.007 | 2351.076167 |
| JsonFx | 1293.9739 | 2359.188467 |
| JavaScriptSerializer | 2082.950367 | 4094.520433 |
| JayRock | 4458.4778 | 5638.371767 |

@rrjp – I’ve included JsonFx in the Json Serializers benchmark in the benchmarks page
