Yan Cui


On day three of QCon London, we were treated to some really insightful stories from the likes of Google, Atlas and Spotify. And for the first time in a while, Uber talked publicly about what they’ve been up to.

The challenge for Uber’s platform is that both supply (Uber drivers) and demand (riders) are dynamic and matching them efficiently in real-time is not easy.

Uber’s services are written in a mixture of Node.js, Python, Java and Go, whilst a whole mix of databases are used – PostgreSQL, Redis, MySQL and Riak.

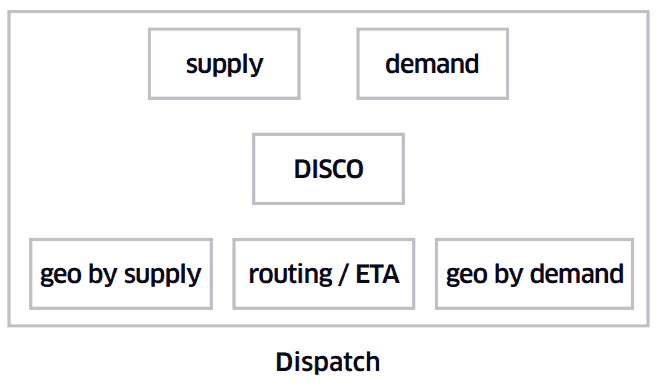

From a high level, they have a number of backend components:

They recently rewrote the Dispatch system despite Joel Spolsky advising against complete rewrites. To that, Matt Ranney said there are a number of built-in assumptions in the current dispatch system that are so deep-rooted that a revolutionary step is more efficient and productive:

- assumes 1 rider per vehicle, hard to support vehicle pooling

- the idea of moving people is baked into domain and code, making it hard to move into new markets (Matt didn’t elaborate, but I assume transportation of goods might be one such market)

- sharding by city, which is not a sustainable approach as Uber moves into more and more cities

- multiple points of failure that can bring everything down

The dispatch system was hard to fix incrementally, and since everything runs as a service, it was feasible to replace the existing system outright.

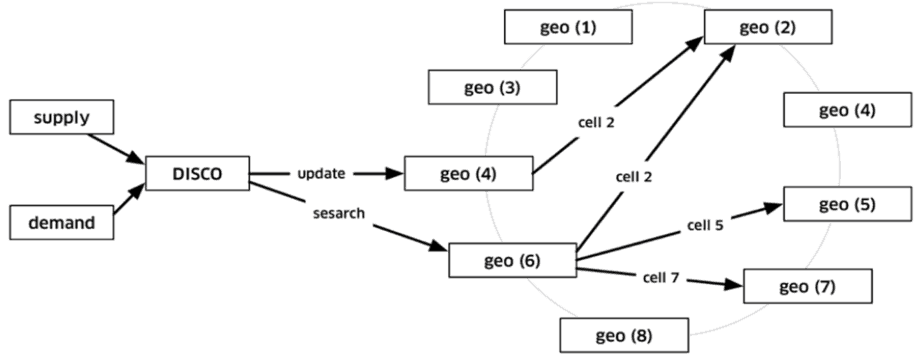

The new dispatch system looks like this:

where DISCO stands for DISpatCh Optimization service.

For geo-indexing, the dispatch service needs to know not only the physical distance between supply and demand, but also ETA based on historical travel data. The ETA calculation also needs to handle a number of special cases, including airports, where demands need to be queued (i.e. first come first served) to provide a fair service to everyone waiting at an airport.

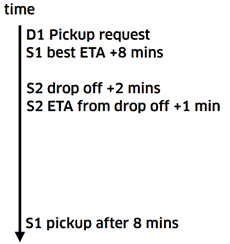

The old system can only track available supplies (i.e. cars with no riders), which means there are missed optimization opportunities such as the following:

where the demand (D1) can be met by an in-flight supply (S2) earlier than an available supply (S1).

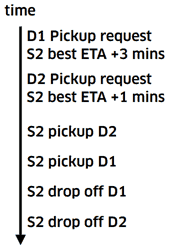

DISCO is able to consider supplies that are currently in-flight, project their routes into the future and take that into account in the matching process. It also supports vehicle pooling (if both D1 and D2 agree to share a vehicle).
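To make the S1/S2 example concrete, here is a minimal sketch of matching that also considers in-flight supplies. It is not Uber's algorithm: the `Supply` class, its fields and the minute values are all hypothetical, and a real system would derive ETAs from projected routes rather than fixed numbers.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Supply:
    name: str
    # Hypothetical: driving time from where the car is (or will drop off) to the rider.
    eta_to_rider_min: float
    # Hypothetical: minutes left on the current trip; 0 means the car is available now.
    busy_for_min: float = 0.0

    def pickup_eta(self) -> float:
        """Total minutes until this car could reach the rider."""
        return self.busy_for_min + self.eta_to_rider_min


def best_supply(supplies: Iterable[Supply], include_in_flight: bool = True) -> Optional[Supply]:
    """Pick the car with the lowest pickup ETA, optionally ignoring busy cars."""
    candidates = [s for s in supplies if include_in_flight or s.busy_for_min == 0]
    return min(candidates, key=Supply.pickup_eta, default=None)


s1 = Supply("S1", eta_to_rider_min=9.0)                    # available, but far away
s2 = Supply("S2", eta_to_rider_min=2.0, busy_for_min=3.0)  # in-flight, dropping off nearby
```

With these made-up numbers, a matcher that only sees available cars picks S1, while one that includes in-flight cars picks S2 because its total pickup ETA is lower.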

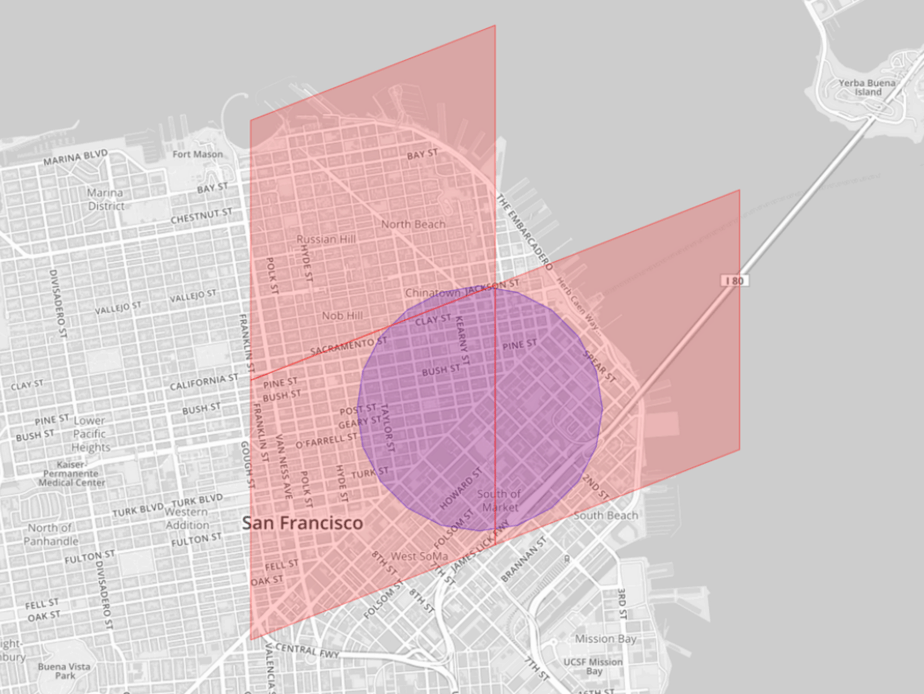

Uber breaks up the earth into tiny cells (like in Google Maps) and each is given a unique ID. Using the Google S2 library, you can identify cells that will completely cover a shape you’ve supplied:

Uber uses these cell IDs as the sharding key when updating supply, and when DISCO needs to match supply to demand, it can use that information to find supplies that are in the matching cells.
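The idea of keying supply by cell ID can be sketched as follows. This deliberately does not use the S2 library: it swaps in a naive fixed latitude/longitude grid as a stand-in, and `CELL_DEG`, `cell_id`, `covering_cells` and `SupplyIndex` are all hypothetical names for illustration only.

```python
import math
from collections import defaultdict

CELL_DEG = 0.01  # hypothetical cell size in degrees; real S2 cells are not lat/lng squares


def cell_id(lat, lng):
    """Map a coordinate to the ID of the grid cell that contains it."""
    return (math.floor(lat / CELL_DEG), math.floor(lng / CELL_DEG))


def covering_cells(lat, lng, radius_cells=1):
    """Return the cell containing the point plus its neighbours within radius_cells."""
    row, col = cell_id(lat, lng)
    span = range(-radius_cells, radius_cells + 1)
    return {(row + dr, col + dc) for dr in span for dc in span}


class SupplyIndex:
    """Tracks which drivers are in which cell; the cell ID acts as the shard key."""

    def __init__(self):
        self._by_cell = defaultdict(set)
        self._cell_of = {}

    def update(self, driver, lat, lng):
        new_cell = cell_id(lat, lng)
        old_cell = self._cell_of.get(driver)
        if old_cell is not None and old_cell != new_cell:
            self._by_cell[old_cell].discard(driver)
        self._by_cell[new_cell].add(driver)
        self._cell_of[driver] = new_cell

    def nearby(self, lat, lng, radius_cells=1):
        """Collect drivers from every cell that covers the search area."""
        found = set()
        for cell in covering_cells(lat, lng, radius_cells):
            found |= self._by_cell.get(cell, set())
        return found
```

A rider lookup only touches the handful of cells that cover the search area, which is what makes the cell ID usable as a shard key.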

A limitation with this approach is that the cells have fixed size, so one would imagine the update activities are not well spread out through the key space. It’s natural for supply and demand to be concentrated around city centres where the night life is – central London being a prime example.

Nonetheless, the goal of the routing is to:

- reduce wait time for riders

- reduce extra driving for drivers

- lower overall ETAs

In order to scale their services, Uber went with an approach of building stateful services using Node.js. To support this, they built ringpop, a library that handles cluster membership with a gossip protocol based on the SWIM paper and uses consistent hashing to forward requests to the right node. Ringpop runs on top of TChannel, Uber’s own RPC multiplexing and framing protocol.

The goal of these projects is to provide:

- performance across different languages

- high performance request forwarding

- proper pipelining

- support for checksums and tracing

- encapsulation

At a high level, any node in the cluster is able to handle any request, and if the data is not available on that node then the request is forwarded to the correct node.

This essentially removes the need to manage consistent hashing on the client.
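A minimal sketch of that handle-or-forward behaviour, assuming a plain consistent hash ring with virtual nodes. This is not ringpop's API: `HashRing`, `handle_or_forward` and the node names are hypothetical, and membership changes here are manual rather than driven by gossip.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Stable 64-bit hash so that every node computes the same ring."""
    return int.from_bytes(hashlib.sha1(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, nodes, replicas=64):
        self._replicas = replicas
        self._points = []  # sorted list of (hash, node) virtual points
        for node in nodes:
            self.add(node)

    def add(self, node):
        for i in range(self._replicas):
            bisect.insort(self._points, (_hash(f"{node}#{i}"), node))

    def remove(self, node):
        self._points = [p for p in self._points if p[1] != node]

    def owner(self, key):
        """Return the node owning the first ring point at or after the key's hash."""
        if not self._points:
            raise LookupError("ring is empty")
        idx = bisect.bisect_left(self._points, (_hash(key), ""))
        return self._points[idx % len(self._points)][1]


def handle_or_forward(ring, local_node, key):
    """Any node can receive the request; only the owner actually handles it."""
    owner = ring.owner(key)
    if owner == local_node:
        return ("handled", local_node)
    return ("forwarded", owner)
```

Because every node holds the same ring, the client can send a request to any node and never needs to know which one owns the key.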

For Uber, availability is of paramount importance, as the cost of switching to a competitor is low. So they decided to:

- make everything retryable, which means making every operation idempotent (something which I suspect can be challenging in practice)

- make everything killable (chaos monkey style); ringpop detects failed nodes and removes them from the cluster

- crash only, no complicated graceful shutdowns

- break things up into small pieces

which in turn required some cultural changes:

- no pairs (I think he was talking about read-replica setups where there’s a potentially complicated failover process)

- kill everything, even databases
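The "make everything retryable" point relies on operations being idempotent. One common way to get there, sketched below, is to attach a request ID to each operation and have the service return the stored result when it sees the same ID again. The talk did not say how Uber does this, so `TripService`, `LossyTransport` and `call_with_retries` are all hypothetical.

```python
class TripService:
    """Remembers results by request ID so a repeated request has no extra effect."""

    def __init__(self):
        self._results = {}  # request ID -> result already produced for it
        self.trips_created = 0

    def create_trip(self, request_id, rider):
        if request_id in self._results:
            return self._results[request_id]  # replay: no second trip is created
        self.trips_created += 1
        result = {"trip_id": self.trips_created, "rider": rider}
        self._results[request_id] = result
        return result


class LossyTransport:
    """Runs the call but drops the first response, simulating a node killed mid-reply."""

    def __init__(self, service):
        self._service = service
        self._dropped = False

    def create_trip(self, request_id, rider):
        result = self._service.create_trip(request_id, rider)
        if not self._dropped:
            self._dropped = True
            raise ConnectionError("response lost")
        return result


def call_with_retries(operation, attempts=3):
    """Retry on connection errors; safe only because the operation is idempotent."""
    last_error = None
    for _ in range(attempts):
        try:
            return operation()
        except ConnectionError as error:
            last_error = error
    raise last_error
```

In this sketch the first attempt creates the trip but loses the reply, and the retry gets the stored result back instead of creating a second trip.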

Since services talk to each other via load balancers, you need to be able to kill load balancers too. So instead, the load balancer logic is put in the service client (similar to Netflix Ribbon, from what I gathered). I didn’t buy Matt’s rationale here since it’s possible to make load balancers highly available too, but then he also mentioned the ability to do smarter routing – choosing the data centre with better latency in a globally deployed infrastructure, for example – which makes more sense.

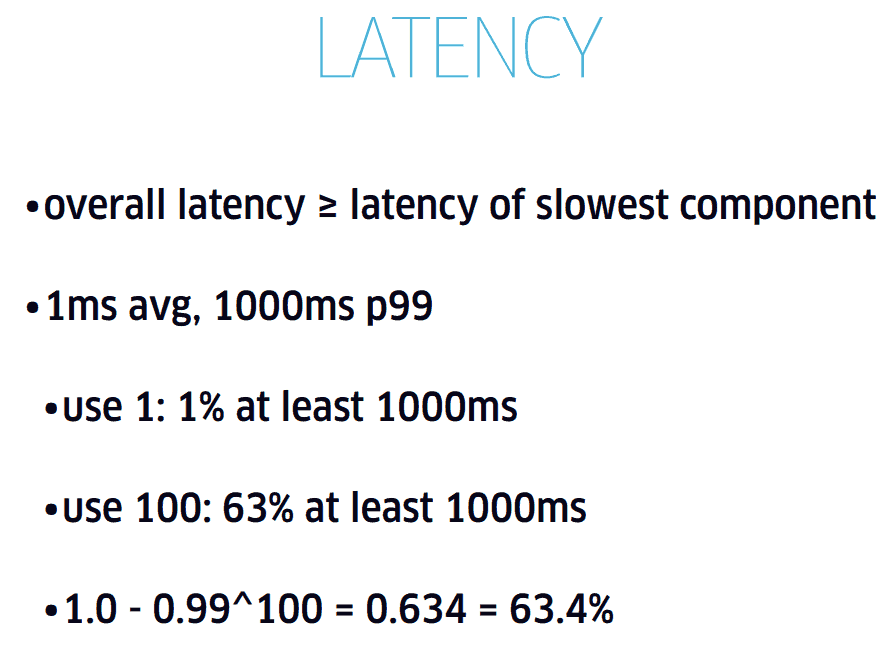

Matt then went on to talk about some of the challenges with large fanout services, and in particular, the challenge with getting predictable latency when a large number of services are involved.

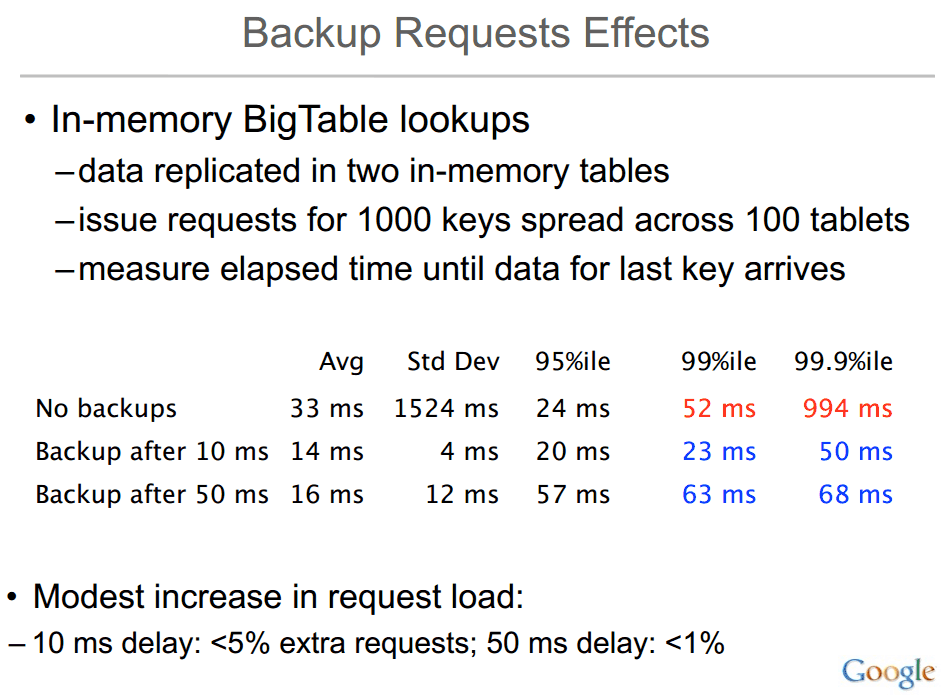

He also referenced Google fellow Jeff Dean’s paper Achieving Rapid Response Times in Large Online Services, which is a great read. Slides 39-70 describe the approach Uber has adopted.

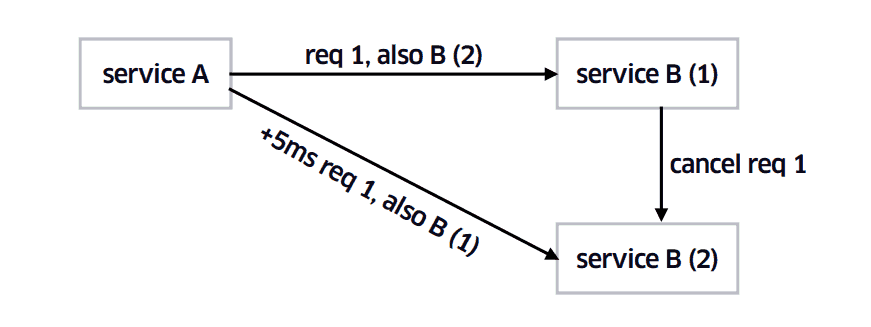

In the example above, the following happened:

- service A sends req 1 to service B (1), informing it that the request will also be sent to service B (2)

- 5ms later, service A indeed sends the same request to service B (2), which goes into its backlog, service B (2) also finds out that service B (1) also got the same request

- meanwhile, service B (1) starts to process the request, sends a signal to service B (2) to cancel req 1 from its backlog

- service B (1) completes the request and replies to service A

If service B (1) was under load and couldn’t process the request fast enough then service B (2) would have processed the request and replied to service A, unless of course service B (2) is also under load.
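The sequence above (a backup request with cross-server cancellation) can be sketched as a small, single-threaded simulation. It only models the bookkeeping, not the 5ms timing or the network, and the `Replica` class and its methods are hypothetical.

```python
from collections import deque


class Replica:
    def __init__(self, name):
        self.name = name
        self.backlog = deque()  # queued (request_id, peer) pairs
        self.cancelled = set()  # request IDs a peer has already started on
        self.processed = []

    def enqueue(self, request_id, peer=None):
        """Accept a request, noting which peer replica holds the same request."""
        self.backlog.append((request_id, peer))

    def cancel(self, request_id):
        self.cancelled.add(request_id)

    def step(self):
        """Process the next request that has not been cancelled; return its ID or None."""
        while self.backlog:
            request_id, peer = self.backlog.popleft()
            if request_id in self.cancelled:
                continue  # the peer got to it first, so skip it
            if peer is not None:
                peer.cancel(request_id)  # tell the peer to drop its copy
            self.processed.append(request_id)
            return request_id
        return None


b1, b2 = Replica("B1"), Replica("B2")
b1.enqueue("req-1", peer=b2)  # A sends req 1 to B (1), naming B (2) as the backup
b2.enqueue("req-1", peer=b1)  # a little later, A sends the same request to B (2)
first = b1.step()             # B (1) starts on req 1 and cancels it on B (2)
second = b2.step()            # B (2) finds nothing left to do
```

If B (1) had other work queued ahead of the request, B (2) would reach it first and cancel the copy on B (1) instead, so the request is processed once either way.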

In case you’re worried about the extra requests that would need to be processed with this approach, Jeff Dean’s paper (above) has the following results to show:

A more naive approach would be to always send the request to both service B (1) and service B (2) and just ignore the slower response. Based on a previous talk I watched, this is (or at least was) what Netflix does.

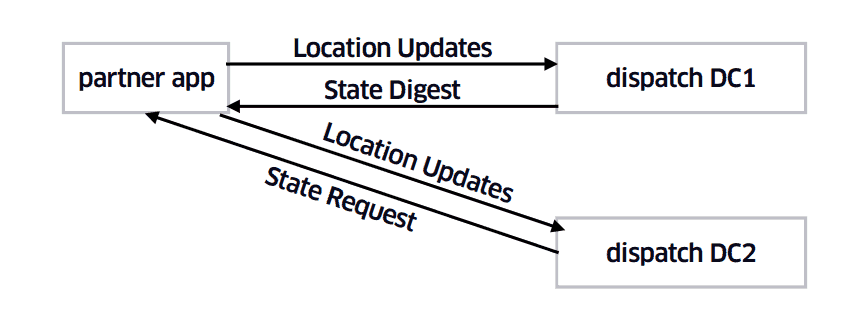

Finally, Matt touched on how Uber deals with datacentre outages. Their approach is quite simple and effective:

In this example, when the mobile app sends a location update, the service will respond with an encrypted state digest. When datacentre 1 fails:

- app will send the location updates to datacentre 2 instead

- since datacentre 2 doesn’t have the user’s state, it requests the last state digest the app has received

- the app then sends the encrypted state digest to datacentre 2

- datacentre 2 decrypts the digest and initializes the user state

- now the app can converse with datacentre 2 normally
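The failover steps above can be sketched roughly as below. One important substitution: Python's standard library has no symmetric encryption, so this sketch signs the digest with an HMAC instead of encrypting it. That protects against tampering but does not hide the contents, so it is only a stand-in for what Matt described. The shared key, the `Datacentre` class and the message shapes are all hypothetical.

```python
import base64
import hashlib
import hmac
import json

SHARED_KEY = b"demo-key-known-to-every-datacentre"  # hypothetical shared secret


def make_digest(state):
    """Serialize the state and prepend an HMAC tag (signed, NOT encrypted)."""
    payload = json.dumps(state, sort_keys=True).encode()
    tag = hmac.new(SHARED_KEY, payload, hashlib.sha256).digest()
    return base64.urlsafe_b64encode(tag + payload).decode()


def read_digest(digest):
    """Verify the tag and return the state, rejecting anything tampered with."""
    raw = base64.urlsafe_b64decode(digest.encode())
    tag, payload = raw[:32], raw[32:]
    expected = hmac.new(SHARED_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("digest failed verification")
    return json.loads(payload)


class Datacentre:
    def __init__(self, name):
        self.name = name
        self.state = {}  # user -> state held in this datacentre only

    def start(self, user, state):
        self.state[user] = dict(state)
        return {"digest": make_digest(self.state[user])}

    def location_update(self, user, location, digest=None):
        if user not in self.state:
            if digest is None:
                return {"need_digest": True}  # ask the app for its last digest
            self.state[user] = read_digest(digest)
        self.state[user]["location"] = location
        return {"digest": make_digest(self.state[user])}
```

The app simply keeps the most recent digest it received and replays it when a datacentre it has not spoken to before asks for one.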

Links

SWIM : Scalable Weakly-consistent Infection-style process group Membership protocol

Jeff Dean – Achieving rapid response times in large online services
