Yan Cui

I help clients go faster for less using serverless technologies.

Bryan Hunter has been responsible for organising the FP track at NDC conferences, as well as a few others, and the quality of these tracks has been consistently good.

The FP track at this year’s NDC Oslo was exceptional, and every talk had a full house. And this year Bryan actually kicked off the conference with a talk on how functional programming can help you be more ‘lean’.

History of Lean

Bryan started by talking about the history of lean and, whilst most people (myself included) thought the lean principles were created at Toyota, it turns out they actually originated in the US.

At a time when the US workforce was diminished because all the men were sent to war, a group called the TWI (Training Within Industry) was formed to find a way to bring women into the workforce for the first time, and train them.

The process of continuous improvement that the TWI created was a stunning success: before the war the US was producing around 3,000 planes per year, and by the end of the war it had produced around 300,000 planes in total!

Unfortunately, this history of lean was mostly lost when the men came back from the war: the factories went back to how they had worked before, and jobs were prioritized over efficiency.

Whilst the knowledge from this amazing period of learning was lost in the US, remnants of the TWI were sent to Germany and Japan to help them rebuild, and this was how the basic foundations of the lean principles were passed on to Toyota.

sidebar: one thing I find interesting is that the process of continuous improvement that lean introduces is actually very similar to how deep learning algorithms work or, as this tweet by Evelina suggests, how our brain works.

“Backpropagation is how the brain actually works.” Geoff Hinton on #deeplearning pic.twitter.com/YiS6rG9xYU

— Evelina Gabasova (@evelgab) June 25, 2015

Bryan then outlined the 4 key points of lean:

- Long-term philosophy: you need to have a sense of purpose that supersedes short-term goals and economic conditions. This is the foundation of lean, and without it you’re never stable.

- The right process will produce the right results: if you aren’t getting the right results, then you’ve got the wrong process and you need to continuously improve that process.

- Respect, challenge, and develop your people, and they will become a force multiplier.

- Continuously solving root problems drives organizational learning.

Companies adopt lean because it’s a proven path to improving delivery times, reducing costs and improving quality.

The way these improvements happen is by eliminating waste first, then eliminating over-burden and inconsistency.

Lean Thinking

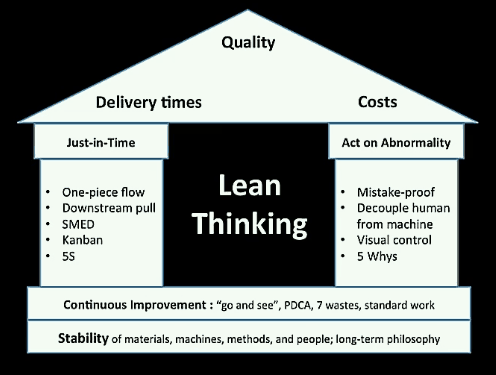

The so-called lean house is built on top of the stability of having a long-term philosophy and the process of continuous improvement (or Kaizen, which translates to “change for the better” in Japanese and is written with the same characters in Chinese).

Then through the two pillars of Just-In-Time and Act on Abnormality we arrive at our goal of improved Delivery Times, Quality and reduced Costs.

A powerful tool to help you improve is “go and see”, where you go and sit with your users and see them use your system. Not only do you see how they are actually using your system, but you also get to know them as humans and develop mutual empathy and respect, which leads to better communication.

Another thing to keep in mind is that you can’t adopt lean by just adopting a new technical solution. Often when you adopt a new technical solution you just change things without improving them, and you end up with continuous change instead of continuous improvement.

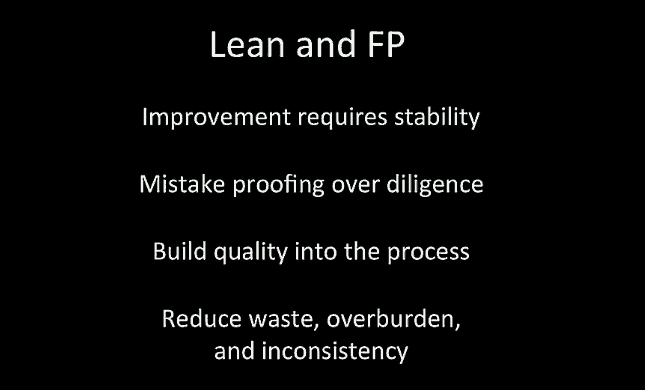

Functional programming fits nicely here because it holds up under the scrutiny of Plan-Do-Check-Act (PDCA). Instead of making a series of changes, you really have to build on the idea of standard work.

Without the standard, you end up with a shotgun map where things change and improvements might come about (if you change enough times then it’s bound to happen some time, right?), but not as the result of a formalised process, so they are unpredictable.

Seven Wastes

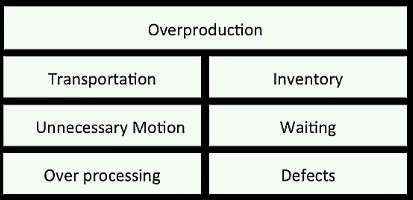

Then there are the Seven Wastes.

Overproduction is the most important waste as it encompasses all the wastes underneath it. In software, if you are building features that aren’t used then you have overproduced.

sidebar : for regular readers of this blog, you might remember Melissa Perri describing this tendency to build features without first verifying the need for them as The Build Trap in her talk at QCon London. Dan North also talked about the issue of overproduction through a fixation on building features in his talk at CraftConf – Beyond Features.

By doing things that aren’t delivering value you cause other waste to occur.

Transportation, in software terms, can be thought of as the cost to go from requirement, through to deployment and into production. DevOps, Continuous Integration and Continuous Deployment are very much in line with the spirit of lean here as they all aim to reduce the waste (both cognitive and time) associated with transportation.

Inventory can be thought of as all the things that are in progress and haven’t been deployed. In the waterfall model, where projects go on for months without anything ever being deployed, all of that work becomes inventory waste if the project is killed. The same can happen with Scrum, where you build up inventory during the two-week sprint, which, as Dan North mentioned in his Beyond Features talk, is just long enough for you to experience all the pain points of waterfall.

You experience Unnecessary Motion whenever you are firefighting or doing things that should be automated. This waste equates to the wear-and-tear on your people, and can cause your people to burn out.

Waiting is self-explanatory, and can often result from deficiencies in your organization’s communication and work scheduling (requirements taking too long to arrive whilst the assigned workers sit on their hands).

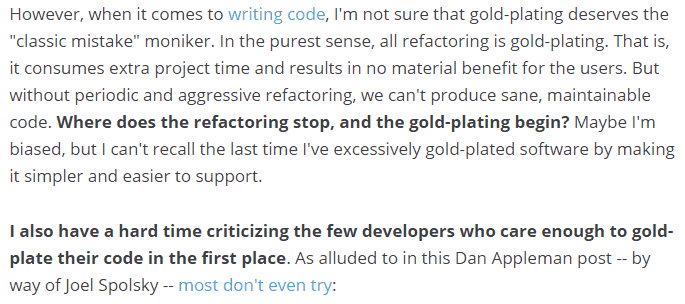

Over processing is equivalent to the idea of gold plating in software. Although, as Jeff Atwood pointed out in his post, refactoring can be thought of as gold plating in the purest sense, it’s also important for producing sane, maintainable code.

Lastly, we have Defects. It’s exponentially cheaper to catch bugs at compile time than it is in production. This is why the notion of Type-Driven Development is so important, where the goal is to make invalid states unrepresentable (as Scott Wlaschin likes to say!).

But you can still end up with defects related to performance, which is why I was really excited to hear about the work Greg Young has done with PrivateEye (which he publicly announced at NDC Oslo).

Just-In-Time

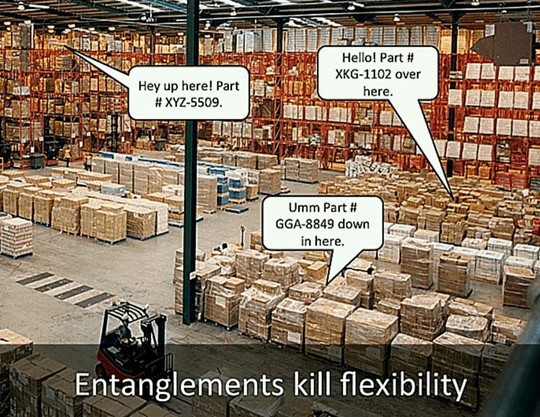

Bryan used the workings of a (somewhat dysfunctional) warehouse to illustrate the problem of a large codebase where you have lots of unnecessary code you have to go through to get to the problems you’re trying to solve.

sidebar : whilst Bryan didn’t call it out by name, this is an example of cross-cutting concerns, which Aspect-Oriented Programming aims to address and which I have written about regularly.

One way to visualize this problem is through Value Stream Mapping.

In this diagram the batch processes are where value is created, but we can see that there’s a large amount of lead time (14 days) compared to the amount of processing time (585 seconds), so there is a lot of waste here.
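To put those numbers in perspective, here’s a back-of-the-envelope calculation of what’s often called process cycle efficiency (value-added time divided by total lead time). This is a rough sketch rather than something from the talk, and it treats the 14 days as the total lead time:

```python
# Process cycle efficiency = value-added (processing) time / total lead time.
# Assumption: the 14 days on the value stream map is the total lead time.
SECONDS_PER_DAY = 24 * 60 * 60


def process_cycle_efficiency(processing_seconds: float, lead_time_days: float) -> float:
    """Share of the total lead time during which value is actually being added."""
    return processing_seconds / (lead_time_days * SECONDS_PER_DAY)


efficiency = process_cycle_efficiency(processing_seconds=585, lead_time_days=14)
print(f"{efficiency:.4%}")  # 0.0484%
```

In other words, value is being added for less than 0.05% of the time that work spends in the system.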

As one process finishes its work the material is pushed aside until the next process is ready, which is where you incur lead time between processes.

This is the push model in mass manufacturing.

In software, you can relate this to how the waterfall model works. All the requirements are gathered at once (a batch process); then implementations are done (another batch); then testing, and so on.

In between each batch process, you’re creating waste.

The solution to this is the flow model, or one-piece flow. It focuses on completing the production of one piece from start to finish with as little work-in-process inventory between operations as possible.

In this model you also have the opportunity to catch defects early: by exercising the process from start to end right away, you can identify problems in the process sooner, and also use the experience to refine and improve the process as you go.
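A toy model helps show why. The sketch below assumes a process of identical stages that each take the same time per item, with no setup or transport costs (a deliberate simplification, not something from the talk), and compares when items come out of the end of the process under batch-and-push versus one-piece flow:

```python
def flow_finish_times(items: int, stages: int, step_minutes: float) -> list[float]:
    """One-piece flow: an item moves on as soon as it's done and the next stage is free.

    Returns the time at which each item leaves the last stage.
    """
    stage_free_at = [0.0] * stages  # when each stage finishes its current item
    finished = []
    for _ in range(items):
        t = 0.0  # every item is available from time 0
        for s in range(stages):
            t = max(t, stage_free_at[s]) + step_minutes
            stage_free_at[s] = t
        finished.append(t)
    return finished


def batch_finish_times(items: int, stages: int, step_minutes: float) -> list[float]:
    """Batch-and-push: a stage only hands work on once it has processed the whole batch."""
    batch_ready_at = 0.0
    finished: list[float] = []
    for _ in range(stages):
        finished = [batch_ready_at + (i + 1) * step_minutes for i in range(items)]
        batch_ready_at = finished[-1]
    return finished


flow = flow_finish_times(items=10, stages=4, step_minutes=1)
batch = batch_finish_times(items=10, stages=4, step_minutes=1)
print(f"first item: flow={flow[0]}, batch={batch[0]}")   # first item: flow=4.0, batch=31.0
print(f"last item: flow={flow[-1]}, batch={batch[-1]}")  # last item: flow=13.0, batch=40.0
```

With 10 items going through 4 one-minute stages, the first finished item appears after 4 minutes with one-piece flow versus 31 minutes with batch-and-push, and the whole lot is done in 13 minutes rather than 40. The amount of work is identical in both cases; the difference is time spent waiting as inventory.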

You also deliver value (a completed item) to downstream as early as possible. For example, if your users are impacted by a bug, rather than having them wait for the fix in the next release cycle along with other things (the batch model), you can deliver just the fix right away.

Again, this reminds me of something that Dan North said in his Beyond Features talk:

“Lead time to someone saying thank you is the only reputation metric that matters.”

– Dan North

And finally, you can easily parallelise this one-piece flow by having multiple people work on different things at the same time.

Bryan then talked about the idea of Single-minute Exchange of Die (SMED) – which is to say that we need an efficient way to convert a manufacturing process from running the current product to running the new product.

This also applies to software, where we need to stay away from the batch model (with its long lead times between deliveries of value), do as much as is necessary for downstream, and then switch to something else.

sidebar : I feel this is also related to what Greg Young talked about in his The Art of Destroying Software talk where he pushed for writing software components that can be entirely rewritten (i.e. exchanged) in less than a week. It is also the best answer to “how big should my microservice be?” that I have heard.

You should flow when you can, and pull when you must, and the idea of pull is:

“produce what you need, only as much as you need, when you need”

– Taiichi Ohno

When you do this, you’re forced to see the underlying problems.

With a two-week sprint, there is enough buffer to mask any underlying issues in your process. For instance, if you have to spend 20 minutes fighting with some TeamCity configuration issue, that inefficiency is masked by you working just a bit harder. However, the problem isn’t fixed for anyone else, and it becomes a recurring cost that your organization has to pay.

In the pull model, where downstream is waiting on you, you’re forced to see these underlying problems and solve them.

There’s a widespread misconception that kanban equals lean, but kanban is just one tool to get there, and there are others available. And interestingly, Taiichi Ohno actually got the idea for kanban from the American supermarket chain Piggly Wiggly, based on the way they stocked sodas and soup.
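Whatever tool you use, the core mechanic of pull is a work-in-progress (WIP) limit that stops upstream from piling work onto downstream. Here’s a minimal sketch of that idea; the `KanbanColumn` class and the column names are hypothetical, not taken from any particular kanban tool:

```python
from collections import deque


class KanbanColumn:
    """A board column with a hard work-in-progress (WIP) limit."""

    def __init__(self, name: str, wip_limit: float):
        self.name = name
        self.wip_limit = wip_limit
        self.items: deque[str] = deque()

    def has_capacity(self) -> bool:
        return len(self.items) < self.wip_limit

    def pull_from(self, upstream: "KanbanColumn") -> bool:
        """Take the oldest upstream item, but only if this column has room for it.

        Returns False when the pull is refused, which is the signal to go and fix
        whatever is holding work up rather than piling on more.
        """
        if not self.has_capacity() or not upstream.items:
            return False
        self.items.append(upstream.items.popleft())
        return True


backlog = KanbanColumn("backlog", wip_limit=float("inf"))
backlog.items.extend(["A", "B", "C", "D"])
in_progress = KanbanColumn("in progress", wip_limit=2)

print([in_progress.pull_from(backlog) for _ in range(3)])  # [True, True, False]
print(list(in_progress.items), list(backlog.items))        # ['A', 'B'] ['C', 'D']
```

The refused third pull is the point: instead of the extra work quietly turning into inventory, the blockage becomes visible and someone has to deal with it.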

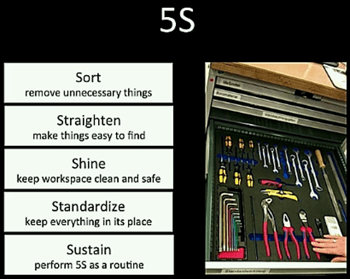

Another tool that is similar to kanban is 5S.

Act on Abnormality

A key component here is decoupling humans from machines: you shouldn’t require humans to watch machines do their job and babysit them.

Another tool here is mistake-proofing, or poka yoke: if a mistake can happen, we try to minimize its impact.

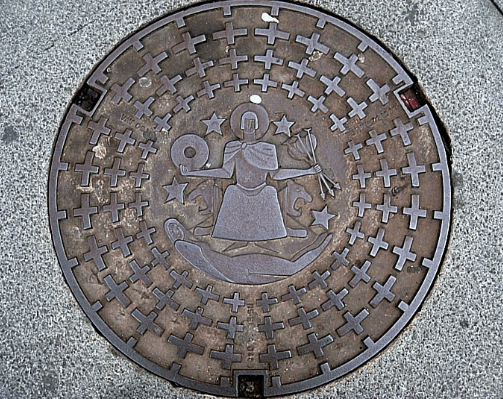

A good example is the design of the manhole cover, which is round so that it can’t fall down the hole no matter which way you turn it.

Another example is to have visual controls that are very obvious and obnoxious so that you don’t let problems hide.

I’m really glad to hear Bryan say:

“you shouldn’t require constant diligence, if you require constant diligence you’re setting everyone up for failure and hurt.”

– Bryan Hunter

which is something that I have been preaching to others in my company for some time.

An example of this is the management of art assets in our MMORPG Here Be Monsters. There was a set of rules (naming conventions, folder structures, etc.) that the artists had to follow for things to work, and when they made a mistake we got subtle asset-related bugs such as:

- invisible characters where you can only see their shadow

- a character appearing without a shirt/pants

- trees mysteriously disappearing when transitioning into a state that’s missing an asset

- …

So we created a poka yoke for this in the form of some tools that decouple the artists from these arbitrary rules. To give you a flavour of what the tools did, here are some screenshots from an internal presentation we did on this:

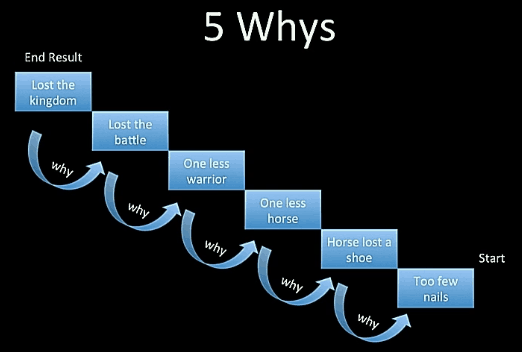
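The sketch below is not that tool; it’s a hypothetical illustration of the same idea, and the folder layout, state names and functions are all made up. Instead of asking artists to remember the naming rules, paths are derived from structured input, and a character that’s missing a required state is flagged before it ever reaches the game:

```python
# Hypothetical conventions, made up for illustration only.
REQUIRED_STATES = ("idle", "walk", "attack")


def asset_path(character: str, state: str, layer: str) -> str:
    """Derive the path from structured input instead of relying on people to remember the rules."""
    if state not in REQUIRED_STATES:
        raise ValueError(f"unknown state {state!r}, expected one of {REQUIRED_STATES}")
    return f"characters/{character.strip().lower()}/{state}/{layer.strip().lower()}.png"


def missing_states(provided_states: set[str]) -> list[str]:
    """Run before export, so a missing state is caught at the artist's desk rather than in game."""
    return [state for state in REQUIRED_STATES if state not in provided_states]


print(asset_path("Goblin", "walk", "Body"))  # characters/goblin/walk/body.png
print(missing_states({"idle", "walk"}))      # ['attack']
```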

When you do have a problem, there’s a process called the 5 Whys which helps you identify the root cause.

Functional Programming

The rest of the talk was about how functional programming helps you mistake-proof your code in ways that you can’t with imperative programming.

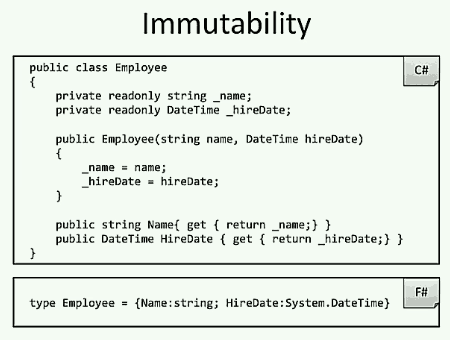

Immutability

A key difference between FP and imperative programming is that imperative programming relies on mutating state, whereas FP is all about transformations.

This quote from Joe Armstrong (one of the creators of Erlang) sums up the problem of mutation very clearly:

“the problem with OO is that you ask OO for a banana, and instead you get a Gorilla holding the banana and the whole jungle.”

– Joe Armstrong

With mutations, you always have to be diligent to not cause some adverse effect that impacts other parts of the code when you call a method. And remember, when you require constant diligence you’re setting everyone up for failure and hurt.
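Here’s a small illustration in Python, which, like C#, makes objects mutable by default. The cart example and its names are made up. The first half shows a change made through one reference surprising another; the second half uses a frozen dataclass so that “changing” a cart means transforming it into a new one:

```python
from dataclasses import FrozenInstanceError, dataclass, replace


class MutableCart:
    def __init__(self) -> None:
        self.items: list[str] = []


def add_gift_wrap(cart: MutableCart) -> None:
    cart.items.append("gift wrap")  # mutates an object other code may also be holding


@dataclass(frozen=True)
class Cart:
    items: tuple[str, ...] = ()  # a tuple, because frozen=True is only shallow


def with_item(cart: Cart, item: str) -> Cart:
    """A transformation: returns a new Cart and leaves the original untouched."""
    return replace(cart, items=cart.items + (item,))


# Mutation: a second reference sees the change without asking for it.
shared = MutableCart()
shared.items.append("book")
checkout_view = shared
add_gift_wrap(shared)
print(checkout_view.items)  # ['book', 'gift wrap']

# Transformation: the original value is still exactly what it was.
original = Cart(("book",))
updated = with_item(original, "gift wrap")
print(original.items, updated.items)  # ('book',) ('book', 'gift wrap')

try:
    original.items = ()  # type: ignore[misc]
except FrozenInstanceError:
    print("a frozen Cart can't be changed in place")
```

Note that `frozen=True` only stops fields from being reassigned, which is why the items are kept in a tuple rather than a list; that’s exactly the kind of extra diligence you need when immutability isn’t the default.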

sidebar : Venkat Subramaniam gave two pretty good talks at NDC Oslo, on the power and practicality of immutability and on things we can learn from Haskell. Both are relevant here and worth checking out if you’re still not sold on FP!

In C#, making a class immutable requires diligence because you’re going up against the defaults in the language.

sidebar : as Scott Wlaschin has discussed at length in this post, no matter how many FP features C# gets there will always be an unbridgeable gap because of the behaviour the language encourages with its default settings.

This is not a criticism of C# or imperative programming.

Programming is an incredibly wide spectrum and imperative programming has its place, especially in performance critical settings.

What we should do, however, is shy away from using C# for everything. The same is true for any other language – stop looking for the one language that rules them all.

Explore, learn, unlearn: there are lots of interesting languages and ideas waiting for you to discover!

How vs What

Another important difference between functional and imperative programming is that FP is focused on what you want to do whereas imperative forces you to think about how you want to do it.
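As a rough illustration (the order data and function names are made up), both functions below compute the total of orders above a threshold. The first spells out how to do it, with an index, a loop condition and an accumulator to manage; the second simply states what the result is and leaves the iteration to the language:

```python
orders = [("alice", 120.0), ("bob", 35.5), ("carol", 80.0), ("dave", 12.0)]


def total_large_orders_how(orders: list[tuple[str, float]], threshold: float) -> float:
    # HOW: we manage the index, the loop condition and the accumulator ourselves.
    total = 0.0
    i = 0
    while i < len(orders):
        _, amount = orders[i]
        if amount >= threshold:
            total += amount
        i += 1
    return total


def total_large_orders_what(orders: list[tuple[str, float]], threshold: float) -> float:
    # WHAT: the sum of the amounts that meet the threshold; iteration is the language's job.
    return sum(amount for _, amount in orders if amount >= threshold)


print(total_large_orders_how(orders, 50), total_large_orders_what(orders, 50))  # 200.0 200.0
```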

“Functional programming moves you to a higher level of abstraction, away from the plumbing” – @neal4d #ndcoslo

— Yan Cui (@theburningmonk) June 18, 2015

Imperative programming forces you to understand how the machine works, which brings us back to the human-machine lock-in we talked about earlier. Remember, you want to decouple humans from machines, and allow humans to focus on understanding and solving the problem domain (which they’re good at) and leave the machine to work out how to execute their solution.

Some historical context is useful here: back in the days when computers were slow and buggy, the hardware was the bottleneck. So it paid to have developers tell the computer how to perform our computations, because we knew better.

Nowadays, software is the thing that is slow and buggy, while both compilers and CPUs are capable of doing much more optimization than most of us can ever dream of.

Whilst there are still legitimate cases for choosing imperative programming in performance-critical scenarios, it doesn’t necessarily mean that developers need to write imperative code themselves. Libraries such as Streams are a good example of how you can let developers write functional-style code that is translated to imperative code under the hood. In fact, the F# compiler does this in many places, e.g. compiling your functional code into imperative while/for loops.

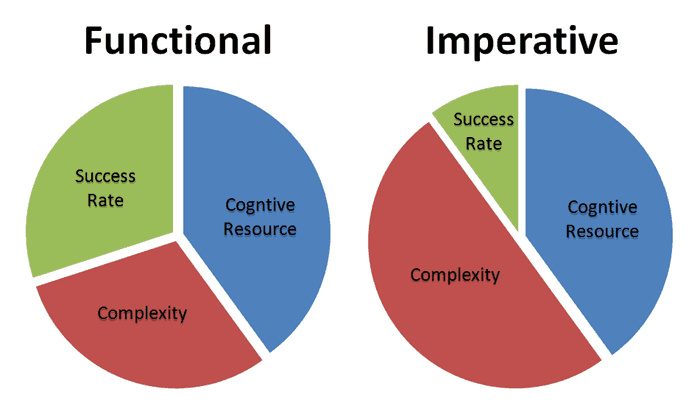

Another way to look at this debate is that, by making your developers deal with the HOW as well as the WHAT (just because you’re thinking about the how doesn’t mean that you don’t have to think about the what), you have increased the complexity of the task they have to perform.

Given that the cognitive resources they have to perform the task are constant, you have effectively reduced the likelihood that your developers will deliver a piece of working software that does the right thing. And a piece of software that does the wrong thing faster is probably not what you are after…

p.s. don’t take this diagram literally; it’s merely intended to illustrate the relationship between the three things here: cognitive resources, complexity of the problem and the chance of delivering a correct solution. And before you argue that you can solve this problem by just adding more people (i.e. cognitive resources) into the equation, remember that the effect of adding a new member to the group is not linear, especially when the developers in question do not offer sufficiently different perspectives or viewpoints.

Null References

And no conversation about imperative programming is complete without talking about null references, the invention that Sir Tony Hoare considers his billion dollar mistake.

sidebar : BTW, Sir Tony Hoare is speaking at the CodeMesh conference in London in November, along with other industry greats such as John Hughes, Robert Virding, Joe Armstrong and Don Syme.

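In functional languages such as F#, the types you define can’t be null by default (nulls mostly creep in at the boundaries, when interoperating with other .NET code).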

The absence of a value is explicitly represented by the Option type which eliminates the no. 1 invalid state that you have to deal with in your application. It also allows the type system to inform you exactly where values might be absent and therefore require special handling.

And to wrap things up…

Links

- All the FP talks at NDC Oslo

- Skillscast – WTF is Deep Learning?

- Melissa Perri – The Bad Idea Terminator

- Dan North – Beyond Features

- Coding Horror – Gold Plating

- Coding Horror – The Ultimate Software Gold Plating

- Edwin Brady – Type-driven development in Idris

- Scott Wlaschin – Domain modelling with F#

- Greg Young – PrivateEye

- Slideshare – Introduction to Aspect Oriented Programming

- PostSharp – Gamesys case study

- Greg Young – The art of destroying software

- Scott Wlaschin – Is your programming language unreasonable?

- Has C# peaked?

- Yan Cui – A Tour of the Language Landscape

- Tony Hoare – Null References: The Billion Dollar Mistake

