Yan Cui


One of the great things about CraftConf is the plethora of big name tech speakers that the organizers have managed to attract.

Michael Feathers is definitely one of the names that made me excited to go to CraftConf.

Know your commits

We have a bias towards the current state of our code, but we have a lot more information than that. Along the history of its commits a piece of code can vary greatly in size and complexity.

To help find the places where methods jump the shark, Michael created a tool called Method Shark, which is available on GitHub. What he found is that refactoring habits are cultural: some places just don’t refactor much, whilst others wait until a method hits some threshold.

We’re at an interesting stage in our industry where we’re doing lots of Big Data analysis on customer behaviour and usage trends, but we’re not doing anywhere near enough for ourselves by analysing our commit history.

Those of you who read this blog regularly will know that I’m a big fan of Adam Tornhill’s work in this area. It was great to see Michael give a shout out to Adam’s book at the end of the talk, which is available on Amazon.

Richard Gabriel noted that biologists have a different notion of modularity to us:

“Biologists think of a module as representing a common origin more so than what we think of as modularity, which they call spatial compartmentalization”

– Richard Gabriel (Design Beyond Human Abilities)

So when biologists talk about modularity, they’re concerned about evolution and ancestry and not just the current state of things.

By recording and analysing interesting events about changes in our commits – event type (add/update), method body, name, etc. – we too are able to gain deeper insight into the evolution of our codebase.
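As a rough illustration of what such an event record could look like, here is a minimal Python sketch. The `MethodEvent` class, its field names and the `history_of` helper are all hypothetical; they are not taken from the talk or from Method Shark.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class MethodEvent:
    """One change to one method in one commit (hypothetical schema)."""
    commit: str      # commit hash
    when: datetime   # commit timestamp
    kind: str        # event type: "add" or "update"
    name: str        # method name, e.g. "Foo#bar"
    body: str        # method source after the change

    @property
    def size(self) -> int:
        """Method length in non-blank lines."""
        return sum(1 for line in self.body.splitlines() if line.strip())


def history_of(events, name):
    """All recorded events for one method, oldest first."""
    return sorted((e for e in events if e.name == name), key=lambda e: e.when)
```

With a list of these events in hand, most of the analyses below reduce to grouping and counting.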

Commit history analysis

Michael demonstrated a number of analyses which you might take inspiration from and apply to your codebase.

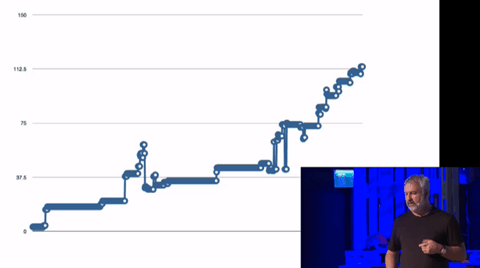

Method Lifeline

This maps the size of a particular method in lines over its lifetime.

From this picture you can see that this particular method has been growing steadily in size so it’s a good candidate for refactoring.
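If you don’t have a plotting tool to hand, a lifeline can be approximated in a few lines of Python. This is only a sketch: `lifeline` and `render` are hypothetical names, and it assumes you have already extracted the successive versions of the method body from your history.

```python
def lifeline(bodies):
    """Size in lines of each successive version of a method, oldest first."""
    return [len(body.splitlines()) for body in bodies]


def render(sizes, width=40):
    """Crude text chart: one bar per version, scaled so the peak fills `width`."""
    peak = max(sizes, default=0) or 1
    return "\n".join(f"{s:4d} " + "#" * round(s * width / peak) for s in sizes)
```

Calling `print(render(lifeline(versions)))` gives one bar per version, so steady growth shows up as a staircase.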

Method Ascending

You can find methods that have increased in size in consecutive commits to identify methods that are good candidates for refactoring.

It might be that the way to refactor the method is not obvious, so the decision to refactor is delayed and that indecision compounds. The result is that the method keeps growing in size over time.

You can also apply method ascending to a whole codebase. For instance, for the Rails project as of 2014 you might find:

[1827, 765, 88, 13, 2, 1]

i.e. 765 methods have been growing in size in the last 2 consecutive commits, 88 methods have been growing in size in the last 3 consecutive commits, and so on.
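A list like the one above could be computed along these lines. This is a sketch under assumptions: each method’s history is given as a list of sizes (oldest first, one entry per commit that touched it), and position k in the result counts methods that grew in at least their last k commits. Whether Michael’s tool counts it exactly this way isn’t stated in the talk, and the function names are made up.

```python
def trailing_growth(sizes):
    """Number of consecutive size increases at the end of a method's history."""
    run = 0
    for prev, cur in zip(reversed(sizes[:-1]), reversed(sizes[1:])):
        if cur > prev:
            run += 1
        else:
            break
    return run


def ascending_histogram(size_histories):
    """size_histories: {method_name: [size, size, ...]}.

    Returns a list whose k-th entry (1-based) is the number of methods
    that grew in each of their last k commits.
    """
    runs = [trailing_growth(sizes) for sizes in size_histories.values()]
    longest = max(runs, default=0)
    return [sum(1 for r in runs if r >= k) for k in range(1, longest + 1)]
```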

Trending Methods

You can use this analysis to find the most changed methods in, say, the last month.
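A minimal sketch of that query, assuming the changes are available as `(method_name, timestamp)` pairs; the `trending` function and its parameters are hypothetical:

```python
from collections import Counter
from datetime import timedelta


def trending(changes, now, window=timedelta(days=30), top=10):
    """Most frequently changed methods within `window` before `now`.

    changes: iterable of (method_name, datetime) pairs, one per change.
    """
    cutoff = now - window
    counts = Counter(name for name, when in changes if cutoff <= when <= now)
    return counts.most_common(top)
```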

Activity List

Things that are changed a lot are probably worth investigating too. You can identify bouts of activity on a method over a given period. For example, a method that has existed for 18 months was changed during bouts of activity over a period of 9 months.

This helps to identify methods that you keep going back to, which is a sign that they may be violations of the single responsibility and/or open-closed principles.
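One simple way to find bouts is to group a method’s change dates so that changes close together in time fall into the same bout. The sketch below assumes a 14-day gap as the cut-off, which is an arbitrary choice for illustration rather than anything from the talk.

```python
from datetime import timedelta


def bouts(dates, max_gap=timedelta(days=14)):
    """Group change dates into (start, end) bouts of activity.

    Two consecutive changes belong to the same bout when they are
    no more than `max_gap` apart.
    """
    result = []
    for d in sorted(dates):
        if result and d - result[-1][1] <= max_gap:
            result[-1] = (result[-1][0], d)   # extend the current bout
        else:
            result.append((d, d))             # start a new bout
    return result
```

A method with many separate bouts is one you keep coming back to.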

Single Responsibility Principle

If you find methods that are changed together many times, then they might represent responsibilities within the class that we haven’t identified, and therefore are good candidates to be extracted into a separate abstraction.

Open/Closed Principle

Even when you’re working with a large codebase, chances are lots of areas of code don’t change that much.

You can find a “closure date” for each class – the last time the class was modified. Don’t concentrate your refactoring efforts on areas of code that have been closed for a long time.
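Computing closure dates is straightforward once you have a list of `(class_name, date)` changes. A sketch, with hypothetical function names and a caller-supplied notion of “a long time”:

```python
def closure_dates(changes):
    """Last modification date per class.

    changes: iterable of (class_name, date) pairs, in any order.
    """
    closed = {}
    for name, when in changes:
        if name not in closed or when > closed[name]:
            closed[name] = when
    return closed


def long_closed(changes, today, min_age):
    """Classes whose closure date is at least `min_age` before `today`."""
    dates = closure_dates(changes)
    return sorted(name for name, d in dates.items() if today - d >= min_age)
```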

Temporal Correlation of Class Changes

Find classes that are changed together to find areas in the system that have temporal coupling through coincidental changes happening across different areas.

These might be results of poor abstractions, where responsibilities haven’t been well encapsulated into one class. They can be indicators of areas that might need to be refactored or re-abstracted.
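A basic version of this analysis counts how often each pair of classes appears in the same commit. The sketch below assumes each commit is represented as a list of the class names it touched; `co_changes` and `min_count` are hypothetical names.

```python
from collections import Counter
from itertools import combinations


def co_changes(commits, min_count=2):
    """Pairs of classes changed in the same commit at least `min_count` times.

    commits: iterable of collections of class names, one per commit.
    """
    pairs = Counter()
    for changed in commits:
        # sort so that (A, B) and (B, A) count as the same pair
        for pair in combinations(sorted(set(changed)), 2):
            pairs[pair] += 1
    return [(pair, n) for pair, n in pairs.most_common() if n >= min_count]
```

The same counting works at method level if you feed it the methods touched per commit instead.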

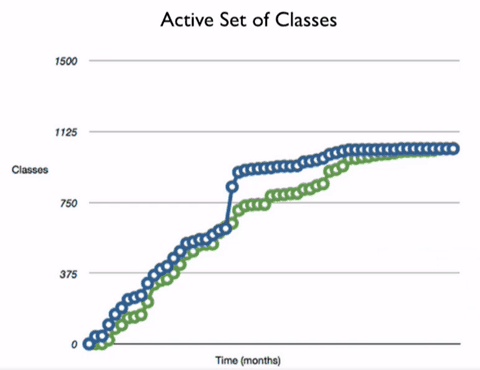

Active Set of Classes

If you plot the no. of classes at a point in time (blue) vs the no. of classes that haven’t changed again since (green):

the distance between the two lines is the number of things you’ll be touching from that point in time onwards.

In the example above, you can see a large number of classes were introduced in the middle of the graph, and gradually these classes became closed over time.

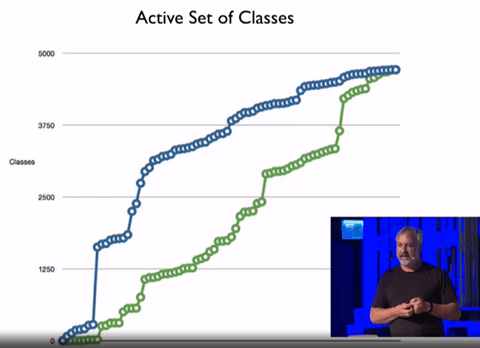

Looking at another example:

we can see that a lot of classes were introduced in two bursts of activity and it took a long time for them to become closed.
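The two lines in these graphs can be derived from the first and last change of each class. This sketch makes simplifying assumptions: deleted classes are ignored, and time can be anything comparable (dates or commit numbers). The `active_set` name is hypothetical.

```python
def active_set(lifespans, sample_points):
    """Rows of (t, total, closed, active) for each sample point t.

    lifespans: {class_name: (first_seen, last_changed)}
    total  = classes that exist at t
    closed = classes that never change again after t
    active = total - closed, i.e. the distance between the two lines
    """
    rows = []
    for t in sample_points:
        total = sum(1 for first, _ in lifespans.values() if first <= t)
        closed = sum(1 for _, last in lifespans.values() if last <= t)
        rows.append((t, total, closed, total - closed))
    return rows
```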

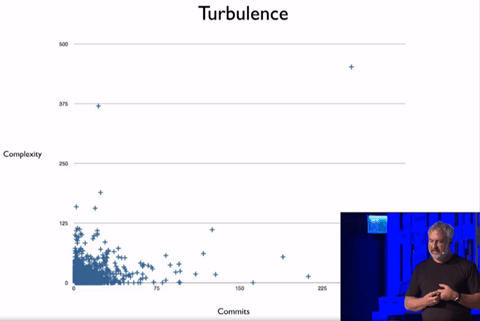

Turbulence

This is a measure of the no. of commits on a file vs its complexity.

From this graph, we can see that most of the files in this codebase have relatively few commits and are of low complexity.

Beware of things in the top-left and top-right quadrants of the graph. They are things that either:

- gained a lot of complexity without a lot of commits (did one of your hotshots decide to go to work on that file?), or;

- are complex and change a lot (is this code so complex that nobody wants to do anything about it, so the level of complexity just grows with each commit?)

Proactive refactoring can be a way to stop the complexity of these classes from getting out of control.
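To pick out the files in those two quadrants without eyeballing a graph, you could classify each file against a pair of thresholds. In this sketch the thresholds, the function names and the choice of complexity measure are all left to you; none of them come from the talk.

```python
def quadrant(commits, complexity, commit_cut, complexity_cut):
    """Name the quadrant of a file on a commits (x) vs complexity (y) plot."""
    vertical = "top" if complexity > complexity_cut else "bottom"
    horizontal = "right" if commits > commit_cut else "left"
    return f"{vertical}-{horizontal}"


def worth_a_look(files, commit_cut, complexity_cut):
    """Files in the top-left or top-right quadrants.

    files: {path: (number_of_commits, complexity)}
    """
    flagged = {}
    for path, (commits, complexity) in files.items():
        q = quadrant(commits, complexity, commit_cut, complexity_cut)
        if q.startswith("top"):
            flagged[path] = q
    return flagged
```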

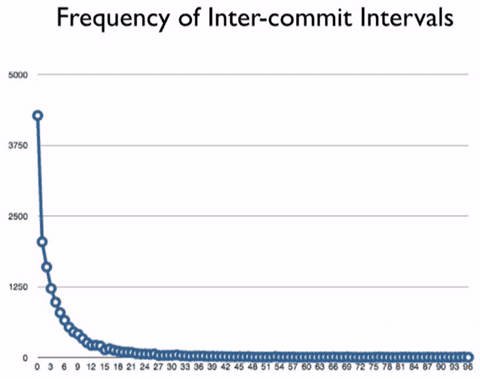

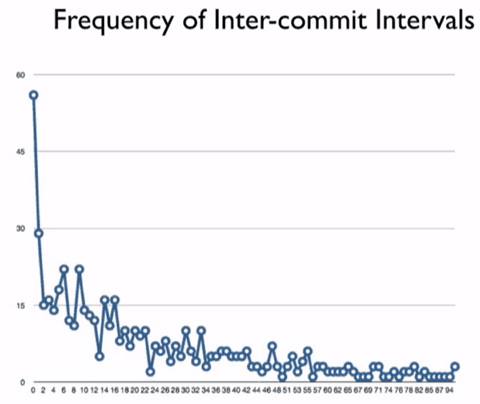

Frequency of Inter-Commit Intervals

You can also discover the working style of your developers by looking at the frequency of inter-commit intervals.
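As a sketch of how such a frequency table might be built, the function below buckets the gaps between consecutive commit timestamps (for one developer, say) into fixed-width bins. The 30-minute bucket size and the `interval_histogram` name are arbitrary choices for illustration.

```python
from collections import Counter


def interval_histogram(timestamps, bucket_minutes=30):
    """Count inter-commit intervals per bucket.

    timestamps: iterable of datetime objects, in any order.
    Returns {bucket_start_in_minutes: count}, sorted by bucket.
    """
    ordered = sorted(timestamps)
    hist = Counter()
    for prev, cur in zip(ordered, ordered[1:]):
        minutes = (cur - prev).total_seconds() / 60
        hist[int(minutes // bucket_minutes) * bucket_minutes] += 1
    return dict(sorted(hist.items()))
```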

Churn

Churn is the number of times things change. Take the churn graph for the ruby-gems repository, where:

- every point on the x-axis is a file;

- and the y-axis is the number of times it has changed in the history of the codebase

When we think about the single responsibility principle and the open-closed principle, we think about classes that are well abstracted and don’t have to change frequently.

But every codebase Michael looked at has a similar graph to the above, where there are areas of code that change very frequently and areas that hardly change at all.
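You can get the raw numbers for a graph like this from git itself. The sketch below assumes you have captured the output of `git log --name-only --pretty=format:` as text, which lists the files touched by each commit with blank lines in between; the `churn` function name is hypothetical.

```python
from collections import Counter


def churn(name_only_log):
    """Number of commits touching each file, most changed first.

    name_only_log: text output of `git log --name-only --pretty=format:`
    """
    counts = Counter(
        line.strip() for line in name_only_log.splitlines() if line.strip()
    )
    return counts.most_common()
```

Note that this treats a renamed file as two different files, so the counts are only a first approximation.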

Michael then referenced Susanne Siverland’s work on “Where do you save most money on refactor?”. I couldn’t find the paper afterwards, but Michael summarised her finding as – “in general, churn is a great indicator, when you compare it with other things such as complexity, in terms of the payoff you get from refactoring, and in terms of reducing defects.”

In hindsight this is pretty obvious – bugs are there because we put them there, and if we keep going back to the same areas of code then it probably means they’re non-optimal and each time we change something there’s a chance of us introducing a new bug.

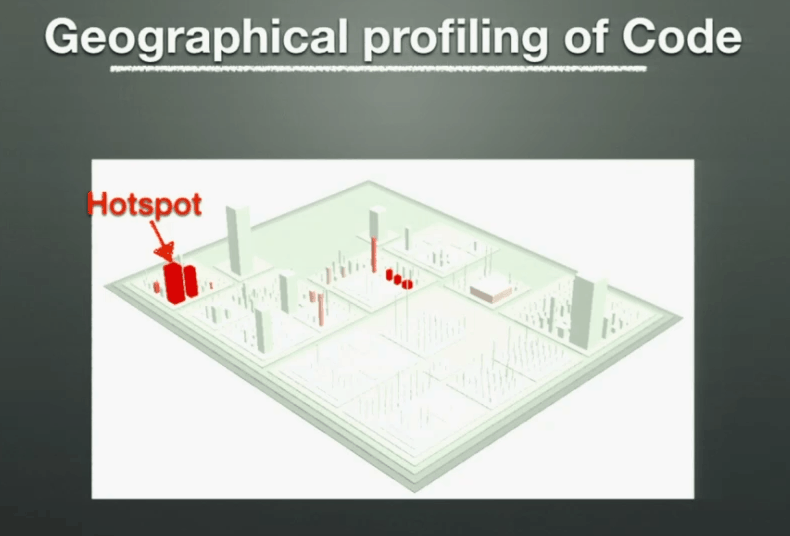

If you look at Adam Tornhill’s Code as Crime Scene talk, this is also the basis for his work on geographical profiling of code.

Finally, keep in mind that these analyses are intended to give you indicators of where you should be doing targeted refactoring, but at the end of the day they’re still just indicators.

Links

- CraftConf 15 takeaways

- QCon London 15 – Takeaways from “Code as Crime Scene”

- MS Research – Use of relative code churn measures to predict system defect density


http://www.bth.se/fou/cuppsats.nsf/28b5b36bec7ba32bc125744b004b97d0/2e48c5bc1c234d0ec1257c77003ac842!OpenDocument&Highlight=0,siverland* but lots of research in this area e.g. http://2015.msrconf.org

If you watch the talk, Michael actually referenced the first paper you linked.

Great to see a whole conference dedicated to mining software repos! Thanks for the link, are the talks recorded? I could only find a few PDFs, would be nice to watch the talks.

Right, that’s just the working link.

MSR doesn’t record talks, as far as I know, but papers will now be online just prior to the conference for 2-3 weeks (access to research papers is an ongoing problem).