Yan Cui

Update (Jan 26, 2020): since this article was written, the problem of throughput dilution has been resolved by DynamoDB’s adaptive capacity feature, which is applied in real time.

TL;DR – The no. of partitions in a DynamoDB table goes up in response to increased load or storage size, but it never comes back down, ever.

DynamoDB is pretty great, but as I have seen this particular problem at 3 different companies – Gamesys, JUST EAT, and now Space Ape Games – I think it’s a behaviour that more folks should be aware of.

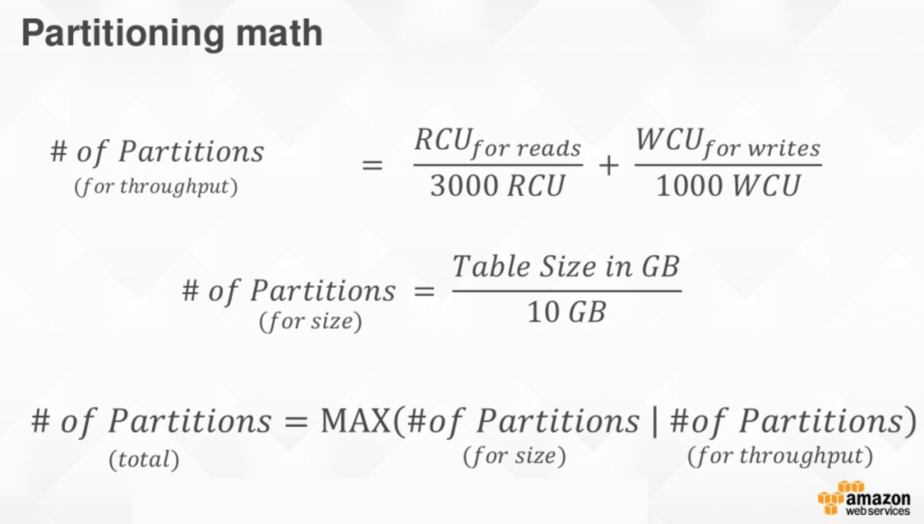

Credit to AWS: they have regularly talked about the formula for working out the no. of partitions at DynamoDB Deep Dive sessions.

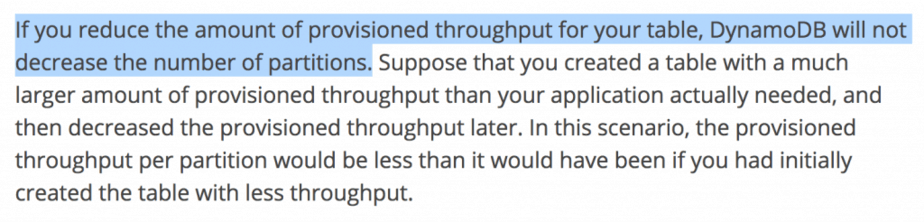

However, they often forget to mention that DynamoDB will not decrease the no. of partitions when you reduce your throughput units. It’s a crucial detail that is badly under-represented in a lengthy Best Practice guide.

Consider the following scenario:

- you dial up the throughput for a table because there’s a sudden spike in traffic or you need the extra throughput to run an expensive scan

- the extra throughput causes DynamoDB to increase the no. of partitions

- you dial down the throughput to previous levels, but now you notice that some requests are throttled even when you have not exceeded the provisioned throughput on the table

This happens because there are fewer read and write throughput units per partition than before, due to the increased no. of partitions. This translates to a higher likelihood of exceeding the read/write throughput on a per-partition basis (even if you’re still under the throughput limits on the table overall).
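To make the dilution concrete, here is a minimal sketch of the scenario above. It assumes the partition formula commonly quoted in AWS's DynamoDB Deep Dive talks (roughly 3,000 read units or 1,000 write units per partition, and 10 GB of storage per partition), and that provisioned throughput is split evenly across partitions. The constants, function names and throughput figures are illustrative assumptions, not values taken from this post, and the sketch models the behaviour before adaptive capacity.

```python
import math

# Assumed per-partition limits, as commonly quoted in AWS Deep Dive talks.
MAX_RCU_PER_PARTITION = 3000
MAX_WCU_PER_PARTITION = 1000
MAX_GB_PER_PARTITION = 10


def required_partitions(rcu, wcu, size_gb):
    """Partitions needed for the given throughput and storage size."""
    by_throughput = math.ceil(rcu / MAX_RCU_PER_PARTITION + wcu / MAX_WCU_PER_PARTITION)
    by_size = math.ceil(size_gb / MAX_GB_PER_PARTITION)
    return max(by_throughput, by_size, 1)


def simulate(steps, size_gb):
    """Apply a series of (rcu, wcu) settings; the partition count never decreases."""
    partitions = 0
    history = []
    for rcu, wcu in steps:
        partitions = max(partitions, required_partitions(rcu, wcu, size_gb))
        history.append((rcu, wcu, partitions, wcu / partitions))
    return history


# Hypothetical table: normal load, a temporary scale-up, then back to normal.
history = simulate([(1000, 500), (6000, 5000), (1000, 500)], size_gb=5)
for rcu, wcu, partitions, wcu_per_partition in history:
    print(f"RCU={rcu} WCU={wcu} partitions={partitions} "
          f"WCU per partition={wcu_per_partition:.1f}")
```

In this hypothetical run the table starts on 1 partition, grows to 7 during the scale-up, and stays at 7 afterwards, so the same 500 write units are now spread over 7 partitions instead of 1.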

When this dilution of throughput happens you can:

- migrate to a new table

- specify higher table-level throughput to boost the throughput units per partition to previous levels

Given the difficulty of table migrations, most folks would opt for the second option, which is how JUST EAT ended up with a table with 3000+ write throughput units despite consuming closer to 200 write units/s.
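As a rough illustration of why the second option gets expensive, the sketch below works out the table-level write throughput needed to restore the original per-partition throughput once the partition count has grown. It assumes throughput is divided evenly across partitions. The function name and the partition counts are hypothetical, chosen only to show the arithmetic; they are not JUST EAT's actual figures.

```python
def table_wcu_to_restore(original_wcu, original_partitions, current_partitions):
    """Table-level WCU needed so each partition gets its original share again."""
    per_partition = original_wcu / original_partitions
    return per_partition * current_partitions


# Hypothetical: 200 WCU on 1 partition, and the table now has 15 partitions.
needed = table_wcu_to_restore(original_wcu=200, original_partitions=1,
                              current_partitions=15)
overprovision_factor = needed / 200
print(needed, overprovision_factor)
```

Under these assumed numbers you would pay for 15 times the write throughput you actually consume, just to keep each partition at its previous level.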

In conclusion, you should think very carefully before scaling up a DynamoDB table drastically in response to temporary needs, because it can have long-lasting cost implications.

Yan,

Thanks for some great insights from your lambda adventures at Yubl, and after. Do you consult on designing lambda infrastructures? My startup (Tradle) is migrating infrastructure from containerized to lambda. Is there a way we can get some time with you to pick your brain and evaluate some tentative designs?

Thanks,

-Mark

Hi Mark,

I don’t do consulting, but I’m happy to help you out if you have specific questions. DM me on Twitter and we can sort something out.

Cheers,

Would the Aurora-based solutions be affected (maybe indirectly), since all managed DB services are based on the same low-level data management infrastructure?

I wouldn’t think so, because Aurora doesn’t manage/throttle throughput using these throughput units, so it wouldn’t be affected by this problem of diluting the throughput units when you excessively scale.

Although they might share some common distributed file system under the hood (e.g. many of Google’s services are built on top of their proprietary distributed file system), that would be a much lower level of abstraction than throughput management, which is handled at the application (as in, the database system) level.