Yan Cui


In the last couple of days or so I have spent some time reading Karl Seguin’s excellent and FREE to download ebook, Foundations of Programming, which covers many topics from dependency injection to best practices for dealing with exceptions.

The main topic that took my fancy was the Back to Basics: Memory section. Here’s a summary I put together, with some additional examples.

In C#, variables are stored in either the Stack or the Heap based on their type:

- Value types go on the stack

- Reference types go on the heap

Remember, a struct in C# is a value type, as is an enum, so both go on the stack when declared as local variables. This is why it’s generally recommended (for better performance) that you prefer a struct to a reference type for small objects which mainly store data.

Note that value types which are fields of a reference type go on the heap, along with the instance of the reference type they belong to.
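The practical difference between the two kinds of type shows up when you copy a variable. Here is a minimal sketch, assuming two hypothetical types (PointStruct and PointClass, neither of which comes from the ebook): assigning a struct copies the whole value, whereas assigning a class instance only copies the reference.

```csharp
using System;

// Hypothetical types, defined here purely for illustration.
public struct PointStruct { public int X; public int Y; }
public class PointClass { public int X; public int Y; }

public static class ValueVsReferenceDemo
{
    // Copies a struct, changes the copy, and returns the original's X.
    public static int MutateCopyOfStruct()
    {
        var original = new PointStruct { X = 1, Y = 2 };
        var copy = original;   // the whole value is copied
        copy.X = 42;           // only the copy changes
        return original.X;
    }

    // Copies a reference, changes the object through it, and returns the original's X.
    public static int MutateCopyOfClassReference()
    {
        var original = new PointClass { X = 1, Y = 2 };
        var alias = original;  // only the reference is copied
        alias.X = 42;          // both variables point at the same heap object
        return original.X;
    }

    public static void Main()
    {
        Console.WriteLine(MutateCopyOfStruct());          // prints 1
        Console.WriteLine(MutateCopyOfClassReference());  // prints 42
    }
}
```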

In Java, everything apart from the primitive types is a reference type, so almost every object ends up on the heap, which makes the size of the heap one of the most important factors in the performance of a Java application. The C# creators saw this as inefficient and unnecessary, which is why we have value types in C# today :-)

The Stack

Values on the stack are automatically managed even without garbage collection because items are added and removed from the stack in a LIFO fashion every time you enter/exit a scope (be it a method or a statement), which is precisely why variables defined within a for loop or if statement aren’t available outside that scope.

You will receive a StackOverflowException when you’ve used up all the available space on the stack, though it’s almost certainly a symptom of infinite recursion (bug!) or a poorly designed system which involves near-endless recursive calls.
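To make the link between recursion depth and stack usage concrete, here is a small sketch of my own (the RecursionDemo class and its methods are hypothetical names). Every recursive call adds a frame to the stack, so the recursive version only copes with modest inputs, while the loop uses the same amount of stack no matter how large the input is.

```csharp
using System;

public static class RecursionDemo
{
    // Recursive sum of 1..n: one stack frame per call, so a large enough n
    // would exhaust the stack and bring the process down.
    public static long SumRecursive(int n)
    {
        return n == 0 ? 0 : n + SumRecursive(n - 1);
    }

    // Iterative sum of 1..n: constant stack usage regardless of n.
    public static long SumIterative(int n)
    {
        long total = 0;
        for (int i = 1; i <= n; i++)
        {
            total += i;
        }
        return total;
    }

    public static void Main()
    {
        Console.WriteLine(SumRecursive(1000));      // prints 500500
        Console.WriteLine(SumIterative(10000000));  // prints 50000005000000
    }
}
```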

The Heap

Most heap-based memory allocations occur when we create a new object, at which point the runtime works out how much memory is needed, allocates an appropriate amount of space and returns a pointer to the allocated memory.

Unlike the stack, objects on the heap aren’t local to a given scope. Instead, most are deeply nested references of other referenced objects. In unmanaged languages like C, it’s the programmer’s responsibility to free any allocated memory, a manual process which has inevitably led to many memory leaks down the years!

In managed languages, the runtime takes care of cleaning up memory. The .Net framework uses a generational garbage collector which groups objects into generations (0, 1 and 2) based on how many collections they have survived, and collects the youngest generation most often.
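You can peek at the generations yourself with the GC class. The sketch below is only an illustration (GenerationDemo is a hypothetical name), and I have deliberately left out expected output because the exact generation numbers depend on the runtime, the build configuration and when collections happen.

```csharp
using System;

public static class GenerationDemo
{
    // Returns the generation the GC currently reports for a brand new object.
    public static int GenerationOfNewObject()
    {
        var fresh = new object();
        return GC.GetGeneration(fresh);
    }

    public static void Main()
    {
        var survivor = new object();

        Console.WriteLine("Highest generation: " + GC.MaxGeneration);
        Console.WriteLine("Before a collection: gen " + GC.GetGeneration(survivor));

        // Forcing a collection is fine in a demo, but rarely a good idea in real code.
        GC.Collect();

        // An object that survives a collection is normally promoted to an older generation.
        Console.WriteLine("After a collection: gen " + GC.GetGeneration(survivor));

        // Keep the object reachable up to this point so it cannot be collected early.
        GC.KeepAlive(survivor);
    }
}
```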

How they work together

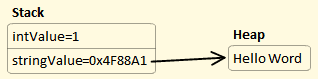

As mentioned earlier, every time you create a new object, some memory gets allocated and what you assign to your variable is actually a reference pointer to the start of that block of memory. This reference pointer is essentially a memory address (a number, usually displayed in hexadecimal), and like any other integer it resides on the stack unless it is a field of a reference type.

So for example, the following code will result in two values on the stack, one of which is a pointer to the string:

```csharp
int intValue = 1;
string stringValue = "Hello World";
```

When these two variables go out of scope, the values are popped off the stack, but the memory allocated on the heap is not cleared. Whilst this results in a memory leak in C/C++, the garbage collector (GC) will free up the allocated memory for you in a managed language like C# or Java.

Pitfalls in C#

Despite having the GC to do all the dirty work so you don’t have to, there are still a number of pitfalls which might sting you:

Boxing & Unboxing

Boxing occurs when a value type is ‘boxed’ into a reference type (when you put a value type into an ArrayList for example). Unboxing occurs when a reference type is converted back into a value type (when you cast an item from the ArrayList back to its original type for example).

Generics, introduced in .Net 2.0, improve type safety and also remove the performance hit of boxing and unboxing, because a generic collection such as List<int> stores its values without boxing them.
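Here is a short sketch of the two approaches side by side (BoxingDemo and its methods are hypothetical names of mine). The ArrayList version boxes every int on the way in and needs a cast to unbox it on the way out, while the List<int> version does neither.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class BoxingDemo
{
    // ArrayList stores object, so every int is boxed when added
    // and must be unboxed with a cast when read back.
    public static int SumWithArrayList()
    {
        var items = new ArrayList();
        items.Add(1);   // boxing: the int is copied into a new heap object
        items.Add(2);

        int total = 0;
        foreach (object item in items)
        {
            total += (int)item;   // unboxing
        }
        return total;
    }

    // List<int> stores the ints directly, so there is no boxing and no cast.
    public static int SumWithGenericList()
    {
        var items = new List<int> { 1, 2 };

        int total = 0;
        foreach (int item in items)
        {
            total += item;
        }
        return total;
    }

    // A boxed value is a copy: changing the original afterwards does not affect it.
    public static bool BoxedCopyIsIndependent()
    {
        int value = 1;
        object boxed = value;
        value = 2;
        return (int)boxed == 1;
    }

    public static void Main()
    {
        Console.WriteLine(SumWithArrayList());        // prints 3
        Console.WriteLine(SumWithGenericList());      // prints 3
        Console.WriteLine(BoxedCopyIsIndependent());  // prints True
    }
}
```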

ByRef

Most developers understand the implication of passing a value type by reference, but few understand why you’d want to pass a reference by reference. When you pass a reference type ByValue you are actually passing a copy of the reference pointer, but when you pass a reference type ByRef you’re passing the reference pointer itself.

The only reason to pass a reference type by reference is if you want to modify the pointer itself – as in where it points to. However, this can lead to some nasty bugs:

```csharp
using System;
using System.Collections.Generic;

public static class Program
{
    public static void Main()
    {
        List<string> list = new List<string> { "Hello", "World" };

        // pass a copy of the reference pointer
        NoBug(list);

        // no error here, the caller's reference is untouched
        Console.WriteLine(list.Count);

        // pass the actual reference pointer
        BadBug(ref list);

        // reference pointer has been amended, this throws NullReferenceException!
        Console.WriteLine(list.Count);
    }

    public static void BadBug(ref List<string> list)
    {
        list = null; // this changes the original reference pointer
    }

    public static void NoBug(List<string> list)
    {
        list = null; // this changes the local copy of the reference pointer
    }
}
```

In almost all cases, you should use an out parameter or a simple assignment instead (whichever expresses your intention more clearly).

Whilst I’m on the topic, do you know the difference between using out and using ref? When you pass a parameter to a method using the out keyword, the parameter must be assigned inside the method scope; when you pass a parameter to a method using the ref keyword, the parameter must be assigned before it’s passed to the method.
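A quick sketch of the difference, using two hypothetical methods of my own (TryHalve and Increment): the out parameter can be passed in unassigned but has to be assigned on every path before the method returns, whereas the ref parameter has to hold a value before the call and may be read as well as written inside the method.

```csharp
using System;

public static class OutVsRefDemo
{
    // out: 'result' must be assigned on every path before the method returns.
    public static bool TryHalve(int input, out int result)
    {
        if (input % 2 == 0)
        {
            result = input / 2;
            return true;
        }

        result = 0;
        return false;
    }

    // ref: the caller must have assigned 'counter' already; the method can read and modify it.
    public static void Increment(ref int counter)
    {
        counter++;
    }

    public static void Main()
    {
        int half;                          // no initial value needed for out
        bool ok = TryHalve(10, out half);
        Console.WriteLine(ok + " " + half);   // prints True 5

        int counter = 41;                  // must be assigned before being passed by ref
        Increment(ref counter);
        Console.WriteLine(counter);           // prints 42
    }
}
```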

Managed Memory Leaks

Yes, memory leaks are still possible in a managed language! Typically, this type of leak happens when you hold on to a reference indefinitely. Most of the time this has no noticeable impact on your application, but it can sting you rather unexpectedly as the system matures and starts to handle greater loads of data. For example, I ran into a platform bug with ADO.NET a little while back and it took the best part of a week to figure out and fix!

There are memory profilers out there that can help hunt down memory leaks in a .Net application, the best ones being dotTrace and ANTS Profiler. For memory profiling, I prefer ANTS Profiler, which allows you to easily compare two snapshots of your memory usage.

One specific situation worth mentioning as a common cause of memory leaks is events. When an object registers a handler for an event, the event source holds a reference to that object. Unless you de-register from the event, your object’s lifetime will ultimately be determined by the event source. Two solutions exist:

1. de-registering from events when you’re done (the IDisposable pattern is ideal here)

2. use the WeakEvent Pattern or a simplified version.
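The first option can be sketched roughly as follows. Publisher and Subscriber are hypothetical classes of mine, and SubscriberCount exists only so the demo can show that the handler really has been removed; it is one way to wire this up rather than the definitive pattern.

```csharp
using System;

public class Publisher
{
    public event EventHandler SomethingHappened;

    public void Raise()
    {
        var handler = SomethingHappened;
        if (handler != null) handler(this, EventArgs.Empty);
    }

    // Demo-only helper: how many handlers are currently attached.
    public int SubscriberCount
    {
        get { return SomethingHappened == null ? 0 : SomethingHappened.GetInvocationList().Length; }
    }
}

public sealed class Subscriber : IDisposable
{
    private readonly Publisher _publisher;

    public int Received { get; private set; }

    public Subscriber(Publisher publisher)
    {
        _publisher = publisher;
        // From here on the publisher holds a reference to this subscriber.
        _publisher.SomethingHappened += OnSomethingHappened;
    }

    private void OnSomethingHappened(object sender, EventArgs e)
    {
        Received++;
    }

    // De-register so the publisher no longer keeps this object alive.
    public void Dispose()
    {
        _publisher.SomethingHappened -= OnSomethingHappened;
    }
}

public static class EventDemo
{
    public static int SubscribersAfterDispose()
    {
        var publisher = new Publisher();
        using (var subscriber = new Subscriber(publisher))
        {
            publisher.Raise();
        }
        return publisher.SubscriberCount;
    }

    public static void Main()
    {
        Console.WriteLine(SubscribersAfterDispose());   // prints 0
    }
}
```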

Another potential source of memory leaks is a caching mechanism without any expiration policy, in which case your cache is likely to keep growing until it takes up all available memory and triggers an OutOfMemoryException.
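As a rough illustration of putting a limit on a cache, here is a minimal sketch of a size-capped cache that evicts the oldest entry once it is full. BoundedCache is a hypothetical class of mine, it uses simple first-in-first-out eviction rather than time-based expiration, and it isn’t thread-safe; in a real application you would more likely reach for an existing cache implementation with proper expiration support.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, deliberately simple cache: FIFO eviction, not thread-safe.
public class BoundedCache<TKey, TValue>
{
    private readonly int _capacity;
    private readonly Dictionary<TKey, TValue> _items = new Dictionary<TKey, TValue>();
    private readonly Queue<TKey> _insertionOrder = new Queue<TKey>();

    public BoundedCache(int capacity)
    {
        if (capacity <= 0) throw new ArgumentOutOfRangeException("capacity");
        _capacity = capacity;
    }

    public int Count
    {
        get { return _items.Count; }
    }

    public void Set(TKey key, TValue value)
    {
        if (!_items.ContainsKey(key))
        {
            // Make room for the new key by evicting the oldest one.
            if (_items.Count >= _capacity)
            {
                TKey oldest = _insertionOrder.Dequeue();
                _items.Remove(oldest);
            }
            _insertionOrder.Enqueue(key);
        }
        _items[key] = value;
    }

    public bool TryGet(TKey key, out TValue value)
    {
        return _items.TryGetValue(key, out value);
    }
}

public static class CacheDemo
{
    public static void Main()
    {
        var cache = new BoundedCache<string, int>(2);
        cache.Set("a", 1);
        cache.Set("b", 2);
        cache.Set("c", 3);   // "a" is evicted to stay within the capacity

        int value;
        Console.WriteLine(cache.Count);                  // prints 2
        Console.WriteLine(cache.TryGet("a", out value)); // prints False
        Console.WriteLine(cache.TryGet("c", out value)); // prints True
    }
}
```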

Fragmentation

As your program runs its course, the heap becomes increasingly fragmented and you could end up with a lot of unusable memory space spread out between usable chunks of memory.

Usually, the GC will take care of this by compacting the heap and the .Net framework will update the references accordingly, but there are times when the .Net framework can’t move an object – when the object is pinned to a specific memory location.

Pinning

Pinned memory occurs when an object is locked to a specific address on the heap. This usually is a result of interaction with unmanaged code – the GC updates object references in managed code when it compacts the heap, but has no way of updating the references in unmanaged code and therefore before interoping it must first pin objects in memory.

A common way to get around this is to allocate a few large objects, which don’t cause as much fragmentation as many small ones. Large objects (roughly 85,000 bytes or more) are placed in a special heap called the Large Object Heap (LOH), which isn’t compacted by default. For more information on pinning, here’s a good article on pinning and asynchronous sockets.

Another reason why an object might be pinned is if you compile your assembly with the unsafe option, which then allows you to pin an object via the fixed statement. The fixed statement can greatly improve performance by allowing objects to be manipulated directly with pointer arithmetic, which isn’t possible if the object isn’t pinned because the GC might relocate your object.

Under normal circumstances however, you should never mark your assembly as unsafe and use the fixed statement!
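If you want to see pinning in action without marking anything as unsafe, GCHandle offers another way to pin an object. Note that this is a different technique from the fixed statement described above, and PinningDemo is a hypothetical name of mine. The sketch pins a byte array, reads its first byte through the raw address, and always frees the handle so the GC is free to move the array again.

```csharp
using System;
using System.Runtime.InteropServices;

public static class PinningDemo
{
    // Pins the buffer, reads its first byte through the pinned address, then unpins it.
    public static byte ReadFirstByteViaPinnedAddress(byte[] buffer)
    {
        // While this handle is allocated, the GC cannot move the array.
        GCHandle handle = GCHandle.Alloc(buffer, GCHandleType.Pinned);
        try
        {
            IntPtr address = handle.AddrOfPinnedObject();
            return Marshal.ReadByte(address);
        }
        finally
        {
            // Always release the pin, otherwise the object stays locked in place.
            handle.Free();
        }
    }

    public static void Main()
    {
        var buffer = new byte[] { 7, 8, 9 };
        Console.WriteLine(ReadFirstByteViaPinnedAddress(buffer));   // prints 7
    }
}
```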

Garbage Spewers

Already discussed here.

Setting things to null

You don’t need to set your reference variables to null after you’re done with them, because once a variable falls out of scope it will be popped off the stack anyway.

Deterministic Finalization

Even in a managed environment, developers still need to manage some of their references such as file handles or database connections because these resources are limited and therefore should be freed as soon as possible. This is where deterministic finalization and the Dispose pattern come into play, because deterministic finalization releases resources, not memory.

If you don’t call Dispose on an object which implements IDisposable, the GC will never call it for you; at best it will eventually run the object’s finalizer, if it has one. To release precious resources such as DB connections in a timely fashion you should use the using statement wherever possible.
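Here is a small sketch of what the using statement buys you, with a hypothetical FakeConnection class standing in for a real file handle or database connection. Dispose runs as soon as the block is left, even when the block is exited by an exception.

```csharp
using System;

// Hypothetical stand-in for a real resource such as a database connection.
public sealed class FakeConnection : IDisposable
{
    public bool IsOpen { get; private set; }

    public FakeConnection()
    {
        IsOpen = true;
    }

    public void Dispose()
    {
        IsOpen = false;
    }
}

public static class UsingDemo
{
    // Dispose is called when the using block ends normally.
    public static bool IsOpenAfterUsing()
    {
        var connection = new FakeConnection();
        using (connection)
        {
            // work with the connection here
        }
        return connection.IsOpen;
    }

    // Dispose is also called when the using block is left via an exception.
    public static bool IsOpenAfterException()
    {
        var connection = new FakeConnection();
        try
        {
            using (connection)
            {
                throw new InvalidOperationException("simulated failure");
            }
        }
        catch (InvalidOperationException)
        {
            // swallowed for the purposes of the demo
        }
        return connection.IsOpen;
    }

    public static void Main()
    {
        Console.WriteLine(IsOpenAfterUsing());      // prints False
        Console.WriteLine(IsOpenAfterException());  // prints False
    }
}
```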
