Yan Cui

I help clients go faster for less using serverless technologies.

I’ve spent time with Rust at various points in the past. Since it was a language in development, it was no surprise that every time I looked there were breaking changes, and even the documentation looked very different at every turn!

Fast forward to May 2015: Rust has now hit the 1.0 milestone, so things are stable and it’s a good time to start looking into the language in earnest.

The website is looking good, and there is an interactive playground where you can try Rust out without installing it. The documentation has been beefed up and is readily accessible through the website. Personally, I find Rust by Example useful for getting started quickly.

Ownership

The big idea that came out of Rust was the notion of “borrowed pointers”, though the documentation no longer uses that particular term. Instead, it talks more broadly about an ownership system and “zero-cost abstractions”.

Zero-cost what?

The abstractions we’re talking about here are much lower level than what I’m used to: pointers, polymorphic functions, traits, type inference, etc.

Its pointer system, for example, gives you memory safety without needing a garbage collector, and Rust pointers compile to standard C pointers without additional tagging or runtime checks.

It guarantees memory safety for your application through the ownership system, which we’ll be diving into shortly. All the analysis is performed at compile time, so it incurs no cost at runtime (hence “zero-cost”).

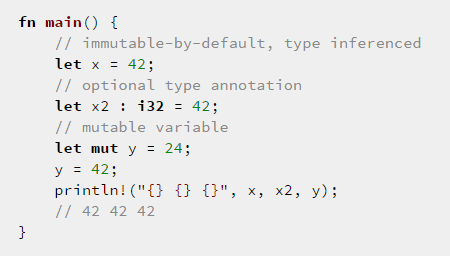

Basics

Let’s get a couple of basics out of the way first.

Note that in Rust, println! is implemented as a macro, hence the bang (!).
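The original basics example was a screenshot. As a stand-in, here is a minimal sketch (the `describe` helper is hypothetical) showing that bindings are immutable unless declared with `mut`, plus the `println!` and `format!` macros:

```rust
// Builds a description string; `format!` is a macro too, like `println!`.
fn describe(x: i32) -> String {
    format!("x = {}", x)
}

fn main() {
    let x = 5; // immutable binding: `x = 6;` here would be a compile error
    let mut y = 10; // `mut` opts in to mutation
    y += x;
    println!("{}", describe(x)); // prints "x = 5"
    println!("y = {}", y); // prints "y = 15"
}
```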

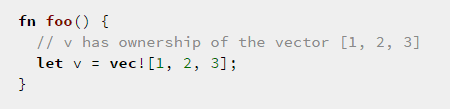

Ownership

When you bind a variable to something in Rust, the binding claims ownership of the thing it’s bound to. For example, a function foo() might bind v to a newly allocated vector.

When v goes out of scope at the end of foo(), Rust will reclaim the memory allocated for the vector. This happens deterministically, at the end of the scope.
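To make that deterministic cleanup visible, here is a sketch that assumes a hypothetical `Noisy` type whose `Drop` implementation prints a message. The vector `v` is freed at the same point, just silently:

```rust
// Hypothetical helper type: prints when it is dropped so cleanup is observable.
struct Noisy(&'static str);

impl Drop for Noisy {
    fn drop(&mut self) {
        println!("dropping {}", self.0);
    }
}

fn foo() -> usize {
    let v = vec![1, 2, 3]; // `v` owns the heap-allocated vector
    let _n = Noisy("foo's value");
    v.len()
} // `_n` is dropped here, then `v` (freeing its heap buffer): reverse declaration order

fn main() {
    let len = foo(); // both drops have already happened when `foo` returns
    println!("len = {}", len);
}
```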

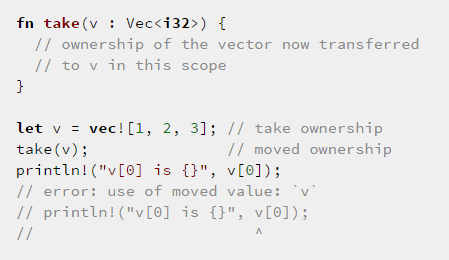

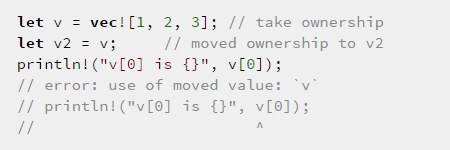

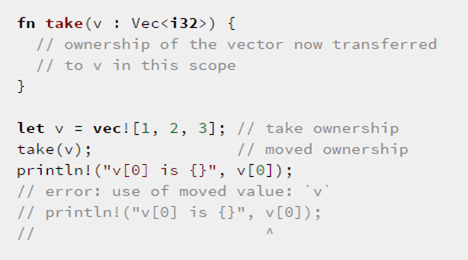

When you pass v to a function or assign it to another binding, you have effectively moved ownership of the vector to the new binding. If you try to use v again after this point, you’ll get a compile-time error.

This ensures there’s only one active binding to any heap-allocated memory at a time, and eliminates data races.

There is a ‘data race’ when two or more pointers access the same memory location at the same time, where at least one of them is writing, and the operations are not synchronized.
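Here is a minimal sketch of the move described above. It uses the `take()` name that the Borrowing section refers to, though its body here is an assumption. The lines that would fail to compile are commented out so the program runs:

```rust
fn take(v: Vec<i32>) -> usize {
    // `take` now owns the vector; it is freed when `take` returns
    v.len()
}

fn main() {
    let v = vec![1, 2, 3];
    let n = take(v); // ownership of the vector moves into `take`
    println!("take() saw {} elements", n);
    // println!("{:?}", v); // would not compile (E0382): `v` was moved into `take`

    let a = vec![4, 5];
    let b = a; // assigning to another binding also moves ownership
    // println!("{:?}", a); // would not compile either: `a` was moved into `b`
    println!("{:?}", b);
}
```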

Copy trait

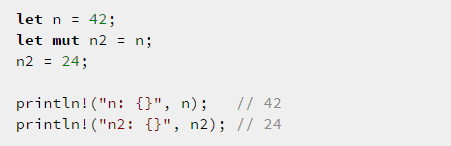

Primitive types such as i32 (i.e. int32) are stack allocated and exempt from this restriction. They’re passed by value, so a copy is made when you pass it to a function or assign it to another binding.

The compiler knows to make a copy of n because i32 implements the Copy trait (a trait is roughly equivalent to an interface in .Net/Java).
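For contrast, here is a sketch with a hypothetical `double` function. Because `i32` is `Copy`, passing or assigning `n` leaves the original binding usable:

```rust
fn double(n: i32) -> i32 {
    // `n` is a copy; the caller's value is untouched
    n * 2
}

fn main() {
    let n = 21;
    let d = double(n); // `n` is copied, not moved
    let m = n; // another copy
    println!("{} {} {}", n, d, m); // `n` is still usable: prints "21 42 21"
}
```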

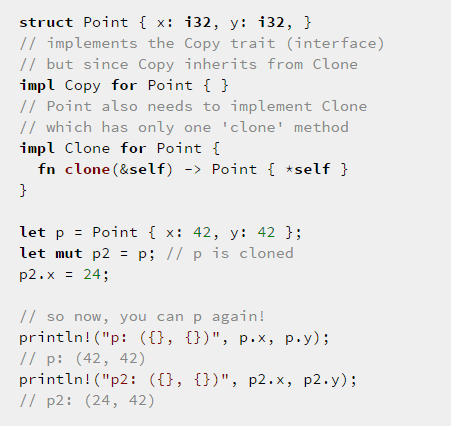

You can extend this behaviour to your own types by implementing the Copy trait:

Don’t worry about the syntax for now, the point here is to illustrate the difference in behaviour when dealing with a type that implements the Copy trait.
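Here is a sketch of opting a hypothetical `Point` struct into `Copy` via `#[derive]`. `Copy` requires `Clone`, and every field must itself be `Copy`:

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
struct Point {
    x: i32,
    y: i32,
}

fn shift(p: Point) -> Point {
    Point { x: p.x + 1, y: p.y + 1 }
}

// A struct owning a Vec cannot derive Copy, because Vec<i32> is not Copy:
// #[derive(Clone, Copy)]
// struct Bag { items: Vec<i32> } // would not compile (E0204)

fn main() {
    let p = Point { x: 1, y: 2 };
    let q = shift(p); // `p` is copied into `shift`
    println!("{:?} {:?}", p, q); // `p` is still usable
}
```

The commented-out `Bag` shows why the rule of thumb below says *can*. A type that owns heap memory through a `Vec` cannot be `Copy`.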

The general rule of thumb is: if your type can implement the Copy trait, then it should.

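But copying is not always possible (a type that owns heap memory, such as a vector, cannot implement Copy), and explicitly cloning such a value can be expensive.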

Borrowing

In the earlier example:

- ownership of the vector has been moved to the binding v in the scope of take();

- at the end of take() Rust will reclaim the memory allocated for the vector;

- but we then tried to use v in the outer scope after its ownership had been moved (and its memory potentially reclaimed), hence the error.

What if we borrow the resource instead of taking ownership of it?

Here is a real-world analogy. If I bought a book from you, then it’s mine to shred or burn after I’m done with it. If I borrowed it from you, then I have to make sure I return it to you in pristine condition.

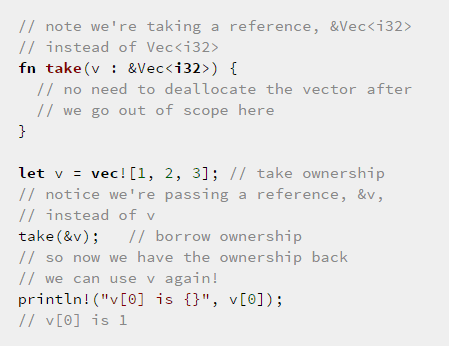

In Rust, we do this by passing a reference as an argument.
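Here is a sketch of borrowing with a hypothetical `sum` function. It takes a shared reference, so the caller keeps ownership of `v` and can still use it afterwards. Taking `&[i32]` rather than `&Vec<i32>` is an idiomatic choice, and `&v` coerces to it automatically:

```rust
fn sum(v: &[i32]) -> i32 {
    // `v` is borrowed: we can read it, but it is not dropped here
    v.iter().sum()
}

fn main() {
    let v = vec![1, 2, 3];
    let total = sum(&v); // lend `v` to `sum`
    println!("{:?} sums to {}", v, total); // `v` is still ours
}
```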

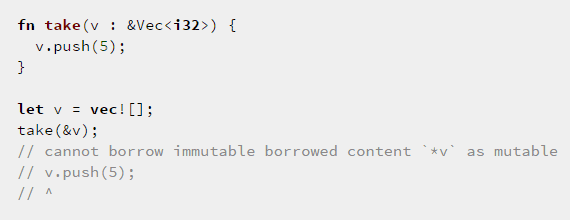

References are also immutable by default.

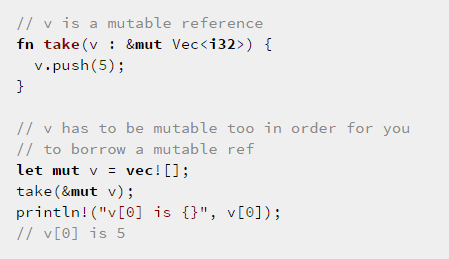

But just as you can create mutable bindings, you can create mutable references with &mut.
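Here is a sketch of a mutable borrow with a hypothetical `push_twice` helper. Note that the binding itself must be declared `mut` before it can be lent out as `&mut`:

```rust
fn push_twice(v: &mut Vec<i32>, x: i32) {
    // A mutable borrow lets the callee modify the vector in place
    v.push(x);
    v.push(x);
}

// With a plain `&` reference the same body is rejected:
// fn bad(v: &Vec<i32>) { v.push(1); } // would not compile: `v` is behind a `&` reference

fn main() {
    let mut v = vec![1];
    push_twice(&mut v, 7);
    println!("{:?}", v); // prints "[1, 7, 7]"
}
```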

There are a couple of rules for borrowing:

1. the borrower’s scope must not outlast the owner

2. you can have one of the following, but not both at the same time:

2.1. one or more immutable references (&T) to a resource; or

2.2. exactly one mutable reference (&mut T)

Rule 1 makes sense since the owner needs to clean up the resource when it goes out of scope, and a borrower that outlived it would be left pointing at freed memory.
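Here is rule 1 in action, as a sketch with a hypothetical `first_word` helper. The returned slice borrows from its argument, so the compiler rejects any use of it after the owning `String` has been dropped. The failing version is commented out:

```rust
// The returned &str borrows from `s`, so it cannot outlive whatever `s` points into
fn first_word(s: &str) -> &str {
    s.split_whitespace().next().unwrap_or("")
}

fn main() {
    let owner = String::from("hello world");
    let word = first_word(&owner); // borrower and owner share a scope: fine
    println!("{}", word);

    // Rejected by the compiler: the borrow would outlive its owner.
    // let dangling: &str;
    // {
    //     let temp = String::from("short-lived");
    //     dangling = first_word(&temp); // error (E0597): `temp` does not live long enough
    // } // `temp` is dropped here
    // println!("{}", dangling);
}
```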

For a data race to exist we need to have:

a. two or more pointers to the same resource

b. at least one is writing

c. the operations are not synchronized

Since the ownership system aims to eliminate data races at compile time without relying on runtime synchronization, it has to assume that condition c always holds.

When you have only readers (immutable references) then you can have as many as you want (rule 2.1) since condition b does not hold.

If you have writers then you need to ensure that condition a does not hold – i.e. there is only one mutable reference (rule 2.2).

Therefore, rule 2 ensures that data races cannot exist.
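Here are both halves of rule 2 in one sketch (the `borrow_rules` function is hypothetical). Inner blocks end each borrow explicitly, which also keeps the example valid under the older, lexically scoped borrow checker:

```rust
fn borrow_rules() -> (usize, Vec<i32>) {
    let mut v = vec![1, 2, 3];

    // Rule 2.1: any number of immutable (&) references can coexist
    let total_len = {
        let a = &v;
        let b = &v;
        a.len() + b.len()
    }; // `a` and `b` go out of scope here

    // Rule 2.2: exactly one mutable reference at a time
    {
        let m = &mut v;
        // let r = &v; // would not compile (E0502): `v` is already borrowed mutably by `m`
        m.push(4);
    } // `m` goes out of scope here

    (total_len, v)
}

fn main() {
    let (n, v) = borrow_rules();
    println!("{} {:?}", n, v); // prints "6 [1, 2, 3, 4]"
}
```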

Here are some issues that borrowing prevents.
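Two commonly cited examples are iterator invalidation and use after free. Here is a sketch that assumes a hypothetical `append_doubles` helper, with the rejected code commented out next to one safe alternative:

```rust
fn append_doubles(v: &mut Vec<i32>) {
    // Pushing while iterating would invalidate the iterator, so it is rejected:
    // for x in v.iter() {
    //     v.push(x * 2); // would not compile (E0502): `v` is already borrowed by the loop
    // }

    // One safe alternative: compute the new items first, then mutate
    let extra: Vec<i32> = v.iter().map(|x| x * 2).collect();
    v.extend(extra);
}

fn main() {
    let mut v = vec![1, 2];
    append_doubles(&mut v);
    println!("{:?}", v); // prints "[1, 2, 2, 4]"

    // Use after free is prevented the same way:
    // let first = &v[0];
    // v.clear(); // would not compile (E0502): `v` is still borrowed by `first`
    // println!("{}", first);
}
```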

Beyond Ownership

There are lots of other things to like about Rust: immutability by default, pattern matching, macros, etc.
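As a taste of macros, here is a sketch of a small recursive declarative macro defined with `macro_rules!`. `max!` is hypothetical and not part of the standard library:

```rust
// Returns the largest of one or more comparable expressions
macro_rules! max {
    // Base case: a single expression is its own maximum
    ($x:expr) => { $x };
    // Recursive case: compare the head with the maximum of the rest
    ($x:expr, $($rest:expr),+) => {{
        let head = $x;
        let tail = max!($($rest),+);
        if head > tail { head } else { tail }
    }};
}

fn main() {
    println!("{}", max!(3, 7, 2)); // prints "7"
    println!("{}", max!(1.5, -2.0)); // works for any PartialOrd type
}
```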

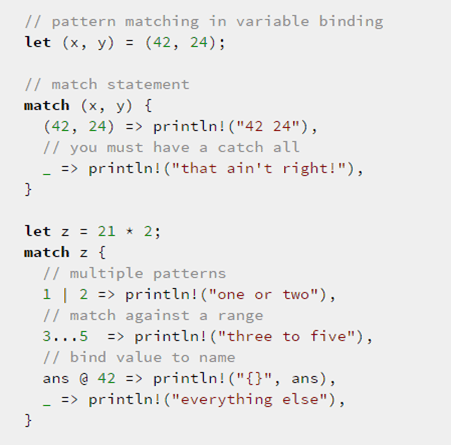

Pattern Matching

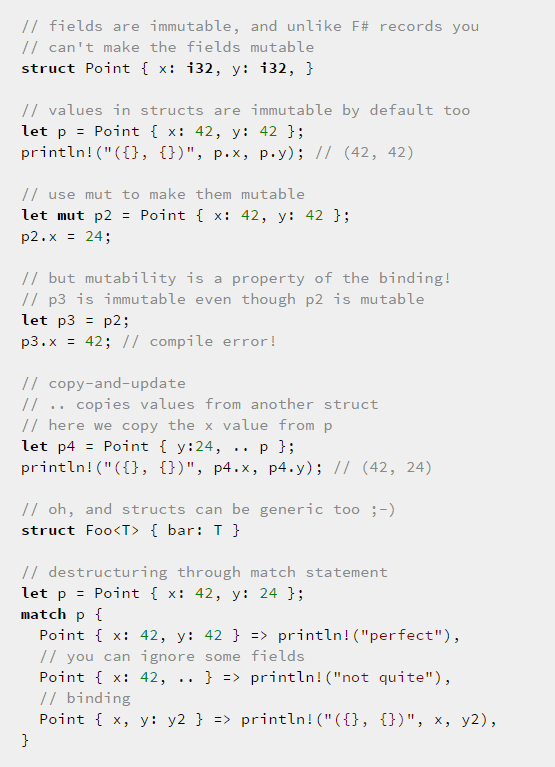
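The pattern-matching example was an image. As a stand-in, here is a sketch with hypothetical `classify` and `locate` functions showing alternatives (`|`), ranges, guards, tuple destructuring and the `_` catch-all. The compiler checks that a `match` covers every case. The `4..=9` range syntax needs a more recent compiler than 1.0:

```rust
fn classify(n: i32) -> &'static str {
    match n {
        0 => "zero",
        1 | 2 | 3 => "small",       // alternatives
        4..=9 => "medium",          // inclusive range
        x if x < 0 => "negative",   // guard
        _ => "large",               // catch-all keeps the match exhaustive
    }
}

fn locate(point: (i32, i32)) -> String {
    // Destructure the tuple directly in the patterns
    match point {
        (0, 0) => String::from("origin"),
        (x, 0) => format!("on the x axis at {}", x),
        (0, y) => format!("on the y axis at {}", y),
        (x, y) => format!("at ({}, {})", x, y),
    }
}

fn main() {
    println!("{} {}", classify(5), locate((3, 0)));
}
```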

Structs

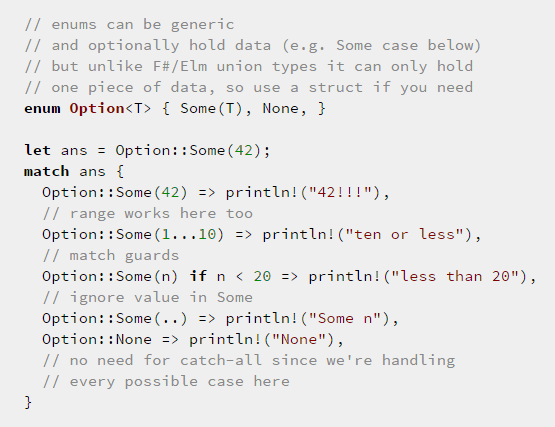
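Here is a sketch of a struct with an `impl` block (the `Rectangle` type and its methods are hypothetical). Methods take `&self` to read and `&mut self` to modify, which ties back to the borrowing rules above:

```rust
#[derive(Debug)]
struct Rectangle {
    width: u32,
    height: u32,
}

impl Rectangle {
    // Associated function, called as Rectangle::new(..)
    fn new(width: u32, height: u32) -> Rectangle {
        Rectangle { width, height }
    }

    // Borrows the rectangle immutably
    fn area(&self) -> u32 {
        self.width * self.height
    }

    // Borrows the rectangle mutably
    fn scale(&mut self, factor: u32) {
        self.width *= factor;
        self.height *= factor;
    }
}

fn main() {
    let mut r = Rectangle::new(3, 4);
    r.scale(2);
    println!("{:?} has area {}", r, r.area());
}
```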

Enums
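Here is a sketch of an enum whose variants carry different data, consumed with an exhaustive `match`. The `Shape` type is hypothetical, and the standard library's `Option<T>` is itself an enum like this:

```rust
use std::f64::consts::PI;

// Variants can be struct-like, tuple-like, or carry no data at all
enum Shape {
    Circle { radius: f64 },
    Square(f64),
    Point,
}

fn area(shape: &Shape) -> f64 {
    // Leaving out a variant here would be a compile error
    match *shape {
        Shape::Circle { radius } => PI * radius * radius,
        Shape::Square(side) => side * side,
        Shape::Point => 0.0,
    }
}

fn main() {
    let shapes = vec![Shape::Circle { radius: 1.0 }, Shape::Square(2.0), Shape::Point];
    for s in &shapes {
        println!("{}", area(s));
    }
}
```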

Even from these basic examples, you can see the influence of functional programming, especially immutability by default, which fits well with Rust’s goal of combining safety with speed.

Rust also has a good concurrency story (pretty much mandatory for any modern language), which has been discussed in detail in this post.
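As a small independent sketch (not taken from the post mentioned above), here is a hypothetical `parallel_squares` function using `std::thread` and an `mpsc` channel. The `move` closures take ownership of what each thread needs, so the same ownership rules govern what threads can share:

```rust
use std::sync::mpsc;
use std::thread;

fn parallel_squares(inputs: Vec<i32>) -> Vec<i32> {
    let (tx, rx) = mpsc::channel();
    let mut handles = Vec::new();

    for (i, n) in inputs.into_iter().enumerate() {
        let tx = tx.clone();
        // `move` transfers ownership of `tx`, `i` and `n` into the new thread
        handles.push(thread::spawn(move || {
            tx.send((i, n * n)).unwrap();
        }));
    }
    drop(tx); // drop the original sender so `rx.iter()` ends once all threads finish

    let mut results: Vec<(usize, i32)> = rx.iter().collect();
    for handle in handles {
        handle.join().unwrap();
    }
    results.sort(); // restore input order using the index sent alongside each result
    results.into_iter().map(|(_, sq)| sq).collect()
}

fn main() {
    println!("{:?}", parallel_squares(vec![1, 2, 3, 4])); // prints "[1, 4, 9, 16]"
}
```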

Overall, I enjoy coding in Rust, and the ownership system is pretty mind-opening too. With both Go and Rust coming of age and targeting a similar space around systems programming, it’ll be very interesting to watch this space develop.

Links

- Rust by Example

- The Rust book

- Learn Rust in Y minutes

- Fearless Concurrency with Rust

- Rust means never having to close a socket


So you pretty much have a subset of the new C++ standard. You can have all of those, and more.

Very similar pattern matching is coming too!

So there’s no need to look further than the new C++14/17, which weakens the case for Rust/Go.

I think whether Rust/Go is a reasonable option depends on where you’re coming from – if you’re already proficient with C++ then the new features might mean that you don’t need to switch language to get those nice high-level language features.

But if, on the other hand, you’re coming in from outside the C++ world, then C++ is a very large language to come to terms with, and the new C++ standards in fact make it even bigger.

I might be naive here, but I suspect you can probably make life easier for yourself if you just stick to using the high-level features that are becoming available, but I imagine you’ll still have to learn everything else in order to understand and work with the vast amounts of existing C++ libraries out there.

Is this a fair assumption?

Rust & Go are appealing to me (someone who has never worked with C++ professionally) because both are quite small and easy to pick up and be productive with. In the case of Go, it’s also supported by Google App Engine (although the support for Go is still marked as beta), which is a pretty awesome PaaS and something we already use heavily in our Python team.

“and the new C++ standards in fact make it even bigger”

True, the ISO standard now runs to 1700 pages, but in many respects the language has become simpler: less verbose and more flexible. It really looks and feels like a brand new language. Like Python, but strongly typed!

There’s only one way in which the language became more complicated, and it has to do with move semantics. I see Rust’s approach as making it more transparent for the user, while C++ forces you to truly understand the new concept before using it.

There’s no NEED to understand everything else to jump on the new C++, IMHO. In fact, all the mental baggage from C++98, and especially from C, must be put aside to truly appreciate the new C++. Naked pointers and manual memory management are things of the past.

However, I see why Go and Rust might well be better suited for beginners to systems programming. Having less is sometimes more :P

Anyone serious about systems programming, though, would still need to jump to C++ IMO, where you can replicate the Rust mentality exactly, and more!

Looking for your take on Go.

I don’t have much time left to invest in it myself. There are two selling points I was pointed to:

– clean and mean interfaces

– goroutines

What do you think?

Thanks for the info regarding the state of C++. What would you recommend as reading material to get started with the new C++ without also having to learn the mental baggage of the old?

Agree with your point on needing to dive into C++ for system programming, and even high-level languages like Erlang have to often rely on C++ for the low-level, performance-critical parts of systems.

Personally, I think there’ll always be a place for C++ in the language landscape, but systems programming is a big enough space to accommodate Rust and Go too. In Go’s case, it helps to have Google behind it, and it already has a champion product in Docker (I hear a lot of people learn to program in Go just so they can contribute to Docker).

I do like Go’s interfaces a lot (https://theburningmonk.com/2015/05/why-i-like-golang-interfaces/), and the programming model that goroutines and channels give you is nice too (although a few other languages have an equally nice programming model).

On goroutines: they’re based on CSP, and from my understanding there are a couple of interesting differences from the actor model (Erlang, Akka):

* in CSP, message passing is synchronous

* in CSP, processes have a fixed topology

(Interestingly, Go does support non-blocking sends and receives through its ‘select’ keyword, which also allows a variable topology through multi-way selects; see the example here: https://gobyexample.com/non-blocking-channel-operations)

* in the actor model you tend to design around behaviour with encapsulated state, whereas in CSP the focus seems to be on parallel computation (but this is just based on my limited exposure to Go, so I could be totally wrong here!)

A nice crash-course guide to the new C++ is: http://cpprocks.com/

It also has compiler-specific editions. They are relatively cheap, lesson-style books. For more in-depth coverage, “Effective Modern C++” is a good one from what I’ve heard.

I recall reading your blog post about the strong duck typing in Go. Neat, but can you be more explicit? Is there more to it?

For goroutines, I really need to find out how they’re implemented internally. At the point of communication, there must be some blocking synchronization somewhere, right?

Will look into: http://www.usingcsp.com/

Cheers for that, added to my ever-growing to-read list!

For Go interfaces, there isn’t more to it: they’re satisfied implicitly, that’s it, simple. But that simplicity is quite powerful. In .Net, for instance, interfaces are much more expensive in the sense that you have to go around and tag every type that you want to use as that interface (and sometimes you can’t, because it might be a BCL class or defined in a 3rd party library).

Also, you tend to overfit interfaces to the functions that depend on them, and sometimes that creates interesting problems. For example, if there are two interfaces that both satisfy a function’s requirements, which one do I use when either choice would potentially exclude a whole set of implementation classes? I end up either:

a) overloading the function to support both interfaces, or

b) creating yet another interface that contains just the members I need, then tagging every implementation out there and hoping that I can come up with a good name for the interface and that the function I created the interface for doesn’t go out of existence!

And then there’s the expression problem, where you cannot change the interface or the implementation without also changing the other, since interfaces in most languages are still quite a strong form of coupling.

For goroutines, channel sends and receives are blocking by default (in the CSP model, processes don’t start sending until the other side is ready to receive), though I don’t know how it’s implemented internally.

Something very recent about pattern matching for ISO C++:

https://isocpp.org/blog/2015/05/mach7-pattern-matching-for-cpp

Coroutines are well overdue for C++, but hey, before C++11 we didn’t even have the concept of a thread. It was up to the system, and of course it’s fundamentally an OS issue, not a language one. One of the ‘side effects’ of being driven by committee, I guess.

We still don’t have sockets, and no idea about the Internet ;)

I’m interested to find out how they implemented it in Go. For instance, coroutines in Lua make use of chunking. The idea is that you break language expressions into pieces that can be suspended and interpreted later. This way you guarantee that a busy loop won’t block and, more importantly, won’t starve any communication queue. You get concurrency (or async execution) without needing parallel execution; everything runs safely on one thread. IMO this is the best way to do async when it involves talking to a GUI.

The same approach is hardly applicable to C++, as far as I understand. But hey, at least now that C++ is getting more functional, we can get by with continuations: https://isocpp.org/blog/2015/05/mach7-pattern-matching-for-cpp

Combined with the soon-expected std::futures for non-blocking/async calls, and with lambdas, this will be quite powerful and clean.

Going beyond async, when we’re talking about heavy system messaging, we have circular buffers for the task. With std::atomic you can make the index management more efficient by being lock-free. BTW, not to be mistaken for zero-cost ;)

About your class examples, I think it boils down to the house-of-cards structure we have with class-based OO. Because of the cross-coupling, pulling one card out can bring the whole tower down. Not everything fits well into tight hierarchical structures, and in general the idea behind the C++ STL is to keep data and functions separate. Generic programming through C++ templates gives you exactly that duck typing, enforced at compile time!

However, I’m afraid the use case you elaborate on eludes me. Maybe some pseudocode à la C# would help.

I can think of my own examples where class-based models break. They usually involve applying polymorphic behavior to a heterogeneous collection of objects, which is ironically what classes were designed for :(


You don’t have *any* of this in C++. Don’t spread nonsense.