Yan Cui

I help clients go faster for less using serverless technologies.

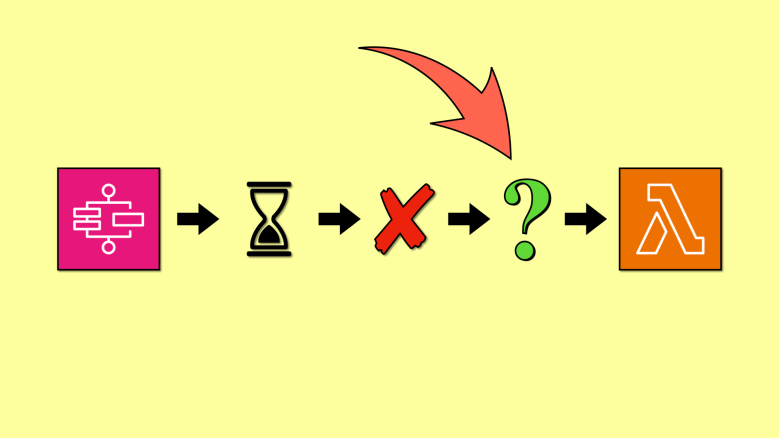

Step Functions lets you set a timeout on Task states and the whole execution.

A Standard Workflow execution can run for up to a year if no TimeoutSeconds is configured. That’s a lot of time for the workflow to finish its job. But without a more sensible timeout, an execution can appear “stuck” to the user.

AWS best practices recommend using timeouts to avoid such scenarios [1]. So it’s important to consider what happens when you experience a timeout.

You can use the Catch clause to handle the States.Timeout error when a Task state times out. You can then perform automated remediation steps.
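To make this concrete, here is a minimal sketch of a state machine definition that sets both kinds of timeout and catches States.Timeout on the Task state. The state names (DoWork, Remediate), the Lambda ARN and the timeout values are placeholders I made up for illustration, and the definition is built as a Python dict only so it can be printed as JSON.

```python
import json


def build_definition(task_arn: str, task_timeout: int, execution_timeout: int) -> dict:
    """Build an ASL definition with an execution-level and a Task-level timeout."""
    return {
        "StartAt": "DoWork",
        # Top-level TimeoutSeconds applies to the whole execution.
        "TimeoutSeconds": execution_timeout,
        "States": {
            "DoWork": {
                "Type": "Task",
                "Resource": task_arn,
                # State-level TimeoutSeconds applies to this Task only.
                "TimeoutSeconds": task_timeout,
                "Catch": [
                    {
                        "ErrorEquals": ["States.Timeout"],
                        # Keep the original input and add the error next to it.
                        "ResultPath": "$.error",
                        "Next": "Remediate",
                    }
                ],
                "End": True,
            },
            # Placeholder for the automated remediation steps.
            "Remediate": {"Type": "Pass", "End": True},
        },
    }


# Hypothetical ARN and timeouts, for illustration only.
definition = build_definition(
    "arn:aws:lambda:us-east-1:123456789012:function:do-work", 30, 300
)
print(json.dumps(definition, indent=2))
```

Note that the Catch clause only sees the Task-level timeout. Nothing inside the state machine gets to run once the top-level timeout fires.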

But what happens when the whole execution times out? How can we catch and handle execution timeouts like we do with Task states?

Here are 3 ways to do it.

EventBridge

Standard Workflows publish TIMED_OUT events to the default EventBridge bus. We can create an EventBridge rule to match against these events. That way, we can trigger a Lambda function to handle the error.

The event contains the state machine ARN, execution name, input and output. We can even use the execution ARN to fetch the full audit history of the execution.

That should give us everything we need to figure out what happened.
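As a rough sketch, the rule's event pattern and the Lambda handler could look something like this. The pattern matches the "Step Functions Execution Status Change" events with a TIMED_OUT status. The handler name and the shape of the summary it returns are my own choices for illustration, and I'm assuming the input and output arrive as JSON strings (they may be missing, so the code treats them as optional).

```python
import json

# Event pattern for the EventBridge rule on the default bus.
EVENT_PATTERN = {
    "source": ["aws.states"],
    "detail-type": ["Step Functions Execution Status Change"],
    "detail": {"status": ["TIMED_OUT"]},
}


def parse_json_or_none(value):
    """Parse a JSON string, or return None when the field is absent or empty."""
    return json.loads(value) if value else None


def handle_timeout_event(event, context=None):
    """Lambda handler (hypothetical name) for TIMED_OUT execution events."""
    detail = event["detail"]
    summary = {
        "state_machine_arn": detail["stateMachineArn"],
        "execution_arn": detail["executionArn"],
        "execution_name": detail["name"],
        "input": parse_json_or_none(detail.get("input")),
        "output": parse_json_or_none(detail.get("output")),
    }
    # Replace this with the real remediation logic, e.g. alerting or a retry.
    print(json.dumps(summary))
    return summary
```

From here, the execution ARN in the summary is what you would pass to GetExecutionHistory if you need the full audit trail.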

Unfortunately, this approach only works for Standard Workflows. Express Workflows do not emit events to EventBridge.

CloudWatch Logs

Both Standard and Express Workflows can write logs to CloudWatch. When an execution times out, it writes an ExecutionTimedOut log event that includes the execution ARN.

We can use a CloudWatch Logs subscription filter to send these events to a Lambda function to handle the timeout.

However, these log events are not as easy to use as the EventBridge events.

We can extract the state machine name and execution name from the execution ARN, but not the input and output.
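Here is a minimal sketch of what that Lambda function might look like. CloudWatch Logs delivers subscription data as a base64-encoded, gzipped JSON payload under awslogs.data. Beyond that, I'm assuming each log message is a JSON document with type and execution_arn fields, and that the ARN follows the Standard Workflow layout (arn:aws:states:region:account:execution:stateMachineName:executionName). Express execution ARNs carry extra segments that this sketch does not interpret. The function names are hypothetical.

```python
import base64
import gzip
import json


def decode_subscription(event):
    """Decode the base64 + gzip payload that CloudWatch Logs sends to Lambda."""
    payload = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(payload))


def parse_execution_arn(arn):
    """Pull the names out of an execution ARN (assumes the Standard layout)."""
    parts = arn.split(":")
    return {"state_machine_name": parts[6], "execution_name": parts[7]}


def handle_log_subscription(event, context=None):
    """Return the executions that timed out in this batch of log events."""
    timed_out = []
    for log_event in decode_subscription(event)["logEvents"]:
        message = json.loads(log_event["message"])
        # Assumption: the log message carries the history event type.
        if message.get("type") == "ExecutionTimedOut":
            timed_out.append(parse_execution_arn(message["execution_arn"]))
    return timed_out
```

In practice you would also put a filter pattern on the subscription so the function is only invoked for the timeout events, rather than filtering inside the handler.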

For Standard Workflows, we can use the GetExecutionHistory [2] API to fetch the execution history. But this does not support Express Workflows. Instead, we must rely on the audit history logged to CloudWatch.
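For the Standard Workflow case, a sketch along these lines would page through the history and recover the execution's input from the ExecutionStarted event. The sfn_client argument is expected to be a boto3 Step Functions client (boto3.client("stepfunctions")). I pass it in rather than create it so the snippet has no AWS dependency of its own. The function names are made up for illustration.

```python
import json


def fetch_history(sfn_client, execution_arn):
    """Collect every history event for an execution, following nextToken."""
    events, token = [], None
    while True:
        kwargs = {"executionArn": execution_arn, "includeExecutionData": True}
        if token:
            kwargs["nextToken"] = token
        page = sfn_client.get_execution_history(**kwargs)
        events.extend(page["events"])
        token = page.get("nextToken")
        if not token:
            return events


def execution_input(events):
    """Return the parsed input from the ExecutionStarted event, if present."""
    for event in events:
        if event["type"] == "ExecutionStarted":
            return json.loads(event["executionStartedEventDetails"]["input"])
    return None
```

Usage would be something like execution_input(fetch_history(boto3.client("stepfunctions"), execution_arn)).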

These are not always available because we will likely set the log level to ERROR to minimize the cost of CloudWatch Logs.

This approach can work for both Standard and Express Workflows. However, it might not be practical because the log event provides limited information about the execution.

Nested workflows

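We can solve the above-mentioned problems by nesting our state machine inside a parent Standard Workflow. The parent starts our state machine from a Task state, so a timeout becomes a Task-level error that the parent can handle with a Catch clause.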

- It works for both Standard and Express Workflows.

- We have the input and output for the execution.

This is a simple and elegant solution. It’s definitely my favourite approach for handling execution timeouts.
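One way to wire this up is sketched below. It is not necessarily the exact setup I'd use in every project, and it rests on a few assumptions: the parent starts the child through the startExecution.sync:2 service integration, the timeout is enforced by TimeoutSeconds on the parent's Task state, and the state names (RunChild, HandleTimeout) are placeholders. The integration resource is a parameter so you can swap it for whatever fits your child workflow type.

```python
import json


def build_parent_definition(
    child_state_machine_arn: str,
    timeout_seconds: int,
    resource: str = "arn:aws:states:::states:startExecution.sync:2",
) -> dict:
    """Build a parent Standard Workflow that wraps a child state machine."""
    return {
        "StartAt": "RunChild",
        "States": {
            "RunChild": {
                "Type": "Task",
                "Resource": resource,
                "Parameters": {
                    "StateMachineArn": child_state_machine_arn,
                    # Pass the parent's input straight through to the child.
                    "Input.$": "$",
                },
                # The child's timeout is now an ordinary Task timeout.
                "TimeoutSeconds": timeout_seconds,
                "Catch": [
                    {
                        "ErrorEquals": ["States.Timeout"],
                        # Keep the original input alongside the error details.
                        "ResultPath": "$.error",
                        "Next": "HandleTimeout",
                    }
                ],
                "End": True,
            },
            # Placeholder for the real timeout handling logic.
            "HandleTimeout": {"Type": "Pass", "End": True},
        },
    }


# Hypothetical child ARN and timeout, for illustration only.
parent = build_parent_definition(
    "arn:aws:states:us-east-1:123456789012:stateMachine:my-child", 300
)
print(json.dumps(parent, indent=2))
```

Because the Catch uses ResultPath, the HandleTimeout state receives the original input together with the error, which is what makes this approach so convenient.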

Honourable mentions

There are other variants besides the approaches we discussed here. You can even turn this problem into an ad-hoc scheduling problem.

For example, you can send a message to SQS with a delivery delay matching the state machine timeout. Or create a schedule in EventBridge Scheduler to be executed when the state machine would have timed out.
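For the SQS variant, a rough sketch might look like this. Keep in mind that SQS caps the delivery delay at 900 seconds (15 minutes), so this only works for short timeouts. The sqs_client argument is expected to be a boto3 SQS client, and the function name and message shape are hypothetical.

```python
import json

# SQS does not allow a delivery delay longer than 15 minutes.
MAX_SQS_DELAY_SECONDS = 900


def schedule_timeout_check(sqs_client, queue_url, execution_arn, timeout_seconds):
    """Queue a delayed message that fires when the execution should have ended."""
    if timeout_seconds > MAX_SQS_DELAY_SECONDS:
        raise ValueError(
            f"SQS supports delays up to {MAX_SQS_DELAY_SECONDS}s, got {timeout_seconds}s"
        )
    return sqs_client.send_message(
        QueueUrl=queue_url,
        # The consumer uses this ARN to check how the execution ended.
        MessageBody=json.dumps({"executionArn": execution_arn}),
        DelaySeconds=timeout_seconds,
    )
```

The consumer of that queue still has to work out whether the execution actually timed out, which is where the next limitation comes in.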

In both cases, you run into the limitation that Step Functions’ DescribeExecution and ListExecutions APIs don’t support Express Workflows.

This makes it difficult to find out if an execution timed out in the end. It’s only possible to do this by querying CloudWatch Logs. I don’t think the extra complexity and cost are worth it. So, I’d recommend using one of the three proposed solutions here instead.

Links

[1] Use timeouts to avoid stuck executions

[2] Step Functions GetExecutionHistory API
