Yan Cui

I help clients go faster for less using serverless technologies.

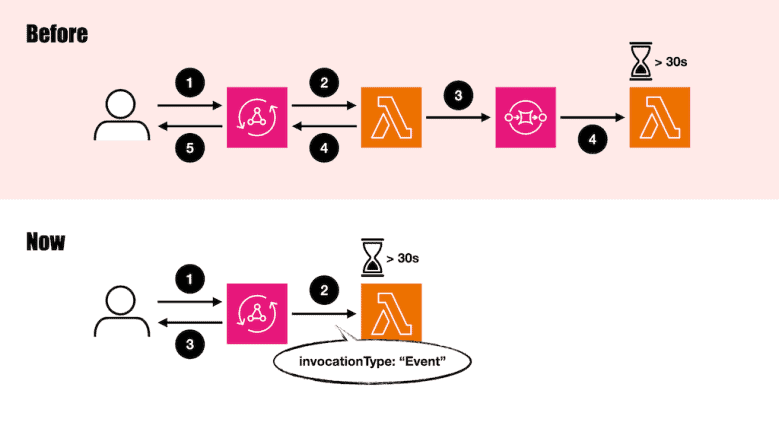

A common challenge in building GenAI applications today is that most LLMs are slow to respond (GPT-4o and models served on Groq are notable exceptions). To minimize delays and improve the user experience, streaming the LLM response is a must. As a result, a common pattern has emerged in AppSync:

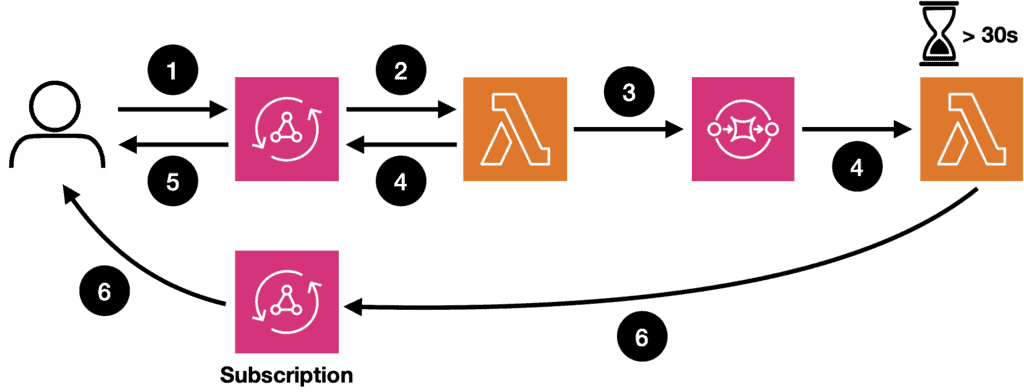

- The caller makes a GraphQL request.

- AppSync invokes a Lambda resolver.

- The Lambda function queues up a task in SQS.

- The Lambda resolver returns immediately so that AppSync can respond to the caller. Meanwhile, a background function triggered by SQS picks up the task and calls the LLM.

- The caller receives an acknowledgement from the initial request.

- The background function receives the LLM response as a stream and forwards it in chunks (as they are received) to the caller via a subscription endpoint.
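To make the hand-off concrete, here is a minimal TypeScript sketch of the first (synchronous) Lambda resolver in this pattern. It assumes the SQS call is injected as an `enqueue` function; in a real function that would wrap `SQSClient.send(new SendMessageCommand({ QueueUrl, MessageBody }))` from `@aws-sdk/client-sqs`. `ChatTask`, `Ack`, `startChat` and the `requestId` correlation field are hypothetical names for illustration.

```typescript
// Hypothetical shapes for illustration; the post does not define them.
export interface ChatTask {
  requestId: string;
  prompt: string;
}

export interface Ack {
  requestId: string;
  status: "QUEUED";
}

// Stand-in for an SQS SendMessage call (assumption: injected for testability).
export type EnqueueFn = (task: ChatTask) => Promise<void>;

// The synchronous Lambda resolver: queue the work, then return right away
// so AppSync can acknowledge the request without waiting for the LLM.
export async function startChat(
  args: { prompt: string },
  enqueue: EnqueueFn,
  newId: () => string,
): Promise<Ack> {
  const requestId = newId();
  await enqueue({ requestId, prompt: args.prompt });
  // Assumption: the caller uses requestId to match the chunks it later
  // receives on the subscription.
  return { requestId, status: "QUEUED" };
}
```

The background SQS function then does the slow part, which is exactly the extra moving piece the rest of this post gets rid of.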

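This workaround was necessary because AppSync could only invoke Lambda functions synchronously, so it had to wait for the function to return (within its 30-second limit) before responding to the caller. To support response streaming, the first function had to hand off the LLM call to something else.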

AppSync now supports async Lambda invocations.

On May 30th, AppSync announced [1] support for invoking Lambda resolvers asynchronously.

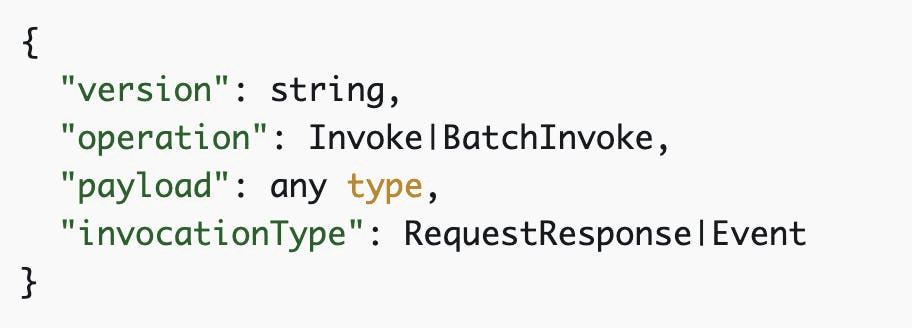

This works for both VTL and JavaScript resolvers. Setting the new `invocationType` attribute to `Event` will invoke the Lambda resolver asynchronously.

Here’s how the VTL mapping template would look:

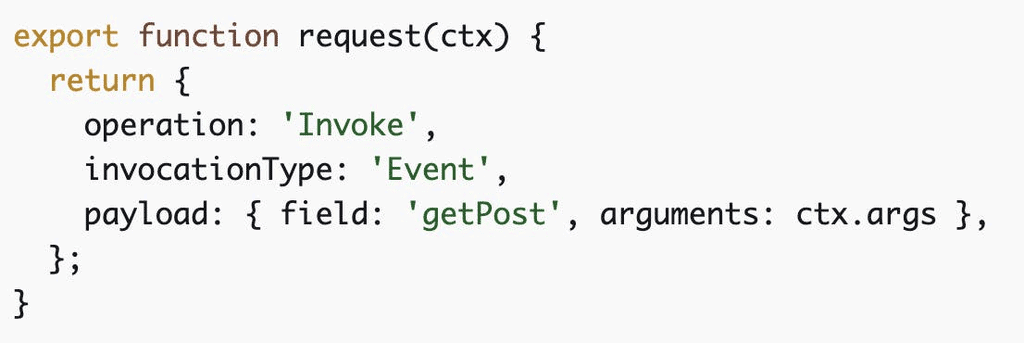

And here’s the JavaScript resolver:
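A minimal sketch of such a resolver, written in TypeScript (which must be compiled to JavaScript before AppSync can run it). The request object uses `operation: "Invoke"`, a `payload`, and `invocationType: "Event"`. The `Context` and `LambdaInvokeRequest` types are declared locally to keep the sketch self-contained; a real resolver would import `Context` from `@aws-appsync/utils` instead. The `prompt` argument is a hypothetical field.

```typescript
// Minimal local stand-in for the Context type from @aws-appsync/utils.
interface Context<TArgs> {
  arguments: TArgs;
  result: unknown;
}

// Shape of the request sent to a Lambda data source.
interface LambdaInvokeRequest {
  operation: "Invoke";
  invocationType: "Event" | "RequestResponse";
  payload: unknown;
}

type ChatArgs = { prompt: string }; // hypothetical GraphQL arguments

export function request(ctx: Context<ChatArgs>): LambdaInvokeRequest {
  return {
    operation: "Invoke",
    // "Event" asks AppSync to invoke the Lambda function asynchronously.
    invocationType: "Event",
    payload: ctx.arguments,
  };
}

export function response(ctx: Context<ChatArgs>): unknown {
  // With an async invocation there is no function result, so this is null.
  return ctx.result;
}
```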

The response from an async invocation will always be null.

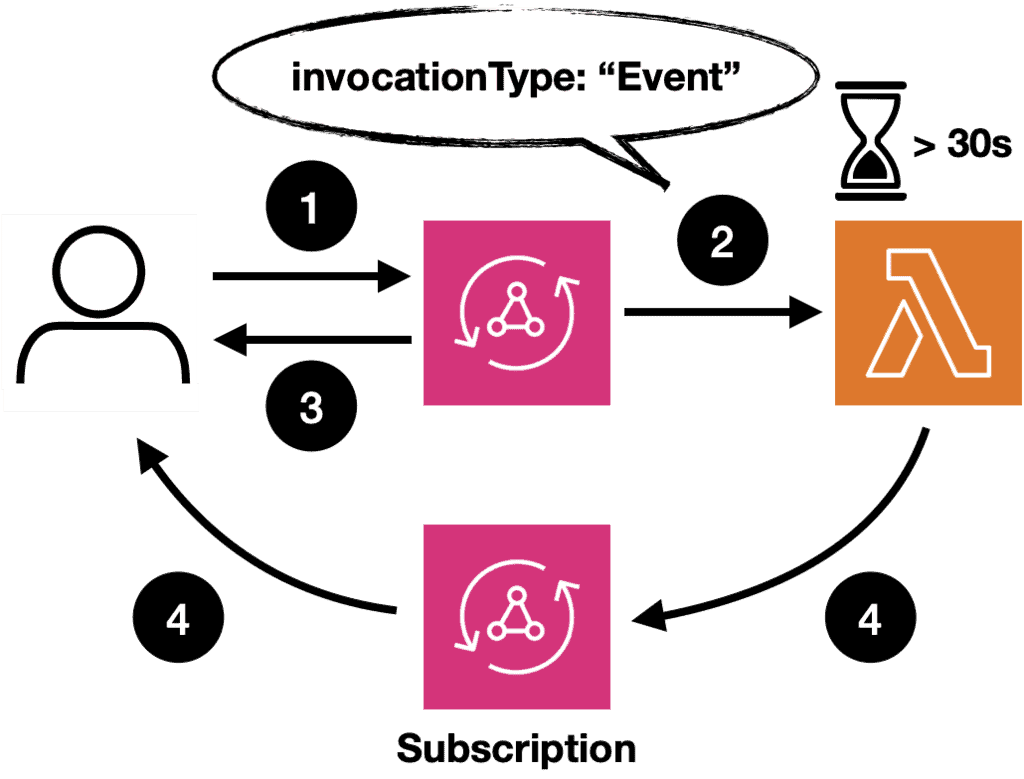

The new architecture

With this change, we no longer need the background function.

- The caller makes a GraphQL request.

- AppSync invokes a Lambda resolver asynchronously.

- AppSync immediately receives a null response and can respond to the original request.

- The Lambda function calls the LLM, receives the response as a stream and forwards it in chunks (as they are received) to the caller via a subscription endpoint.
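The core of the async Lambda function can be sketched as below, assuming the LLM client exposes its streamed response as an `AsyncIterable<string>` and that `publish` wraps whatever mutation your subscription listens to. `ChunkMessage`, `forwardStream` and the `seq`/`done` fields are hypothetical names for illustration, not part of AppSync's API.

```typescript
// Hypothetical message published to the subscription for each chunk.
export interface ChunkMessage {
  requestId: string;
  seq: number;   // position of this message, so the client can reassemble
  chunk: string;
  done: boolean; // true only on the final, empty message
}

// Assumption: wraps the mutation that triggers the subscription.
export type PublishFn = (msg: ChunkMessage) => Promise<void>;

// Forward each chunk of the LLM stream as soon as it arrives,
// then send a final "done" message. Returns the number of messages sent.
export async function forwardStream(
  requestId: string,
  llmStream: AsyncIterable<string>,
  publish: PublishFn,
): Promise<number> {
  let seq = 0;
  for await (const chunk of llmStream) {
    if (chunk.length === 0) continue; // skip empty deltas
    await publish({ requestId, seq: seq++, chunk, done: false });
  }
  // Signal the end of the response so the client can stop listening.
  await publish({ requestId, seq: seq++, chunk: "", done: true });
  return seq;
}
```

Awaiting each `publish` before reading the next chunk keeps the sender's messages in order at the cost of some throughput; the `seq` field is there in case the client still needs to reorder them.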

This is a simple yet significant quality-of-life improvement from the AppSync team.

It’s not just for GenAI applications.

The same pattern can be applied to any long-running task that takes longer than AppSync’s 30-second limit.

Links

[1] AWS AppSync now supports long running events with asynchronous Lambda function invocations
