Yan Cui

Having run into a deadlocking issue while working on Bingo.Net, I spent some time reading up on the Producer-Consumer pattern. Here’s what I learnt, which I hope you’ll find useful too.

AutoResetEvent and ManualResetEvent

To start off, MSDN has an introductory article on how to synchronize a producer and a consumer thread. It’s a decent starting point. However, as some of the comments pointed out, the sample code is buggy: it allows a race condition when the AutoResetEvent is set several times in quick succession whilst the consumer thread is still processing the previous signal.

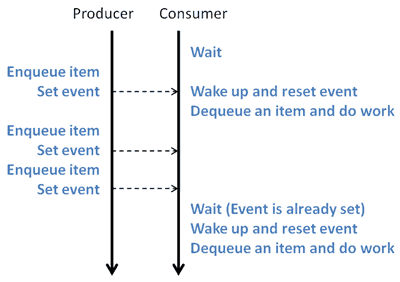

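The problem with the AutoResetEvent is that setting an event that is already set has no effect. If the producer enqueues two items and calls Set twice while the consumer is still busy, the second Set is lost and the consumer only wakes up once.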

And just like that, the Consumer has missed one of the items! However, you can work around this easily enough: when the consumer wakes up, have it lock on the queue’s sync root object and dequeue every item currently on the queue. This might not always be applicable to your requirement though.
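To make the lost signal concrete, here is a minimal sketch. The class and method names (LostSignalDemo, CountWakeUps, DrainAll) are hypothetical and are not taken from the MSDN sample. It sets an AutoResetEvent twice before anything waits on it, then counts how many times a waiter would be let through. It also shows the drain-everything workaround described above.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical demo class, for illustration only.
public static class LostSignalDemo
{
    // Sets the event twice, then counts how many waits succeed.
    public static int CountWakeUps()
    {
        using (var signal = new AutoResetEvent(false))
        {
            signal.Set(); // first item produced
            signal.Set(); // second item produced: the event is already set, so this is a no-op

            int wakeUps = 0;
            // WaitOne(0) tests the event without blocking; a successful wait resets it.
            while (signal.WaitOne(0))
            {
                wakeUps++;
            }
            return wakeUps; // only one wake-up for two Set calls
        }
    }

    // Workaround: on each wake-up, take everything that is on the queue.
    public static List<T> DrainAll<T>(Queue<T> queue, object syncRoot)
    {
        var drained = new List<T>();
        lock (syncRoot)
        {
            while (queue.Count > 0)
            {
                drained.Add(queue.Dequeue());
            }
        }
        return drained;
    }
}
```

The producer would need to lock on the same syncRoot when it enqueues, otherwise the drain loop is no safer than the original code.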

A much better choice would be to use the Monitor class instead.

Using Monitor.Pulse and Monitor.PulseAll

As Jon Skeet pointed out in his Threading article (see references), the Auto/ManualResetEvent and Mutex classes are significantly slower than Monitor.

Using the Monitor.Wait, Monitor.Pulse and Monitor.PulseAll methods, multiple threads can communicate with each other much like they do with the reset events, only more safely. I have found quite a number of different implementations of the Producer-Consumer pattern using these methods; see the references section for details.

The implementations differ, and depending on the situation you might want multi-producer/consumer support, or you might want to ensure there can only be one producer or consumer. Whatever your requirement, the general idea is that both producer(s) and consumer(s) have to acquire a lock on the same sync object before they can add or remove items from the queue. What happens next depends on who’s holding the lock:

- producer(s) acquire the lock, add an item to the queue, and then pulse, which wakes up a waiting thread (a consumer). The pulse itself does not give up the lock; the woken thread can only carry on once the producer has left the locked block.

- consumer(s) acquire the lock and check the queue in a continuous loop. When the queue is empty they call Monitor.Wait, which gives up the lock (allowing other producers/consumers to get in and acquire it) and blocks until a pulse arrives. When the consumer is woken up it reacquires the lock and can then safely process new items from the queue.

There are a number of other considerations you should take into account, such as the exit conditions of the consumer’s continuous loop. Have a look at the different implementations I have included in the references section to get a feel for what you need to do to implement the Producer-Consumer pattern to suit your needs.
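As a rough illustration of the idea, here is a minimal sketch of a queue built on Monitor.Wait, Monitor.Pulse and Monitor.PulseAll. The names PulseQueue, TryDequeue, Close and PulseQueueDemo are my own and are not taken from any of the referenced implementations. The exit condition is an assumption too: the consumer’s loop ends once the queue has been closed and drained.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical class, for illustration only.
public sealed class PulseQueue<T>
{
    private readonly object _sync = new object();
    private readonly Queue<T> _items = new Queue<T>();
    private bool _closed;

    public void Enqueue(T item)
    {
        lock (_sync)
        {
            if (_closed) throw new InvalidOperationException("Queue is closed.");
            _items.Enqueue(item);
            // Wakes one waiting consumer; the lock is only released when this block ends.
            Monitor.Pulse(_sync);
        }
    }

    // Signals that no more items will arrive, waking every waiting consumer.
    public void Close()
    {
        lock (_sync)
        {
            _closed = true;
            Monitor.PulseAll(_sync);
        }
    }

    // Blocks until an item is available; returns false once closed and empty.
    public bool TryDequeue(out T item)
    {
        lock (_sync)
        {
            // Re-check the condition in a loop after every wake-up.
            while (_items.Count == 0 && !_closed)
            {
                Monitor.Wait(_sync); // releases the lock while waiting, reacquires before returning
            }
            if (_items.Count == 0)
            {
                item = default(T);
                return false;
            }
            item = _items.Dequeue();
            return true;
        }
    }
}

public static class PulseQueueDemo
{
    // One producer (the calling thread) and one consumer thread.
    public static int Run()
    {
        var queue = new PulseQueue<int>();
        int sum = 0;
        var consumer = new Thread(() =>
        {
            int item;
            while (queue.TryDequeue(out item)) sum += item;
        });
        consumer.Start();
        for (int i = 1; i <= 100; i++) queue.Enqueue(i);
        queue.Close();
        consumer.Join();
        return sum; // 1 + 2 + ... + 100 = 5050
    }
}
```

Because the consumer checks the queue before it waits, an item enqueued while it was busy is never missed, which is the failure mode of the AutoResetEvent version.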

Parting thoughts

Another thing you should be aware of when implementing any sort of Producer-Consumer model is the need to make the queue thread-safe, seeing as multiple threads can be reading from and writing to it concurrently. As you might know already, there is a Queue.Synchronized method which you can use to get a synchronized (in other words, thread-safe) version of the queue object.

But as discussed on the BCL Team Blog (see reference), the synchronized wrapper pattern gave developers a false sense of security and led some Microsoft developers to write unsafe code like this:

```csharp
if (syncQueue.Count > 0) {
    Object obj = null;
    try {
        // count could be changed by this point, hence invalidating the if check
        obj = syncQueue.Dequeue();
    }
    catch (Exception) { } // this swallows any indication we have a race condition
    // use obj here, dealing with null.
}
```

This is why they decided it’s better to force developers to use an explicit lock statement around the whole operation.
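For comparison, here is a minimal sketch of the same check-then-dequeue done under one explicit lock. The helper name SafeDequeue.TryDequeue is hypothetical, and I’m assuming every other thread that touches the queue locks on the same SyncRoot.

```csharp
using System;
using System.Collections;

// Hypothetical helper, for illustration only.
public static class SafeDequeue
{
    // The Count check and the Dequeue happen under the same lock,
    // so no other thread can empty the queue in between.
    public static bool TryDequeue(Queue queue, out object item)
    {
        lock (queue.SyncRoot)
        {
            if (queue.Count > 0)
            {
                item = queue.Dequeue();
                return true;
            }
            item = null;
            return false;
        }
    }
}
```

With the lock around the whole operation there is no exception to swallow, and the caller gets an explicit true or false instead of a null to interpret.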

Another way to think about the problem is to picture the Queue.Synchronized method as the equivalent of a volatile modifier for a reference type, one which guarantees the latest value of the instance (as opposed to the reference pointer only). This means it’s safe to use for a single atomic operation, but it does not stop instructions from multiple threads interleaving between operations.

References:

MSDN article on how to synchronize a producer and a consumer thread

Jon Skeet’s article on WaitHandles

Mike Kempf’s implementation of the Producer-Consumer pattern using Monitor

BCL Team Blog post on why there is no Queue<T>.Synchronized

StackOverflow question on why there’s no Queue<T>.Synchronized
