Yan Cui

I help clients go faster for less using serverless technologies.

I stumbled upon an interesting question on StackOverflow today. In his answer, Jon Harrop mentions a significant overhead in adding to and iterating over a SortedDictionary and a Map compared to using simple arrays.

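Thinking about it, this makes sense: the SortedDictionary class keeps its key-value pairs sorted by key (it is a binary search tree, so each insertion costs O(log n) rather than the amortised O(1) of a hash table), which naturally incurs some performance overhead.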
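A small illustration using only the standard System.Collections.Generic types: SortedDictionary enumerates its pairs in ascending key order no matter what order they were inserted in, which is the guarantee it pays for on every insert. Dictionary makes no ordering promise. The keys are arbitrary.

```fsharp
open System.Collections.Generic

// Insert the same keys, deliberately out of order, into both collections.
let keys = [ 42; 7; 19; 3; 88 ]

let sorted = SortedDictionary<int, string>()
let hashed = Dictionary<int, string>()

for k in keys do
    sorted.Add(k, string k)
    hashed.Add(k, string k)

// SortedDictionary always enumerates in ascending key order...
let sortedKeys = [ for KeyValue(k, _) in sorted -> k ]

// ...whereas Dictionary's enumeration order is an implementation detail.
let hashedKeys = [ for KeyValue(k, _) in hashed -> k ]

printfn "SortedDictionary: %A" sortedKeys // [3; 7; 19; 42; 88]
printfn "Dictionary:       %A" hashedKeys
```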

F#’s Map, on the other hand, is immutable: adding an item to a Map returns a new Map instance that includes all the items from the original map plus the newly added item. Map is implemented as a balanced binary tree, so the new map shares most of its nodes with the original and only the nodes along the path to the new key are copied, but every add still allocates several new nodes and may rebalance the tree. Over a large number of additions that adds up to an obvious performance hit.
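A quick F# check of that behaviour, with arbitrary keys and values: `Map.add` hands back a new map and leaves the original exactly as it was.

```fsharp
// Map.add never mutates: it returns a new map and leaves the original untouched.
let original = Map.ofList [ (1, "one"); (2, "two") ]
let extended = original |> Map.add 3 "three"

printfn "original has %d items" original.Count // original has 2 items
printfn "extended has %d items" extended.Count // extended has 3 items
printfn "%b" (original.ContainsKey 3)           // false
```

Because the two maps share most of their internal nodes, `extended` does not hold a full second copy of `original`; the extra allocation is the new nodes on the path to key 3.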

This is a similar problem to using List.append (or, equivalently, the @ operator) on lists, as it also involves copying the data in the first list; more on that in another post.
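A sketch of what that copying looks like. In current FSharp.Core, `@` rebuilds every cell of the first list and points the last rebuilt cell at the second list, so the second list is shared rather than copied; the reference checks below rely on that implementation detail, and the lists themselves are arbitrary.

```fsharp
let xs = [ 1; 2; 3 ]
let ys = [ 4; 5 ]
let zs = xs @ ys // same as List.append xs ys

// The three cells of xs are copied, so zs does not start with xs's first cell...
let firstCellShared = obj.ReferenceEquals(zs, xs)

// ...but after skipping those three copied cells, zs continues with ys itself.
let tailShared =
    match zs with
    | _ :: _ :: _ :: rest -> obj.ReferenceEquals(rest, ys)
    | _ -> false

printfn "%A shared head: %b, shared tail: %b" zs firstCellShared tailShared
```

So the cost of an append grows with the length of the first list, regardless of how long the second one is.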

Anyhow, the question piqued my interest and I had to test it out and get some quantitative numbers for myself, and I was also interested in seeing how the standard Dictionary class does compared to the rest. :-)

The test code is very simple; feel free to take a look here and let me know if it’s unfair in any way. In short, the test adds 1,000,000 items to each type of construct, then iterates over them, recording the time each step takes.
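The original test code isn’t reproduced here, so the following is a hypothetical F# sketch of the same idea rather than the code behind the results: fill each structure with n sequential int keys, then iterate over it, timing both steps with `System.Diagnostics.Stopwatch`. The helper names (`time`, `sumValues`, `benchmark`) are made up for illustration, and it times a single run rather than averaging several.

```fsharp
open System.Collections.Generic
open System.Diagnostics

/// Runs f once and returns its result together with the elapsed time in seconds.
let time (f: unit -> 'T) =
    let sw = Stopwatch.StartNew()
    let result = f ()
    result, sw.Elapsed.TotalSeconds

/// Iterates over every key/value pair, summing the values so the loop does real work.
let sumValues (pairs: seq<KeyValuePair<int, int>>) =
    let mutable total = 0L
    for KeyValue(_, v) in pairs do
        total <- total + int64 v
    total

/// Adds n items to each structure, then iterates over it.
/// Returns (name, add seconds, iterate seconds, checksum) for each structure.
let benchmark (n: int) =
    let fill (d: #IDictionary<int, int>) =
        for i in 0 .. n - 1 do
            d.Add(i, i)
        d

    let arr, addArr =
        time (fun () ->
            let a = Array.zeroCreate<int> n
            for i in 0 .. n - 1 do
                a.[i] <- i
            a)
    let dict, addDict = time (fun () -> fill (Dictionary<int, int>()))
    let presized, addPresized = time (fun () -> fill (Dictionary<int, int>(n)))
    let sorted, addSorted = time (fun () -> fill (SortedDictionary<int, int>()))
    let map, addMap =
        time (fun () ->
            let mutable m = Map.empty
            for i in 0 .. n - 1 do
                m <- Map.add i i m
            m)

    let row name addSecs (checksum, iterSecs) = (name, addSecs, iterSecs, checksum)
    [ row "array" addArr (time (fun () -> Array.fold (fun acc x -> acc + int64 x) 0L arr))
      row "Dictionary" addDict (time (fun () -> sumValues dict))
      row "Dictionary (presized)" addPresized (time (fun () -> sumValues presized))
      row "SortedDictionary" addSorted (time (fun () -> sumValues sorted))
      row "Map" addMap (time (fun () -> sumValues map)) ]

benchmark 1000000
|> List.iter (fun (name, addSecs, iterSecs, _) ->
    printfn "%-22s add: %.3fs  iterate: %.3fs" name addSecs iterSecs)
```

Single Stopwatch runs are noisy (JIT warm-up, GC pauses), so you’d want to repeat each measurement and average, or hand the job to a dedicated benchmarking library.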

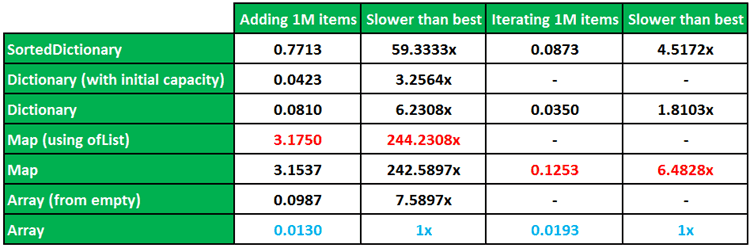

The results are below; the times are in seconds, averaged over 5 runs.

Aside from the fact that the Map construct did particularly poorly in these tests, it was interesting to see that initializing a Dictionary instance with sufficient capacity to begin with allowed it to perform twice as fast!

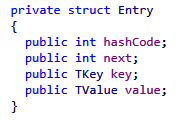

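To understand where that performance boost came from, you need to understand that a Dictionary uses an internal array of entries to keep track of what’s in the dictionary. In the .NET Framework implementation each entry is a struct holding the key’s hash code, the index of the next entry in the same bucket, the key and the value.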
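To make that layout concrete, here is a hypothetical, heavily simplified F# sketch of chained hashing over a `buckets` array and an `entries` array, loosely modelled on the .NET Framework design: each entry stores a hash code, the index of the next entry in its bucket, the key and the value. `TinyDictionary` is an illustrative name, not a real type, and it has a fixed capacity with no resizing, removal or duplicate-key check.

```fsharp
/// One slot of the entries array: hash code, next index, key, value.
[<Struct>]
type Entry<'K, 'V> =
    { HashCode: int // non-negative hash of the key
      Next: int     // index of the next entry in the same bucket, or -1 for end of chain
      Key: 'K
      Value: 'V }

/// Hypothetical fixed-capacity, insert-only dictionary (no resizing, removal or duplicate check).
type TinyDictionary<'K, 'V when 'K : equality>(capacity: int) =
    let buckets = Array.create capacity (-1) // bucket index -> first entry index, -1 = empty
    let entries: Entry<'K, 'V>[] = Array.zeroCreate capacity
    let mutable count = 0

    member this.Count = count

    member this.Add(key: 'K, value: 'V) =
        if count = entries.Length then
            invalidOp "entries array is full (a real Dictionary would resize here)"
        let h = hash key &&& 0x7FFFFFFF
        let bucket = h % buckets.Length
        // The new entry points at the bucket's previous head, then becomes the new head.
        entries.[count] <- { HashCode = h; Next = buckets.[bucket]; Key = key; Value = value }
        buckets.[bucket] <- count
        count <- count + 1

    member this.TryFind(key: 'K) =
        let h = hash key &&& 0x7FFFFFFF
        // Follow the chain of entry indices for this bucket until the key is found.
        let rec walk i =
            if i < 0 then None
            elif entries.[i].HashCode = h && entries.[i].Key = key then Some entries.[i].Value
            else walk entries.[i].Next
        walk buckets.[h % buckets.Length]
```

When the entries array is full, a real Dictionary has to allocate bigger arrays and re-insert everything, which is exactly the cost a pre-sized dictionary avoids.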

When that internal array fills up, it is replaced with a bigger array whose size is, roughly speaking, the smallest prime number that’s >= twice the current capacity. To find that prime, the implementation uses a cached array of 72 prime numbers (3, 7, 11, 17, 23, 29, 37, 47, … 7199369) and only computes a new prime when the required size goes beyond that table.

So when I initialized a Dictionary without specifying its capacity (hence capacity = 0) and then added 1 million items, its internal array had to be allocated 18 times (an initial 3-slot array followed by 17 resizes), and each resize copies every existing entry into the new array, adding more overhead.
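That arithmetic can be checked with a small hypothetical model of the growth policy (not the real BCL code). `getPrime` returns the first cached prime >= the requested size, and a resize asks for at least twice the current count. The prime table is assumed to match `HashHelpers.primes` in the .NET Framework reference source, and the fallback past the table is a simplified plain prime search.

```fsharp
/// Cached primes, assumed to match HashHelpers.primes in the .NET Framework reference source.
let cachedPrimes =
    [|
        3; 7; 11; 17; 23; 29; 37; 47; 59; 71; 89; 107; 131; 163; 197; 239; 293; 353
        431; 521; 631; 761; 919; 1103; 1327; 1597; 1931; 2333; 2801; 3371; 4049; 4861
        5839; 7013; 8419; 10103; 12143; 14591; 17519; 21023; 25229; 30293; 36353
        43627; 52361; 62851; 75431; 90523; 108631; 130363; 156437; 187751; 225307
        270371; 324449; 389357; 467237; 560689; 672827; 807403; 968897; 1162687
        1395263; 1674319; 2009191; 2411033; 2893249; 3471899; 4166287; 4999559; 5999471; 7199369
    |]

/// Trial-division primality test, only needed beyond the cached table.
let isPrime n =
    if n < 2 then false
    elif n < 4 then true
    elif n % 2 = 0 then false
    else Seq.forall (fun d -> n % d <> 0) { 3 .. 2 .. int (sqrt (float n)) }

/// Smallest cached prime >= minSize; past the table, a plain prime search
/// (a simplification that may differ from the real runtime).
let getPrime (minSize: int) =
    match Array.tryFind (fun p -> p >= minSize) cachedPrimes with
    | Some p -> p
    | None -> Seq.initInfinite (fun i -> minSize + i) |> Seq.find isPrime

/// Sizes of the internal array allocated while adding itemCount items to a
/// Dictionary created with the given initial capacity, under this model.
let allocationSizes (initialCapacity: int) (itemCount: int) =
    let sizes = ResizeArray<int>()
    let mutable size = getPrime initialCapacity
    sizes.Add size
    for count in 0 .. itemCount - 1 do
        // count = items already stored; the array is full when count reaches its size
        if count = size then
            size <- getPrime (2 * count)
            sizes.Add size
    List.ofSeq sizes

printfn "%A" (allocationSizes 0 1000000)
```

Under this model, the default-constructed dictionary goes through 18 array sizes, from 3 up to 1395263, while one created with a capacity of 1,000,000 allocates a single 1162687-slot array up front.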

Closing thoughts…

Again, these results should be taken at face value only. They don’t mean that you should never use Map because it’s slower than the other structures for additions and iterations, or that you should start replacing your dictionaries with arrays…

Instead, use the right tool for the right job.

If you’ve got a set of static data (such as configuration data that’s loaded when your application starts up) that you need to look up by key frequently, a Map is as good a choice as any: its immutability ensures that the static data cannot be modified by mistake, and it has little impact on performance because you never need to mutate the map once it’s initialized.
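A minimal sketch of that use case, assuming some hypothetical settings loaded once at start-up: lookups go through `Map.tryFind`, and any attempt to "change" a setting produces a new map while the original stays as loaded.

```fsharp
// Hypothetical configuration entries, loaded once at start-up.
let config =
    Map.ofList
        [ ("timeoutSeconds", "30")
          ("region", "eu-west-1")
          ("retries", "3") ]

// Lookups never mutate the map, so the data stays exactly as it was loaded.
let timeout =
    match Map.tryFind "timeoutSeconds" config with
    | Some value -> int value
    | None -> 10 // hypothetical default

// "Changing" a setting yields a new map; config itself is unaffected.
let tweaked = config |> Map.add "retries" "5"

printfn "timeout=%d, retries=%s, tweaked retries=%s" timeout config.["retries"] tweaked.["retries"]
// timeout=30, retries=3, tweaked retries=5
```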

It would be nice to show numbers for smaller cases as well, say for 10, 100, 1,000, 10,000 and 100,000 elements.

I just ran a similar test myself after running into poor performance with maps. I was shocked to see how much worse the map was than virtually any other collection, and I started wondering what niche it fills that dictionary doesn’t. Otherwise, why wouldn’t the F# team just throw dictionary code in an immutable wrapper and call it a day?

The more I thought about it, the more I began to realize exactly how different map and dictionary are. Nothing can change a map, so any time it’s used, you have a 100% guarantee that it’s exactly as it was when it was created. That holds true for each iteration of modifications (add, remove, etc.), so in theory one could create an absurdly ridiculous function that keeps a copy of each step of construction.

With a dictionary, you would have to copy the entire object with every modification in order to achieve the same results, and I have a feeling that copying a dictionary 1,000,000 times during an iterative build wouldn’t be very pretty–for you, your memory, or your CPU. Something tells me that map’s tree structure (FSharp.Core implements Map as a height-balanced binary tree rather than a red-black tree) plays a big role in this, but I’ll leave that for others to test out.

@Daniel – the problem with Map and other similar immutable collections is that when you modify one, the existing tree gets copied in its entirety, which is what makes it so bad performance-wise.

Clojure solves this problem in its persistent data structures with a technique called path copying, where a modification only copies the subset of nodes along the path to the change. You should watch this video to find out more about it: http://www.infoq.com/presentations/Value-Identity-State-Rich-Hickey

Steffen Forkmann is in the process of porting them over to F#: http://www.navision-blog.de/2012/05/29/porting-clojures-persistent-data-structures-to-net-part-1-of-n-persistentvector/

It’s also worth noting that they have already been implemented in the CLR version of Clojure:

https://github.com/clojure/clojure-clr/blob/master/Clojure/Clojure/Lib/PersistentTreeMap.cs

Also, I haven’t tested them to see how much better they are.
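A minimal sketch of path copying, using a hypothetical unbalanced persistent binary search tree rather than F#’s actual Map implementation (which is balanced) or Clojure’s data structures: inserting a key rebuilds only the nodes on the path from the root to the insertion point, and every subtree off that path is shared with the previous version.

```fsharp
/// A hypothetical immutable binary search tree (no balancing, for brevity).
type Tree =
    | Leaf
    | Node of left: Tree * key: int * right: Tree

/// Returns a new tree containing key; only nodes on the search path are rebuilt.
let rec insert key tree =
    match tree with
    | Leaf -> Node(Leaf, key, Leaf)
    | Node(left, k, right) when key < k -> Node(insert key left, k, right) // right subtree reused
    | Node(left, k, right) when key > k -> Node(left, k, insert key right) // left subtree reused
    | Node _ -> tree // key already present: reuse the whole tree

/// In-order traversal, used to check the contents of each version.
let rec toList tree =
    match tree with
    | Leaf -> []
    | Node(l, k, r) -> toList l @ [ k ] @ toList r

let leftOf tree = match tree with Node(l, _, _) -> l | Leaf -> Leaf

let before = [ 50; 30; 70; 20; 40; 60; 80 ] |> List.fold (fun t k -> insert k t) Leaf
let after = insert 65 before // rebuilds the 50 -> 70 -> 60 path and adds one new node

// The left subtree rooted at 30 is the very same object in both versions.
let sharedLeft = obj.ReferenceEquals(leftOf before, leftOf after)

printfn "%A -> %A, left subtree shared: %b" (toList before) (toList after) sharedLeft
```

Both versions stay fully usable, but the second one costs only the nodes on one root-to-leaf path rather than a copy of the whole tree.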

Well, I ran the test I mentioned last night. Over 100,000 adds, storing an immutable copy of the map at each iteration, the process completed in around 500 ms on my machine. Total memory usage for the executable was about 60 MB.

I did the same thing with a Dictionary, and the process took longer and threw an OutOfMemoryException at around 1.4 GB of memory usage. The exact same data was used for each process, and I tried several different ways of copying the dictionary prior to each add.

60 MB vs 1.4 GB (and counting) is fairly significant. Are you sure that map copies the entire tree, or is dictionary just that inefficient at resource utilization? Is there some other mechanic at work here I’m missing?

@Daniel – can you put your test code somewhere so I can have a look?

I’ll post it somewhere tonight so you can have a look, but the code is pretty easy to duplicate.

    open System.Collections.Generic

    let values = [ 0 .. 100000 ] |> List.map (fun n -> n, n)

    let map = values |> List.scan (fun map (key, value) -> map |> Map.add key value) Map.empty

    let dic =
        values
        |> List.scan
            (fun (last: Dictionary<int, int>) (key, value) ->
                let next = Dictionary<int, int>(last) // copy the previous dictionary...
                next.Add(key, value)                  // ...then add the new pair
                next)
            (Dictionary<int, int>())

Something along those lines to generate the structures, with other functions to iterate over them. I had stopwatches running, and added breakpoints between the generation of each construct to check memory usage.

I do remember reading an article on F# that highlighted the key differences between it and other .NET languages. It’s been a couple of months, so my memory is a bit foggy, but IIRC they justified the constant churning of memory with F#’s tendency to reuse structure. This would seem to jibe with what I saw in the test.

(As in the sample above, the test added the new key/value pair to each copied dictionary.)

Is there an equivalent set of tests for C#?

Hi Mark, I suggest running your own benchmarks; the BenchmarkDotNet library (created by Andrey Akinshin, with Matt Warren among its maintainers) is great at orchestrating them and gives you far more info than the tool I built.