Yan Cui

I help clients go faster for less using serverless technologies.

You may not realise that you can write AWS Lambda functions recursively to perform long-running tasks.

When this post was written, AWS Lambda limited the maximum execution time of a single invocation to 5 minutes (the limit has since been raised to 15 minutes). Even with a higher limit, you'll still have to consider timeouts for any long-running tasks. For this reason, I personally think it's a good thing that the limit is too low for many long-running tasks: it forces you to consider edge cases early and avoid the trap of thinking "it should be long enough to do X" without considering possible failure modes.

Instead, you should write Lambda functions that perform long-running tasks (e.g. processing a large S3 file) as recursive functions.

Here are two tips to help you do it right.

use context.getRemainingTimeInMillis()

When your function is invoked, the context object allows you to find out how much time is left in the current invocation.

Suppose you have an expensive task that can be broken into small tasks that can be processed in batches. At the end of each batch, use context.getRemainingTimeInMillis() to check if there’s still enough time to keep processing. Otherwise, recurse and pass along the current position so the next invocation can continue from where it left off.

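```javascript
'use strict';

const AWS = require('aws-sdk');
const lambda = new AWS.Lambda();

// Invoke this same function again, asynchronously ('Event'), with the given payload
const recurse = (payload) => lambda.invoke({
  FunctionName: process.env.AWS_LAMBDA_FUNCTION_NAME,
  InvocationType: 'Event',
  Payload: JSON.stringify(payload)
}).promise();

module.exports.handler = (event, context, callback) => {
  let position = event.position || 0;

  // placeholder: however your job knows its total number of tasks
  const totalTaskCount = event.totalTaskCount;

  do {
    // process the tasks in small batches that can be completed in, say, less than 10s;
    // `processBatch` is a placeholder that handles one batch and returns the next position
    position = processBatch(event, position);

    // when there's less than 10s left, stop
  } while (position < totalTaskCount && context.getRemainingTimeInMillis() > 10000);

  if (position < totalTaskCount) {
    // copy the event rather than mutating the one we were invoked with
    const newEvent = Object.assign({}, event, { position });

    // only report back once the next invocation has been queued
    recurse(newEvent)
      .then(() => callback(null, `to be continued from [${position}]`))
      .catch(callback);
  } else {
    callback(null, 'all done');
  }
};
```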
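To see how the chain of invocations plays out without deploying anything, here's a minimal local simulation. It's a sketch under stated assumptions: `makeFakeContext`, `invokeOnce` and `runJob` are hypothetical helpers, the fake context's clock simply advances by a fixed cost per batch, and the asynchronous Lambda re-invocation is replaced by a plain loop that passes the new position along.

```javascript
'use strict';

// Hypothetical fake context: each invocation gets `budgetMs` of time,
// and every processed batch consumes `batchCostMs` of it.
function makeFakeContext(budgetMs) {
  let elapsed = 0;
  return {
    getRemainingTimeInMillis: () => budgetMs - elapsed,
    spend: (ms) => { elapsed += ms; }
  };
}

// One simulated invocation: process batches until all tasks are done or
// no more than `safetyMs` remains, then return the position reached.
function invokeOnce(event, context, { totalTaskCount, batchSize, batchCostMs, safetyMs }) {
  let position = event.position || 0;
  do {
    position = Math.min(position + batchSize, totalTaskCount);
    context.spend(batchCostMs);
  } while (position < totalTaskCount && context.getRemainingTimeInMillis() > safetyMs);
  return position;
}

// Stand-in for the recursion: keep "invoking" with the updated event until
// every task is processed. Returns the position each invocation stopped at.
function runJob(opts) {
  const checkpoints = [];
  let event = {};
  let position;
  do {
    position = invokeOnce(event, makeFakeContext(opts.budgetMs), opts);
    checkpoints.push(position);
    event = Object.assign({}, event, { position });
  } while (position < opts.totalTaskCount);
  return checkpoints;
}

const checkpoints = runJob({
  totalTaskCount: 200, batchSize: 10, batchCostMs: 8000, budgetMs: 60000, safetyMs: 10000
});
console.log(checkpoints); // → [ 70, 140, 200 ]
```

Because each batch costs less than the 10s safety margin, an invocation only starts a new batch when there's enough time left to finish it, so no simulated invocation ever runs out of time.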

use local state for optimization

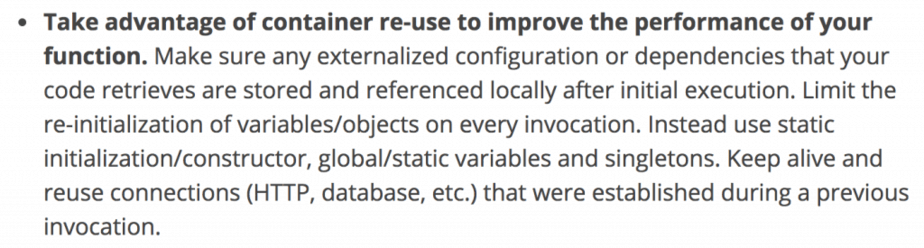

Whilst Lambda functions are ephemeral by design, containers are still reused for optimization, which means you can leverage in-memory state that persists across invocations.

You should use this opportunity to avoid loading the same data on each recursion. For example, if you're processing a large S3 file, it's more efficient (and cheaper) to cache the content of the file than to download it again in every invocation.

I notice that AWS has also updated their Lambda best practices page to advise you to take advantage of container reuse.

However, as Lambda can recycle the container between recursions, it's possible for you to lose the cached state from one invocation to another. Therefore, you shouldn't assume the cached state is always available during a recursion; always check whether there's cached state first.
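Here's a minimal sketch of that check, assuming a module-level variable as the cache and a caller-supplied `load` function (hypothetical) standing in for the expensive S3 read. `getData` and `simulateColdStart` are illustrative names, not part of any AWS API.

```javascript
'use strict';

// Module-level state: in Lambda this lives as long as the container is reused
// (in this local sketch, as long as the Node.js process runs).
let cache = null;
let loadCount = 0;

// Cache-first lookup: never assume the cache is populated. If it holds data
// for `key`, reuse it; otherwise call the (hypothetical) `load` function.
async function getData(key, load) {
  if (cache !== null && cache.key === key) {
    return cache.data;
  }
  loadCount += 1;
  const data = await load(key);
  cache = { key, data };
  return data;
}

// Local stand-in for Lambda recycling the container: in-memory state is lost.
function simulateColdStart() {
  cache = null;
}
```

Calling `getData` twice with the same key loads once; after `simulateColdStart()` the next call falls back to `load` again, which is exactly the case a recursion has to tolerate.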

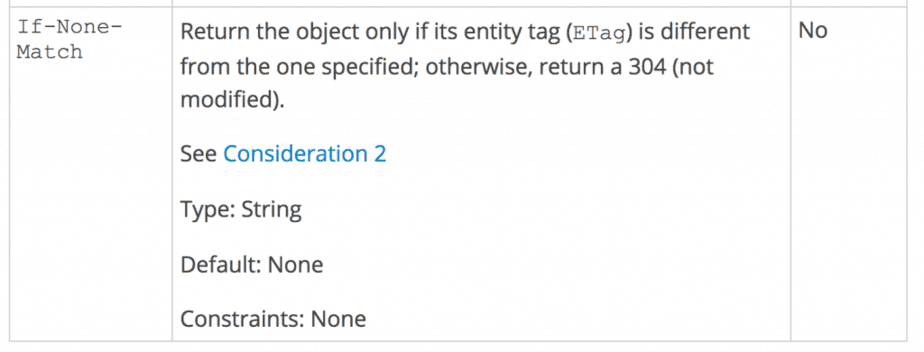

Also, when dealing with S3 objects, you need to protect yourself against content changes, i.e. the S3 object is replaced, but the container is still reused, so the stale cached data is still available. When you call S3's GetObject operation, you should set the optional If-None-Match parameter (`IfNoneMatch` in the AWS SDK) to the ETag of the cached data. If the object hasn't changed, S3 responds with 304 Not Modified and you can use the cached copy instead.
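The conditional-GET logic can be exercised locally without S3. This sketch assumes a hypothetical in-memory `FakeStore` that mimics only the behaviour that matters here: it returns a body and an ETag derived from the content, and when `IfNoneMatch` equals the current ETag it rejects with an error whose `code` is `'NotModified'` (the same error code the example below checks for).

```javascript
'use strict';

const crypto = require('crypto');

// Hypothetical stand-in for S3: stores objects and derives an ETag from the content.
class FakeStore {
  constructor() { this.objects = new Map(); }

  put(key, body) {
    const etag = '"' + crypto.createHash('md5').update(body).digest('hex') + '"';
    this.objects.set(key, { body, etag });
  }

  // Mimics getObject: rejects with code 'NotModified' when IfNoneMatch matches.
  async getObject({ Key, IfNoneMatch }) {
    const obj = this.objects.get(Key);
    if (!obj) {
      const err = new Error('No such key');
      err.code = 'NoSuchKey';
      throw err;
    }
    if (IfNoneMatch && IfNoneMatch === obj.etag) {
      const err = new Error('Not Modified');
      err.code = 'NotModified';
      throw err;
    }
    return { Body: Buffer.from(obj.body), ETag: obj.etag };
  }
}

let cached; // { key, etag, data }

// Send the cached ETag only if the cache holds this object; reuse the cache on 'NotModified'.
async function loadData(store, key) {
  const sameObject = !!cached && cached.key === key;
  try {
    const resp = await store.getObject({ Key: key, IfNoneMatch: sameObject ? cached.etag : undefined });
    cached = { key, etag: resp.ETag, data: JSON.parse(resp.Body.toString('utf8')) };
    return { data: cached.data, fromCache: false };
  } catch (err) {
    if (err.code === 'NotModified' && sameObject) {
      return { data: cached.data, fromCache: true };
    }
    throw err;
  }
}
```

Replacing the object changes its ETag, so the next `loadData` call downloads the new content instead of serving the stale cache.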

Here’s how you can apply this technique.

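```javascript
'use strict';

const co = require('co');
const _ = require('lodash');
const AWS = require('aws-sdk');

const s3 = new AWS.S3();
const lambda = new AWS.Lambda();

// Data loaded from S3 and cached in case of recursion.
// It only survives as long as Lambda keeps reusing this container.
let cached;

const loadData = co.wrap(function* (bucket, key) {
  // only send the cached ETag if the cache holds this exact object
  const isSameObject = !!cached && cached.bucket === bucket && cached.key === key;

  try {
    console.log('Loading data from S3', { bucket, key });

    const req = {
      Bucket: bucket,
      Key: key,
      // check the ETag to make sure the data hasn't changed;
      // S3 responds with 304 Not Modified if it still matches
      IfNoneMatch: isSameObject ? cached.etag : undefined
    };

    const resp = yield s3.getObject(req).promise();

    console.log('Caching data', { bucket, key, etag: resp.ETag });
    const data = JSON.parse(resp.Body.toString('utf8'));
    cached = { bucket, key, data, etag: resp.ETag };
    return data;
  } catch (err) {
    if (err.code === 'NotModified' && isSameObject) {
      console.log('Loading cached data', { bucket, key, etag: cached.etag });
      return cached.data;
    }
    throw err;
  }
});

// Invoke this same function again, asynchronously ('Event'), with the given payload
const recurse = (payload) => lambda.invoke({
  FunctionName: process.env.AWS_LAMBDA_FUNCTION_NAME,
  InvocationType: 'Event',
  Payload: JSON.stringify(payload)
}).promise();

module.exports.handler = co.wrap(function* (event, context, callback) {
  try {
    let position = event.position || 0;

    const bucket = _.get(event, 'Records[0].s3.bucket.name');
    // object keys in S3 event notifications are URL-encoded (spaces become '+')
    const key = decodeURIComponent(_.get(event, 'Records[0].s3.object.key').replace(/\+/g, ' '));

    const data = yield loadData(bucket, key);
    const totalTaskCount = data.tasks.length;

    do {
      // process the tasks in small batches that can be completed in, say, less than 10s;
      // `processBatch` is a placeholder that returns a promise of the next position
      position = yield processBatch(data.tasks, position);

      // when there's less than 10s left, stop
    } while (position < totalTaskCount && context.getRemainingTimeInMillis() > 10000);

    if (position < totalTaskCount) {
      // the new event still carries the S3 record, plus the position to resume from
      const newEvent = Object.assign({}, event, { position });
      yield recurse(newEvent);
      callback(null, `to be continued from [${position}]`);
    } else {
      callback(null, 'all done');
    }
  } catch (err) {
    // surface failures to Lambda instead of leaving an unhandled rejection
    callback(err);
  }
});
```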

Have a look at this example Lambda function that recursively processes an S3 file using the approach outlined in this post.
