Yan Cui

I help clients go faster for less using serverless technologies.

After watching the talks at Monitorama for a few years, I finally have the chance to attend this year and share my insights on serverless observability on Wednesday.

Day 1 was great (despite my massive jet lag!), and the talks were mostly focused on the human side of monitoring. Of the talks two stood out for me:

- Optimizing for Learning by Logan McDonald

- Alert Fatigue (the original title is a lot longer..) by Aditya Mukerjee

Here are my summaries from these two talks.

Optimizing for Learning

During an incident we often find ourselves overwhelmed by the shear amount of data available to us. Expert intuition is the thing that allows us to quickly filter out the noise and strike at the heart of the problem.

Therefore, to improve the overall ability of the team, we need to improve the way we learn from incidents to optimize for expert intuition.

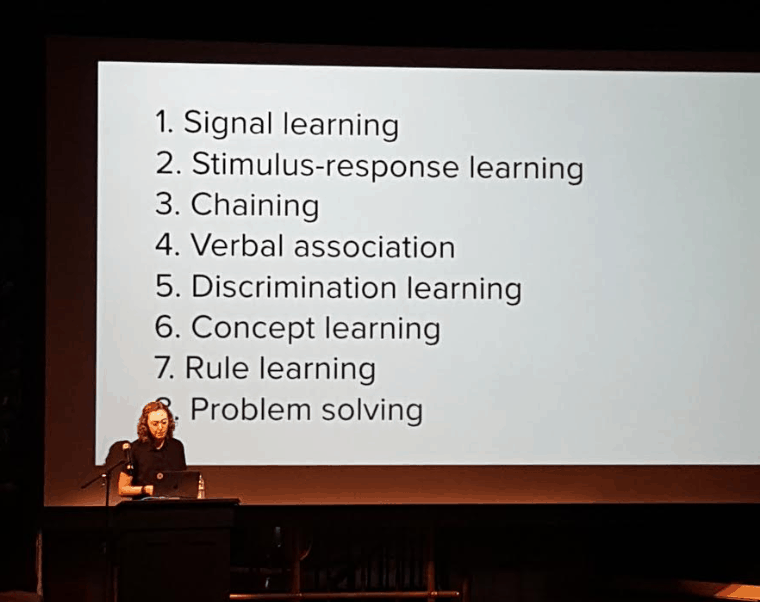

Logan then touched on the constraint theory (which I still need to read up on to understand myself) and the hierarchy of learning.

https://twitter.com/loganmeetsworld/status/1003683457338261504

She talked about the importance of giving people the opportunities to learn the rules of the world, which grant inroads to knowledge. As a senior engineer, you should vocalise your rationales for actions during an incident. This helps beginners establish mental model of the world they operate in.

Whilst many of us are good at memorising textbooks and passing exams, these methods give “the illustration of learning”. They are ineffective at storing information for long term memory, which is critical for expert intuition.

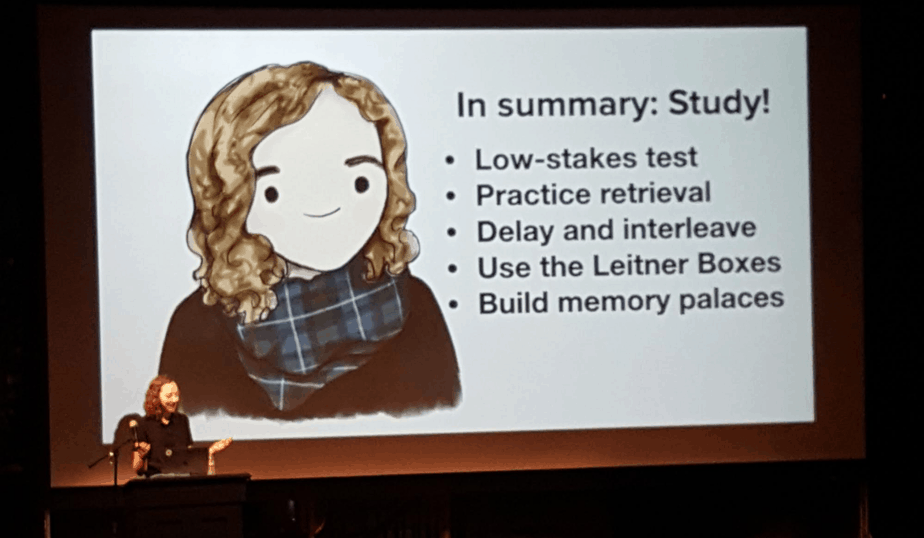

Instead, look to test yourself often in low-state environments. Because in order to learn, we need to exercise retrieval of information often. These retrieval exercises help strengthen those neural pathways in our brains.

It’s also more beneficial when the retrieval is effortful, so you should make these exercises difficult and force yourself to struggle a little.

Logan went on to outline a simple technique for us to practice delay retrieval and interleaving, using cards. Every time we acquire a new piece of information, we can write it down on a card and keep the cards handy.

From time-to-time you can use the cards to test yourself, and questions you get right often are moved up to another box which you test yourself with less frequently. Questions you get wrong often are moved down to the previous box which you use to test yourself more frequently.

Logan also talked about Memory Palace, which is a technique that memory athletes use to improve their memory.

This section of the talk echoes the researches that Supermemo is built on, and Josh Kaufman’s TED talk about the 10k hours rule where he specifically talked about the need for those exercises to be deliberate and challenging.

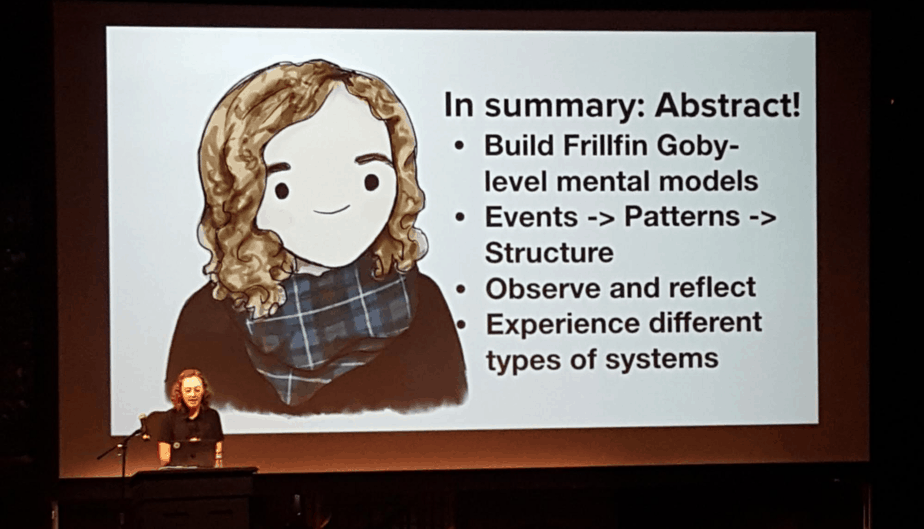

We need to build knowledge into mental models, which is the basis of expert intuition. Mental models prepare you for dealing with incidents and make you a better story teller, and you become better at automatically recognising causalities.

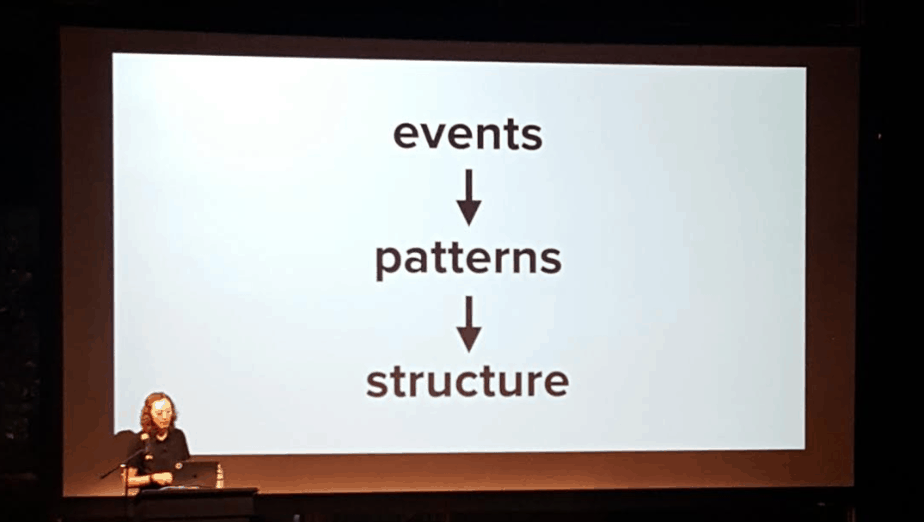

To build these mental models, we need to recognise patterns from observable events, and from build up our mental model from those patterns.

But this is hard.

If these events and patterns are incomplete or false, so would the mental models you build on top of it.

For true observability, you also need to include historical context and decisions. This can be as simple as document that outlines previous incidents and decisions, to help newcomers build a shared understanding of those historical context.

This is very similar to the architectural decision record (ADR) used by some development teams.

Regular reflection also helps sustain memory and learning. Practicing active reflection helps you build mental models faster.

Incident reviews help with this.

You should go beyond a singular mental model. By exposing yourself to multiple mental models, and therefore different ways of looking at the world, it helps enforce true understanding of the models.

Again, I couldn’t agree more with this! I have long held the belief that you need to learn different programming paradigms in order to open yourself up to solutions that you might even be able to perceive otherwise.

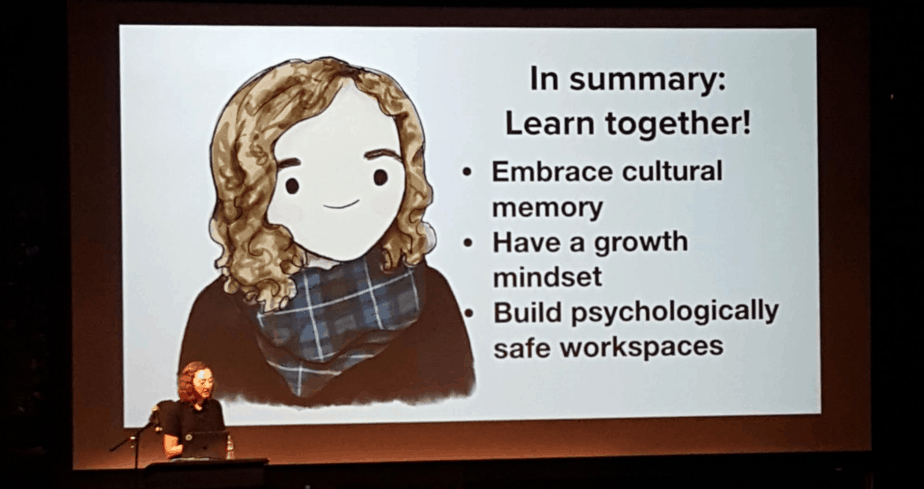

Logan then talked about cultural memory and the growth mindset.

Cycling back to the earlier point on the need to document historical context. We need to share experiences from incidents with newcomers. Your operational ability is built on bad memories that leave a mark. Institutional knowledge is essential for newcomers.

Also, you need to recognize that leaning requires a lot of failure.

Finally, Logan finished off on the importance of psychological safety. Which incidentally, is also the most important attribute of a successful team, based on research conducted by Google.

Build an environment where people feel safe to ask any question, even ones that might be perceived as naive.

Don’t carry blame, optimise for learning instead.

Working in environments without psychological safety can be detrimental to your mental health. Your memory suffers from bad mental health, which ultimately impacts your ability to respond quickly.

Alert Fatigue

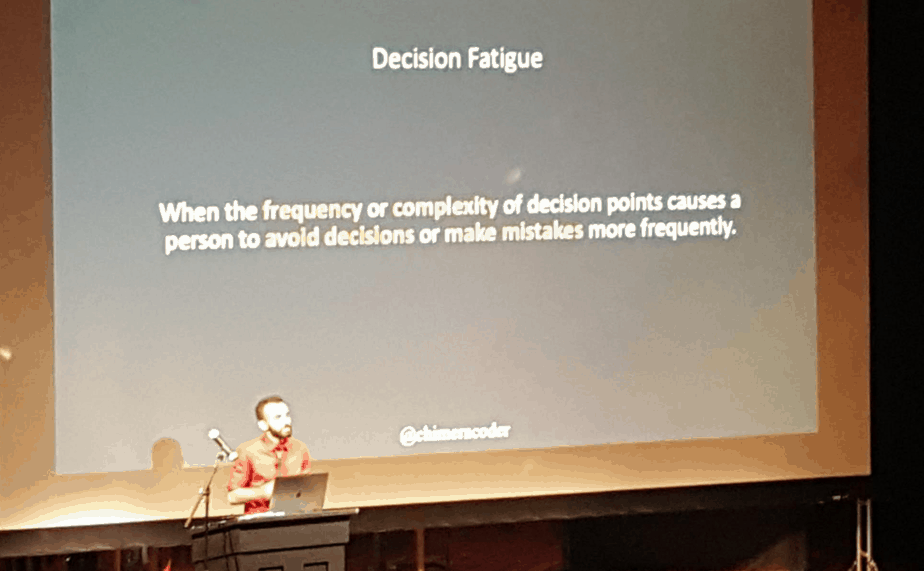

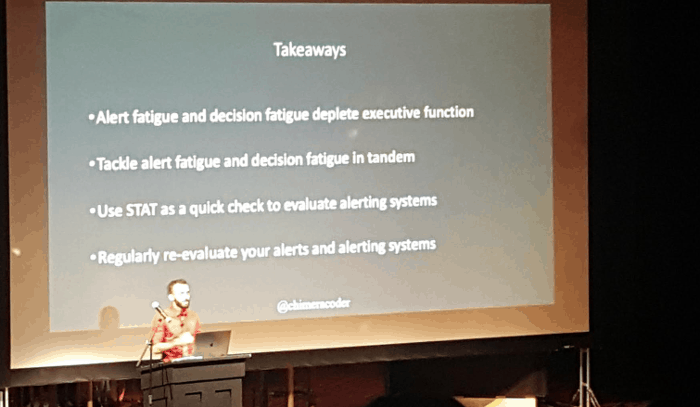

If you have worked in the ops space, then you’re probably familiar with alert fatigue and decision fatigue.

They happen because we run out of executive functions, which is a set of cognitive processes required for decision making.

Our executive function is limited over a period of time. Although it does replenish over time, but it’s not an unlimited resource.

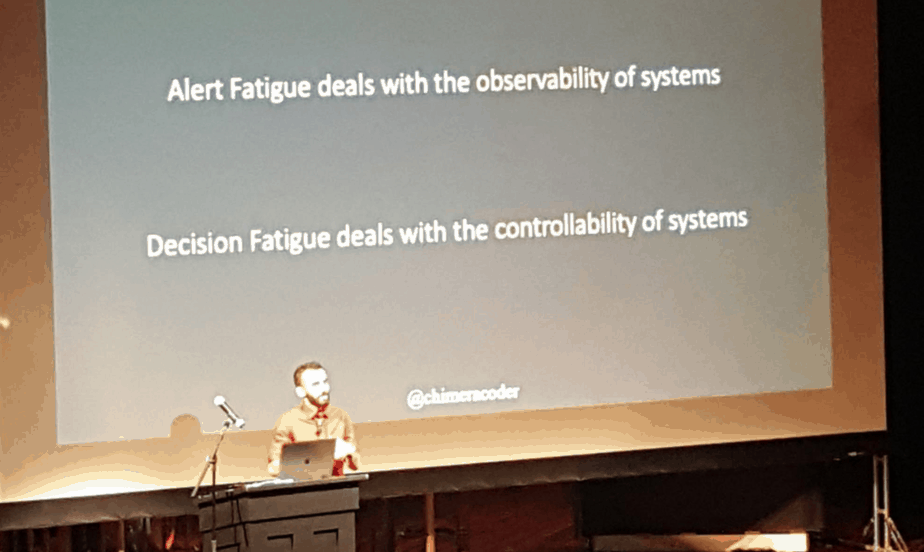

Alert and decision fatigue are related because they’re both to do with our ability to observe changes and our ability to affect them.

Aditya used alert fatigue in hospital stuffs to draw many parallels with the software industry.

In the healthcare industry, between 72-99% of all alarms are false alarms!

If you receive multiple false alarms for the same patient, it doesn’t just create alert fatigue for that patient. It greatly impacts your alert fatigue level systemically for ALL patients.

This is the same with software, where one frequently mis-fired alarm can cause alert fatigue for all alarms. Which is why we need to actively chase down edge cases that generate high numbers of false alarms. These guys are likely responsible for draining our executive functions.

Aditya then shared four steps to reduce alert fatigue: supported, trustworthy, actionable and triaged, or STAT for short.

For an alert to be supported, you need to be able to identify ownership of this alert, and the owner needs to have the ability to affect the alerts. Don’t forget, alert systems also include the people who participate in responding to alerts, not just the alerts themselves.

A common bad practice is to not allow responders to change the thresholds of the alerts for fear of tempering. Remember, the responders already have the power to simply ignore the alerts.

Instead, we should empower people to change thresholds rather than forcing them to ignore them. We are not granting them with any more power that they didn’t have before.

Person who respond to alert must have ownership to affect the end result as well, which could be business processes that the alert is intended to protect.

Don’t keep alerts past their usefulness. If an alert is no longer relevant as our architecture change, get rid of it. If the goal is to reduce alert fatigue, then we don’t want a system that systematically induces alert fatigue!

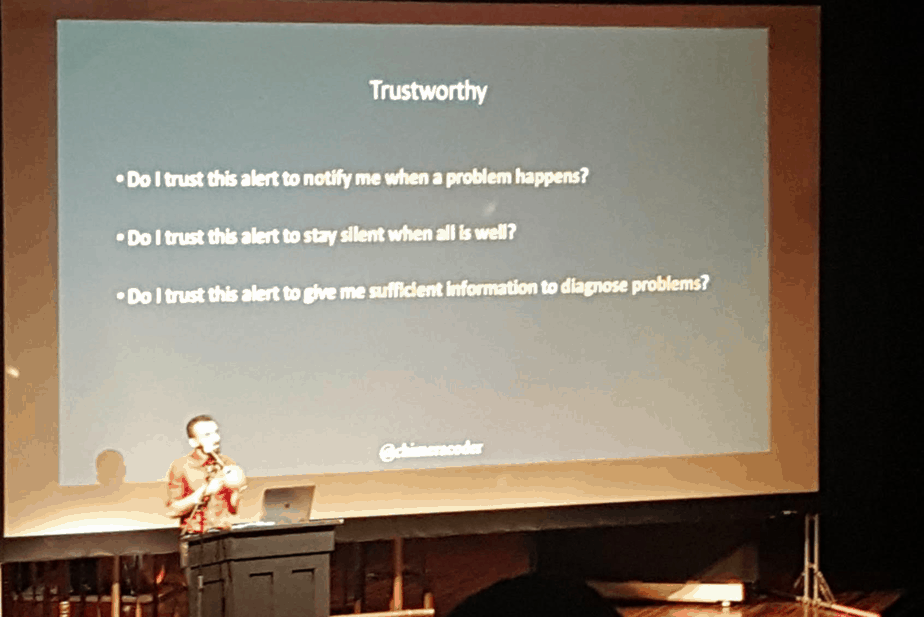

We need to be able to trust our alerts, at a systemic level, not just individual alerts. People needs to trust the system. Even one alert can corrode the trust in the whole system.

We need to maximise collective trustworthiness of the alerts. Which is an important point to consider when using automated process for generating alerts. Do these processes generate that I can actually trust? Can I adjust the thresholds?

If you can’t trust your alerts, then it leads to even more decisions when responding to an alert. The more decisions you have to make in response to an alert, the more chance for mistakes.

This creates decision fatigue, and you’re more likely to ignore these alerts in the future. In turn, it creates a fear towards the alerts, and sets off alert fatigue before it even happens.

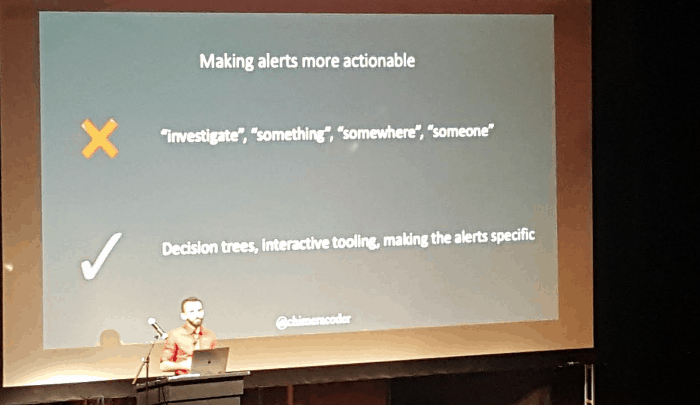

Spell out the actions, use a decision tree. Decision tress are good.

An alert is not actionable without an actor. It needs a clear decision of who should be taking charge. A common bad pattern is for the alert to fire blindly into a slack channel with no clear indication as to who should deal with it.

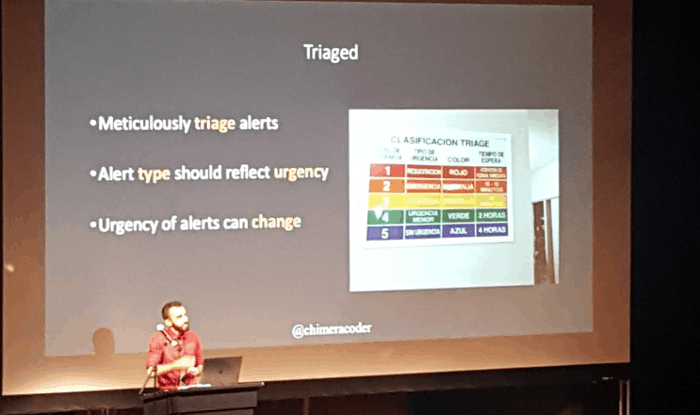

We also need a sense of severity with alerts so we can triage them.

Having a system for triaging alerts is great, but we also need to keep up-to-date on the dynamic things that change as our system and architecture changes.

We need to have regular re-evaluation of what should be alerted, their severity, and what is causing us alert fatigue.

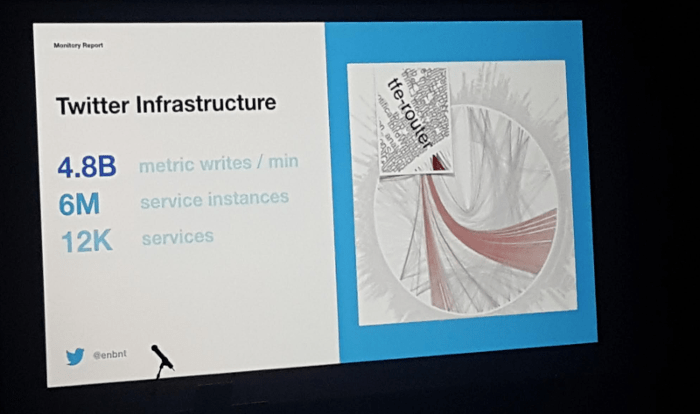

Right at the end of the day, Ian Bennet of Twitter shared his experience of migrating Twitter to their new observability stack.

It was interesting to hear about all the problems they ran into, and it also struck me the scale of their infrastructure!

Alright, I’m still jet lagged as hell (I’m writing this at 2am in the morning..) but I’m looking forward to day 2!

Whenever you’re ready, here are 3 ways I can help you:

- Production-Ready Serverless: Join 20+ AWS Heroes & Community Builders and 1000+ other students in levelling up your serverless game. This is your one-stop shop for quickly levelling up your serverless skills.

- I help clients launch product ideas, improve their development processes and upskill their teams. If you’d like to work together, then let’s get in touch.

- Join my community on Discord, ask questions, and join the discussion on all things AWS and Serverless.