Yan Cui

I help clients go faster for less using serverless technologies.

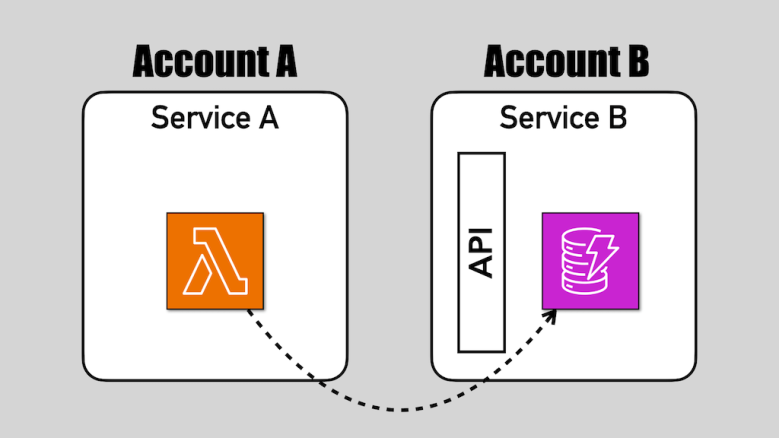

DynamoDB announced support for resource-based policies [1] a few days ago. It makes cross-account access to DynamoDB tables easier. You no longer need to assume an IAM role in the target table’s account.
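To make this concrete, here is a minimal sketch of what such a policy could look like. The ARNs, the role name and the choice of actions are hypothetical, and `attach_policy` assumes you pass in a `boto3.client("dynamodb")` created with credentials for the account that owns the table.

```python
import json


def build_cross_account_read_policy(table_arn: str, reader_role_arn: str) -> str:
    """Build a resource-based policy (as a JSON string) that lets one role
    in another account read from the table. Both ARNs are placeholders."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowCrossAccountRead",
                "Effect": "Allow",
                "Principal": {"AWS": reader_role_arn},
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": table_arn,
            }
        ],
    }
    return json.dumps(policy)


def attach_policy(dynamodb_client, table_arn: str, policy_json: str) -> None:
    # dynamodb_client is assumed to be boto3.client("dynamodb") running
    # in the table owner's account.
    dynamodb_client.put_resource_policy(ResourceArn=table_arn, Policy=policy_json)
```

The role in the other account still needs an identity-based policy that allows the same actions on the table's ARN, and it addresses the table by its full ARN rather than its name.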

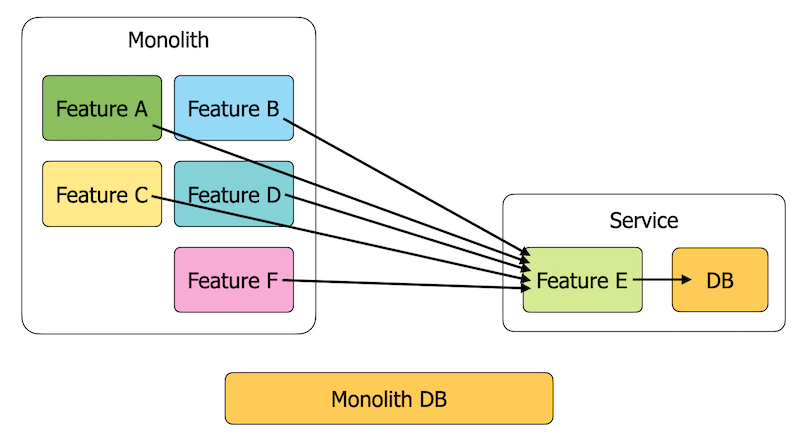

I was confused by this update and wondered if it was even a good idea. If you need cross-account access to DynamoDB, then it’s surely a sign you’re breaking service boundaries, right?

As I said before [2], a microservice should own its data and shouldn’t share a database with another microservice.

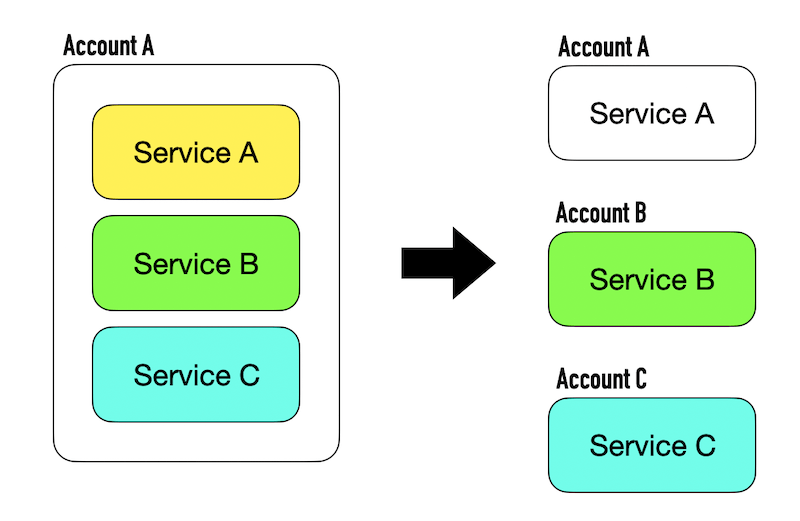

In many organizations, microservices run in their own accounts. This provides another layer of insulation between services. It can also compartmentalize security breaches and problems related to AWS limits.

When a service needs another service’s data, it should go through that service’s API. It shouldn’t reach in and help itself to the data. Doing so is insecure and creates a hidden coupling between the two services.

This is not how you should use the new resource-based policies!

However, there are legitimate use cases where cross-account access can be helpful. I want to thank everyone who shared their thoughts and experiences with me.

ETL / data pipelines

ETL jobs and data pipelines often need to read data from multiple sources or subscribe to DynamoDB streams to capture live data change events.

These require cross-account access to DynamoDB tables, and the new resource-based policies make them easier.
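For the streams case, a rough sketch of a policy for a pipeline role might look like the following. It assumes the policy is attached to the stream's ARN rather than the table's, and the role ARN, stream ARN and function name are all made up for illustration.

```python
import json

# Read-only actions a stream consumer typically needs.
STREAM_READ_ACTIONS = [
    "dynamodb:DescribeStream",
    "dynamodb:GetShardIterator",
    "dynamodb:GetRecords",
]


def build_stream_reader_policy(stream_arn: str, pipeline_role_arn: str) -> str:
    """Build a resource-based policy that lets a data pipeline role in
    another account read change events from a DynamoDB stream."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowPipelineToReadStream",
                "Effect": "Allow",
                "Principal": {"AWS": pipeline_role_arn},
                "Action": STREAM_READ_ACTIONS,
                "Resource": stream_arn,
            }
        ],
    }
    return json.dumps(policy)
```

The pipeline gets no write or table-level permissions here, which keeps its access as narrow as the job requires.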

Migration to new service/account

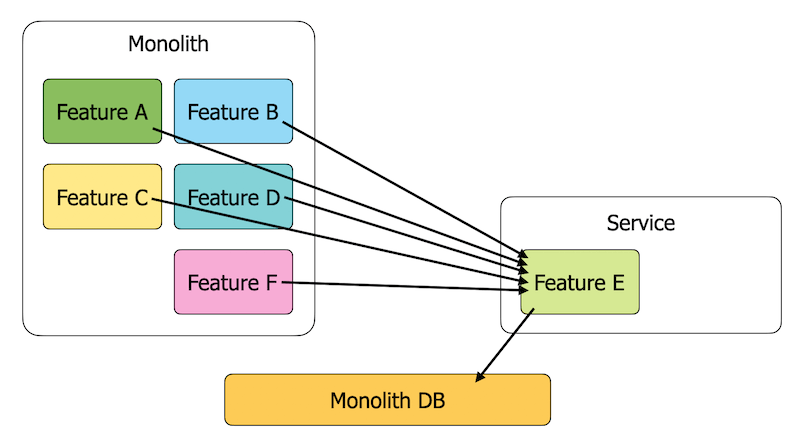

In this video [3], I discussed using the Strangler Pattern to migrate an existing monolith to serverless. The new service must still access the old database as part of the transitional phase.

This is when you will need cross-account access to the original DynamoDB table. Once your new service owns all the data access patterns, you can safely migrate existing data to a new table in the new account. See this post [4] for how to do this without downtime.

Once the migration is complete, you can drop the cross-account access.
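As a sketch of both ends of that transitional phase: the new service reads from the old table by passing its full ARN as `TableName`, and the old account removes the policy once the migration is done. The function names are hypothetical, and `dynamodb_client` is assumed to be a `boto3.client("dynamodb")` for the relevant account.

```python
def read_from_old_table(dynamodb_client, old_table_arn: str, key: dict):
    """Called from the NEW account during the transition. The table is
    addressed by its ARN because it lives in a different account."""
    response = dynamodb_client.get_item(TableName=old_table_arn, Key=key)
    # "Item" is absent from the response when no item matches the key.
    return response.get("Item")


def drop_cross_account_access(dynamodb_client, old_table_arn: str) -> None:
    """Called from the OLD account once the migration is complete.
    This removes the table's entire resource-based policy."""
    dynamodb_client.delete_resource_policy(ResourceArn=old_table_arn)
```

If the table's policy also contains statements you want to keep, you would put an updated policy without the cross-account statement instead of deleting the whole thing.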

This type of transitional need arises in other cases too, for example, when moving from a single-account strategy to a multi-account strategy.

In that scenario, you’d create a new instance of the service in a new account. During the transition period, the new account must access data in the old account until the data is migrated to the new account.

Another layer of access control

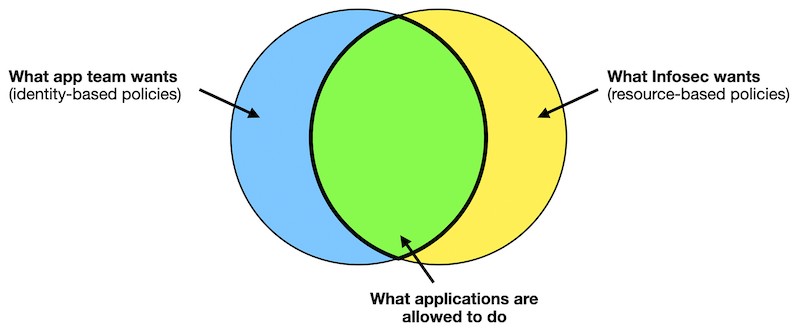

Resource-based policies give InfoSec teams more control.

Application teams can use identity-based policies to control data access, and InfoSec teams can use resource-based policies to add another layer of control and governance.

This is important in regulated environments that handle sensitive user information. For example, developers might need read-only permissions to debug problems in production. InfoSec can still use resource-based policies to deny IAM users access to specific DynamoDB tables.

This can be applied selectively to only the tables holding sensitive user information. You can also carve out exceptions, such as allowing an “emergency breakglass” role to modify table settings (e.g. provisioned throughput settings) without granting data access.
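Here is one way such a policy could be sketched. The account ID, the breakglass role ARN and the list of data actions are assumptions for illustration. The list is not exhaustive (it leaves out PartiQL and transaction actions, for instance), so treat it as a starting point rather than a complete control.

```python
import json

# A non-exhaustive list of actions that read or write item data.
DATA_ACTIONS = [
    "dynamodb:GetItem",
    "dynamodb:BatchGetItem",
    "dynamodb:Query",
    "dynamodb:Scan",
    "dynamodb:PutItem",
    "dynamodb:UpdateItem",
    "dynamodb:DeleteItem",
    "dynamodb:BatchWriteItem",
]


def build_governance_policy(table_arn: str, account_id: str, breakglass_role_arn: str) -> str:
    """Deny data access to IAM users, and let a breakglass role change
    table settings without granting it any data access."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyDataAccessForIamUsers",
                "Effect": "Deny",
                "Principal": "*",
                "Action": DATA_ACTIONS,
                "Resource": table_arn,
                # Only matches principals that are IAM users in this account.
                "Condition": {
                    "ArnLike": {"aws:PrincipalArn": f"arn:aws:iam::{account_id}:user/*"}
                },
            },
            {
                "Sid": "AllowBreakglassTableSettings",
                "Effect": "Allow",
                "Principal": {"AWS": breakglass_role_arn},
                "Action": ["dynamodb:DescribeTable", "dynamodb:UpdateTable"],
                "Resource": table_arn,
            },
        ],
    }
    return json.dumps(policy)
```

Because an explicit deny wins over any allow, the first statement holds even if an application team's identity-based policy grants those users access.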

Sharing data with external parties

In rare situations, you might need to share data with external vendors or partners. Resource-based policies make it easier to control who can access what in your account.

Instead of keeping track of the different IAM roles the partners have to assume, you can see all the policies in one place.
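To illustrate the "all in one place" point, here is a small sketch that turns a table's policy document into a flat list of who can do what. The helper name is hypothetical, and it only looks at `AWS` principals. In practice, you would feed it the `Policy` string returned by boto3's `get_resource_policy(ResourceArn=...)`.

```python
import json


def summarize_policy(policy_json: str) -> list[tuple[str, str, list[str]]]:
    """Return (effect, principal, actions) rows for each statement in a
    resource-based policy document."""
    statements = json.loads(policy_json).get("Statement", [])
    if isinstance(statements, dict):  # a single statement is also valid
        statements = [statements]

    rows = []
    for stmt in statements:
        principal = stmt.get("Principal", "*")
        principals = principal.get("AWS", []) if isinstance(principal, dict) else principal
        if isinstance(principals, str):
            principals = [principals]

        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]

        for p in principals:
            rows.append((stmt.get("Effect", ""), p, sorted(actions)))
    return rows
```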

Multi-account global table

Several Amazon employees mentioned a common architectural pattern within Amazon. A global service would deploy to different accounts – one region per account.

DynamoDB global tables do not support cross-account replication, so the application must replicate data changes across regions.

Understandably, this is a rather painful exercise. Resource-based policies significantly simplify this process.
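I haven't seen how this is implemented inside Amazon, so the following is only a guess at the general shape: a function that takes one DynamoDB stream record and applies it to peer tables in other accounts, addressing each by ARN. `client_for_region` is a hypothetical factory (for example, `lambda region: boto3.client("dynamodb", region_name=region)`), and the sketch assumes the stream includes new images. It ignores conflict resolution and replication loops, both of which a real implementation would need to handle.

```python
def region_of(table_arn: str) -> str:
    # ARN format: arn:aws:dynamodb:<region>:<account-id>:table/<name>
    return table_arn.split(":")[3]


def replicate_change(record: dict, peer_table_arns: list[str], client_for_region) -> None:
    """Apply a single stream record to every peer table."""
    event_name = record["eventName"]  # INSERT, MODIFY or REMOVE
    change = record["dynamodb"]

    for arn in peer_table_arns:
        # Each peer lives in a different region, so use a regional client.
        client = client_for_region(region_of(arn))
        if event_name == "REMOVE":
            client.delete_item(TableName=arn, Key=change["Keys"])
        else:
            client.put_item(TableName=arn, Item=change["NewImage"])
```

With resource-based policies on the peer tables, the replicator can write to them directly with its own role instead of assuming a role in each target account.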

This is an interesting architectural pattern. It’s not something that I have seen myself. All the global services I have seen or worked on would deploy to multiple regions in the same account.

From a security point of view, I can understand the rationale. If an account is compromised, the attacker will likely gain access to every region in that account. An account boundary offers stronger isolation.

Internal tooling likely played a role as well. This pattern is probably ingrained in how internal automation platforms work within Amazon.

Summary

So, DynamoDB finally supports resource-based policies.

While this simplifies cross-account access to DynamoDB tables by eliminating the need to assume IAM roles, it still raises the question: “Is cross-account data access even a good idea?”

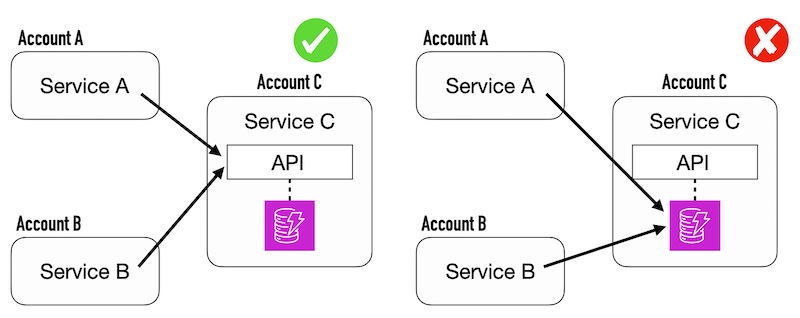

Suggestions of a “central data account” are misguided. Placing an account boundary between a service and its data is a bad idea. It will introduce unnecessary complexity to IaC and CI/CD and increase the likelihood of hitting service limits. It also erodes service boundaries and the autonomy of a team to manage and own its services.

It’s also a single point of failure from a security perspective. A compromised account would give the attacker access to all of your data!

Despite these concerns, several valid use cases will benefit from this, such as:

- ETL jobs or data pipelines.

- The transitional phase of an account migration process.

- InfoSec teams looking for strong data governance.

- When you need to share data with external parties.

- When you require multi-account global tables.

I will leave you with this: cross-account access to DynamoDB tables is almost always a smell. But as with everything, there are exceptions and edge cases. You should think carefully before you use resource-based policies to enable cross-account access to your DynamoDB tables.

Because exceptions are just that, exceptions. Most of you (myself included) are the rule, not the exception.

Links

[1] DynamoDB now supports resource-based policies

[2] My thoughts on the microservices vs. serverless false dichotomy

[3] Migrating existing monolith to serverless in 8 steps

[4] How to perform database migration for a live service with no downtime
