Yan Cui

I help clients go faster for less using serverless technologies.

Note: Don’t forget to check out the Benchmarks page to see the latest round-up of binary and JSON serializers.

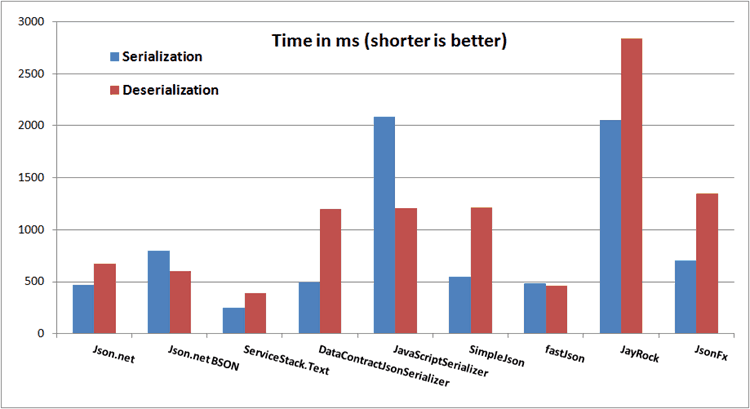

Following on from my previous test, I have now included JsonFx as well as the Json.Net BSON serializer in the mix to see how they match up.

The results (in milliseconds) as well as the average payload size (for each of the 100K objects serialized) are as follows.

![Benchmark results table: serialization and deserialization times (ms) and average payload size](/wp-content/uploads/2012/08/image4.png)

Graphically this is how they look:

I have included protobuf-net in this test to provide a more meaningful comparison for the Json.Net BSON serializer, since BSON is a binary payload and as such has a different use case from the other JSON serializers.

In general, I consider JSON appropriate when the serialized data needs to be human readable; a binary payload, on the other hand, is more appropriate for communication between applications/services.

Observations

You can see from the results above that the Json.Net BSON serializer actually generates a bigger payload than its JSON counterpart. This is because the simple POCO being serialized contains an array of 10 integers in the range 1 to 100. When the integer ‘1’ is serialized as JSON it takes just 1 byte (a single character), but as a 32-bit integer in BSON it always takes 4 bytes!
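To make the size difference concrete, here is a minimal sketch that hand-encodes a document holding an array of 10 small integers following the BSON spec (each value stored as an int32, type tag 0x10, with array elements keyed by their index as a string) and compares it with compact JSON text. The field name `Ints` and the sample values are hypothetical, chosen only for illustration; the benchmark's actual POCO is not shown here, and Json.Net's real output will differ in its field names.

```python
import json
import struct


def bson_cstring(s: str) -> bytes:
    # BSON keys are null-terminated UTF-8 strings
    return s.encode("utf-8") + b"\x00"


def bson_document(elements: bytes) -> bytes:
    # A document is an int32 total length (counting itself and the
    # trailing 0x00), followed by its elements, followed by 0x00
    return struct.pack("<i", 4 + len(elements) + 1) + elements + b"\x00"


def bson_int32_array_doc(field: str, values: list) -> bytes:
    # A BSON array is an embedded document whose keys are "0", "1", "2", ...
    items = b"".join(
        b"\x10" + bson_cstring(str(i)) + struct.pack("<i", v)  # 0x10 = int32
        for i, v in enumerate(values)
    )
    array_elem = b"\x04" + bson_cstring(field) + bson_document(items)  # 0x04 = array
    return bson_document(array_elem)


values = [1, 5, 17, 42, 99, 3, 64, 8, 100, 27]  # hypothetical sample in the range 1..100
json_bytes = json.dumps({"Ints": values}, separators=(",", ":")).encode("utf-8")
bson_bytes = bson_int32_array_doc("Ints", values)
print(len(json_bytes), len(bson_bytes))
```

Besides the fixed 4 bytes per value, each BSON array element here also carries a type tag and its index as a key, so every element costs 7 bytes, compared with 1 to 3 digits plus a comma in JSON.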

In comparison, the protocol buffer format uses varint encoding, so smaller numbers take fewer bytes to represent, and it is not self-describing (the property names are not part of the payload), so it’s able to generate a much smaller payload than both JSON and BSON.
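Varint encoding stores an unsigned integer 7 bits at a time, least-significant group first, setting the high bit of each byte when more bytes follow. The sketch below is a minimal illustration of that scheme for non-negative integers only. It does not cover how protobuf handles negative values (10-byte varints for int32/int64, ZigZag for the sint types), and it is not protobuf-net's own code.

```python
def encode_varint(n: int) -> bytes:
    """Encode a non-negative integer as a protobuf-style base-128 varint."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = n & 0x7F  # take the lowest 7 bits
        n >>= 7
        if n:
            out.append(byte | 0x80)  # more bytes follow: set the continuation bit
        else:
            out.append(byte)  # last byte: continuation bit clear
            return bytes(out)


def decode_varint(data: bytes) -> int:
    """Decode a single varint from the start of data."""
    result = 0
    for shift, byte in enumerate(data):
        result |= (byte & 0x7F) << (7 * shift)
        if not byte & 0x80:
            return result
    raise ValueError("truncated varint")


for n in (1, 100, 127, 128, 300):
    print(n, encode_varint(n).hex())
```

Every value in the 1 to 100 range used in this test fits in a single varint byte, versus the fixed 4 bytes of a BSON int32.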

Lastly, whilst the Json.Net BSON serializer offers slightly faster deserialization than the Json.Net JSON serializer, it does, however, have much slower serialization.

Disclaimers

Benchmarks do not tell the whole story, and the numbers will naturally vary depending on a number of factors, such as the type of data being tested. In the real world, you also need to take into account how you’re likely to interact with the data, e.g. if you know you’ll be deserializing data a lot more often than serializing it, then deserialization speed will of course become more important than serialization speed!

In the case of BSON and integers, whilst it’s less compact than JSON when serializing small numbers, it’s more compact once the numbers have more than 4 digits (i.e. 10000 and above), since a 4-byte int32 then takes fewer bytes than the equivalent JSON text.
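A quick way to see the crossover is to compare the number of bytes in a value’s JSON text with the fixed 4 bytes of a BSON int32. This sketch counts only the value itself and ignores per-element overhead (JSON commas, BSON type tags and keys), so treat it as a rough guide rather than an exact payload comparison.

```python
import json

BSON_INT32_SIZE = 4  # a BSON int32 value is always 4 bytes (little-endian)


def json_value_size(n: int) -> int:
    # Bytes in the JSON text for n: its digits plus any minus sign
    return len(json.dumps(n).encode("utf-8"))


for n in (1, 99, 9999, 10000, 2147483647):
    size = json_value_size(n)
    smaller = "BSON" if BSON_INT32_SIZE < size else "JSON (or tie)"
    print(f"{n:>10}: JSON {size} bytes vs BSON {BSON_INT32_SIZE} bytes -> {smaller}")
```

A 4-digit number ties at 4 bytes; from 5 digits upwards the int32 wins.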


“I have included protobuf-net in this test to…” – did I miss it? You have got me wondering whether I should try my hand at BSON, though :)

Ah, my bad – I was looking at the graph, not the table. Feel free to delete the above.

Hey Marc, not to worry, I just included the serialization/deserialization times for protobuf-net purely as a reference to see how well BSON matches up to it.

I would probably put BSON in the binary serializers test instead, as it’s not really fair to put it up against JSON serializers; the use cases for a wire format and a text format are very different.

Any chance of adding the MongoDB C# driver’s BSON serializer/deserializer? It’s kind of an obvious one to use if you’re using Mongo, but I’d be interested to see how it stacks up against the competition.

Love the benchmarks and the test harness you made. Thanks so much for putting in the work. I have a few suggestions: some of the time spent serializing is actually spent by the garbage collector running, so I think you should do a full collection before testing each of the serializers. This would keep serializer n from paying for the sins (excessive allocations) of serializer n-1. If you wanted to get really fancy, there is a native API that would allow you to track just the time spent by the thread doing the serialization.

Another suggestion would be to separate initialization/setup time from serialization time. Most of the serializers you are testing build a cache of information about the types they are serializing, so the first serialization of a type necessarily takes much longer than subsequent ones. It would be cool to see this quantified for each of the serializers, e.g. maybe I should use serializer X for my high-throughput web services because, though it warms up slowly, it serializes much faster than the others.

Just my two cents. Again thanks so much for the effort.
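The two suggestions above can be sketched together as a tiny timing harness: force a full collection before each serializer runs so it doesn’t pay for a previous serializer’s garbage, and time the first (cold) call separately from the steady-state loop. This is a hypothetical Python sketch, using `gc.collect()` and `time.perf_counter()` with `json.dumps` as a stand-in serializer and a made-up payload; the actual benchmarks are .NET code, where the rough equivalent of the forced collection is `GC.Collect()`.

```python
import gc
import json
import time


def benchmark(serialize, payload, iterations=10_000):
    """Hypothetical harness: returns (cold_ms, warm_avg_ms) for one serializer."""
    gc.collect()  # full collection so earlier tests' garbage isn't charged to this one

    start = time.perf_counter()
    serialize(payload)  # first call: includes any one-off setup/caching cost
    cold_ms = (time.perf_counter() - start) * 1000

    start = time.perf_counter()
    for _ in range(iterations):
        serialize(payload)  # steady-state calls after warm-up
    warm_avg_ms = (time.perf_counter() - start) * 1000 / iterations
    return cold_ms, warm_avg_ms


sample = {"Ints": list(range(1, 11)), "Name": "test"}  # hypothetical payload
cold, warm = benchmark(json.dumps, sample)
print(f"cold: {cold:.4f} ms, steady-state: {warm:.6f} ms per call")
```

Reporting the cold and steady-state numbers separately makes the warm-up cost visible instead of folding it into the average.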

@John Cole – sure, I’ll include it in the benchmarks; it sounds like a good addition to the tests.

@Justin – Thanks for the suggestions!

“track only time spent on serialization” – the amount of stress a serializer exerts on the GC, and the consequent time spent in GC, is also an important performance characteristic that should be taken into account. But it might add a lot of value to see both times (GC time + serialization time). I’ll look into collecting GC data for each test; it might reveal some interesting stories.

“full GC before each test” – good idea, though I think it might make more sense to do the full GC at the end of each test and include the GC time for that test for the same reason as above.

“separate initialization/setup time” – with a large number of serializations in each trial, repeated over 5 trials, the impact of the initialization time should become negligible, so I don’t think it’ll make any noticeable difference to the results.

Also, the tests are filtering out the min and max trial times, so if the first trial takes significantly longer because of the initialization, the times from that trial would not be taken into account when working out the final average.

Once again, thanks for the suggestions, they’re much appreciated :-)
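The averaging described in the reply above, dropping the fastest and slowest of the 5 trials and averaging the rest, amounts to a simple trimmed mean. A minimal sketch; the trial times are made-up numbers, used only to show how a slow first (warm-up) trial gets excluded.

```python
def trimmed_average(trial_times):
    """Average of the trial times after discarding one minimum and one maximum."""
    if len(trial_times) < 3:
        raise ValueError("need at least 3 trials to drop min and max")
    ordered = sorted(trial_times)
    kept = ordered[1:-1]  # drop exactly one min and one max
    return sum(kept) / len(kept)


# Hypothetical timings (ms); the first trial includes one-off initialization cost
trials = [950.0, 610.0, 600.0, 620.0, 590.0]
print(trimmed_average(trials))  # 610.0
```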


Hi!

Thanks a lot for these performance studies.

Could you specify the versions you used for your tests?

For example, the Newtonsoft.Json library is currently at 5.0.5, ServiceStack at 3.9.48 (on NuGet) and fastJson at 2.0.15, etc.

All the best!

@Gourdet – you can refer to the main benchmarks page (https://theburningmonk.com/benchmarks/); I’ve listed the specific version numbers there :-)

OK, thanks for your answer and the link.

All the best!