Yan Cui


Day three of QCon London was a treat. With full-day tracks on architecture and microservices, it presented some nice challenges in deciding what to see during the day.

My favourite talk of the day was Randy Shoup’s “Service Architectures at Scale: Lessons from Google and eBay”.

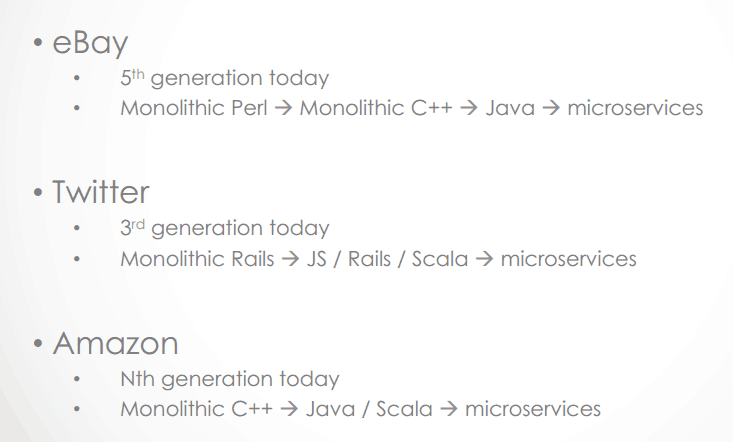

Randy kicked off the session by identifying a common trend in the architecture evolution at some of the biggest internet companies.

An ecosystem of microservices also differs from its monolithic counterpart in that it tends to organically form many layers of dependencies rather than fall into the strict tiers of a hierarchy.

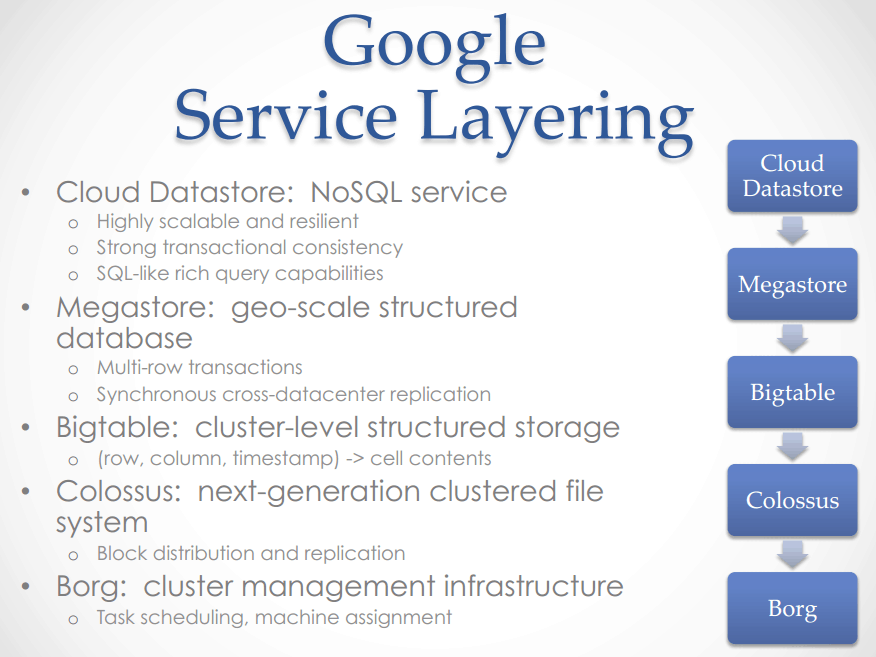

At Google, there has never been a top-down design approach to building systems, but rather an evolutionary process akin to natural selection: services survive by justifying their existence through usage, or they are deprecated. What looks like clean layering by design is actually an emergent property of this approach.

Services are built from the bottom up, but you can still end up with a clean, clear separation of concerns.
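To make the idea of emergent layering a bit more concrete, here’s a small Python sketch (my own illustration, not something from the talk). The service names and the `DEPENDENCIES` graph are hypothetical. Nobody assigns a tier up front; each service’s layer is simply derived from the longest chain of dependencies beneath it, assuming the graph has no cycles.

```python
# Hypothetical services and the services each one calls. Nobody assigned
# tiers up front; each team simply depends on whatever it finds useful.
DEPENDENCIES: dict[str, list[str]] = {
    "web-frontend": ["search", "profile"],
    "search": ["index", "profile"],
    "profile": ["storage"],
    "index": ["storage"],
    "storage": [],
}


def emergent_layers(deps: dict[str, list[str]]) -> dict[int, list[str]]:
    """Group services by the layer that emerges from their dependencies.

    A service's layer is the length of the longest dependency chain below it;
    services with no dependencies sit at layer 0. Assumes an acyclic graph.
    """
    memo: dict[str, int] = {}

    def depth(name: str) -> int:
        if name not in memo:
            below = deps.get(name, [])
            memo[name] = 0 if not below else 1 + max(depth(d) for d in below)
        return memo[name]

    layers: dict[int, list[str]] = {}
    for name in deps:
        layers.setdefault(depth(name), []).append(name)
    return dict(sorted(layers.items()))


print(emergent_layers(DEPENDENCIES))
```

Note that `web-frontend` calls `profile` directly, skipping the layer `search` sits in, which a strict tiered design would forbid; a layering still emerges from the graph regardless.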

At Google, there are no “architect” roles, nor is there a central approval process for technology decisions. Most technology decisions are made within the team, so they’re empowered to make the decisions that are best for them and their service.

This is in direct contrast to how eBay operated early on, where there was an architecture review board which acted as a central approval body.

Even without a centralized control body, Google proved that it’s still possible to achieve standardization across the organization.

Within Google, communication methods (e.g. network protocols, data formats, a structured way of expressing interfaces, etc.) as well as common infrastructure (source control, monitoring, alerting, etc.) are standardized by encouragement rather than enforcement.

By the sound of it, best practices and standards emerge from a consensus-based approach within teams and then spread throughout the organization via:

- encapsulation in shared/reusable libraries;

- support for these standards in underlying services;

- code reviews (word of mouth);

- and, most importantly, the ability to search all of Google’s code to find existing examples.

One drawback of following existing examples is the possibility of random anchoring: someone at one point made a decision to do things one way, and that becomes the anchor for everyone who finds the example thereafter.

Whilst the surface areas of services are standardized, the internals of the services are not, leaving developers to choose:

- programming language (C++, Go, Python or Java)

- frameworks

- persistence mechanisms

Rather than deciding up front how to split things into microservices, teams tend to implement capabilities in existing services first to solve specific problems.

If a capability proves successful, it’s extracted and generalized as a service of its own, with a new team formed around it. Many popular services today started life this way: Gmail, App Engine and BigTable, to name a few.

On the other hand, a failed service (e.g. Google Wave) is deprecated, but its reusable technology is repurposed and the people on the team are redeployed to other teams.

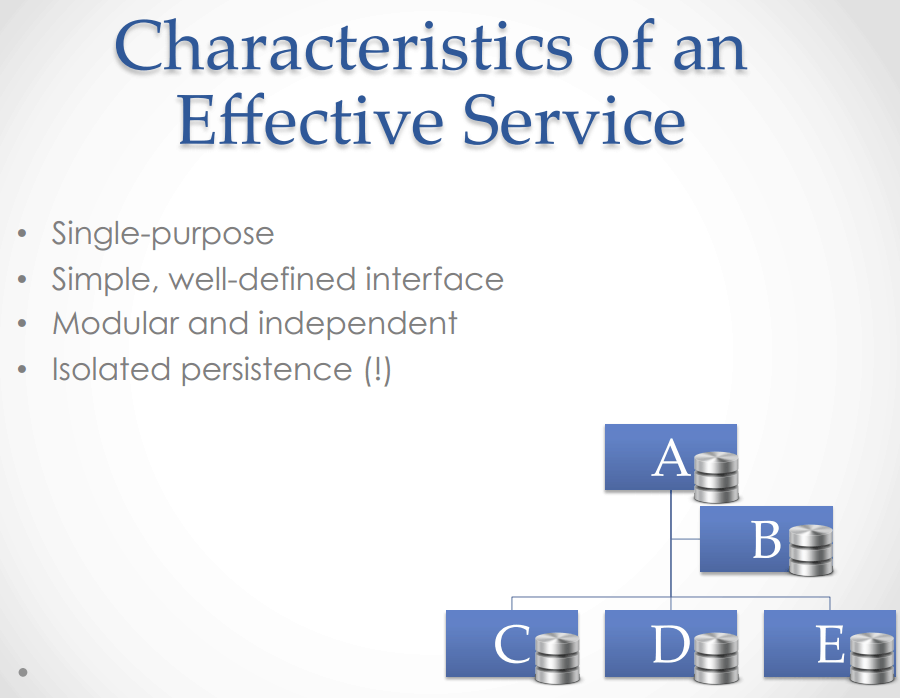

This is a fairly self-explanatory slide and an apt description of what a microservice should look like.

As the owner of a service, your primary focus should be the needs of your clients, and meeting those needs at minimum cost and effort. This includes leveraging common tools, infrastructure and existing services, as well as automating as much as possible.

The service owner should have end-to-end ownership, and the mantra should be “You build it, you run it”.

The teams should have autonomy to choose the right technology and be held responsible for the results of those choices.

Your service should have a bounded context; its primary focus should be on its clients and the services that depend on it.

You should not have to worry about the complete ecosystem or the underlying infrastructure, and this reduced cognitive load also means the teams can be extremely small (usually 3-5 people) and nimble. Having a small team also bounds the amount of complexity that can be created (i.e. use Conway’s law to your advantage).

Treat service-to-service relationships as vendor-client relationships, with clear ownership and division of responsibility.

To give people the right incentives, you should charge for usage of the service. This aligns the economic incentives on both sides to optimize for efficiency.
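A minimal sketch of what usage-based charging might look like, assuming a flat per-call price expressed in whole pence. The `UsageMeter` class and its pricing model are hypothetical, not how Google or eBay actually bill internally.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class UsageMeter:
    """Hypothetical per-client meter for an internal service.

    price_per_call_pence is an illustrative internal price, not a real figure.
    Integer pence avoid floating-point rounding in the bill.
    """
    price_per_call_pence: int
    calls: Counter = field(default_factory=Counter)

    def record(self, client: str, n: int = 1) -> None:
        # Count every call against the client that made it.
        self.calls[client] += n

    def invoice(self) -> dict[str, int]:
        # Each client pays in proportion to what it actually used, so a client
        # making redundant calls sees the cost directly, and the vendor has a
        # reason to keep its own cost per call down.
        return {client: n * self.price_per_call_pence for client, n in self.calls.items()}


meter = UsageMeter(price_per_call_pence=2)
meter.record("search", 150)
meter.record("ads", 10)
print(meter.invoice())
```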

With a vendor-client relationship (with SLAs and all) you’re incentivized to reduce the risk that comes with making changes, pushing you towards small incremental changes and solid development practices (code reviews, automated tests, etc.).

You should never break your clients’ code, hence it’s important to keep backward/forward compatibility of interfaces.
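One common way to keep interfaces compatible in both directions is the tolerant reader pattern: the reader ignores fields it doesn’t recognise (so it keeps working when a newer producer adds fields) and supplies defaults for fields that are missing (so it still accepts messages from older producers). The sketch below assumes a JSON payload and a hypothetical `User` record with a recently added `locale` field; the talk didn’t prescribe any particular format.

```python
import json
from dataclasses import dataclass

DEFAULT_LOCALE = "en-GB"  # hypothetical default for the newer optional field


@dataclass(frozen=True)
class User:
    user_id: str
    name: str
    # Added in a later version; defaulted so older payloads still parse.
    locale: str = DEFAULT_LOCALE


def parse_user(payload: str) -> User:
    """Tolerant reader: pick out known fields, ignore the rest."""
    data = json.loads(payload)
    return User(
        user_id=data["user_id"],
        name=data["name"],
        # Missing in messages from older producers, so fall back to a default.
        locale=data.get("locale", DEFAULT_LOCALE),
    )
    # Unknown keys (e.g. from a newer producer) are never read, so they
    # cannot break this reader.


print(parse_user('{"user_id": "u1", "name": "Ann"}'))
print(parse_user('{"user_id": "u2", "name": "Bob", "locale": "fr-FR", "avatar_url": "x"}'))
```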

You should provide an explicit deprecation policy and give your clients strong incentives to move off old versions.
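A deprecation policy can be as simple as a published table of dates. Here’s a hypothetical sketch: the `POLICY` table, the version names and the dates are all made up for illustration. Callers on a deprecated version get a warning telling them when it will be retired, and once the sunset date passes their calls are rejected, which gives clients a clear deadline to migrate.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class VersionPolicy:
    deprecated_on: Optional[date]  # None means the version is current
    sunset_on: Optional[date]      # on/after this date, calls are rejected


# Hypothetical policy table for one service's API versions.
POLICY = {
    "v1": VersionPolicy(deprecated_on=date(2015, 1, 1), sunset_on=date(2015, 7, 1)),
    "v2": VersionPolicy(deprecated_on=None, sunset_on=None),
}


def check_version(version: str, today: date) -> tuple[str, Optional[str]]:
    """Return (status, message) for a client calling `version` on `today`."""
    policy = POLICY[version]
    if policy.sunset_on is not None and today >= policy.sunset_on:
        return "retired", f"{version} was retired on {policy.sunset_on.isoformat()}"
    if policy.deprecated_on is not None and today >= policy.deprecated_on:
        deadline = policy.sunset_on.isoformat() if policy.sunset_on else "it is retired"
        return "deprecated", f"{version} is deprecated; please migrate before {deadline}"
    return "supported", None


print(check_version("v1", date(2015, 3, 10)))
```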

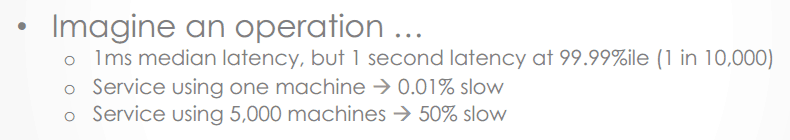

Services at scale are highly exposed to performance variability.

Tail latencies (e.g. 95th and 99th percentile latencies) are much more important than average latencies, because it’s easier for your clients to program against consistent performance.
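To see why averages hide the problem, here’s a small sketch with made-up latencies (in milliseconds) where 2% of requests are very slow. It uses a simple nearest-rank percentile; monitoring systems often interpolate differently, so exact values can vary slightly between tools.

```python
import math
import statistics

# Hypothetical latencies: 90 fast requests, 8 slower ones, 2 very slow ones.
LATENCIES_MS = [10.0] * 90 + [50.0] * 8 + [1000.0] * 2


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need non-empty samples and 0 < p <= 100")
    ordered = sorted(samples)
    # Smallest value such that at least p% of samples are <= it.
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


print("mean:", statistics.mean(LATENCIES_MS))
for p in (50, 95, 99):
    print(f"p{p}:", percentile(LATENCIES_MS, p))
```

The mean of 33 ms looks healthy, yet 1 in 50 requests takes a full second, which is exactly what the 99th percentile exposes.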

Services at scale are also highly exposed to failures, and disruptions are 10x more likely to come from human error than from software/hardware failures.

You should have resilience in depth, with redundancy for hardware failures, and the capability to deploy incrementally:

- Canary releases

- Staged rollouts

- Rapid rollbacks
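Here’s a rough sketch of how canary releases, staged rollouts and rapid rollbacks can fit together. The stage percentages, the error-rate comparison and the `tolerance` value are my own assumptions for illustration, not details from the talk. Users are hashed into stable buckets, so the same user stays on the new version as the rollout widens.

```python
import hashlib

STAGES = [1, 10, 50, 100]  # hypothetical % of users on the new version


def bucket(user_id: str) -> int:
    """Stable bucket in [0, 100), so a user's assignment never flips around."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def uses_new_version(user_id: str, rollout_percent: int) -> bool:
    # Widening the rollout only ever adds users; nobody already on it drops off.
    return bucket(user_id) < rollout_percent


def next_stage(current_percent: int, canary_error_rate: float,
               baseline_error_rate: float, tolerance: float = 0.001) -> int:
    """Advance if the canary is healthy, otherwise roll back to 0%."""
    if canary_error_rate > baseline_error_rate + tolerance:
        return 0  # rapid rollback: everyone goes back to the old version
    later = [s for s in STAGES if s > current_percent]
    return later[0] if later else current_percent


print(next_stage(1, canary_error_rate=0.010, baseline_error_rate=0.010))
print(next_stage(10, canary_error_rate=0.050, baseline_error_rate=0.010))
```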

eBay also uses ‘feature flags’ to decouple code deployment from feature deployment.
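A minimal feature-flag sketch, assuming a hypothetical in-memory `FeatureFlags` store and a made-up `bulk-discount` feature; in practice flags would usually be loaded from configuration so they can be flipped without a redeploy. The new code path ships with the deployment but stays dormant until the flag is turned on.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlags:
    """Hypothetical in-memory flag store (real systems load this from config)."""
    enabled: set[str] = field(default_factory=set)

    def is_on(self, name: str) -> bool:
        return name in self.enabled


def checkout_total(prices_pence: list[int], flags: FeatureFlags) -> int:
    total = sum(prices_pence)
    # The discount logic is deployed with this release but only runs once
    # someone turns the flag on, decoupling deployment from release.
    if flags.is_on("bulk-discount") and len(prices_pence) >= 3:
        total = total * 90 // 100  # 10% off, rounded down to whole pence
    return total


print(checkout_total([100, 100, 100], FeatureFlags()))
print(checkout_total([100, 100, 100], FeatureFlags({"bulk-discount"})))
```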

And, of course, monitoring.

Finally, here are some anti-patterns to look out for:

- Mega-service – a service that does too much, à la mini-monolith

- Shared persistence – breaks encapsulation, encourages ‘backdoor’ violations and can lead to hidden coupling between services (think integration via databases…)
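To illustrate the alternative to shared persistence, here’s a hypothetical sketch where an `OrderService` owns its storage and a `BillingService` only goes through its public interface. The class names and the in-memory dictionary standing in for a database are assumptions for illustration. Because billing never touches `_rows`, the order service can change its storage layout freely; with a shared database, the same change could silently break every service reading the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderSummary:
    """The public contract other services depend on."""
    order_id: str
    total_pence: int


class OrderService:
    """Owns its persistence; the storage layout is a private detail."""

    def __init__(self) -> None:
        # Stand-in for the service's private database.
        self._rows: dict[str, dict] = {}

    def place_order(self, order_id: str, line_items_pence: list[int]) -> None:
        self._rows[order_id] = {"items": line_items_pence, "status": "placed"}

    def get_order(self, order_id: str) -> OrderSummary:
        row = self._rows[order_id]
        return OrderSummary(order_id, sum(row["items"]))


class BillingService:
    """Depends on OrderService's interface, never on its tables."""

    def __init__(self, orders: OrderService) -> None:
        self._orders = orders

    def amount_due(self, order_id: str) -> int:
        return self._orders.get_order(order_id).total_pence


orders = OrderService()
orders.place_order("o1", [250, 750])
print(BillingService(orders).amount_due("o1"))
```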

Gamesys Social

As I sat through Randy’s session, I was surprised and proud to find that we have employed many similar practices in my team (the backend team at Gamesys Social), a seal of approval if you like:

- not having architect roles, instead using a consensus-based approach to make technology decisions

- standardization via encouragement

- allowing you to experiment with approaches/tech without penalizing you when things don’t pan out (the learning is itself a valuable output of the experiment)

- organic growth of microservices (proving capabilities in existing services first before splitting them out and generalizing them)

- placing high value on automation

- autonomy for the team, and the DevOps philosophy of “you build it, you run it”

- deployment practices – canary release, staged rollouts, use of feature flags and our twist on the blue-green deployment

I’m currently looking for some functional programmers to join the team, so if this sounds like the sort of environment you would like to work in, then have a look at our job spec and apply!

Links

We’re hiring a Functional Programmer!
