Yan Cui



This talk by Kyle Kingsbury (aka @aphyr on Twitter) was my favourite at CraftConf, and gave us an update on the state of consistency in MongoDB, Elasticsearch and Aerospike.

Kyle opened by describing how we so often build applications on top of databases, queues, streams, etc., and how the systems we depend on are really quite flammable (hence the tyre analogy).

..as anybody who’s ever used any database knows, everything is on fire all the time! But our goal is to pretend, and ensure that everything still works… we need to isolate the system from failures.

– Kyle Kingsbury

This led nicely into the types of failure that the rest of the talk focused on – split brain, broken foreign keys, etc. The purpose of his Jepsen project is to analyse how a system behaves in the face of these failures.

A system has boundaries, and these boundaries should be protected by a set of invariants – e.g. if you put something into a queue then you should be able to read it out afterwards.
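To make the queue invariant concrete, here is a minimal Python sketch. It is not taken from the talk or from Jepsen; the function name and the three inputs (what clients attempted to enqueue, what the queue acknowledged, and what was eventually read back) are assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueueReport:
    lost: frozenset        # acknowledged enqueues that were never read back
    unexpected: frozenset  # items read back that nobody tried to enqueue

    @property
    def ok(self) -> bool:
        return not self.lost and not self.unexpected


def check_queue_invariant(attempted, acknowledged, dequeued) -> QueueReport:
    """Check 'if you put something in, you can read it out afterwards'."""
    attempted = frozenset(attempted)
    acknowledged = frozenset(acknowledged)
    dequeued = frozenset(dequeued)
    return QueueReport(
        lost=acknowledged - dequeued,
        unexpected=dequeued - attempted,
    )


# Item 2 was acknowledged but never came back out, so the invariant is broken.
# Item 3 was never acknowledged but did arrive, which is allowed: the
# acknowledgement may simply have been lost on the way back to the client.
report = check_queue_invariant(
    attempted=[1, 2, 3], acknowledged=[1, 2], dequeued=[1, 3]
)
```

Only acknowledged writes count as lost. An unacknowledged write may or may not have taken effect, so the check has to tolerate both outcomes.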

The rest of the talk splits into two halves.

The 1st half builds up a model for talking about consistency:

and the 2nd half looks at a number of specific databases – Elasticsearch, MongoDB and Aerospike – to see how they stack up against the consistency guarantees they claim to offer.

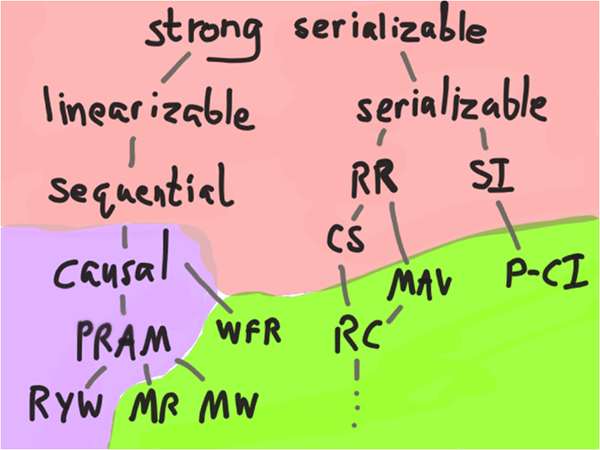

Rather than trying to explain them here and doing a bad job of it, I suggest you read Kyle’s post on the different consistency models shown in his diagram.

It’s a 15-20 minute read, after which you might also want to read these two posts:

Instead I’ll just list a few key points I noted during the session:

- the CAP theorem tells us that a linearizable system cannot be totally available when the network partitions

- for the consistency models in red, you can’t have total availability (the A in CAP) during a partition

- for total availability, look to the area

- weaker consistency models are more available in case of failure

- weaker consistency models are also less intuitive

- weaker consistency models are faster because they require less coordination

- weak is not the same as unsafe – safety depends on what you’re trying to do, e.g. eventual consistency is ok for counters, but for claiming unique usernames you need linearizability
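The last point can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than anything shown in the talk: `GCounter` is a textbook grow-only counter, and `claim_username` is a hypothetical helper that decides using only one replica's local view, which is what happens when both sides of a partition keep accepting writes.

```python
class GCounter:
    """Grow-only counter: one slot per node, merged by taking the max."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.counts = {}

    def increment(self, n=1):
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + n

    def merge(self, other):
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    def value(self):
        return sum(self.counts.values())


def claim_username(replica, username, user_id):
    """Claim a name using only this replica's local view (no coordination)."""
    if username in replica:
        return False
    replica[username] = user_id
    return True


# Counters: both sides of a partition keep counting, then converge on merge.
a, b = GCounter("a"), GCounter("b")
a.increment(2)
b.increment(3)
a.merge(b)
b.merge(a)
counter_values = (a.value(), b.value())

# Usernames: both sides accept the same name, and both users were told "yes".
left, right = {}, {}
both_succeeded = (
    claim_username(left, "alice", "user-1"),
    claim_username(right, "alice", "user-2"),
)
conflict = left["alice"] != right["alice"]
```

The counter merge is safe to apply in any order and any number of times, so the replicas converge without coordinating. The username claim has no such merge: once both users have been told the name is theirs, one of those answers has to be taken back.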

Kyle’s Jepsen client uses a black-box testing approach to test database systems (i.e. it only looks at results from a client’s perspective) whilst inducing network partitions to see how the database behaves during a partition.

The clients generate random operations and apply them to the system. Since the clients all run on the same JVM, you can use linearizable data structures to record a history of the results as the clients received them, and then use that history to detect consistency violations.
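As a rough illustration of the idea (Jepsen itself is not written in Python, and this is not its checker), the sketch below records invocations and completions in a single lock-protected list and then scans that history for stale reads. The `Event` shape, the `History` class and `find_stale_reads` are all assumed names. The check also assumes that writes carry strictly increasing values and are issued one after another, so it catches only one kind of violation and is far weaker than a real linearizability checker.

```python
import threading
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    process: int           # which client issued the operation
    kind: str              # "invoke" (request sent) or "ok" (response received)
    f: str                 # "read" or "write"
    value: Optional[int]   # value written, or value returned by a read


class History:
    """Append-only log shared by all client threads in one process."""

    def __init__(self):
        self._lock = threading.Lock()
        self._events = []

    def record(self, event):
        with self._lock:
            self._events.append(event)

    def events(self):
        with self._lock:
            return list(self._events)


def find_stale_reads(events):
    """Return reads that saw less than a write acknowledged before they began."""
    acked = None   # highest value whose write has been acknowledged so far
    floor = {}     # process -> value of `acked` when its read was invoked
    stale = []
    for e in events:
        if e.f == "write" and e.kind == "ok":
            acked = e.value if acked is None else max(acked, e.value)
        elif e.f == "read" and e.kind == "invoke":
            floor[e.process] = acked
        elif e.f == "read" and e.kind == "ok":
            lowest_allowed = floor.pop(e.process, None)
            if lowest_allowed is not None and (
                e.value is None or e.value < lowest_allowed
            ):
                stale.append(e)
    return stale
```

A client would call `record` with an "invoke" event just before sending a request and with an "ok" event just after the response arrives. Because every client appends to the same list, a write's "ok" appearing before a read's "invoke" means the write really had completed before the read started.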

Jepsen’s use of randomly generated operations is similar to the generative testing approach used by QuickCheck. Scott Wlaschin has two excellent posts to help you get started with FsCheck, an F# port of QuickCheck.
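For a feel of what generative testing looks like, here is a hand-rolled Python sketch that uses only the standard library. It does not use the QuickCheck or FsCheck APIs and does no shrinking of failing cases. `DictStore`, `LossyStore` and the helper functions are hypothetical names invented for this example: random operations are applied both to a store and to a plain dict acting as the model, and the first disagreement is reported.

```python
import random


class DictStore:
    """A trivially correct in-memory key-value store."""

    def __init__(self):
        self._data = {}

    def put(self, key, value):
        self._data[key] = value

    def get(self, key):
        return self._data.get(key)


class LossyStore(DictStore):
    """Hypothetical buggy store: silently drops every third write."""

    def __init__(self):
        super().__init__()
        self._puts = 0

    def put(self, key, value):
        self._puts += 1
        if self._puts % 3 != 0:
            super().put(key, value)


def random_ops(rng, n):
    """Generate n random put/get operations over a small key space."""
    ops = []
    for _ in range(n):
        key = rng.choice("abc")
        if rng.random() < 0.5:
            ops.append(("put", key, rng.randint(0, 9)))
        else:
            ops.append(("get", key, None))
    return ops


def first_divergence(make_store, ops):
    """Index of the first get that disagrees with the model, or None."""
    store, model = make_store(), {}
    for i, (f, key, value) in enumerate(ops):
        if f == "put":
            store.put(key, value)
            model[key] = value
        elif store.get(key) != model.get(key):
            return i
    return None


def check(make_store, trials=200, seed=0):
    """Return a failing sequence of operations, or None if every trial passed."""
    rng = random.Random(seed)
    for _ in range(trials):
        ops = random_ops(rng, rng.randint(1, 30))
        i = first_divergence(make_store, ops)
        if i is not None:
            return ops[: i + 1]
    return None
```

Calling `check(DictStore)` should find nothing, whereas `check(LossyStore)` should return a sequence of operations ending in the read that exposed a dropped write. A real property-based testing library would then shrink that sequence down to a minimal failing case.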

“MongoDB is not a bug, it’s a database”

– Kyle Kingsbury

And thus began a very entertaining second half of the talk, as Kyle shared the results of his tests against MongoDB, Elasticsearch and Aerospike.

TL;DR

None of the databases were able to meet the consistency level they claim to offer, but at least Elasticsearch is honest about it and doesn’t promise you the moon.

Again, seeing as Kyle has recently written about these results in detail, I won’t repeat them here. The talk doesn’t go into quite as much depth, so if you have time I recommend reading his posts:

Whilst it was fun watching Kyle shoot holes through these database vendors’ consistency claims, and some of the fun-poking was really quite funny (and well deserved on the vendors’ part), if there’s one thing you should take away from Kyle’s talk, and from his work with Jepsen in general, it is this: don’t drink the kool-aid.

Database vendors have a history of over-selling and, at times, outright false marketing. As developers, we have the means to verify their claims, so the next time you hear a claim that sounds too good to be true, verify it. Don’t drink the kool-aid.

Links

- Jepsen project (github)

- Call me maybe: MongoDB stale reads

- Call me maybe: Aerospike

- Call me maybe: Elasticsearch 1.5.0

- Call me maybe: Elasticsearch 1.1.0

- Linearizability vs Serializability

- Strong consistency models

- You can’t sacrifice partition tolerance

- Distributed systems for fun and profit
