Yan Cui

I help clients go faster for less using serverless technologies.

(Update 2015/07/21 : read the next post in this series to learn about the places where implicit boxing happens with value types and how you can prevent them)

I chanced upon Sasha Goldshtein’s excellent book, Pro .Net Performance : Optimize Your C# Application, a few years back and I thoroughly enjoyed it.

Even though it has been almost 3 years since I read it and I have forgotten many of the things I learnt already but I still remember this line very clearly:

…in fact, even a class with no instance fields will occupy 12 bytes when instantiated…

this is of course, limited to a 32-bit system. On a 64-bit system, the smallest reference type instance will take up 24 bytes of memory!

How?!?

The reason for this is due to the way .Net objects are laid out in memory where you have:

- 4 bytes for Object Header Word;

- 4 bytes for Method Table Pointer; and

- min of 4 bytes for instance fields

When your class has only a byte field it’ll still take up 4 bytes of space for instance fields because objects have to align with 4-byte multiples. Even when your class has no instance fields, it’ll still take up 4 bytes. Therefore, on a 32-bit system, the smallest reference type instance will be 12 bytes.

On a 64-bit system, a word is 8 bytes instead of 4, and objects are aligned to the nearest 8-byte multiple, so the smallest reference type instance in this case will be 24 bytes.

sidebar : The Object Header Word and Method Table Pointer are used by the JIT and CLR. The book goes into a lot of detail on the structure and purpose of these, which for this blog post we’ll ignore. If you’re interested in learning more about them, go buy the book, it’ll be money well spent.

Reference vs Value Type

There are plenty to talk about when it comes to reference vs value type, including:

- stack vs heap allocation;

- default equality semantics, i.e. compare-by-ref vs compare-by-value;

- pass-by-value vs pass-by-ref;

- …

For the purpose of this post let’s focus on how they differ in terms of memory consumption and cache friendliness when you have a large array of each.

Memory Consumption

Suppose you have a Point2D class with only X and Y integer fields, each instance of this type will occupy a total of 16 bytes (including 8 bytes for X and Y) in the heap. Taking into account a 4 byte reference pointer (again, on a 32-bit system), it brings our total investment per instance of Point2D type to 20 bytes!

If you have an array with 10M instances of Point2D then you’ll have committed 190MB of memory for this array!

On the other hand, if Point2D was a value type then each instance would only take up 8 bytes for the X and Y values, and they’ll be tightly packed into the array without each needing an additional 4 bytes for reference pointer. Overall the amount of memory you’re committing to this array would drop to 76MB.

Cache Friendliness

Whilst there are no inherent differences in the speed of accessing stack vs heap allocated memory (they are just different ranges of addresses in the virtual memory after all) there are a number of performance-related considerations.

Stack allocated memory does not incur GC overhead

The stack is self-managed in the sense that when you leave a scope you just move the pointer back to the previous position and you’d have “deallocated” the previously allocated memory.

With heap allocated memory you incur the overhead of a rather sophisticated generational GC, which as part of a collection needs to move surviving objects around to compact the memory space (which becomes more and more expensive as you move from gen 0 to 2).

sidebar : I remember reading a post a few years ago that discussed how the StackOverflow guys went through their entire codebase and converted as many classes to struct as they can in order to reduce the amount of latency spikes on their servers due to GC pauses. Whilst I’m not advocating for you to do the same, just to illustrate that GC pauses is a common problem on web servers. The Background GC mode introduced in recent .Net releases would have reduced the frequency of these pauses but sensible uses of value types would still help in this regard.

On the stack, temporal locality implies spatial locality and temporal access locality

This means that, objects that are allocated close together in time are also stored close together in space. Further more, objects allocated close in time (e.g. inside the same method) are also likely to be used close together.

Both spatial and temporal access locality plays nicely with how the cache works (i.e. fewer cache misses) and how OS paging works (i.e. fewer virtual memory swaps).

On the stack, memory density is higher

Since value types don’t have the overheads with reference types – Object Header Word and Method Table Pointer – so you’re able to pack more objects in the same amount of memory.

Higher memory density leads to better performance because it means fewer fetches from memory.

On modern hardware, the entire thread stack can fit into CPU cache

When you spawn a new thread, it’s allocated with 1MB of stack space. Whereas CPUs nowadays comes with a much bigger L3 cache (e.g. Nehalem has up to 24MB L3 cache) so the entire stack can fit into the L3 cache which is a lot faster to access compared to main memory (have a look at this talk from Gael Fraiteur, creator of PostSharp, to see just how access time differs).

For better or worse, modern hardware is built to take advantage of both spatial and temporal localities and these optimizations manifest themselves in how data is fetched from main memory in cache lines.

Consider what happens at a hardware level when you iterate through an array of 10 million Point2D objects. If Point2D was a reference type, then each time you iterate over an instance of it in the array:

- CPU will fetch a cache line (typically 64 bytes) starting from where the array element is

- it’ll access the memory to get the reference pointer (4 bytes) for the object in the heap

- it’ll discard the rest of the cache line

- it’ll fetch another cache line (again, 64 bytes) starting from where the object is

- it’ll read the object and do whatever it is you asked it to do

- and then it’ll do the same for the next element in the array and so on…

notice how much work goes wasted here due to reference jumping?

On the other hand, if Point2D was a value type with only X and Y values (8 bytes):

- CPU will fetch a cache line (64 bytes) starting from where the array element is

- it’ll read the object (8 bytes) and do its work

- it’ll try to get the next object, realizing that it’s already in the cache (what a lucky day!)

- it’ll read the next object (8 bytes) from the existing cache line and so on

the CPU is able to read 8 objects from one fetch vs. 2 fetches per object!

To see how this cache friendliness translate to performance numbers we can easily measure – execution time. Let’s consider this simple example:

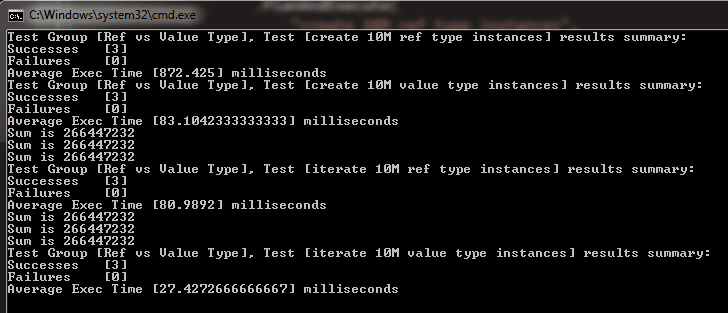

Here is the result of running the tests above in release mode:

or in tabular format:

|

create 10M objects |

iterate over 10M objects |

|

|

reference type |

872.425ms |

80.9892ms |

|

value type |

83.1042ms |

27.4273ms |

As well as the additional memory overhead, it’s also over 10x slower to create and 3x slower to iterate over an array of 10 million objects when they are defined as a reference type.

You might say “well, these are still only milliseconds!”, but for a web server that needs to deal with a large number of concurrent requests, these margins are very significant!

In some areas of software, such as high frequency trading or arbitrage trading, even nanoseconds can make a big difference to your bottom line.

sidebar : people are doing some really funky stuff in those areas to gain a slight edge over each other, including:

- building data centres right next to the exchange;

- using microwave to transmit data so that you receive data faster than exchanges connected by fiber optic cables;

- using FPGA to run your trading logic;

- etc.

It’s an area of finance that is genuinely quite interesting and I have heard some fascinating tales from friends who are working in these areas.

Conclusions

Using value types sensibly is a very powerful way to improve the performance of your .Net application – this is true for both C# and F#. Without going overboard, you should consider using structs if:

- the objects are small and you need to create lots of them;

- you need high memory density;

- memory consumption is a constraint;

- you need to iterate large arrays of them frequently

In addition, here are some tips to help you “get value types right”:

- make them immutable;

- override Equals (the one that takes an object as argument);

- overload Equals to take another instance of the same value type (e.g. Equals(Point2D other));

- overload operators == and !=

- override GetHashCode

In the next post we’ll look at some pitfalls wrt the use of value types, and why we have the best practices above.

Scott Meyers did a great talk on CPU cache at last year’s NDC Oslo and touched on other topics such as false sharing, etc. It’s a good starting point if you’re new to how CPU cache affects the performance of your code.

Martin Thompson (of the Disruptor fame) has an excellent blog where he writes about many related topics including:

- why memory access patterns are important

- memory barriers/fences

- false sharing

- CPU cache flushing fallacy

Martin’s posts use Java as example but many of the lessons are directly applicable in .Net too.

Links

- Beware of implicit boxing of value types

- Scott Meyers – CPU Cache and why you should care about it

- Machine Sympathy

- LMAX architecture

- Takeaways from “Multithreading beyond the lock keyword”

Whenever you’re ready, here are 3 ways I can help you:

- Production-Ready Serverless: Join 20+ AWS Heroes & Community Builders and 1000+ other students in levelling up your serverless game. This is your one-stop shop for quickly levelling up your serverless skills.

- I help clients launch product ideas, improve their development processes and upskill their teams. If you’d like to work together, then let’s get in touch.

- Join my community on Discord, ask questions, and join the discussion on all things AWS and Serverless.

nice post, I love that book as well, there’s loads of great info in it. You might also like this one http://www.writinghighperf.net/.

BTW the StackOverflow post you talk about is http://blog.marcgravell.com/2011/10/assault-by-gc.html.

The problem they had is that it’s expensive for the GC to scan a large array of reference types because it has to look at each one in turn (to check if it’s still live). But if you convert that to a large array of value types the GC can ignore the entire array as there’s nothing to collect

Thanks for the link, that was the post I was referring to. I also have that book by Ben Watson but never got around to actually reading it, will hopefully get to it at some point. If you’ve read both, any tips on which chapters to read first? I imagine there’s probably quite a bit of overlap between the two books.

Scanning a large array is a good example of where memory density and not needing to jump pointers (hence discarding an already fetched cache line) can help improve performance.

But array themselves are heap allocated so the GC will still need to collect the array itself though. That said, it’s far easier & cheaper to mark and sweep a continuous block of memory (array of value types) vs having to trace each of the elements too. In this regard, you also cause less fragmentation with a large array of value types too.

My understanding of the issue is that, when you have an array of value types the GC doesn’t need to scan it at all when looking for live objects, because all the items are value types (not references). So it’s during the mark/sweep part that the pauses happened, rather than the collection. But yes I agree with you about the memory and cache lines.

There’s a bit more in this post http://samsaffron.com/archive/2011/10/28/in-managed-code-we-trust-our-recent-battles-with-the-net-garbage-collector in the section “The worst possible abuse of the garbage collector”.

With regards to the books, there’s definitely a bit of overlap, although the one you’ve read probably goes a bit deeper in some areas (i.e. it features assembly code!). In Ben’s book the stuff on JIT and GC is a good read.

Ah, that’s what you meant by ‘scanning’, sorry I misunderstood your remark – I thought you meant they were ‘scanning’ the array in their application code and look for some ‘IsLive’ field/property on each object.

In which case we’re on the same page I think, that was also what I was meant by “it’s far easier & cheaper to mark and sweep a continuous block of memory (array of value types) vs having to trace each of the elements too”

Yeah your right, we’re talking about the same thing (sorry, I wasn’t precise with my terminology)

Pingback: Year in Review, 2015 | theburningmonk.com