Yan Cui



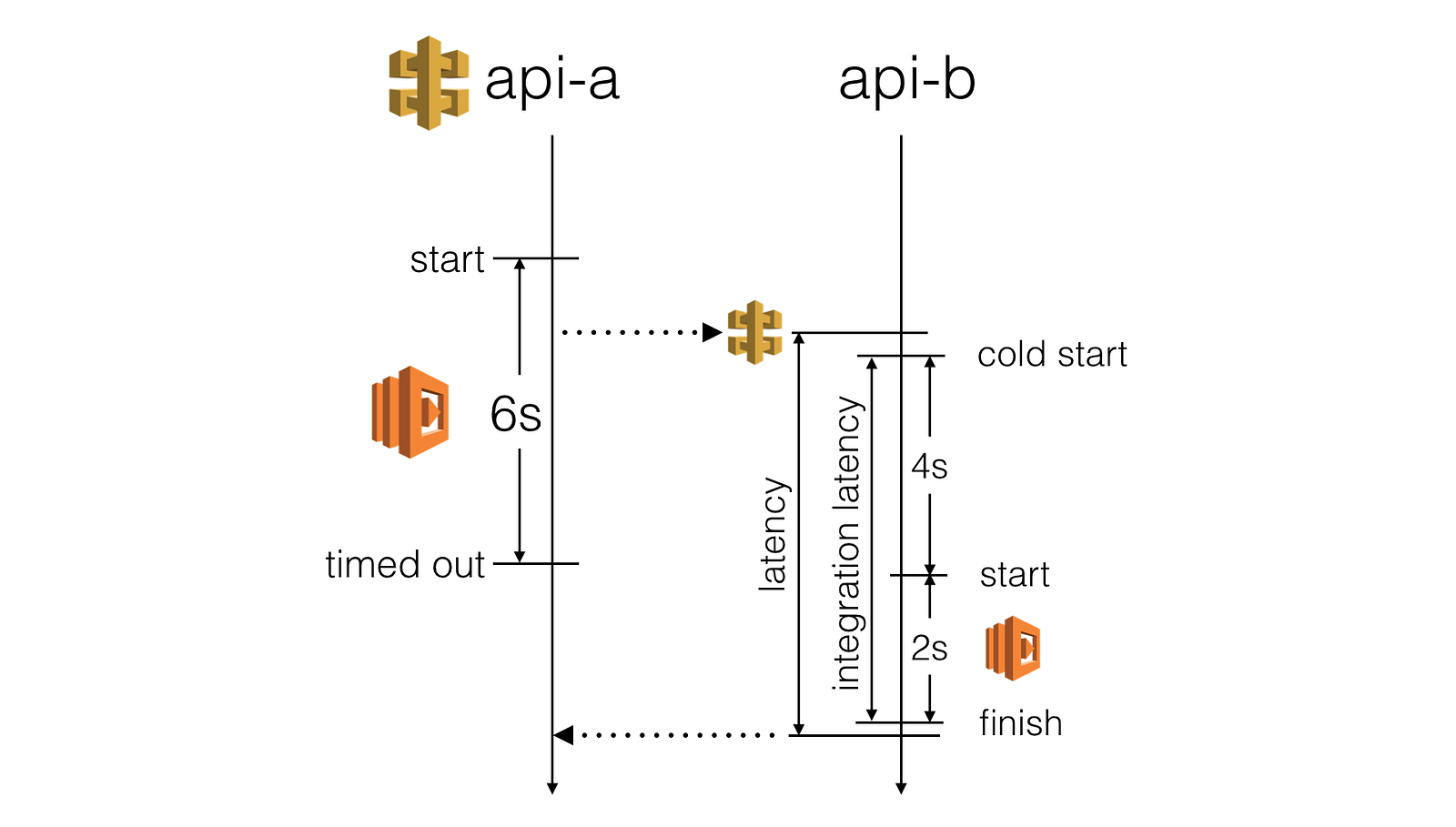

With API Gateway and Lambda, you’re forced to use relatively short timeouts on the server-side:

- API Gateway has a max timeout of 29s on all integration points by default

- Serverless framework uses a default of 6s for AWS Lambda functions

However, as you have limited influence over a Lambda function’s cold start time and have no control over the amount of latency overhead API Gateway introduces, the actual client-facing latency you’d experience from a calling function is far less predictable.

To prevent slow HTTP responses from causing the calling function to time out (and therefore impacting the user experience we offer), we should make sure we stop waiting for a response before the calling function times out.

“the goal of the timeout strategy is to give HTTP requests the best chance to succeed, provided that doing so does not cause the calling function itself to err”

– me

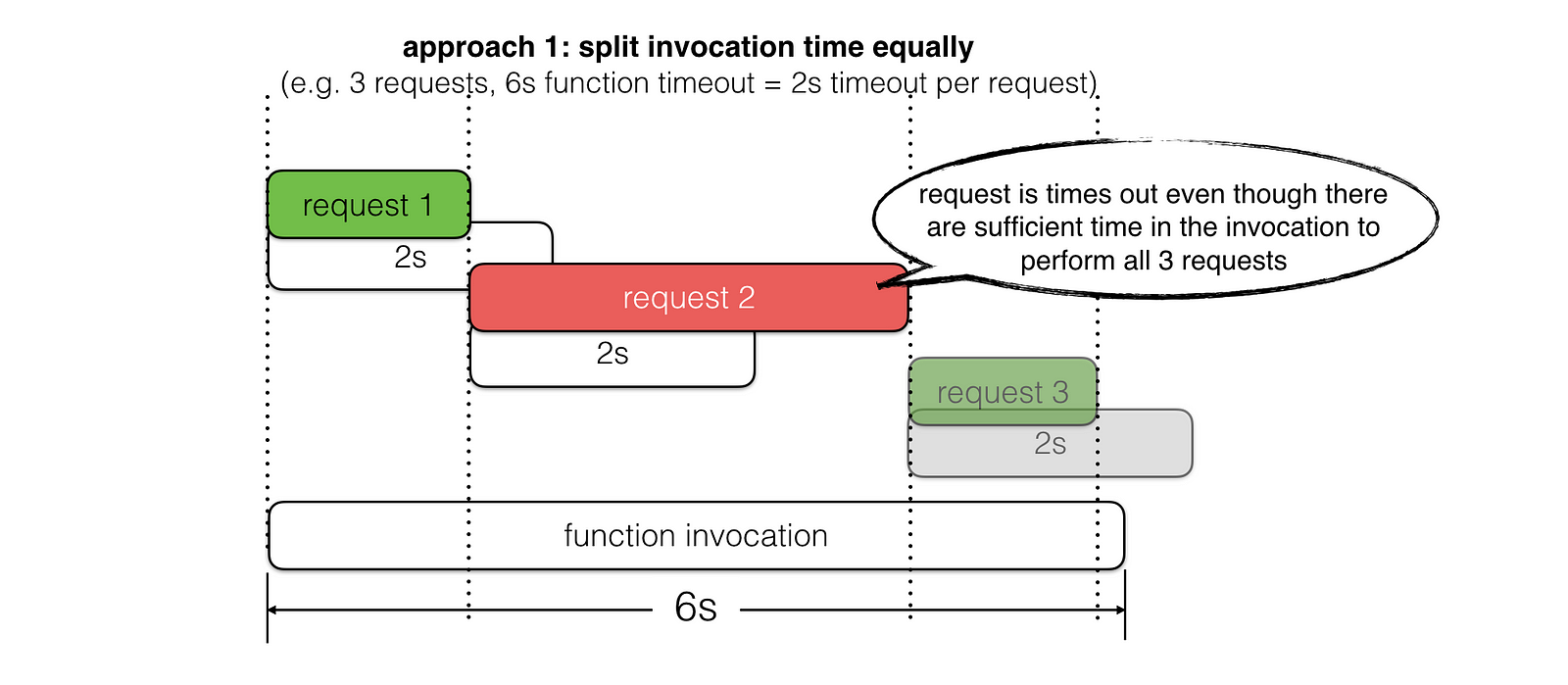

Most of the time, I see folks use fixed timeout values (either hard-coded or specified via config), which are often tricky to choose:

- too short and you won’t give the request the best chance to succeed, e.g. there’s 5s left in the invocation but we had set timeout to 3s

- too long and you run the risk of letting the request time out the calling function, e.g. there’s 5s left in the invocation but we had set timeout to 6s

This challenge of choosing the right timeout value is further complicated by the fact that we often perform more than one HTTP request during a function invocation – e.g. read from DynamoDB, talk to some internal API, then save changes to DynamoDB equals a total of 3 HTTP requests in one invocation.

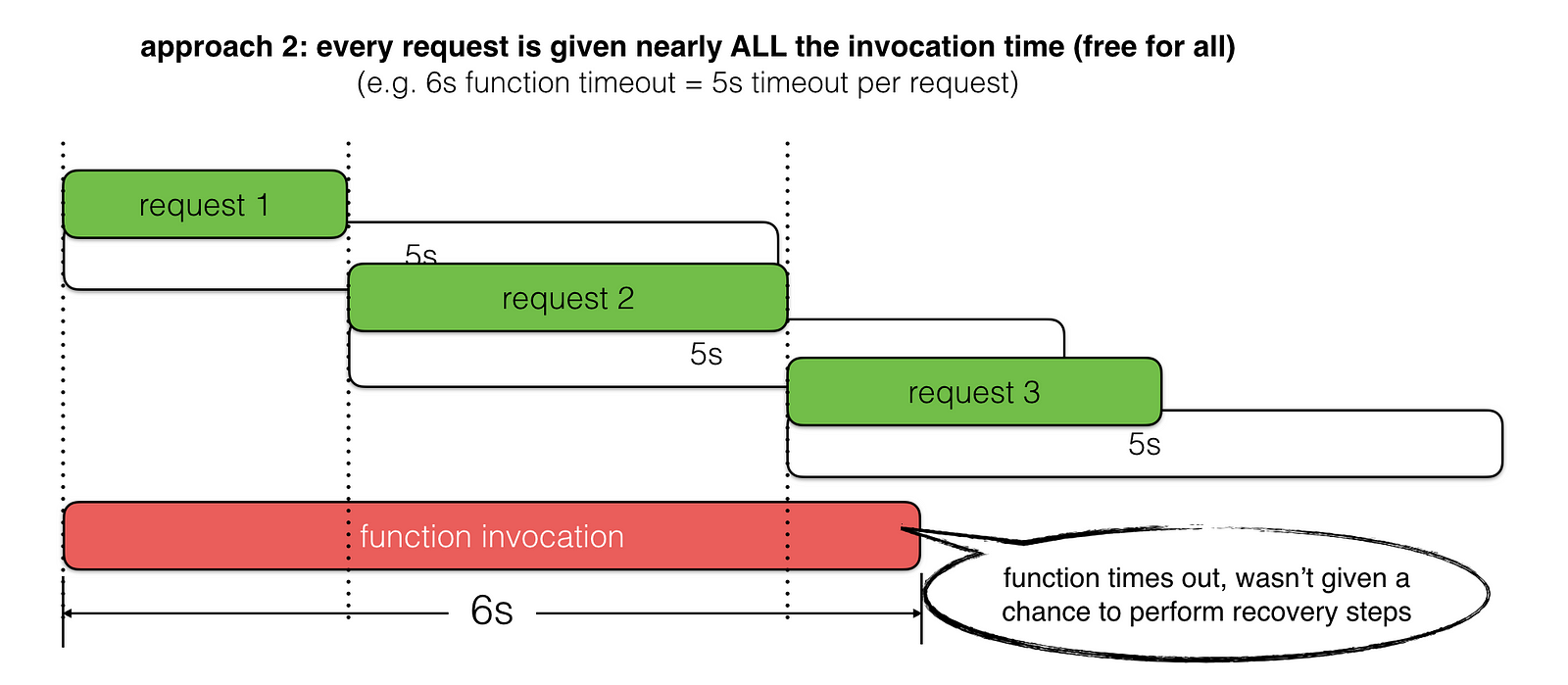

Let’s look at two common approaches for picking timeout values and scenarios where they fall short of meeting our goal.

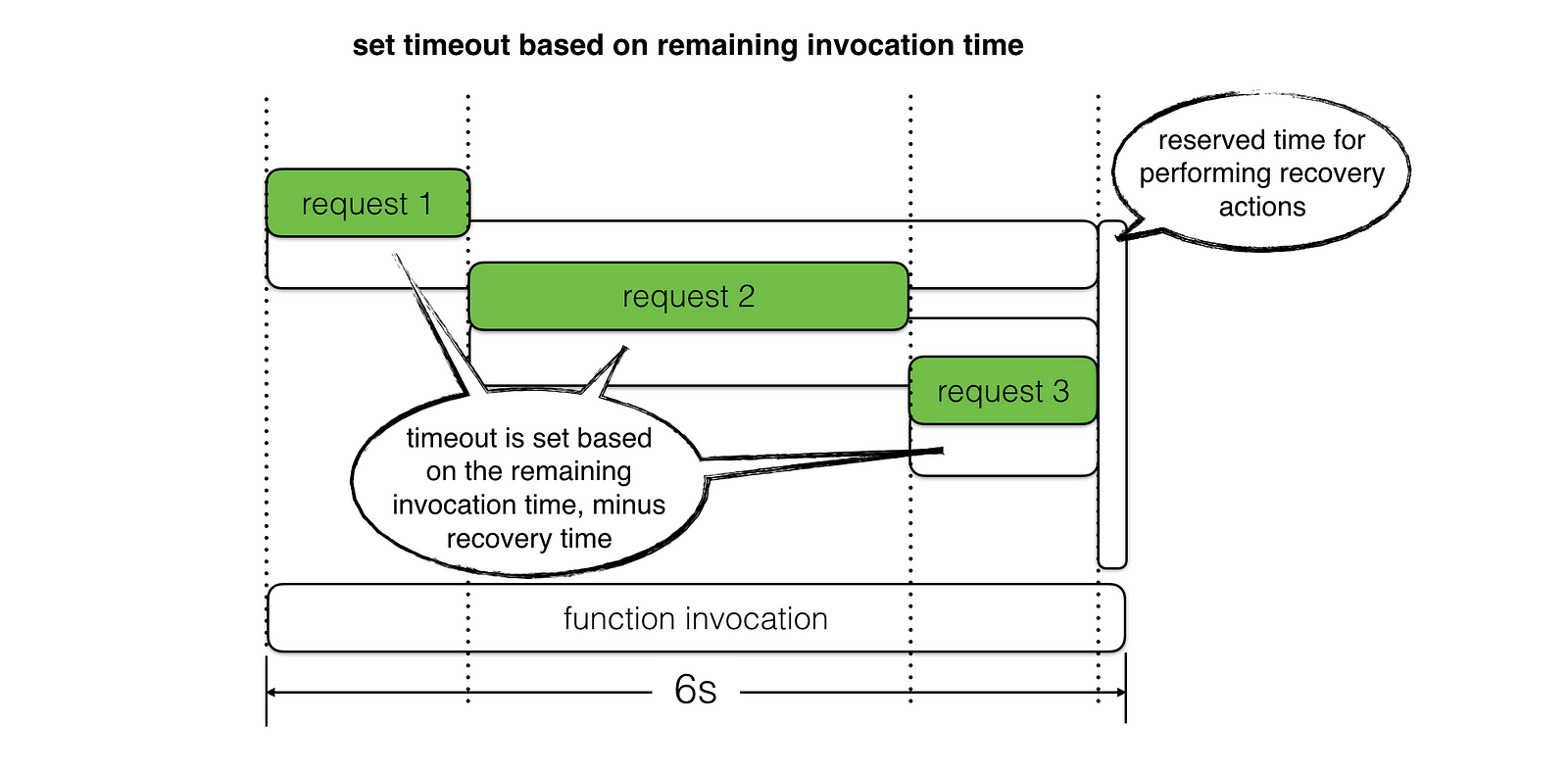

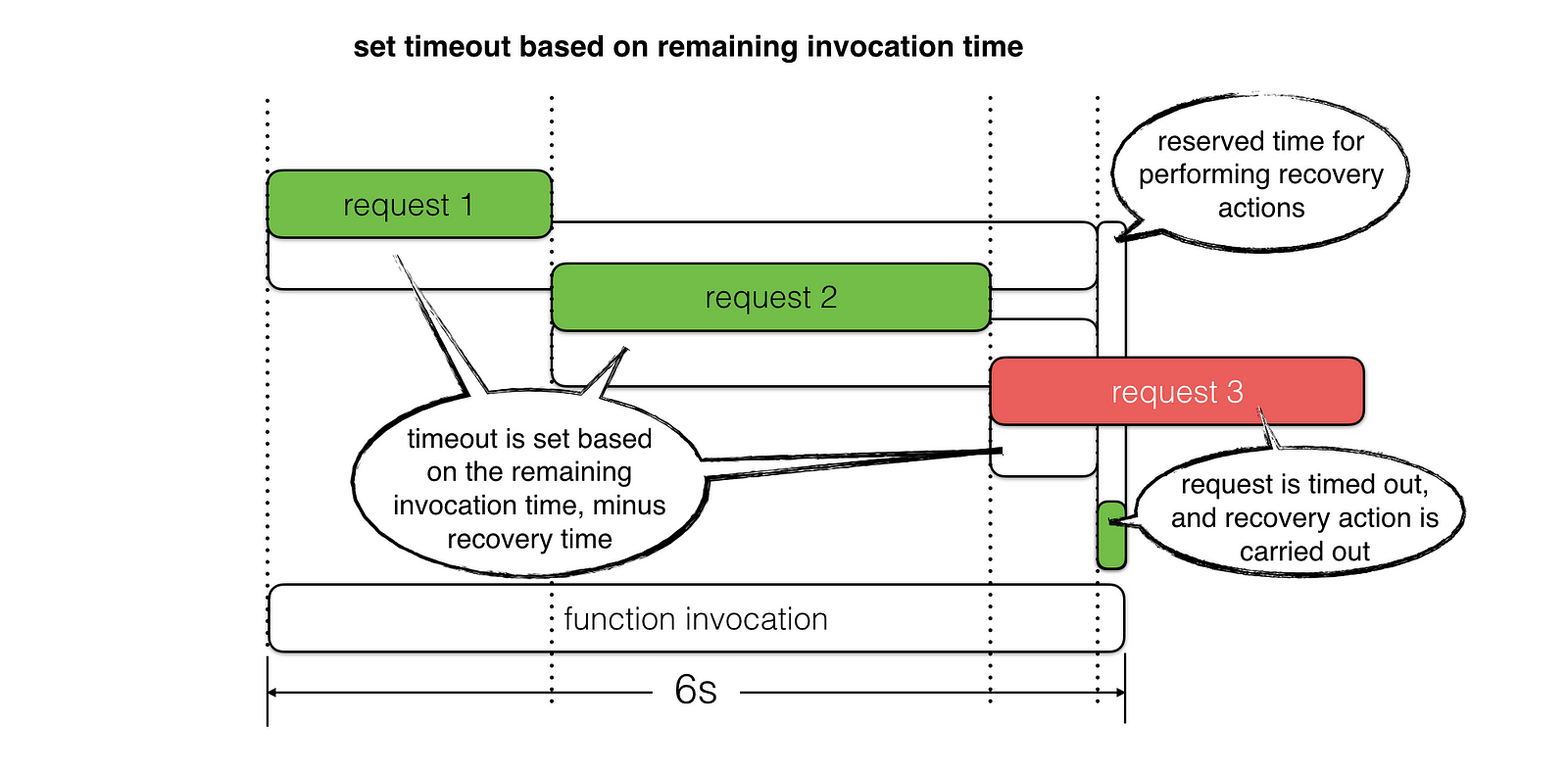

Instead, we should set the request timeout based on the amount of invocation time left, whilst taking into account the time required to perform any recovery steps – e.g. return a meaningful error with application specific error code in the response body, or return a fallback result instead.

You can easily find out how much time is left in the current invocation through the context object your function is invoked with.

For example, if a function’s timeout is 6s, but by the time you make the HTTP request you’re already 1s into the invocation (perhaps you had to do some expensive computation first), and you reserve 500ms for recovery, then that leaves you with 4.5s to wait for the HTTP response.
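The arithmetic above can be sketched in a few lines of Python. This is only an illustration: `request_timeout_ms`, `recovery_ms` and `FakeContext` are names made up for this example, and the only real API assumed is the `get_remaining_time_in_millis()` method that the Python Lambda runtime exposes on the context object.

```python
from dataclasses import dataclass


def request_timeout_ms(context, recovery_ms=500):
    """Return how long (in ms) an HTTP request may wait.

    Takes the time left in the invocation and subtracts the time
    reserved for recovery steps. Never returns a negative value.
    """
    remaining_ms = context.get_remaining_time_in_millis()
    return max(remaining_ms - recovery_ms, 0)


@dataclass
class FakeContext:
    """Hypothetical stand-in for the Lambda context, for local runs."""
    remaining_ms: int

    def get_remaining_time_in_millis(self):
        return self.remaining_ms


# 6s function timeout, 1s already spent, so 5000ms remain
print(request_timeout_ms(FakeContext(5000)))  # 4500
```

Most HTTP clients take their timeout in seconds, so the result would typically be divided by 1000 before being passed on.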

With this approach, we get the best of both worlds:

- allow requests the best chance to succeed based on the actual amount of invocation time we have left; and

- prevent slow responses from timing out the function, which allows us a window of opportunity to perform recovery actions

Requests are given the best chance to succeed, without being restricted by an arbitrarily determined timeout.

But what are you going to do AFTER you time out these requests? Aren’t you still going to have to respond with an HTTP error since you couldn’t finish whatever operations you needed to perform?

At the minimum, the recovery actions should include:

- log the timeout incident with as much context as possible (e.g. how much time the request had), including all the relevant correlation IDs

- track custom metrics for `serviceX.timedout` so it can be monitored and the team can be alerted if the situation escalates

- return an application error code in the response body (see example below), along with the request ID so the user-facing client app can display a user friendly message like “Oops, looks like this feature is currently unavailable, please try again later. If this is urgent, please contact us at xxx@domain.com and quote the request ID f19a7dca. Thank you for your cooperation :-)”

```json
{
  "errorCode": 10021,
  "requestId": "f19a7dca",
  "message": "service X timed out"
}
```
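Put together, the three recovery actions might look something like the sketch below. Treat it as one possible shape rather than a reference implementation: `call_with_recovery`, `call_service_x` and `record_metric` are hypothetical names, the 504 status code is my assumption, and the HTTP call is assumed to raise Python's built-in `TimeoutError` (many HTTP clients raise their own exception type, so adapt the `except` clause accordingly). The context members used, `get_remaining_time_in_millis()` and `aws_request_id`, are part of the Python Lambda runtime.

```python
import json
import logging

logger = logging.getLogger(__name__)

SERVICE_X_TIMED_OUT = 10021  # application error code from the example response


def call_with_recovery(context, call_service_x, record_metric, recovery_ms=500):
    """Call service X within the remaining time budget; recover on timeout.

    call_service_x(timeout_s) and record_metric(name, value) are hypothetical
    callables supplied by the caller. call_service_x is assumed to raise the
    built-in TimeoutError when it gives up waiting.
    """
    budget_ms = max(context.get_remaining_time_in_millis() - recovery_ms, 0)
    try:
        result = call_service_x(budget_ms / 1000)
        return {"statusCode": 200, "body": json.dumps(result)}
    except TimeoutError:
        request_id = context.aws_request_id
        # 1. log the incident with as much context as possible
        logger.error("service X timed out: budget=%dms requestId=%s",
                     budget_ms, request_id)
        # 2. track a custom metric so the team can monitor and alert on it
        record_metric("serviceX.timedout", 1)
        # 3. return an application error code along with the request ID
        body = {
            "errorCode": SERVICE_X_TIMED_OUT,
            "requestId": request_id,
            "message": "service X timed out",
        }
        # The HTTP status code is an assumption; the article does not specify one.
        return {"statusCode": 504, "body": json.dumps(body)}
```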

In some cases, you can also recover even more gracefully using fallbacks.

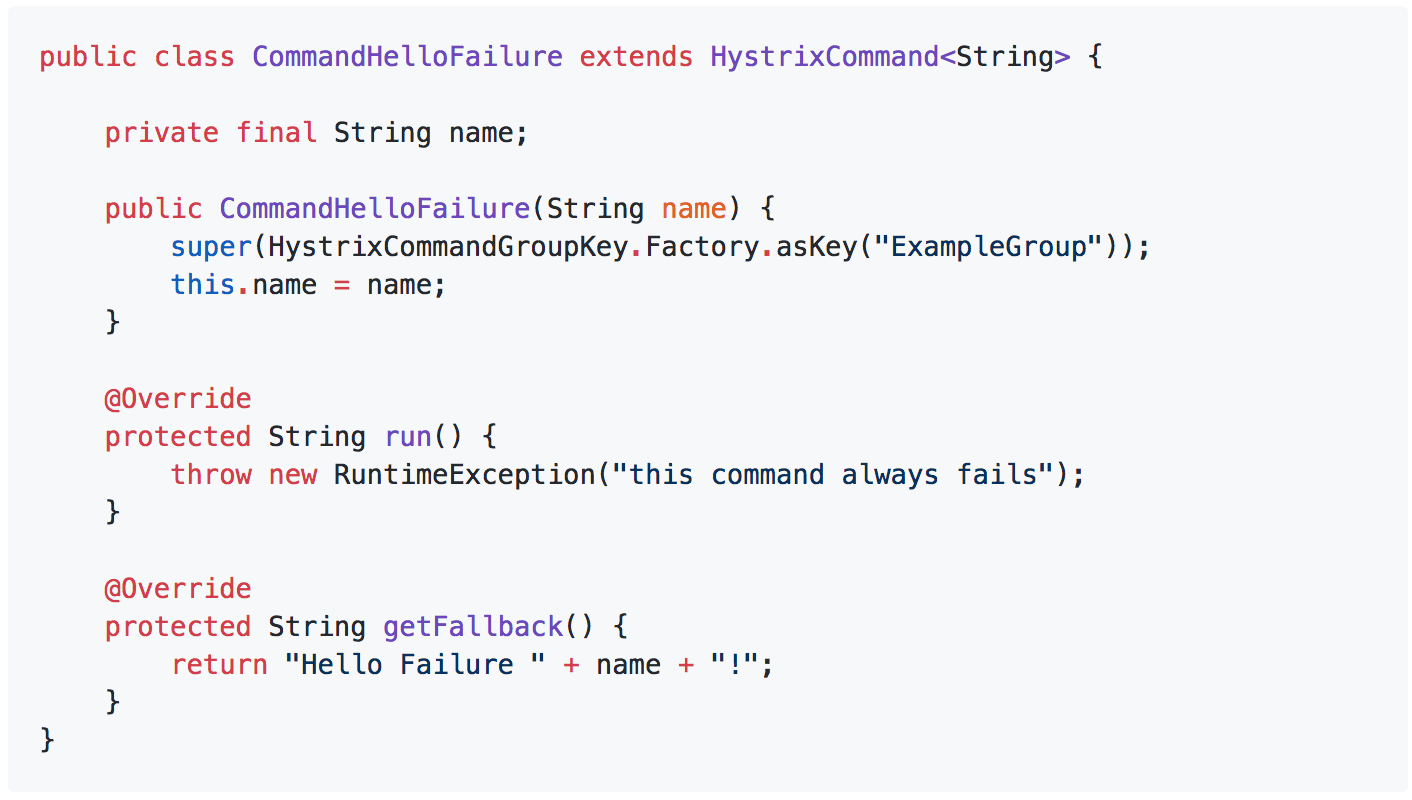

Netflix’s Hystrix library, for instance, supports several flavours of fallbacks via the Command pattern it employs so heavily. In fact, if you haven’t read its wiki page already, I strongly recommend that you give it a thorough read; there is a ton of useful information and ideas there.

At the very least, every command lets you specify a fallback action.

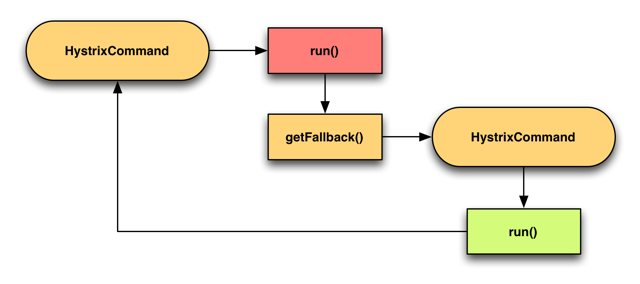

You can also chain fallbacks together by chaining commands via their respective `getFallback` methods.

For example,

- execute a DynamoDB read inside `CommandA`

- in the `getFallback` method, execute `CommandB`, which would return a previously cached response if available

- if there is no cached response then `CommandB` would fail, and trigger its own `getFallback` method

- execute `CommandC`, which returns a stubbed response
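To make the chain concrete, here is a small Python sketch of the same idea. It is not Hystrix (which is a Java library) and does not use its API; the `Command` base class, `get_fallback`, and the in-memory `cache` dict are all stand-ins invented for this illustration, and the DynamoDB read is represented by a plain callable.

```python
class Command:
    """Minimal command with a fallback hook (hypothetical, not Hystrix)."""

    def run(self):
        raise NotImplementedError

    def get_fallback(self):
        raise NotImplementedError

    def execute(self):
        # Try the primary action; on any failure, use the fallback instead.
        try:
            return self.run()
        except Exception:
            return self.get_fallback()


class CommandC(Command):
    """Last resort: returns a stubbed response."""

    def run(self):
        return {"source": "stub", "items": []}


class CommandB(Command):
    """Returns a previously cached response, if there is one."""

    def __init__(self, cache, key):
        self.cache = cache
        self.key = key

    def run(self):
        return self.cache[self.key]  # raises KeyError when nothing is cached

    def get_fallback(self):
        return CommandC().execute()


class CommandA(Command):
    """Primary action: the DynamoDB read, passed in as a callable."""

    def __init__(self, read, cache, key):
        self.read = read
        self.cache = cache
        self.key = key

    def run(self):
        return self.read(self.key)

    def get_fallback(self):
        return CommandB(self.cache, self.key).execute()


def failing_read(key):
    # Simulates a DynamoDB read that timed out.
    raise TimeoutError("DynamoDB read timed out")


# Nothing cached, so the chain falls through to the stub.
print(CommandA(failing_read, {}, "user-1").execute())
```

With a populated cache (say `{"user-1": {"source": "cache"}}`) the same call would stop at `CommandB` and return the cached value.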

Anyway, check out Hystrix if you haven’t already. Most of the patterns baked into Hystrix can be easily adopted in our serverless applications to help make them more resilient to failures, something that I’m actively exploring in a separate series on applying principles of chaos engineering to serverless.
