Yan Cui

I help clients go faster for less using serverless technologies.

If you have done any DevOps work on Amazon Web Services (AWS) then you should be familiar with Amazon CloudWatch, a service for tracking and viewing metrics (CPU, network in/out, etc.) about the various AWS services that you consume, or better still, custom metrics that you publish about your service.

On top of that, you can also set up alarms on any metric and send out alerts via Amazon SNS, which is pretty standard practice for monitoring an AWS-hosted application. There are of course many paid services, such as StackDriver and New Relic, which offer a host of value-added features. Personally, I was impressed with some of the predictive features from StackDriver.

The built-in Amazon management console for CloudWatch provides rudimentary functionality that lets you browse your metrics and view or overlay them on a graph, but it falls short once you have a decent number of metrics.

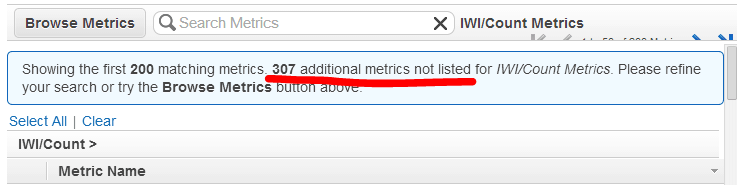

For starters, when you browse your metrics by namespace, you’re capped at 200 metrics, so discovery is out of the question. You have to know what you’re looking for to be able to find it, which isn’t all that useful when you have hundreds of metrics to work with…

Also, there’s no way for you to filter metrics by the recorded datapoints, so to answer even simple questions such as

‘what other timespan metrics also spiked at mid-day when our service discovery latency spiked?’

you now have to manually go through all the relevant metrics (and of course you have to find them first!) and then visually check the graph to try and find any correlations.
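To make the question above concrete, here is a minimal sketch in Python over in-memory data. It is not how Amazon.CloudWatch.Selector works internally. The metric names and values are hypothetical, and treating a "spike" as any value above twice the series median is an assumption made purely for illustration.

```python
from statistics import median


def spike_times(series, factor=2.0):
    """Return the timestamps whose value exceeds factor x the series median.

    The median-based definition of a spike is an assumption for this sketch.
    """
    baseline = median(series.values())
    return {t for t, v in series.items() if v > factor * baseline}


def spiked_together(reference, others, factor=2.0):
    """Names of the metrics that spiked at any time the reference spiked."""
    ref_spikes = spike_times(reference, factor)
    return sorted(
        name
        for name, series in others.items()
        if spike_times(series, factor) & ref_spikes
    )


# Hypothetical timespan metrics (milliseconds), keyed by time of day.
discovery = {"11:00": 40, "11:30": 42, "12:00": 180, "12:30": 45}
others = {
    "db_query_time": {"11:00": 10, "11:30": 11, "12:00": 55, "12:30": 12},
    "cache_lookup_time": {"11:00": 5, "11:30": 5, "12:00": 6, "12:30": 5},
    "login_time": {"11:00": 300, "11:30": 90, "12:00": 100, "12:30": 95},
}

print(spiked_together(discovery, others))  # ['db_query_time']
```

Only `db_query_time` is reported: `login_time` does spike, but at 11:00 rather than at mid-day, and `cache_lookup_time` never moves far from its baseline.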

After being frustrated by this manual process one last time, I decided to write some tooling myself to make my life (and hopefully others’) a bit easier. In comes Amazon.CloudWatch.Selector, a set of DSLs and a CLI for querying Amazon CloudWatch.

Amazon.CloudWatch.Selector

With this simple library you will get:

- an internal DSL which is intended to be used from F#, but is still usable from C#, although syntactically not as intuitive

- an external DSL which can be embedded into a command line or web tool

Both DSLs support the same set of filters, e.g.

| Filter | Description |
| --- | --- |
| NamespaceIs | Filters metrics by the specified namespace. |
| NamespaceLike | Filters metrics using a regex pattern against their namespaces. |
| NameIs | Filters metrics by the specified name. |
| NameLike | Filters metrics using a regex pattern against their names. |
| UnitIs | Filters metrics against the unit they’re recorded in, e.g. Count, Bytes, etc. |
| Average | Filters metrics by the recorded average data points, e.g. average > 300 looks for metrics whose average in the specified timeframe exceeded 300 at any time. |
| Min | Same as above but for the minimum data points. |
| Max | Same as above but for the maximum data points. |
| Sum | Same as above but for the sum data points. |
| SampleCount | Same as above but for the sample count data points. |
| DimensionContains | Filters metrics by the dimensions they’re recorded with; please refer to the CloudWatch docs on how this works. |
| DuringLast | Specifies the timeframe of the query to be the last X minutes/hours/days. Note: CloudWatch only keeps up to 14 days’ worth of data, so there’s no point going any further back than that. |
| Since | Specifies the timeframe of the query to be from the specified timestamp until now. |
| Between | Specifies the timeframe of the query to be between the specified start and end timestamps. |
| IntervalOf | Specifies the ‘period’ over which the data points are aggregated, e.g. 5 minutes, 15 minutes, 1 hour, etc. |
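To illustrate how filters like these compose, here is a rough sketch in Python over in-memory data. It is not the library's DSL or API: the `Metric` class, the helper functions (`name_like`, `unit_is`, `average_above`, `select`) and the sample metrics are all hypothetical, and each metric simply carries one average per aggregation period.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Metric:
    namespace: str
    name: str
    unit: str
    averages: List[float]  # one average per aggregation period


def name_like(pattern: str) -> Callable[[Metric], bool]:
    """Match metrics whose name matches the regex (cf. NameLike)."""
    regex = re.compile(pattern)
    return lambda m: regex.search(m.name) is not None


def unit_is(unit: str) -> Callable[[Metric], bool]:
    """Match metrics recorded in the given unit (cf. UnitIs)."""
    return lambda m: m.unit == unit


def average_above(threshold: float) -> Callable[[Metric], bool]:
    """Match metrics whose average exceeded the threshold at ANY time."""
    return lambda m: any(avg > threshold for avg in m.averages)


def select(metrics: List[Metric], *filters: Callable[[Metric], bool]) -> List[Metric]:
    """Keep only the metrics that satisfy every filter."""
    return [m for m in metrics if all(f(m) for f in filters)]


# Hypothetical sample data.
metrics = [
    Metric("MyService", "ServiceDiscoveryLatency", "Milliseconds", [120.0, 450.0, 130.0]),
    Metric("MyService", "LoginLatency", "Milliseconds", [80.0, 95.0, 90.0]),
    Metric("MyService", "RequestCount", "Count", [900.0, 1200.0, 1100.0]),
]

slow = select(metrics, unit_is("Milliseconds"), average_above(300))
print([m.name for m in slow])  # ['ServiceDiscoveryLatency']
```

Note that `RequestCount` also has averages above 300, but it is excluded because its unit is Count. Combining a unit filter with a datapoint filter is what narrows the result down to the timespan metrics of interest.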

Here are some code snippets showing how to use the DSLs:

In addition to the DSLs, you’ll also find a simple CLI tool as part of the project, which you can start by setting the credentials in the start_cli.cmd script and running it. It allows you to query CloudWatch metrics using the external DSL.

Here’s a quick demo of using the CLI to select some CPU metrics for ElastiCache and then plotting them on a graph.

As a side note, one of the reasons we have so many metrics is that we have made it super easy for ourselves to record new metrics (see this recorded webinar for more information). This gives us a very granular set of metrics, so that any CPU-intensive or IO work is monitored, as well as any top-level entry points to our services.

Links

- Amazon.CloudWatch.Selector project page

- Amazon.CloudWatch.Selector Nuget package

- Demo video of the CLI

- Webinar – performance monitoring with AOP & AWS

- Slides for the webinar
