Yan Cui

I help clients go faster for less using serverless technologies.

This article is brought to you by

The real-time data platform that empowers developers to build innovative products faster and more reliably than ever before.

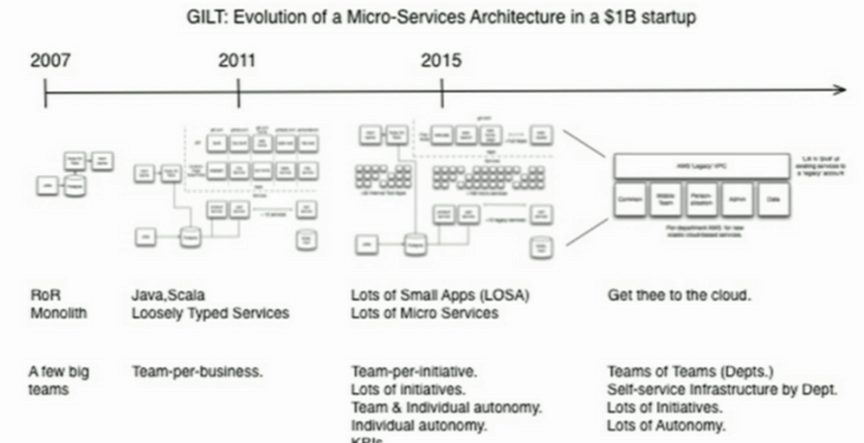

There were a couple of micro-services-related talks at this year’s edition of CraftConf. The first was by Adrian Trenaman of Gilt, who talked about their journey from a monolithic architecture to micro-services, and from self-managed datacentres to the cloud.

From Monolith to Micro-Services

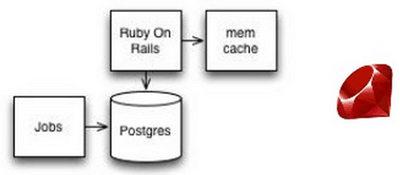

They started off with a Ruby on Rails monolithic architecture in 2007, and quickly grew into a $400M business within 4 years.

With that success came the scalability challenge, one that they couldn’t meet with their existing architecture.

It was at this point that they started to split up their monolith.

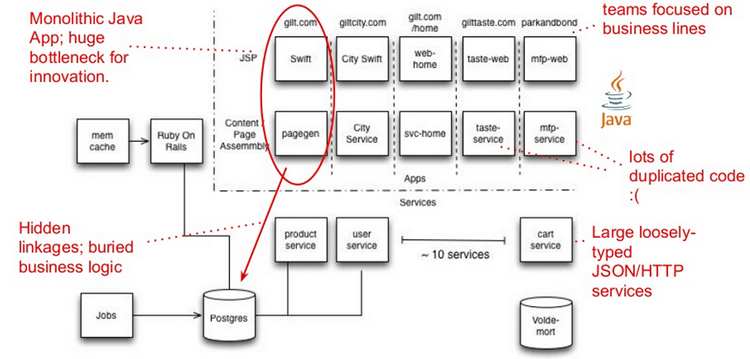

2011

A number of smaller, but still somewhat monolithic services were created – product service, user service, etc. But most of the services were still sharing the same Postgres database.

The cart service was the only one that had its own dedicated datastore, and this proved to be a valuable lesson for them. In order to evolve services independently, you need to sever the hidden coupling that occurs when services share the same database.

Adrian also pointed out that, whilst some of the backend services had been split out, the UI applications (JSP pages) were still monoliths. Lots of business logic, such as promos and offers, was hardcoded and hard/slow to change.

Another pervasive issue was that the services were all loosely typed: everything was a map. This caused plenty of confusion around what was actually in the map, largely because many of their developers still hadn’t made the mental transition to working in a statically typed language.
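To make the "everything’s a map" problem concrete, here is a minimal Python sketch contrasting a map-based payload with a typed record. The post doesn’t show any of Gilt’s code, so the `Product` type and its fields are hypothetical; in Scala a case class would get these checks from the compiler, whereas here a runtime check in `__post_init__` stands in for them.

```python
from dataclasses import dataclass


# Loosely typed: callers have to guess which keys exist and what types they hold.
def price_from_map(product: dict) -> int:
    # A misspelt key such as "price_cent" would only fail at runtime,
    # possibly far away from where the map was built.
    return product["price_cents"]


# Typed alternative (hypothetical fields): the shape of the data is declared once.
@dataclass(frozen=True)
class Product:
    sku: str
    name: str
    price_cents: int

    def __post_init__(self) -> None:
        # Python doesn't enforce annotations, so check the critical field explicitly.
        if not isinstance(self.price_cents, int):
            raise TypeError("price_cents must be an int")


def price_from_record(product: Product) -> int:
    return product.price_cents
```

With the typed version, a malformed payload is rejected where it is constructed rather than wherever a consumer happens to read the missing or mistyped key.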

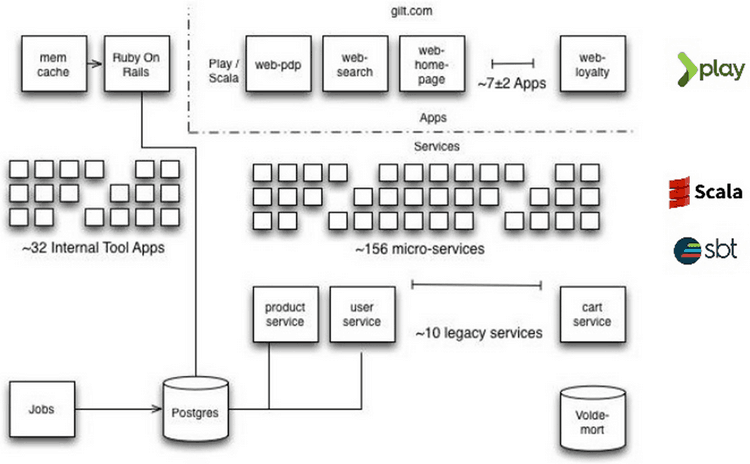

2015

Fast forward to 2015, and they’re now creating micro-services at a much faster rate using primarily Scala and the Play framework.

They have left the original services alone as they’re well understood and haven’t had to change frequently.

The front end application has been broken up into a set of granular, page-specific applications.

There have also been significant cultural changes:

- emergent architecture design rather than top-down

- technology decisions are driven by KPIs where appropriate, or by simple goals where there are no measurable KPIs

- fail fast, and be open about it so that lessons can be shared amongst the organization; they even hold regular meetings to allow people to openly discuss their failures

To the Cloud

Alongside the transition to micro-services, Gilt also migrated to AWS using a hybrid approach via Amazon VPC.

Every team has its own AWS account as well as a budget. However, teams get reorganized from time to time, and with this setup it’s difficult to move services between AWS accounts.

Incremental Approach

One thing to note about Gilt’s migration to micro-services is that it took a long time, and they took an incremental approach.

Adrian put this down to them prioritizing getting to market and extracting real business value over technical evolution.

Why Micro-Services

- it lessens inter-dependencies between teams

- faster code-to-production

- allows lots of initiatives in parallel

- allows each team to use a different language/framework

- allows graceful degradation of services

- allows code to be easily disposable – easy to innovate, fail and move on; Greg Young also touched on this in his recent talk on optimizing for deletability

Challenges with Micro-Services

Adrian listed 7 challenges his team came across in their journey.

Staging

They found it hard to maintain staging environments across multiple teams and services. Instead, they have come to believe that testing in production is the best way to go, and it’s therefore necessary to invest in automation tools that support canary releases.

I once heard Ben Christensen of Netflix talk about the same thing, that Netflix too has come to realize that the only way to test a complex micro-services architecture is to test it in production.

That said, I’m sure both Netflix and Gilt still have basic tests to catch the obvious bugs before they release anything into the wild. But these tests would not sufficiently test the interaction between the services (something Dan North and Jessica Kerr covered in their opening keynote Complexity is Outside the Code).

To reduce the risk involved with testing in production, you should at least have:

- a canary release mechanism to limit the impact of a bad release;

- a way to minimize the time it takes to roll back;

- granular metrics to minimize the time it takes to discover problems (see ex-Netflix architect Adrian Cockcroft’s talk at Monitorama 14 on this).
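The first two items on that list can be sketched in a few lines of Python. This is not how Gilt or Netflix implement canary releases (the talk didn’t go into that); `CanaryRouter` is a hypothetical helper that hashes each user ID into one of 100 buckets, sends a configurable percentage of users to the canary, and treats rollback as nothing more than setting that percentage to zero.

```python
import hashlib


class CanaryRouter:
    """Routes a fixed percentage of users to the canary version (hypothetical sketch)."""

    def __init__(self, canary_percent: int) -> None:
        self.set_canary_percent(canary_percent)

    def set_canary_percent(self, percent: int) -> None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self.canary_percent = percent

    def rollback(self) -> None:
        # Rolling back is a config change, not a redeploy: everyone goes to stable.
        self.canary_percent = 0

    def version_for(self, user_id: str) -> str:
        # Hash the user id into one of 100 buckets so that a given user
        # consistently sees the same version while the canary is running.
        digest = hashlib.sha256(user_id.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % 100
        return "canary" if bucket < self.canary_percent else "stable"
```

Hashing rather than picking randomly per request keeps each user’s experience stable, which also makes per-version metrics easier to compare when deciding whether to promote or roll back.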

Ownership

Who owns the service, and what happens if the person who created the service moves onto something else?

Gilt’s decision is to have teams and departments own the service, rather than individual staff.

Deployment

Gilt is building tools over Docker to give them elastic and fast provisioning. This is kinda interesting, because there are already a number of such tools/services available, such as:

It’s not clear what is missing from these that drives Gilt to build their own tools.

Lightweight API

They have settled on a REST-style API, although I don’t get the sense that Adrian was talking about REST in the sense that Roy Fielding described – i.e. driven by hypermedia.

They also make sure that the clients are totally decoupled from the service, and are dumb and have zero dependencies.

Adrian also gave a shout-out to apidoc. Personally, we have been using Mulesoft’s Anypoint Platform, and it has been a very useful tool for documenting our APIs.

Audit & Alerting

In order to give your engineers full autonomy in production and still stay compliant, you need good auditing and alerting capabilities.

Gilt built a smart alerting tool called CAVE, which alerts you when system invariants have been violated, e.g. "the number of orders shipped to the US in a 5-minute interval should be greater than 0".
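Here is a minimal Python sketch of what checking that example invariant involves. It is not CAVE’s API (the post doesn’t describe one); `violated_windows` is a hypothetical function that buckets shipment events into 5-minute windows and reports every window in which the count of US shipments is not greater than 0.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

WINDOW = timedelta(minutes=5)


def window_start(ts: datetime) -> datetime:
    # Truncate a timestamp to the start of its 5-minute window.
    return ts - timedelta(minutes=ts.minute % 5, seconds=ts.second,
                          microseconds=ts.microsecond)


def violated_windows(shipments: Iterable[Tuple[datetime, str]],
                     start: datetime, end: datetime) -> List[datetime]:
    """Return the windows in [start, end) where no orders shipped to the US.

    `shipments` is an iterable of (timestamp, country_code) pairs, and the
    invariant checked is "US shipments per 5-minute window > 0".
    """
    counts = Counter(window_start(ts) for ts, country in shipments if country == "US")
    violations = []
    current = window_start(start)
    while current < end:
        # Counter returns 0 for windows with no matching events.
        if counts[current] <= 0:
            violations.append(current)
        current += WINDOW
    return violations
```

Note that the check fires on the absence of business activity, something a CPU or latency threshold would never catch.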

Monitoring micro-services is an interesting topic because, once again, the traditional approach to monitoring (alert me when CPU, network or latency goes above a threshold) is no longer sufficient, because of the complex chains of causality that run through your inter-dependent services.

Instead, as Richard Rodger pointed out in his Measuring Micro-Services talk at Codemotion Rome this year, it’s far more effective to identify and monitor emergent properties.

If you live in Portland, then it might be worth going to Monitorama in June; it’s a conference that focuses on monitoring. Last year’s conference had some pretty awesome talks.

IO Explosions

I didn’t get a clear sense of how it works, but Adrian mentioned that Gilt has looked to lambda architecture for their critical path code, and are doing pre-computation and real-time push updates to reduce the number of IO calls that occur between services.
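Since the talk didn’t explain the mechanics, the following is only my guess at the general idea, as a minimal Python sketch: rather than calling a price service once per product while rendering a page, the page service keeps a pre-computed local view that is updated by pushed events. `PriceFeed` and `ProductPageView` are hypothetical, and the in-process subscriber list stands in for whatever real-time push channel would be used in practice.

```python
from typing import Callable, Dict, List


class PriceFeed:
    """Stand-in for a real-time push channel such as a message stream (hypothetical)."""

    def __init__(self) -> None:
        self._subscribers: List[Callable[[str, int], None]] = []

    def subscribe(self, handler: Callable[[str, int], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, sku: str, price_cents: int) -> None:
        # Push the change to every subscriber as soon as it happens.
        for handler in self._subscribers:
            handler(sku, price_cents)


class ProductPageView:
    """Keeps a locally held, pre-computed view of prices, updated by pushes.

    Rendering reads from local memory instead of making one remote call
    per product, so the request path does no inter-service IO.
    """

    def __init__(self, feed: PriceFeed) -> None:
        self._prices: Dict[str, int] = {}
        feed.subscribe(self._on_price_change)

    def _on_price_change(self, sku: str, price_cents: int) -> None:
        self._prices[sku] = price_cents

    def render(self, skus: List[str]) -> Dict[str, int]:
        # SKUs we haven't received a price for yet are simply omitted.
        return {sku: self._prices[sku] for sku in skus if sku in self._prices}
```

The trade-off is that the view is eventually consistent: a page may briefly show a stale price until the next update arrives.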

Reporting

Since they have many databases, it means their data analysts would need access to all the databases in order to run their reports. To simplify things, they put their analytics data into a queue which is then written to S3.
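Here is a minimal Python sketch of the queue-to-S3 step, assuming events are written as newline-delimited JSON in fixed-size batches. Adrian didn’t say which queue or file format Gilt uses, so all of that is an assumption; `drain_to_store` is a hypothetical function, and a plain dict stands in for the S3 bucket so the sketch runs without AWS credentials (in a real system each write would be a PutObject call).

```python
import json
import queue
from typing import Dict, List


def drain_to_store(events: "queue.Queue[dict]", store: Dict[str, bytes],
                   prefix: str, batch_size: int) -> List[str]:
    """Drain the queue in batches, writing each batch as one NDJSON object.

    `store` maps object keys to bytes and stands in for an S3 bucket.
    Returns the keys that were written, in order.
    """
    keys: List[str] = []
    batch: List[dict] = []
    part = 0

    def flush() -> None:
        nonlocal part, batch
        key = f"{prefix}/part-{part:05d}.json"
        # One JSON document per line, so query engines can read the file line by line.
        store[key] = "\n".join(json.dumps(e, sort_keys=True) for e in batch).encode("utf-8")
        keys.append(key)
        part += 1
        batch = []

    while True:
        try:
            batch.append(events.get_nowait())
        except queue.Empty:
            break
        if len(batch) == batch_size:
            flush()
    if batch:  # write out the final, partially filled batch
        flush()
    return keys
```

Batching matters because object stores favour fewer, larger writes over one object per event.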

Whilst Adrian didn’t divulge which technology Gilt is using for analytics, there are quite a number of tools/services available on the market.

Based on our experience at Gamesys Social where we have been using Google BigQuery for a number of years, I strongly recommend that you take it into consideration. It’s a managed service that allows you to run SQL-like, ad-hoc queries on Exabyte-size datasets and get results back in seconds.

At this point we have around 100TB of analytics data at Gamesys Social, and our data analysts are able to run their queries every day and analyse 10s of TBs of data without any help from the developers.

BigQuery is just one of many interesting technologies that we use at Gamesys Social, so if you’re interested in working with us, we’re hiring for a functional programmer right now.

Links

- CraftConf 15 takeaways

- Greg Young – the art of destroying software

- Takeaways from Adam Tornhill – Code as Crime Scene at QCON London

- Canary Release

- Adrian Cockcroft @ Monitorama PDX 2014

- A false choice : Microservices or Monoliths

- Microservices – not a free lunch!

- Richard Rodger – Measuring micro-services

IO Explosions, just my guess:

If services have some kind of dependency on other services (even when they should be independent), and one service calls three other services, each of which in turn calls three more, and so on for n tiers of services (service tiers are not a micro-services concept, this is just for the sake of the calculation), and the last tier performs some IO, e.g. to get a localization string, then a single “action” results in 3^n hits on the localization service. If instead you could (using some kind of reactive programming model) push the required localizations into some kind of cache, those hits could be avoided. (Cache the subscription, not the actual result.)
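The arithmetic in this comment can be checked with a small Python sketch. The fan-out of 3 and the tier counts are the commenter’s illustrative numbers, not measurements, and both function names are hypothetical.

```python
def leaf_io_calls(fan_out: int, tiers: int) -> int:
    """IO calls made by the last tier for one incoming action.

    Assumes every service calls `fan_out` downstream services and that there
    are `tiers` levels of calls below the entry point.
    """
    return fan_out ** tiers


def total_service_calls(fan_out: int, tiers: int) -> int:
    """All inter-service calls for one action: fan_out + fan_out**2 + ... + fan_out**tiers."""
    return sum(fan_out ** level for level in range(1, tiers + 1))
```

With a fan-out of 3 and 4 tiers, one action causes 81 lookups at the last tier and 120 inter-service calls in total; with a pushed, locally cached copy of the localizations, the last tier makes no remote lookups on the request path.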

Services should be independent in the sense that each has its own bounded context and one job that it needs to do well, but that doesn’t mean they can’t depend on or interact with other services.

Caching is one of the ways you can combat IO explosion, and .NET’s HttpClient supports caching behaviour (even though it’s disabled by default for some reason, see http://blog.technovert.com/2013/01/httpclient-caching/). It’s something that others have talked about at length (e.g. http://www.infoq.com/presentations/netflix-ipc).

But it really depends on what data you’re serving. Something like localization is easily cacheable, but hot data (data that changes a lot, like user state for a game) you might not be able to cache at all.

Also, in general, caching only applies to HTTP GET requests, and not the other HTTP verbs. And what if you’re communicating with other services via a queue, or a stream (kinesis, kafka, etc.)?
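To illustrate these two constraints, here is a minimal Python sketch of a client-side cache that only caches GET responses, expires entries after a TTL, and lets the caller mark hot data as uncacheable. It is not .NET’s HttpClient caching; `GetCache` and the injected `send` function are hypothetical, and the clock is injectable so the expiry logic can be exercised without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class GetCache:
    """Client-side TTL cache that only ever caches GET responses (hypothetical sketch)."""

    def __init__(self, send: Callable[[str, str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._send = send  # performs the actual remote call: (method, url) -> body
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, str]] = {}

    def request(self, method: str, url: str, cacheable: bool = True) -> str:
        # Non-GET verbs change state, and hot data may be marked uncacheable,
        # so both always go straight to the remote service.
        if method != "GET" or not cacheable:
            return self._send(method, url)
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh cached copy: no remote call
        body = self._send(method, url)
        self._entries[url] = (now, body)
        return body
```

Localization strings would typically be requested with a long TTL, whereas something like a player’s game state would be passed with `cacheable=False`.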

There are also other ways around it. For instance, if two services have to talk to each other all the time (say, at a 1:1 message rate) and the ratio of their CPU load is consistent (say, 3:1), then you might choose to go multi-tenant.

With something like Amazon ECS, you can deploy both as Docker apps with 75% of the CPU allocated to one and 25% to the other. You still keep the boundaries between your services (both in terms of security and encapsulation) via Docker, as well as deployment granularity (if one service has to change, you deploy an update to that service only), but you cut out the network bandwidth and latency between this pair of services.

You do lose the ability to scale the two services independently, but since we know the message rate between the two is an invariant in our system, we’ll never truly scale them independently anyway (more load on one = more load on the other). Of course, this depends on whether there is such an invariant to begin with. In practice, I wouldn’t go this far unless it’s absolutely necessary, and it does have a cognitive cost too (deployment becomes more complex and it’s just another thing you need to remember).
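Here is a minimal Python sketch of how the 3:1 split might be turned into ECS container definitions. As I understand it, the container-level `cpu` value in an ECS task definition is expressed in CPU units (1,024 per vCPU) and, on the EC2 launch type, is passed to Docker as a relative CPU-share weight, so it governs each container’s proportion under contention rather than acting as a hard cap. The service names and image URIs are hypothetical, and only the `name`, `image`, `cpu` and `essential` fields are shown.

```python
from typing import Dict, List

CPU_UNITS_PER_VCPU = 1024  # ECS expresses container CPU in units of 1/1024 of a vCPU


def split_cpu(total_units: int, load_ratio: Dict[str, int]) -> Dict[str, int]:
    """Split CPU units between co-located containers in proportion to their load."""
    total_weight = sum(load_ratio.values())
    shares = {name: total_units * weight // total_weight
              for name, weight in load_ratio.items()}
    # Integer division can leave a few units over; give them to the busiest container.
    busiest = max(load_ratio, key=load_ratio.get)
    shares[busiest] += total_units - sum(shares.values())
    return shares


def container_definitions(images: Dict[str, str], total_units: int,
                          load_ratio: Dict[str, int]) -> List[dict]:
    """Build a minimal list of ECS container definitions (hypothetical names/images)."""
    shares = split_cpu(total_units, load_ratio)
    return [
        {"name": name, "image": images[name], "cpu": shares[name], "essential": True}
        for name in sorted(load_ratio)
    ]
```

For one vCPU and a 3:1 load ratio, this gives 768 units to the busier service and 256 to the other.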

Oh, also check out another talk from CraftConf on micro-service antipatterns https://theburningmonk.com/2015/05/craftconf15-takeaways-from-microservice-antipatterns/ there were some good ideas and points there too (although only slightly related to IO explosion)
