Yan Cui

I help clients go faster for less using serverless technologies.


A while back we looked at the performance difference between the language runtimes AWS Lambda supports natively.

We intentionally omitted coldstart time from that experiment as we were interested in performance differences when a function is “warm”.

However, coldstart is still an important performance consideration, so let’s take a closer look with some experiments designed to measure only coldstart times.

Methodology

From my personal experience running Lambda functions in production, coldstarts happen when a function is idle for ~5 mins. Additionally, a function is recycled 4 hours after it starts, which was also backed up by analysis from the folks at IO Pipe.

However, the 5 mins rule seems to have changed. After a few tests, I was not able to see coldstart even after a function had been idle for more than 30 mins.

I needed a more reliable way to trigger coldstart.

After a few failed attempts, I settled on a surefire way to cause a coldstart: deploying a new version of my functions before invoking them.

I have a total of 45 functions for both experiments. Using a simple script (see below) I’m able to:

- deploy all 45 functions using the Serverless framework

- after each round of deployments, invoke the functions programmatically

The deploy + invoke loop takes around 3 mins. I ran the experiment for over 24 hours to collect a meaningful number of data points. Thankfully the Serverless framework made it easy to create variants of the same function with different memory sizes and to deploy them quickly.

https://gist.github.com/theburningmonk/ce36a6239dc7fc317c23a6d3fbad09c1

https://gist.github.com/theburningmonk/bbbb8b5194e6f96bbeefaeb34bbf99ee
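For readers who cannot open the gists, here is a minimal Python sketch of one deploy + invoke round. It is not the script from the gists. It assumes the `serverless` CLI is on the PATH and that each function is invoked with `serverless invoke --function <name>`. The helper names `build_round` and `run_round` are hypothetical.

```python
import subprocess
from typing import Callable, List, Optional, Sequence


def build_round(function_names: Sequence[str]) -> List[List[str]]:
    """Return the CLI commands for one round: one deploy, then one invoke per function."""
    commands = [["serverless", "deploy"]]
    for name in function_names:
        # Assumption: functions are invoked through the Serverless CLI.
        commands.append(["serverless", "invoke", "--function", name])
    return commands


def run_round(
    function_names: Sequence[str],
    run: Optional[Callable[[List[str]], object]] = None,
) -> int:
    """Run one deploy + invoke round and return the number of commands executed.

    `run` is injectable so the loop can be dry-run without touching AWS.
    """
    if run is None:
        run = lambda cmd: subprocess.run(cmd, check=True)
    commands = build_round(function_names)
    for cmd in commands:
        run(cmd)
    return len(commands)
```

Calling `run_round(names)` repeatedly in a loop would redeploy before each batch of invocations, so that every invocation hits a freshly deployed function.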

Hypothesis

Here were my hypotheses before the experiments, based on the knowledge that the amount of CPU resource you get is proportional to the amount of memory you allocate to an AWS Lambda function.

- C# and Java have higher coldstart time

- memory size affects coldstart time linearly

- code size affects coldstart time linearly

Let’s see if the experiments support these hypotheses.
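One way to check a "linear" hypothesis against measurements is an ordinary least-squares fit plus its R² value. The sketch below is only an illustration of that idea, not the analysis used in this post. The function name `linear_fit` is hypothetical, and it expects at least two distinct x values (for example memory sizes in MB) paired with y values (for example coldstart times in ms).

```python
from typing import Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares line y = slope * x + intercept, plus the R^2 of the fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2 = 1 - (residual sum of squares / total sum of squares)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1.0 if ss_tot == 0 else 1.0 - ss_res / ss_tot
    return slope, intercept, r_squared
```

An R² close to 1 suggests a straight line describes the data well. A clearly lower value suggests the relationship is something other than linear.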

Experiment 1 : coldstart time by runtime & memory

For this experiment, I created 20 functions with 5 variants (different memory sizes) for each language runtime – C#, Java, Python and Nodejs.
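The 4 runtimes x 5 memory sizes matrix can be sketched as a simple cross product. The runtime labels and the memory sizes below are assumptions for illustration (only the 128MB baseline is mentioned in this post), and `function_variants` is a hypothetical helper. `memorySize` mirrors the property name the Serverless framework uses for a function's memory setting.

```python
from itertools import product
from typing import Dict, List, Sequence, Union

# Assumed labels and sizes, for illustration only.
RUNTIMES = ["csharp", "java", "python", "nodejs"]
MEMORY_SIZES_MB = [128, 256, 512, 1024, 1536]


def function_variants(
    runtimes: Sequence[str], memory_sizes: Sequence[int]
) -> List[Dict[str, Union[str, int]]]:
    """Build one function definition per (runtime, memory size) pair."""
    return [
        {"name": f"{runtime}-{memory}", "runtime": runtime, "memorySize": memory}
        for runtime, memory in product(runtimes, memory_sizes)
    ]
```

With the assumed values above, `function_variants(RUNTIMES, MEMORY_SIZES_MB)` yields 20 definitions, one per function in this experiment.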

After running the experiment for a little over 24 hours, I collected a bunch of metric data (which you can download yourself here).

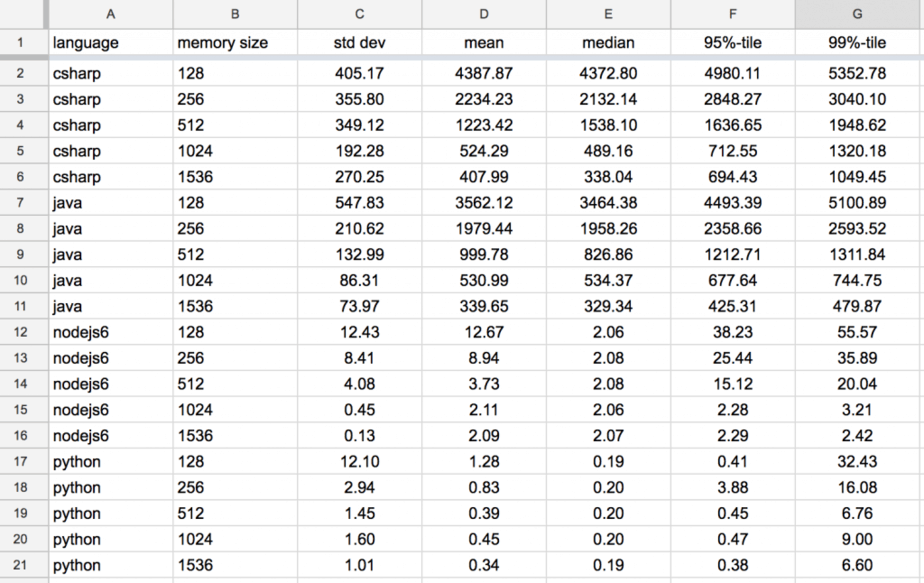

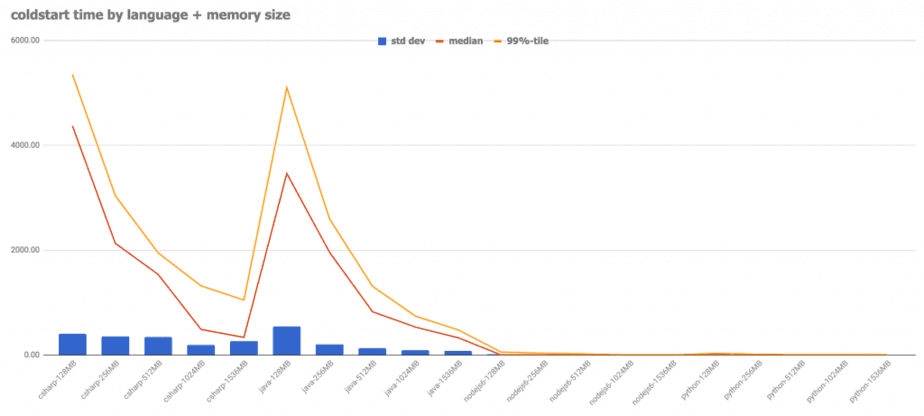

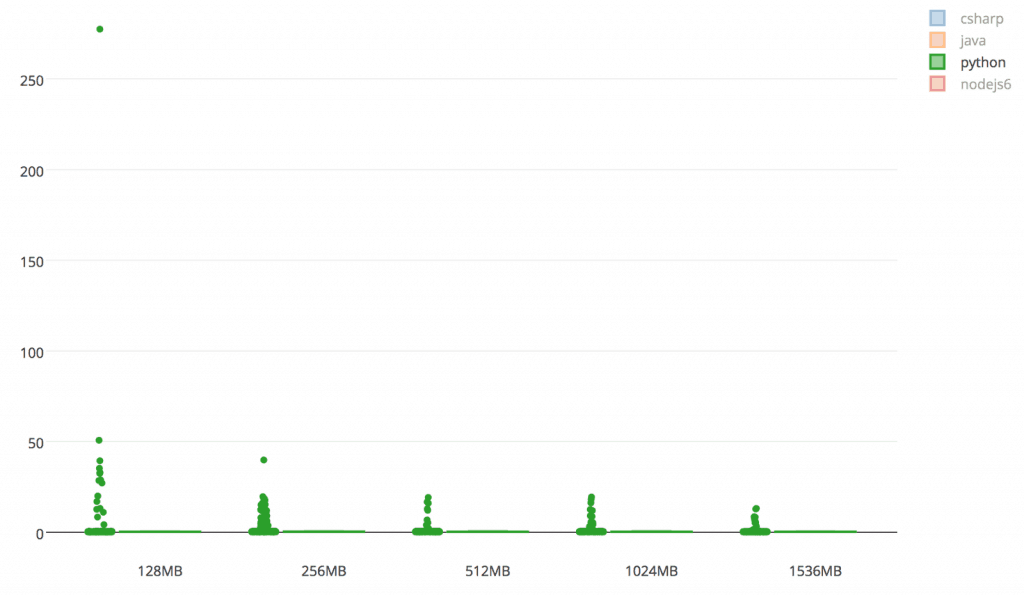

Here is how they look.

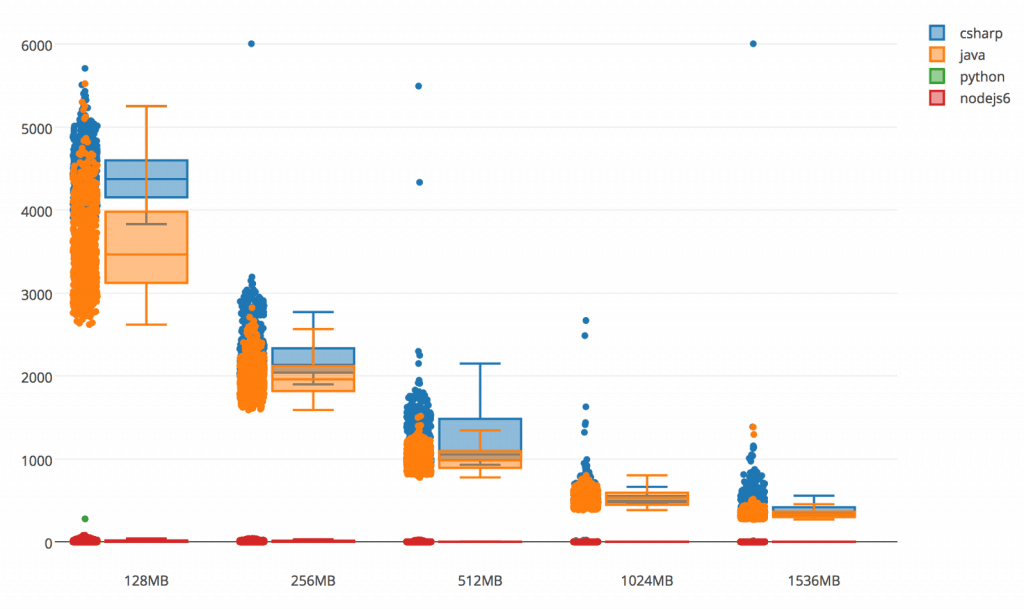

Observation #1 : C# and Java have much higher coldstart time

The most obvious trend is that statically typed languages (C# and Java) have over 100 times higher coldstart time. This clearly supports our hypothesis, although to a much greater extent than I anticipated.

Observation #2 : Python has ridiculously low coldstart time

I’m pleasantly surprised by how little coldstart the Python runtime experiences. OK, there were some outlier data points that heavily influenced some of the 99th percentile and standard deviation figures, but you can’t argue with a 0.41ms coldstart time at the 95th percentile of a 128MB function.
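To make the percentile and standard deviation figures concrete, here is a small sketch of how such stats could be computed from a list of coldstart durations. It is not the script used for this post. It assumes the nearest-rank definition of a percentile, and the names `percentile` and `summarize` are hypothetical.

```python
import math
import statistics
from typing import Dict, Sequence


def percentile(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of the data at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(durations_ms: Sequence[float]) -> Dict[str, float]:
    """Return p95, p99 and sample standard deviation (needs at least two data points)."""
    return {
        "p95": percentile(durations_ms, 95),
        "p99": percentile(durations_ms, 99),
        "stddev": statistics.stdev(durations_ms),
    }
```

A single extreme outlier can leave the 95th percentile untouched while inflating the standard deviation, which is why it helps to look at the two side by side.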

Observation #3 : memory size improves coldstart time linearly

The more memory you allocate to your function, the smaller the coldstart time and the smaller its standard deviation too. This is most obvious with the C# and Java runtimes, as the baseline (128MB) coldstart time for both is very significant.

Again, the data from this experiment clearly supports our hypothesis.

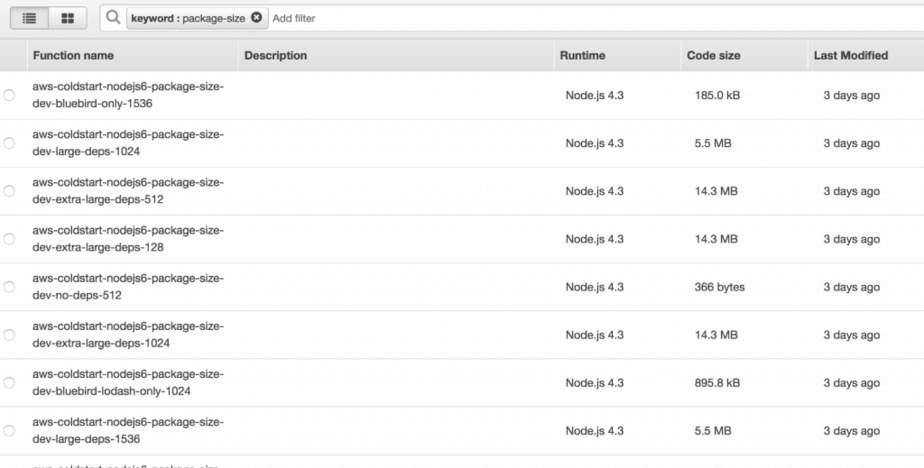

Experiment 2: coldstart time by code size & memory

For this second experiment, I decided to fix the runtime to Nodejs and create variants with different deployment package size and memory.

Here are the results.

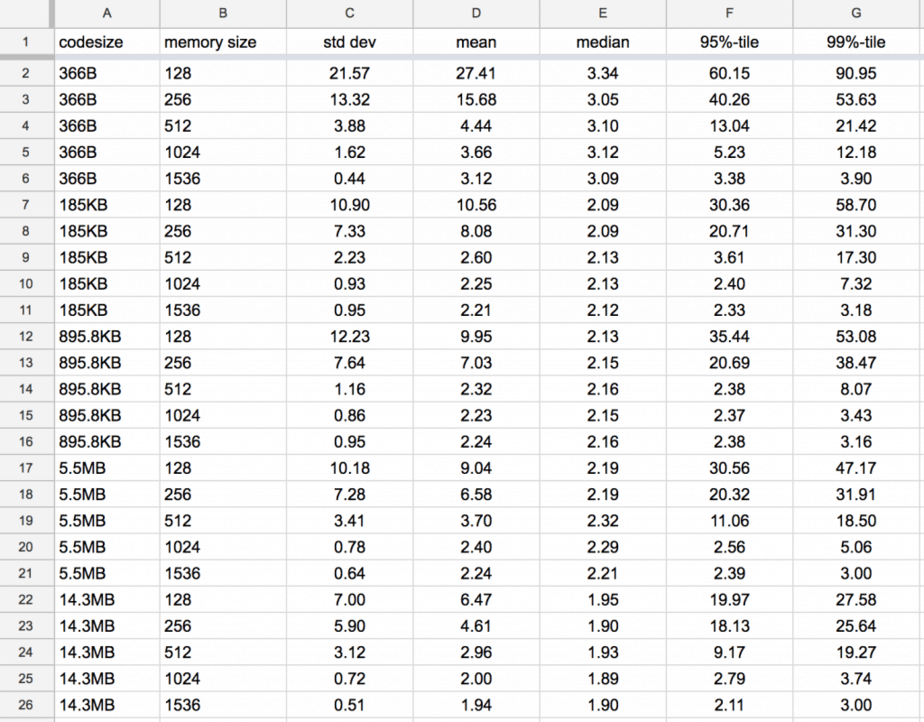

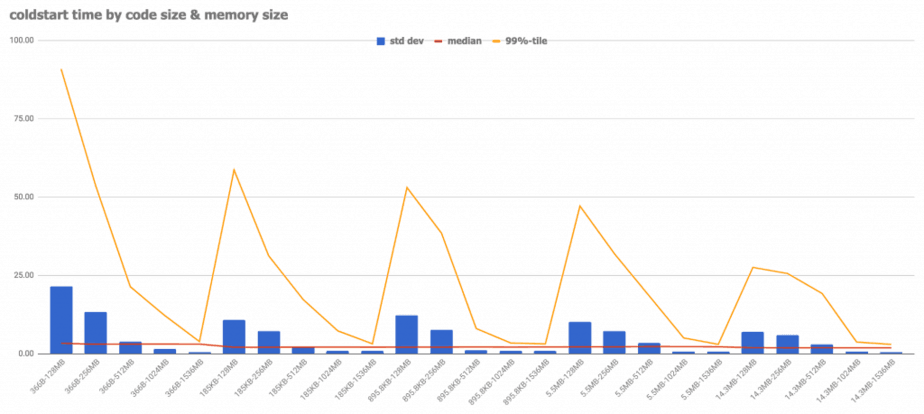

Observation #1 : memory size improves coldstart time linearly

As with the first experiment, the memory size improves the coldstart time (and standard deviation) in a roughly linear fashion.

Observation #2 : code size improves coldstart time

Interestingly the size of the deployment package does not increase the coldstart time (bigger package = more time to download & unzip, or so one might assume). Instead it seems to have a positive effect and decreases the overall coldstart time.

I would love to see someone else repeat the experiment with another language runtime to see if the behaviour is consistent.

Conclusions

The things I learnt from these experiments are:

- functions are no longer recycled after ~5 mins of idleness, which makes coldstarts far less punishing than before

- memory size improves coldstart time linearly

- C# and Java runtimes experience ~100 times the coldstart time of Python and suffer from much higher standard deviation too

- as a result of the above you should consider running your C#/Java Lambda functions with a higher memory allocation than you would Nodejs/Python functions

- bigger deployment package size does not increase coldstart time

ps. the source code used for these experiments can be found here, including the scripts used to calculate the stats and generate the plot.ly box charts.


