Yan Cui

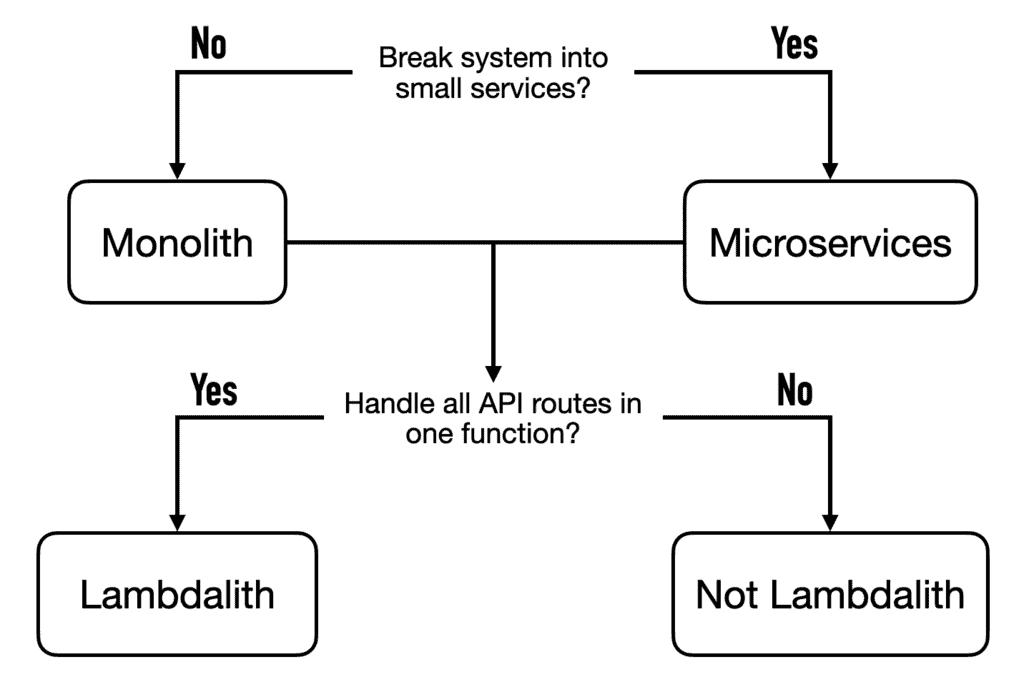

“Lambdalith” refers to deploying monolithic applications using AWS Lambda. This is typically associated with (but not limited to) building serverless APIs.

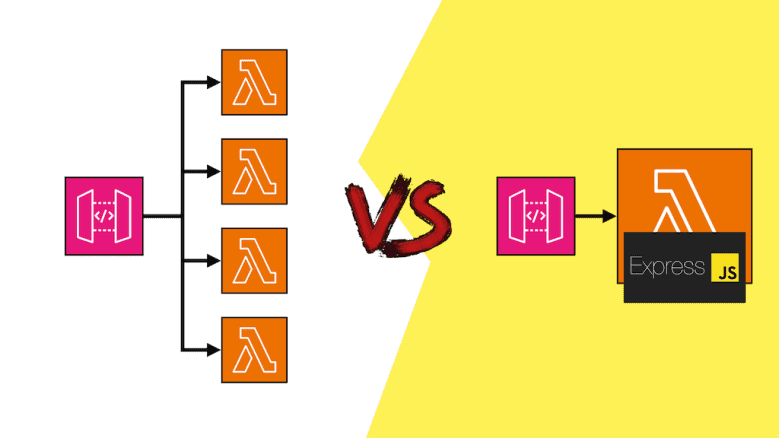

With a Lambdalith, a single Lambda function handles all the routes in an API. You can use Lambda Function URLs [1] or put the function behind a greedy path in API Gateway.

This contrasts with the “function per endpoint” approach, where a different Lambda function handles each API route.
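To make the difference concrete, here is a minimal sketch of a Lambdalith handler that dispatches on method and path. It assumes the payload format 2.0 event that Function URLs and API Gateway HTTP APIs send (only the fields used are declared), and the routes and handler names are hypothetical.

```typescript
// Minimal shape of the payload format 2.0 event; only the fields used here.
interface HttpEvent {
  rawPath: string;
  requestContext: { http: { method: string } };
  body?: string;
}

interface HttpResult {
  statusCode: number;
  body: string;
}

type RouteHandler = (event: HttpEvent) => Promise<HttpResult>;

// Hypothetical route handlers, for illustration only.
const getUsers: RouteHandler = async () => ({
  statusCode: 200,
  body: JSON.stringify([{ id: 1 }]),
});

const createUser: RouteHandler = async (event) => ({
  statusCode: 201,
  body: event.body ?? "{}",
});

// Lambdalith: one function receives every request and routes internally.
const routes: Record<string, RouteHandler> = {
  "GET /users": getUsers,
  "POST /users": createUser,
};

async function lambdalithHandler(event: HttpEvent): Promise<HttpResult> {
  const key = `${event.requestContext.http.method} ${event.rawPath}`;
  const route = routes[key];
  if (!route) {
    return { statusCode: 404, body: JSON.stringify({ message: "Not found" }) };
  }
  return route(event);
}

// Function per endpoint: getUsers and createUser would each be deployed as
// their own Lambda function, and API Gateway would do the routing instead.
```

In practice, a web framework would replace the hand-rolled `routes` table, but the deployment shape is the same: one function behind a Function URL or a greedy path such as `/{proxy+}`.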

Lambdaliths have been the source of intense debate in the serverless community. While I generally prefer the “function per endpoint” approach, I have adopted Lambdaliths where it makes sense.

In this post, let’s discuss the pros and cons of Lambdaliths and the nuances that are often overlooked.

Lambdaliths come in different shapes

The word “monolith” has some existing connotations within software engineering.

When you put different domains and business functionalities into a single service, that’s a monolith.

On the other hand, you have microservices. In microservices, we break a system into smaller, independent services, each handling a specific business function or domain.

When it comes to organizing a service (be it a monolith or a microservice) into Lambda functions, there is another level of breakdown.

Do we break down the different API routes of this service into multiple functions, perhaps, one function for each route?

Or do we implement all the API routes in one function, that is, a Lambdalith?

As such, a Lambdalith can be a monolithic service, but it can also be a microservice inside a microservices architecture, in the same way that a container app can be either.

More often than not, “Lambdalith” means a monolith implemented with a single Lambda function.

But I’d like you to keep in mind that the engineering decision determining whether you have a Lambdalith is whether to handle all the API routes in one function, regardless of the role the API plays within the larger system.

Tools that support Lambdaliths

First, let’s talk about the (growing) ecosystem behind Lambdaliths.

A popular way to implement Lambdaliths is to use the Lambda Web Adapter [2]. It lets you build APIs using familiar frameworks (e.g. Express.js, Next.js or Flask) and run them on Lambda.

The adapter translates Lambda invocation events to HTTP 1.1/1.0 requests that these frameworks understand.

It supports invocation events from ALB, API Gateway and Lambda Function URLs. It can be used with Lambda functions packaged as container images or zip files.

It’s very flexible and is supported by AWS.
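To illustrate the idea of the translation, here is a rough sketch that renders a Function URL style event as HTTP/1.1 request text. This is not the adapter's actual implementation. The event shape covers only a few payload format 2.0 fields, and `toHttpRequest` is a hypothetical helper that ignores details such as base64-encoded bodies and cookies.

```typescript
// Subset of the payload format 2.0 event used by this sketch.
interface HttpEvent {
  rawPath: string;
  rawQueryString: string;
  headers: Record<string, string>;
  requestContext: { http: { method: string } };
  body?: string;
}

// Render the event as the HTTP/1.1 request a web framework would receive.
function toHttpRequest(event: HttpEvent): string {
  const query = event.rawQueryString ? `?${event.rawQueryString}` : "";
  const requestLine =
    `${event.requestContext.http.method} ${event.rawPath}${query} HTTP/1.1`;
  const headerLines = Object.keys(event.headers).map(
    (name) => `${name}: ${event.headers[name]}`
  );
  // A blank line separates the headers from the (possibly empty) body.
  return [requestLine, ...headerLines, "", event.body ?? ""].join("\r\n");
}
```

The web framework only ever sees an ordinary HTTP request, which is why existing apps can run with few or no changes.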

Performance

How you implement Lambdaliths makes a big difference in Lambda’s cold start performance.

The web framework

The framework you use in Lambda matters greatly when using the Lambda Web Adapter. Many of these frameworks are bulky. Next.js, for example, is 111MB unpacked!

Large dependencies have a significant impact on cold start duration.

So, I recommend using a lightweight framework such as Express.js or Hono [3] behind the Lambda Web Adapter.

The Lambda Web Adapter itself

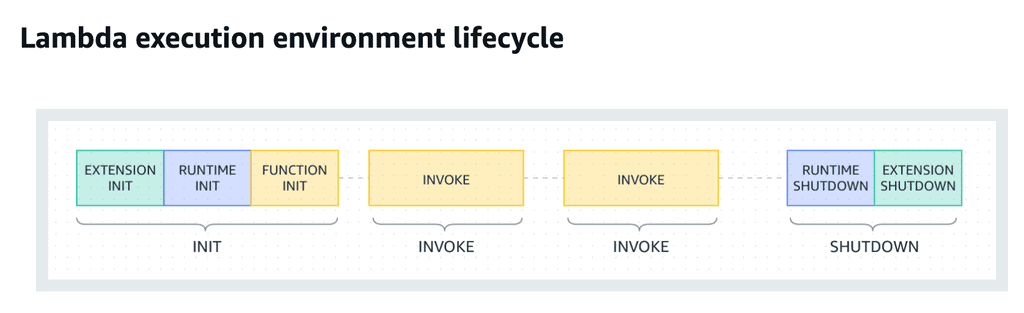

The Lambda Web Adapter runs as a Lambda Extension. This can add double-digit milliseconds to Lambda cold starts because extensions are initialized before the runtime and function module (see Lambda lifecycle [4]).

So, while the Lambda Web Adapter is very convenient and lets you run an existing web app in Lambda pretty much verbatim, it comes with some performance overhead.

If performance matters to you and you are happy to make small changes to existing web apps, consider libraries such as serverless-express [5].

Unlike the Lambda Web Adapter, serverless-express provides a lightweight wrapper that you use to wrap an existing Node.js app (Express, Koa, Hapi, Sails, etc.).

Arguments AGAINST Lambdaliths

The most common argument against Lambdaliths is that they make cold starts slower.

The rationale is that a Lambdalith function needs more dependencies to handle all the API routes. More dependencies mean bigger deployment artefacts.

As a rough rule of thumb, every 10 MB of unpacked code size adds around 100 ms to cold start time.
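As a back-of-the-envelope sketch, that rule of thumb can be written as a one-line estimate. It assumes the relationship is linear, which is a simplification, and `estimateColdStartPenaltyMs` is a hypothetical helper name.

```typescript
// Rule of thumb from this post: roughly 100 ms per 10 MB of unpacked code.
const MS_PER_UNPACKED_MB = 100 / 10;

// Estimate the extra cold start time attributable to code size alone.
function estimateColdStartPenaltyMs(unpackedSizeMb: number): number {
  return unpackedSizeMb * MS_PER_UNPACKED_MB;
}

// A 5 MB bundle: about 50 ms.
const smallBundleMs = estimateColdStartPenaltyMs(5);

// The 111 MB Next.js figure mentioned earlier: about 1,110 ms.
const nextJsMs = estimateColdStartPenaltyMs(111);
```

Treat the output as an order-of-magnitude guide rather than a prediction. Measure your own functions before drawing conclusions.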

I have seen Lambdaliths that handle both server-side rendering (SSR) and REST API routes. The API routes were needlessly punished because of the SSR-related dependencies (e.g. React) and took over 2 seconds to cold start!

However, this argument might not apply to many Lambdaliths. How much it applies depends on how homogeneous the dependencies are across the API routes.

For example, if all your API routes talk to DynamoDB and have the same dependencies, namely, the DynamoDB client, then this argument doesn’t apply to you.

Of course, the web framework itself is still an overhead, as discussed above. But if you use lightweight frameworks such as Express.js or Hono, then the cold start penalty is minimal and worth the gains in developer experience (more on this later).

Arguments FOR Lambdaliths

On the other hand, many argue that Lambdaliths are better for cold starts because there are fewer of them.

The rationale is that, with single-route functions, many of these routes do not receive enough traffic to keep them warm. So, every time a user hits a route, a cold start happens.

By consolidating all the traffic to one function, you reduce the likelihood of cold starts.

This is true for low-traffic web apps. But again, it does not apply to many situations. How much it applies depends on the traffic your API receives and, more importantly, the traffic distribution across routes.

Even for low-traffic APIs, you often see that the traffic naturally concentrates around a few routes. These represent the most common actions users take. So there is a natural consolidation of traffic.

Besides, for low-traffic routes that users seldom use, you can:

a) Ignore them: So what if they are slower? Few people ever experience it anyway.

b) Make cold starts fast enough: If cold starts are fast enough (because the function is lightweight and doesn’t have many dependencies), then it’s not a problem how often they occur.

This cold start argument only applies to low-traffic scenarios. In these cases, you can always use a Lambda warmer to keep a few instances of your function warm. If you use the Serverless Framework, there’s also a ready-made Lambda warmer plugin [6].
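If you use a warmer, the function should return early on warm-up pings so they skip the real work. Here is a minimal sketch. It assumes the warmer sends an event with a `source` field set to `serverless-plugin-warmup` (check what your warmer actually sends), and `handleRequest` is a hypothetical stand-in for your application code.

```typescript
interface HttpResult {
  statusCode: number;
  body: string;
}

// Assumed marker value; adjust to match the payload your warmer sends.
const WARMUP_SOURCE = "serverless-plugin-warmup";

function isWarmupEvent(event: unknown): boolean {
  return (
    typeof event === "object" &&
    event !== null &&
    (event as { source?: unknown }).source === WARMUP_SOURCE
  );
}

// Hypothetical placeholder for the real request handling.
async function handleRequest(_event: unknown): Promise<HttpResult> {
  return { statusCode: 200, body: "handled" };
}

async function handler(event: unknown): Promise<HttpResult> {
  if (isWarmupEvent(event)) {
    // Warm-up ping: keep the execution environment alive and do nothing else.
    return { statusCode: 200, body: "warm" };
  }
  return handleRequest(event);
}
```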

Portability / Familiarity

Personally, I think the value proposition of Lambdaliths is that:

- It makes your application more portable. If you need to move the workload to containers later, minimal rewriting is required.

- It makes it easier to migrate existing web apps to serverless with minimal rewrite.

- It brings a familiar development experience for testing and structuring the application.

It lets teams enjoy the benefits of serverless (scalability, built-in multi-AZ redundancy, pay-per-use pricing, and simplified deployments) without a large upfront investment in rewrites and re-skilling the team.

This is a big win for developer experience (DX), especially for teams who are new to serverless. They can continue using familiar application frameworks without changing how they structure or test their applications.

This is not to say that the DX is bad for single-route functions! Quite the contrary.

Once you figure out how, writing and testing single-route functions is a breeze. I have dedicated years to teaching others [7] how to build production-ready serverless applications and create a productive development workflow.

But, for teams new to serverless, it requires changing existing habits and a lot of rethinking. Lambdaliths let them skip ahead.

Function URLs

Lambdaliths also unlock the use of Lambda Function URLs.

I discussed API Gateway vs. Function URLs in more detail here [1].

Function URLs are handy when:

- Response streaming is needed, e.g. when building LLM-powered chatbots or returning large payloads that exceed API Gateway’s 10MB limit.

- You need a long-running API endpoint. Nowadays, you can extend beyond API Gateway’s default 29s integration timeout. But beyond 3 minutes, you need to sacrifice maximum throughput.

Cost

Using Lambda Function URLs also reduces the cost of your application because you no longer need to pay for API Gateway!
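Here is a quick worked example of the saving. The per-million request prices below are assumptions for illustration only (check the current AWS pricing page for your region), and the Lambda invocation and duration charges are left out because they apply either way.

```typescript
// Assumed example prices in USD per million requests; not authoritative.
const ASSUMED_PRICE_PER_MILLION = {
  restApi: 3.5,
  httpApi: 1.0,
  functionUrl: 0, // no separate per-request charge for the URL itself
};

// Monthly request charge for the HTTP front door only.
function monthlyRequestCharge(requests: number, pricePerMillion: number): number {
  return (requests / 1e6) * pricePerMillion;
}

// 50 million requests a month under each option.
const requestsPerMonth = 50e6;
const restApiCost = monthlyRequestCharge(requestsPerMonth, ASSUMED_PRICE_PER_MILLION.restApi);
const httpApiCost = monthlyRequestCharge(requestsPerMonth, ASSUMED_PRICE_PER_MILLION.httpApi);
const functionUrlCost = monthlyRequestCharge(requestsPerMonth, ASSUMED_PRICE_PER_MILLION.functionUrl);
```

Under these assumed prices, the request charge would be 175, 50 and 0 USD respectively. Whether that matters depends on how large it is relative to the rest of your bill.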

Scalability

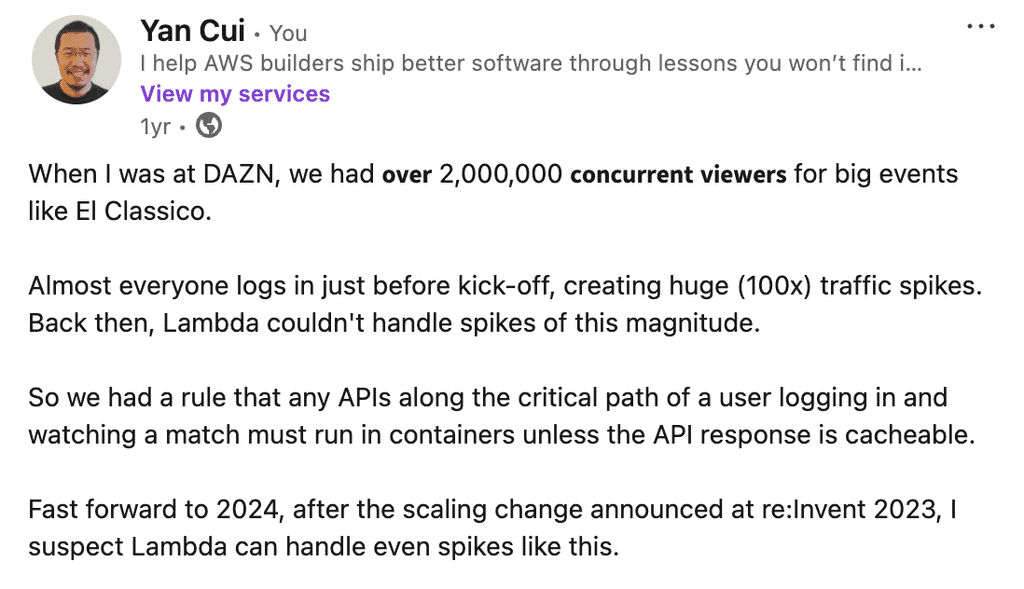

However, in some cases, Lambdaliths cannot scale as quickly as single-route functions.

Each Lambda function can instantly scale from 0 to 1,000 concurrent executions. After that, it can scale by a further 1,000 concurrent executions every 10 seconds. See official doc here [8].

(That is, assuming you have sufficient concurrency available at the account level in that region.)

This should more than suffice for most applications!

However, for some extreme cases [9], this might not be enough for applications that need to scale very quickly.

In these rare cases, you can reach higher throughput quicker with single-route functions because each function can scale by 1,000 concurrent executions every 10 seconds, independently.

By splitting the API across multiple functions – assuming the traffic spike is somewhat distributed across multiple routes – you can reach a higher total throughput faster.
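The arithmetic behind this can be sketched as follows. It follows the scaling behaviour as described in this post (1,000 instantly, then 1,000 more every 10 seconds per function) and assumes the spike is spread evenly across routes. The function names and the account limit parameter are hypothetical.

```typescript
const INITIAL_BURST = 1000; // concurrent executions available immediately
const STEP = 1000; // additional concurrent executions per interval
const STEP_SECONDS = 10;

// Upper bound on one function's concurrency after `seconds` of a spike.
function maxConcurrencyPerFunction(seconds: number): number {
  return INITIAL_BURST + STEP * Math.floor(seconds / STEP_SECONDS);
}

// Upper bound across several functions that scale independently,
// capped by the account-level concurrency limit for the region.
function maxTotalConcurrency(
  seconds: number,
  functionCount: number,
  accountLimit: number
): number {
  return Math.min(functionCount * maxConcurrencyPerFunction(seconds), accountLimit);
}

// 30 seconds into a spike, with an assumed account limit of 50,000:
const lambdalith = maxTotalConcurrency(30, 1, 50000); // 4,000
const fiveFunctions = maxTotalConcurrency(30, 5, 50000); // 20,000
```

The advantage disappears if most of the spike lands on a single route, because that one function is then bound by the same per-function rate as the Lambdalith.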

Observability

For me, the biggest drawback of Lambdaliths is that you lose the fine-grained telemetry data that API Gateway and Lambda provide out of the box:

- API Gateway can provide per-endpoint metrics.

- Each Lambda function has its own metrics for invocations, errors, duration, etc.

These fine-grained metrics give you deeper insight and let you create more fine-grained and actionable alerts. Your alerts can tell you when a specific route has problems rather than a generic “something is wrong with the API”.

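You can replace these built-in metrics with custom metrics. You can publish these custom metrics from the Lambdalith using Embedded Metric Format (EMF) [10]. Because EMF metrics are written to the function's logs and extracted by CloudWatch asynchronously, they add no latency overhead to the invocation.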

You can even automate this with middleware.
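As a minimal sketch of what that could look like, the wrapper below times a route handler and writes one EMF record per request to stdout. The `MyApi` namespace, the `Route` dimension, the metric names and the helper names are all assumptions for illustration. In practice, you would more likely use an existing EMF library than build the record by hand.

```typescript
interface HttpEvent {
  rawPath: string;
  requestContext: { http: { method: string } };
}

interface HttpResult {
  statusCode: number;
  body: string;
}

type RouteHandler = (event: HttpEvent) => Promise<HttpResult>;

// Build an EMF record with per-route latency and error metrics.
function buildEmfRecord(route: string, latencyMs: number, isError: boolean, timestamp: number) {
  return {
    _aws: {
      Timestamp: timestamp, // milliseconds since epoch
      CloudWatchMetrics: [
        {
          Namespace: "MyApi", // hypothetical namespace
          Dimensions: [["Route"]],
          Metrics: [
            { Name: "Latency", Unit: "Milliseconds" },
            { Name: "Errors", Unit: "Count" },
          ],
        },
      ],
    },
    Route: route,
    Latency: latencyMs,
    Errors: isError ? 1 : 0,
  };
}

// Wrap a route handler so every request emits its own metrics.
function withRouteMetrics(route: string, handler: RouteHandler): RouteHandler {
  return async (event) => {
    const start = Date.now();
    let isError = false;
    try {
      const result = await handler(event);
      isError = result.statusCode >= 500;
      return result;
    } catch (err) {
      isError = true;
      throw err;
    } finally {
      const now = Date.now();
      // Logs written to stdout end up in CloudWatch Logs.
      console.log(JSON.stringify(buildEmfRecord(route, now - start, isError, now)));
    }
  };
}
```

With a `Route` dimension in place, you can alarm on a single route's error count or latency, which recovers most of what the built-in per-function metrics gave you.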

Alternatively, you can use a platform like Lumigo [11], which can ingest your logs as events and allow you to create custom metrics and alerts from them at no extra cost, unlike CloudWatch, where you pay for the logs, metrics and alerts separately.

Ultimately, this is not a showstopper. But it’s a trade-off to consider, as it becomes something you must do yourself.

Summary

Lambdaliths handle all API routes in a single Lambda function, offering a more straightforward migration path and familiar DX for teams new to serverless.

They work well with tools like the Lambda Web Adapter, allowing you to use familiar web frameworks on Lambda.

Pros

- Easy migration from traditional apps.

- Familiar testing and structure.

- (Potentially) Fewer cold starts for low-traffic APIs.

- Lower costs with Lambda Function URLs.

- Response streaming and long-running APIs with Function URLs.

Cons

- (Potentially) Slower cold starts due to more/larger dependencies or from the Lambda Web Adapter.

- Limited per-route observability without extra instrumentation.

- Slower scaling in rapid-scaling scenarios.

Links

[1] API Gateway vs. Lambda Function URLs

[2] Lambda Web Adapter

[3] Hono – a lightweight web framework

[4] Lambda execution environment lifecycle

[5] serverless-express

[6] Lambda warmer plugin for Serverless Framework

[7] Production-Ready Serverless bootcamp

[8] Understanding Lambda function scaling

[9] DAZN needs to scale quickly to allow millions of users to log in simultaneously

[10] EMF specification

[11] Lumigo – the best observability platform for serverless
