Yan Cui

I help clients go faster for less using serverless technologies.


Note: don’t forget to check out the Benchmarks page to see the latest roundup of binary and JSON serializers.

When working with the BinaryFormatter class frequently, one of the things you notice is that it is really damn inefficient… both in terms of speed and payload size (the size of the serialized byte array).

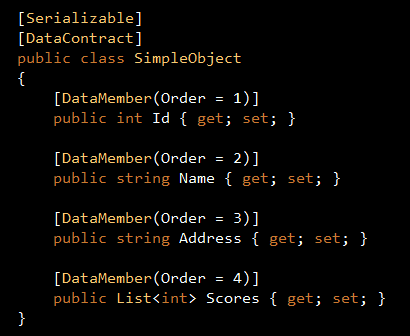

Google’s Protocol Buffers format is designed to be super fast and to minimize the payload. It does this by requiring a serializable object to assign, ahead of time, a unique number to each property/field that should be serialized/deserialized. Because fields are identified on the wire by these small numbers rather than by names or type descriptions, all sorts of metadata that traditionally needs to be encoded along with the actual data can be left out.
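To make the "no metadata" point concrete, here is a minimal Python sketch of how a Protocol Buffers field is laid out on the wire. It follows Google’s published encoding rules, not protobuf-net’s actual internals. Each field is prefixed by a key of `(field_number << 3) | wire_type`, written as a varint, so a property name never appears in the payload. The two-field object (an integer in field 1, a string in field 2) and all helper names are hypothetical, chosen for illustration.

```python
# Minimal sketch of the Protocol Buffers wire layout (not protobuf-net's code).
# The field numbers and helper names below are hypothetical illustrations.

WIRE_VARINT = 0            # wire type for int32/int64/bool/enum values
WIRE_LENGTH_DELIMITED = 2  # wire type for strings, bytes and nested messages


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 payload bits per byte, least significant group first."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative values")
    out = bytearray()
    while True:
        low_bits = value & 0x7F
        value >>= 7
        if value:
            out.append(low_bits | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low_bits)         # high bit clear: last byte
            return bytes(out)


def field_key(field_number: int, wire_type: int) -> bytes:
    """The key identifies a field by number and wire type, never by name."""
    return encode_varint((field_number << 3) | wire_type)


def encode_int_field(field_number: int, value: int) -> bytes:
    return field_key(field_number, WIRE_VARINT) + encode_varint(value)


def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    # Length-delimited: key, then the byte length as a varint, then the bytes.
    return field_key(field_number, WIRE_LENGTH_DELIMITED) + encode_varint(len(data)) + data


# Hypothetical object: field 1 = 150 (an int), field 2 = "testing" (a string).
message = encode_int_field(1, 150) + encode_string_field(2, "testing")
print(message.hex(" "))  # prints: 08 96 01 12 07 74 65 73 74 69 6e 67
```

The whole message is 12 bytes: three for the integer field and nine for the string, with no type names or property names anywhere in it.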

Marc Gravell (of StackOverflow fame!) has a .Net implementation called ‘protobuf-net’, which is said to totally kick ass! As with most performance-related topics, it’s hugely intriguing to me, so I decided to put it to the test myself :-)

Assumptions/Conditions of tests

- code is compiled in release mode, with the optimization option turned on

- 5 runs of the same test are performed, with the top and bottom results excluded; the remaining three results are then averaged

- 100,000 identical instances of type SimpleObject (see below) are serialized and deserialized

- serialization/deserialization of the objects happens sequentially in a loop (no concurrency)
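The averaging rule above (five runs, drop the best and worst, average the middle three) is a simple trimmed mean. Below is a minimal Python sketch of it. It is not the SimpleSpeedTester framework the tests actually used; `time_runs` and `trimmed_average` are hypothetical names, and the timings in the usage line are made-up numbers for illustration, not results from these tests.

```python
import time
from typing import Callable, List


def time_runs(action: Callable[[], None], runs: int = 5) -> List[float]:
    """Run `action` sequentially `runs` times and return each run's elapsed milliseconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        action()
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def trimmed_average(timings_ms: List[float]) -> float:
    """Drop the single fastest and single slowest run, then average the rest."""
    if len(timings_ms) < 3:
        raise ValueError("need at least 3 runs to exclude the top and bottom results")
    middle = sorted(timings_ms)[1:-1]
    return sum(middle) / len(middle)


# Made-up timings: sorted they are [90, 96, 100, 104, 150], so 90 and 150 are dropped.
print(trimmed_average([100, 90, 104, 150, 96]))  # prints 100.0
```

Dropping the extremes keeps one unusually fast or slow run (for example, one disturbed by a garbage collection) from skewing the reported average.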

Results

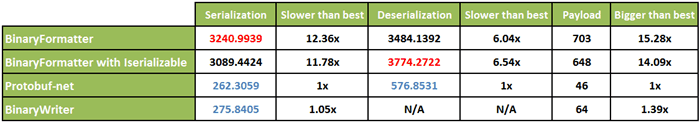

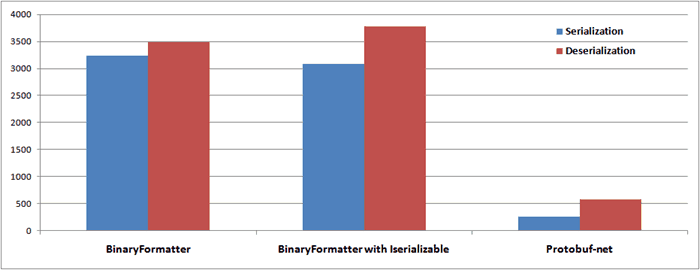

Unsurprisingly, the protobuf-net serializer wins hands-down in all three categories. As you can see from the table below, it is a staggering 12x faster than BinaryFormatter when it comes to serialization, with a payload less than one-fifteenth the size of its counterpart’s.

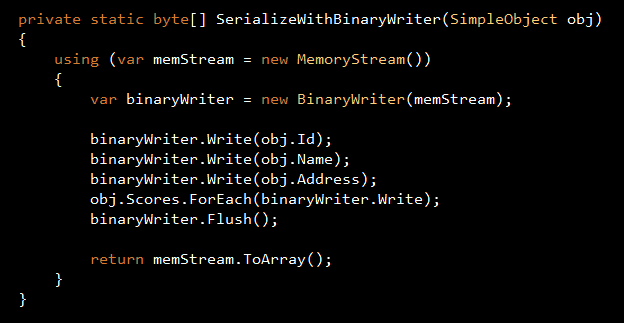

One curious observation about the payload size: when I used a BinaryWriter to simply write every property into the output stream without any metadata, I expected the result to be the minimum payload size achievable without compression, and yet the protobuf-net serializer still manages to beat it!
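For reference, writing every property back to back with no metadata looks roughly like the following Python sketch, which uses `struct` to mimic the way .NET’s `BinaryWriter.Write(int)` emits each Int32 as 4 little-endian bytes. The three-integer `SampleRecord` is a hypothetical stand-in, not the SimpleObject used in the tests. Notice that each field’s size is fixed by its type rather than its value, and that the reader must know the exact field order because nothing in the payload describes it.

```python
import struct
from typing import NamedTuple

# "<iii": little-endian, no padding, three 4-byte signed integers.
LAYOUT = struct.Struct("<iii")


class SampleRecord(NamedTuple):
    """Hypothetical test object with three Int32 properties."""
    id: int
    quantity: int
    rating: int


def write_fixed_width(record: SampleRecord) -> bytes:
    """Write each property in a fixed order with no names, tags or type info."""
    return LAYOUT.pack(record.id, record.quantity, record.rating)


def read_fixed_width(payload: bytes) -> SampleRecord:
    """Read the properties back, relying entirely on the agreed field order."""
    return SampleRecord(*LAYOUT.unpack(payload))


small = SampleRecord(1, 2, 3)
large = SampleRecord(2_000_000_000, -5, 123_456)
print(len(write_fixed_width(small)), len(write_fixed_width(large)))  # prints 12 12
assert read_fixed_width(write_fixed_width(large)) == large
```

Small values like 1, 2 and 3 still cost 4 bytes each in this layout, which is where a value-dependent encoding has room to do better.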

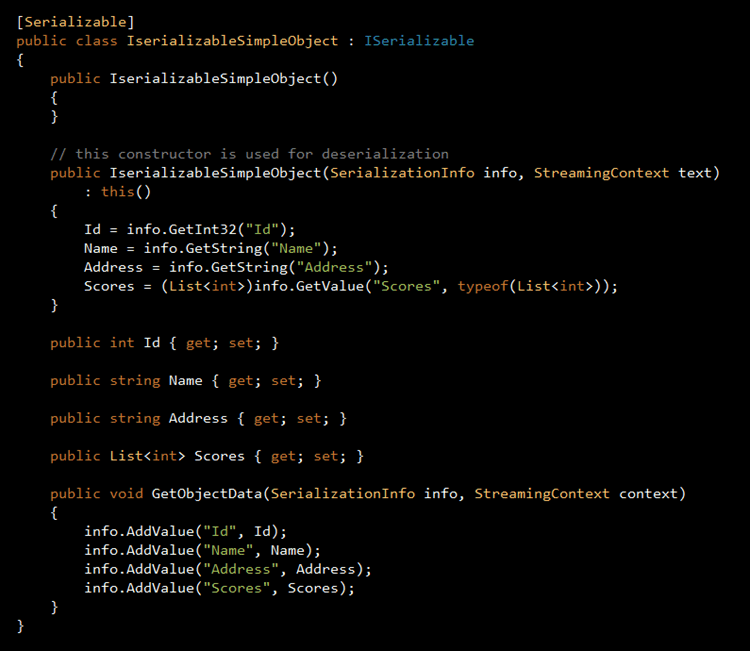

BinaryFormatter with ISerializable

I also tested the BinaryFormatter with a class that implements the ISerializable interface (see below), because others have suggested in the past that you are likely to get a noticeable performance boost by implementing ISerializable yourself. The belief is that it performs much better because it removes the reliance on reflection, which can be detrimental to the performance of your code when used excessively.

However, based on the tests I have done, this does not seem to be the case: the slightly faster serialization is far from conclusive and is offset by slightly slower deserialization.

Source code

If you’re interested in running the tests yourself, you can find the source code here. It uses my SimpleSpeedTester framework to orchestrate the test runs, but you should be able to get the gist of it fairly easily.

UPDATE 2011/08/24:

As I mentioned in the post, protobuf-net managed to produce a smaller payload than what is required to hold all the property values of the test object without any metadata.

I posted this question on SO, and as Marc said in his answer, the smaller payload is achieved through the use of varint and zigzag encoding; you can read more about them here.
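A minimal Python sketch of both techniques, following the encoding described in Google’s Protocol Buffers documentation rather than protobuf-net’s source. A varint stores 7 bits per byte, with the high bit flagging that more bytes follow, so values below 128 take a single byte instead of a fixed 4. Zigzag first maps signed values onto unsigned ones (0, -1, 1, -2, … become 0, 1, 2, 3, …) so that small negative numbers stay small too; without it, a negative int32 is written as a 10-byte varint. The helper names are hypothetical, and the sketch assumes 32-bit signed inputs.

```python
# Sketch of varint + zigzag encoding for 32-bit signed integers (sint32 style).
# Helper names are hypothetical; this is not protobuf-net's implementation.

INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def zigzag_encode(n: int) -> int:
    """Map signed to unsigned: 0->0, -1->1, 1->2, -2->3, 2->4, ..."""
    if not INT32_MIN <= n <= INT32_MAX:
        raise ValueError("this sketch assumes 32-bit signed inputs")
    # n >> 31 is 0 for non-negative n and -1 (all ones) for negative n.
    return (n << 1) ^ (n >> 31)


def zigzag_decode(z: int) -> int:
    """Inverse of zigzag_encode."""
    return (z >> 1) ^ -(z & 1)


def encode_varint(value: int) -> bytes:
    """Base-128 varint: low 7 bits first, high bit set when more bytes follow."""
    if value < 0:
        raise ValueError("varints here are built from non-negative values")
    out = bytearray()
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)
    return bytes(out)


def encoded_size(n: int) -> int:
    """Bytes needed for n as a zigzag varint (a fixed-width Int32 always takes 4)."""
    return len(encode_varint(zigzag_encode(n)))


for n in (0, -1, 1, 63, -64, 64, 1000, INT32_MAX):
    print(n, encoded_size(n))
```

The trade-off shows up at the top of the range: values near `INT32_MAX` need 5 bytes, so the saving relies on most values being small, as IDs, counts and similar fields often are.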



I have a binary serializer that is faster than protobuf-net :)

@TJ – I’d like to try it, is it available somewhere?

I am planning to put it up on GitHub next week. I will post a link once it is ready.

@TJ – excellent! looking forward to it

Here is the link to the project: https://github.com/rpgmaker/MessageShark

Have fun :)

Would you have a blog post comparing protobuf-net to messageshark?

Sure, once I’ve had a chance to play around with it, I can update the benchmarks on binary serializers

Heh, I also have a serializer that is faster than protobuf-net =). It has its limitations, but I haven’t seen a faster serializer for the purpose it was made.

http://www.codeproject.com/Articles/351538/NetSerializer-A-fast-simple-serializer-for-NET

https://github.com/tomba/netserializer

@tomba – very interesting, I’ll give it a go this weekend :-)
