Yan Cui


Threats to the security of our serverless applications take many forms: some are the same old foes we have faced before, some are new, and some have taken on new forms in the serverless world.

As we adopt the serverless paradigm for building cloud-hosted applications, we delegate even more of the operational responsibilities to our cloud providers. When you build a serverless architecture around AWS Lambda you no longer have to configure AMIs, patch the OS, and install daemons to collect and distribute logs and metrics for your application. AWS takes care of all of that for you.

What does this mean for the Shared Responsibility Model that has long been the cornerstone of security in the AWS cloud?

Protection from attacks against the OS

AWS takes over the responsibility for maintaining the host OS as part of their core competency, relieving you of the rigorous task of applying all the latest security patches – something most of us just don’t do well enough because it’s not our primary focus.

In doing so, it protects us from attacks against known vulnerabilities in the OS and prevents attacks such as WannaCry.

By removing long-lived servers from the picture, we have also removed the threats posed by compromised servers that live in our environment for a long time.

However, it is still our responsibility to patch our application and address vulnerabilities that exist in our code and our dependencies.

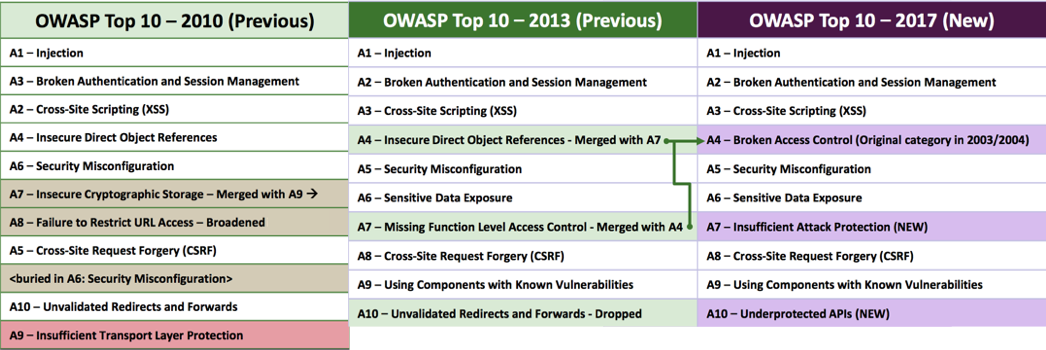

OWASP top 10 is still as relevant as ever

A glance at the OWASP top 10 list for 2017 shows us some familiar threats – Injection, Cross-Site Scripting, Sensitive Data Exposure, and so on.

A9 – Components with Known Vulnerabilities

When the folks at Snyk looked at a dataset of 1792 data breaches in 2016 they found that 12 of the top 50 data breaches were caused by applications using components with known vulnerabilities.

Furthermore, 77% of the top 5000 URLs from Alexa include at least one vulnerable library. This is less surprising than it first sounds when you consider that some of the most popular front-end JavaScript frameworks – e.g. jQuery, Angular and React – have all had known vulnerabilities. It highlights the need to continuously update and patch your dependencies.

However, unlike OS patches which are standalone, trusted and easy to apply, security updates to these 3rd party dependencies are usually bundled with feature and API changes that need to be integrated and tested. It makes our life as developers difficult and it’s yet another thing we gotta do when we’re working overtime to ship new features.

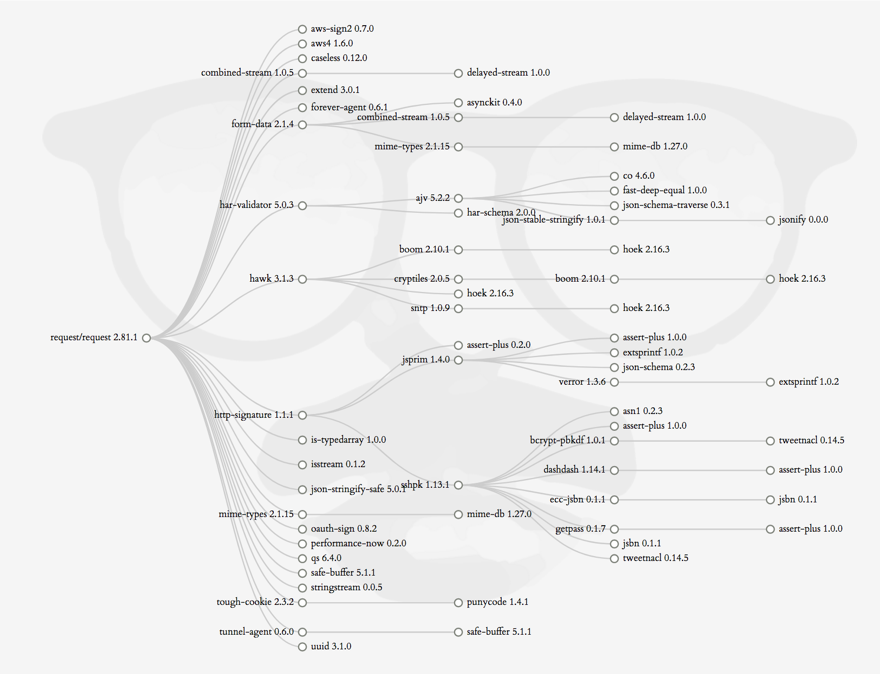

And then there’s the matter of transitive dependencies, and boy there are so many of them… If these transitive dependencies have vulnerabilities then you too are vulnerable through your direct dependencies.

Finding vulnerabilities in our dependencies is hard work and requires constant diligence, which is why services such as Snyk are so useful. Snyk even comes with a built-in integration with Lambda!

Attacks against NPM publishers

Just a few weeks ago, a security bounty hunter posted this amazing thread on how he managed to gain direct push rights to 14% of NPM packages. The list of affected packages includes some big names too: debug, request, react, co, express, moment, gulp, mongoose, mysql, bower, browserify, electron, jasmine, cheerio, modernizr, redux and many more. In total, these packages account for 20% of the total number of monthly downloads from NPM.

Let that sink in for a moment.

Did he use highly sophisticated methods to circumvent NPM’s security?

Nope, it was a combination of brute force and using known account & credential leaks from a number of sources including GitHub. In other words, anyone could have pulled this off with very little research.

It’s hard not to feel let down by these package authors when so many display such a cavalier attitude towards securing access to their NPM accounts. I feel my trust in these 3rd party dependencies has been betrayed.

662 users had password «123456», 174 – «123», 124 – «password».

1409 users (1%) used their username as their password, in its original form, without any modifications.

11% of users reused their leaked passwords: 10.6% – directly, and 0.7% – with very minor modifications.

As I demonstrated in my recent talk on Serverless security, one can easily steal temporary AWS credentials from affected Lambda functions (or EC2-hosted Node.js applications) with a few lines of code.
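To see why a compromised dependency is so dangerous, consider that the Lambda runtime hands the function its temporary credentials through the environment variables `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY` and `AWS_SESSION_TOKEN`, and that any code running in the process (third-party packages included) can read them. The sketch below is not the code from the talk; it is a minimal illustration with a hypothetical helper name, and it only reports which of those variables are visible, never their values.

```python
import os

# Environment variables through which the Lambda runtime exposes the
# function's temporary credentials.
CREDENTIAL_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_SESSION_TOKEN")


def visible_credential_vars(env=None):
    """Return the names (never the values) of credential variables that are set.

    `env` defaults to the real process environment; passing a dict makes the
    helper easy to exercise without touching real credentials.
    """
    env = os.environ if env is None else env
    return [name for name in CREDENTIAL_VARS if env.get(name)]
```

Anything that can list these names can just as easily read the values and send them elsewhere, which is why restricting what those credentials are allowed to do matters so much.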

Imagine then, a scenario where an attacker had managed to gain push rights to 14% of all NPM packages. He could publish a patch update to all these packages and steal AWS credentials at a massive scale.

The stakes are high and it’s quite possibly the biggest security threat we face in the serverless world; and it’s equally threatening to applications hosted in containers or VMs.

The problems and risks with package management are not specific to the Node.js ecosystem. I have spent most of my career working with .NET technologies and am now working with Scala at Space Ape Games, and package management has been a challenge everywhere. Whether you’re talking about NuGet, Maven, or any other package repository, you’re always at risk if the authors of your dependencies do not exercise the same due diligence to secure their accounts as they would their own applications.

Or, perhaps they do…

A1 – Injection & A3 – XSS

SQL injection and other forms of injection attacks are still possible in the serverless world, as are cross-site scripting attacks.

Even if you’re using a NoSQL database, you are not necessarily exempt from injection attacks. MongoDB, for instance, exposes a number of attack vectors through its query APIs.
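One common vector works like this: MongoDB query operators are keys that start with `$`, so if a parsed JSON request body is passed straight into a query filter, an attacker can send an object such as `{"$ne": null}` where a string was expected and match every document. The sketch below shows one way to guard against that by insisting on plain strings. The function and field names are hypothetical, and it builds only the filter dictionary rather than calling any database driver.

```python
def require_string(value, field):
    """Reject anything that is not a plain string (e.g. {"$ne": None})."""
    if not isinstance(value, str):
        raise ValueError(f"{field} must be a string")
    return value


def build_login_filter(body):
    """Build a query filter from an untrusted, already-parsed JSON body."""
    return {
        "username": require_string(body.get("username"), "username"),
        "password_hash": require_string(body.get("password_hash"), "password_hash"),
    }
```

Validating the type of every field before it reaches the query is a deliberately blunt rule; a schema validator would do the same job with less hand-written code.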

Arguably DynamoDB’s API makes it hard (at least I haven’t heard of a way yet) for an attacker to orchestrate an injection attack, but you’re still open to other forms of exploits – e.g. XSS, and leaked credentials which grant attackers access to DynamoDB tables.

Nonetheless, you should always sanitize user inputs, as well as the output from your Lambda functions.
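On the output side, a minimal sketch of what this can look like for a function behind API Gateway is shown below. It assumes the Lambda proxy integration response shape (`statusCode`, `headers`, `body`) and uses the standard library's `html.escape`; the handler name and the greeting are made up for illustration.

```python
import html


def render_greeting(name):
    """Build a proxy-style response whose HTML body escapes the user-supplied name."""
    safe_name = html.escape(name, quote=True)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "text/html; charset=utf-8"},
        "body": f"<p>Hello, {safe_name}!</p>",
    }
```

Escaping at the point of output means a value that slipped past input validation is still rendered as text rather than executed as markup.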

A6 – Sensitive Data Exposure

Along with servers, web frameworks also disappear when you migrate to the serverless paradigm. These web frameworks have served us well for many years, but they also handed us a loaded gun we can shoot ourselves in the foot with.

As Troy Hunt demonstrated at a recent talk at the LDNUG, we can expose all kinds of sensitive data by accidentally leaving directory listing options ON, from web.config containing credentials (at 35:28) to SQL backup files (at 1:17:28)!

With API Gateway and Lambda, accidental exposures like this become very unlikely – directory listing is a “feature” you’d have to implement yourself. It forces you to make very conscious decisions about when to support directory listing and the answer is probably never.

IAM

If your Lambda functions are compromised, then the next line of defence is to restrict what these compromised functions can do.

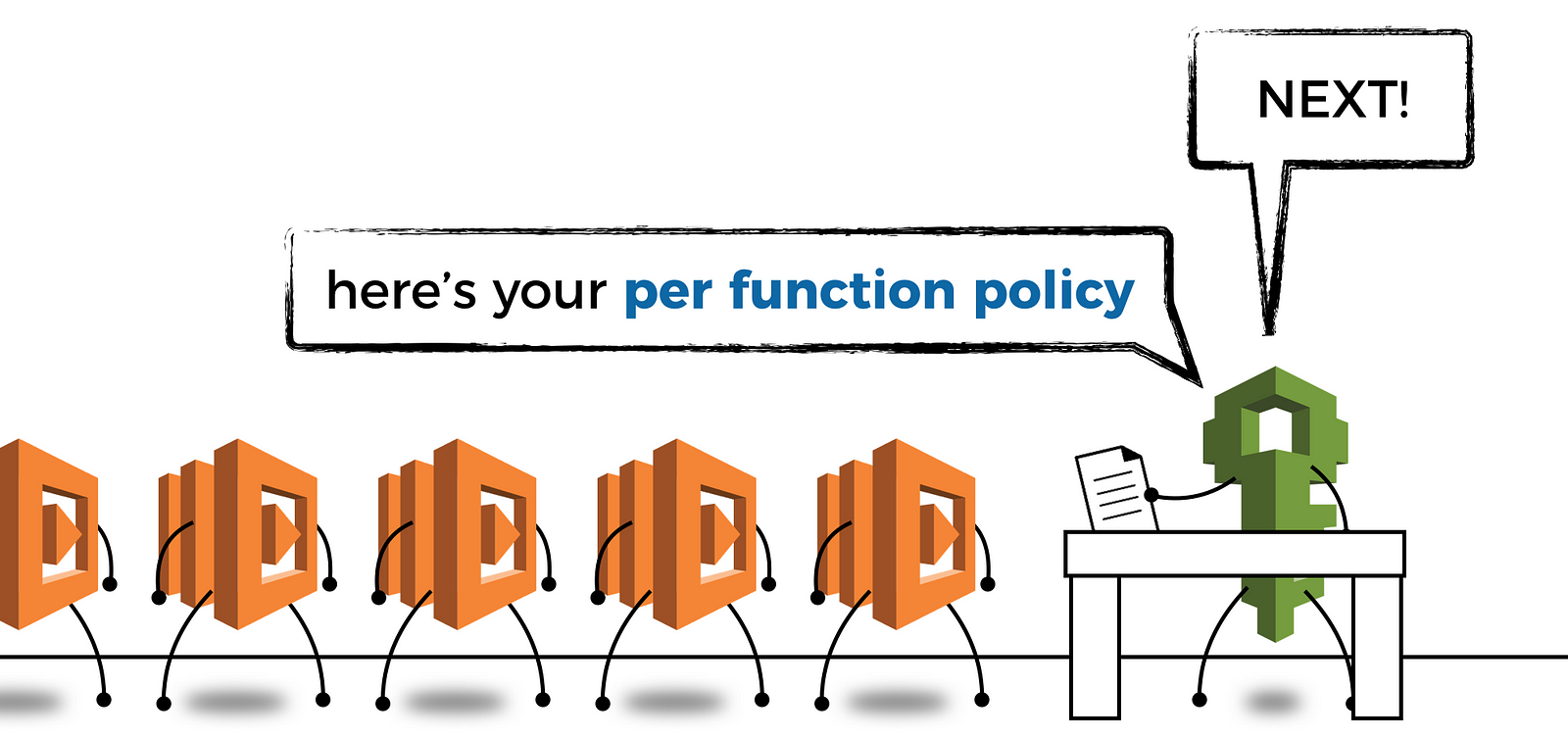

This is why you need to apply the Least Privilege Principle when configuring Lambda permissions.

In the Serverless framework, the default behaviour is to use the same IAM role for all functions in the service.

However, the serverless.yml spec allows you to specify a different IAM role per function, although, as you can see from the examples, it involves a lot more development effort and (in my experience) adds enough friction that almost no one does this…
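To make "least privilege" concrete, here is a rough sketch of the kind of policy document a single read-only function might be given: two specific DynamoDB actions on one specific table, and nothing else. The helper name, account ID and table name are hypothetical; the document structure follows the standard IAM policy JSON format.

```python
import json


def table_read_policy(table_arn):
    """IAM policy document allowing read-only access to a single DynamoDB table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Only the actions this function actually needs, no wildcards.
                "Action": ["dynamodb:GetItem", "dynamodb:Query"],
                "Resource": [table_arn],
            }
        ],
    }


# Hypothetical account ID and table name, for illustration only.
EXAMPLE_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/orders"
EXAMPLE_POLICY_JSON = json.dumps(table_read_policy(EXAMPLE_ARN), indent=2)
```

If a function with this policy is compromised, the attacker can read one table; with a shared service-wide role they inherit every permission that any function in the service needs.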

IAM policy not versioned with Lambda

A shortcoming with the current Lambda + IAM configuration is that IAM policies are not versioned along with the Lambda function.

When you have multiple versions of the same function in active use (perhaps with different aliases), it becomes problematic to add or remove permissions:

- adding a new permission to a new version of the function allows old versions of the function additional access that they don’t require (and poses a vulnerability)

- removing an existing permission from a new version of the function can break old versions of the function that still require that permission

Since the 1.0 release of the Serverless framework this has become less of a problem as it no longer uses aliases for stages – instead, each stage is deployed as a separate function, e.g.

- service-function-dev

- service-function-staging

- service-function-prod

which means it’s far less likely that you’ll need to have multiple versions of the same function in active use.

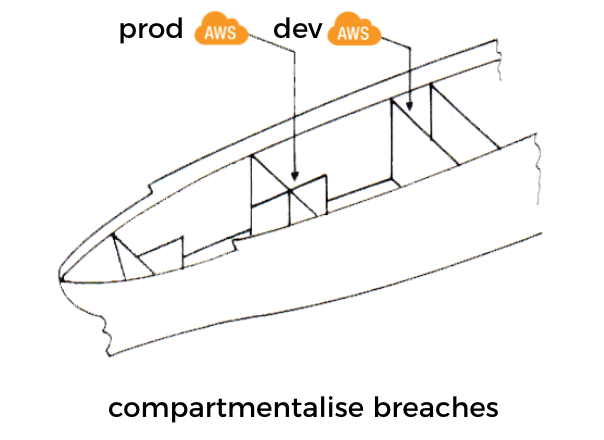

I have also found (from personal experience) that account-level isolation can help mitigate the problems of adding/removing permissions, and crucially, the isolation also helps compartmentalise security breaches – e.g. a compromised function running in a non-production account cannot be used to cause harm in the production account and impact your users.

Delete unused functions

One of the benefits of the serverless paradigm is that you don’t pay for functions when they’re not used.

The flip side of this property is that you have less need to remove unused functions since they don’t show up on your bill. However, these functions still exist as attack surface, even more so than actively used functions because they’re less likely to be updated and patched. Over time, these unused functions can become a hotbed for components with known vulnerabilities that attackers can exploit.

Lambda’s documentation also cites this as one of its best practices:

Delete old Lambda functions that you are no longer using.
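A rough way to surface candidates for deletion is to look for functions that have not been deployed in a long time. The sketch below assumes you have already collected (name, last-modified) pairs from wherever you keep your inventory; the function name, the input shape and the 180-day threshold are all hypothetical. Last-modified time is only a proxy for "not maintained", and invocation metrics would be the better signal for "not used".

```python
from datetime import datetime, timedelta, timezone


def stale_functions(functions, now, max_age_days=180):
    """Return the names of functions last deployed more than max_age_days ago.

    `functions` is an iterable of (name, last_modified) pairs, where
    last_modified is a timezone-aware datetime.
    """
    cutoff = now - timedelta(days=max_age_days)
    return sorted(name for name, last_modified in functions if last_modified < cutoff)
```

For example, calling it with `now=datetime.now(timezone.utc)` gives you a sorted list of names to review by hand before anything is deleted.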

The changing face of DoS attacks

With AWS Lambda you are far more likely to scale your way out of a DoS attack. However, scaling your serverless architecture aggressively to fight a DoS attack with brute force has a significant cost implication.

No wonder people started calling DoS attacks against serverless applications Denial of Wallet (DoW) attacks!

“But you can just throttle the no. of concurrent invocations, right?”

Sure, and you end up with a DoS problem instead… it’s a lose-lose situation.

AWS recently introduced AWS Shield but at the time of writing the payment protection (only if you pay a monthly flat fee for AWS Shield Advanced) does not cover Lambda costs incurred during a DoS attack.

Also, Lambda has an at-least-once invocation policy. According to the folks at SunGard, this can result in up to 3 (successful) invocations. From the article, the reported rate of multiple invocations is extremely low – 0.02% – but one wonders if the rate is tied to the load and might manifest itself at a much higher rate during a DoS attack.

Furthermore, you need to consider how Lambda retries failed invocations by an asynchronous source – eg. S3, SNS, SES, CloudWatch Events, etc. Officially, these invocations are retried twice before they’re sent to the assigned DLQ (if any).

However, an analysis by the OpsGenie guys showed that the no. of retries is not set in stone and can go up to as many as 6 before the invocation is sent to the DLQ.

If the DoS attacker is able to trigger failed async invocations (perhaps by uploading files to S3 that will cause your function to throw an exception when attempting to process them) then they can significantly magnify the impact of their attack.

All of this adds up to the potential for the actual no. of Lambda invocations to explode during a DoS attack. As we discussed earlier, whilst your infrastructure might be able to handle the attack, can your wallet stretch to the same extent? Should you allow it to?
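The amplification can be put into rough numbers with some back-of-the-envelope arithmetic. The sketch below assumes list prices of $0.20 per million requests and $0.00001667 per GB-second; treat both as assumptions and check current pricing. It also ignores the free tier and any rounding of billed duration, and the function name is hypothetical.

```python
# Assumed list prices in USD; verify against current Lambda pricing.
PRICE_PER_REQUEST = 0.20 / 1_000_000
PRICE_PER_GB_SECOND = 0.00001667


def estimated_cost(requests, avg_duration_ms, memory_mb, attempts_per_request=1):
    """Rough Lambda bill in USD for a burst of requests.

    attempts_per_request models retries and duplicate invocations: every
    attempt is billed as an invocation in its own right.
    """
    invocations = requests * attempts_per_request
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return invocations * PRICE_PER_REQUEST + gb_seconds * PRICE_PER_GB_SECOND
```

Under these assumptions the bill grows linearly with the number of attempts, so an attacker who can force every async invocation to fail and be retried twice roughly triples the cost of the same traffic.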

Securing external data

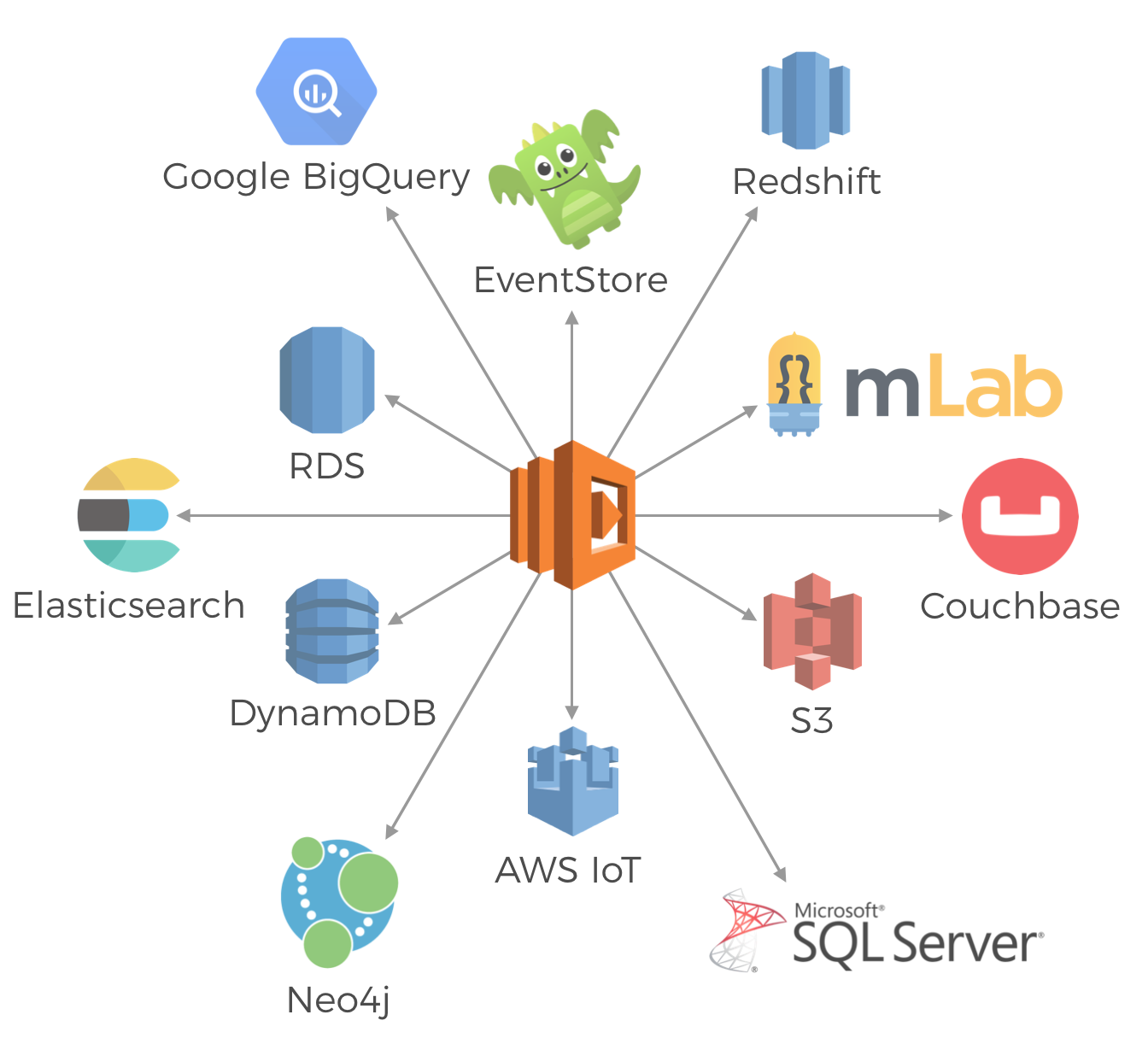

Due to the ephemeral nature of Lambda functions, chances are all of your functions are stateless. More than ever, state is stored in external systems and we need to secure it both at rest and in transit.

Communication with all AWS services is via HTTPS, and every request needs to be signed and authenticated. A handful of AWS services also offer server-side encryption for your data at rest – S3, RDS and Kinesis streams spring to mind – and Lambda has built-in integration with KMS to encrypt your functions’ environment variables.

The same diligence needs to be applied when storing sensitive data in services/DBs that do not offer built-in encryption – e.g. DynamoDB, Elasticsearch, etc. – to ensure the data is protected at rest. In the case of a data breach, this provides another layer of protection for our users’ data.

We owe our users that much.

Use secure transport when transmitting data to and from services (both external and internal ones). If you’re building APIs with API Gateway and Lambda then you’re forced to use HTTPS by default, which is a good thing. However, API Gateway is always publicly accessible and you need to take the necessary precautions to secure access to internal APIs.

You can use API keys but I think it’s better to use IAM roles. They give you fine-grained control over who can invoke which actions on which resources. Also, using IAM roles spares you from awkward conversations like this:

“It’s X’s last day, he probably has our API keys on his laptop somewhere, should we rotate the API keys just in case?”

“mm.. that’d be a lot of work, X is trustworthy, he’s not gonna do anything.”

“ok… if you say so… (secretly pray X doesn’t lose his laptop or develop a belated grudge against the company)”

Fortunately, both can be easily configured using the Serverless framework.
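With IAM authorization there is no shared API key to hand around: callers sign each request with their own credentials using AWS Signature Version 4. As an illustration of one step of that process, the sketch below derives the SigV4 signing key with the standard HMAC-SHA256 chain. It is not a full request signer (in practice an AWS SDK does all of this for you), the function names are my own, and for API Gateway endpoints the service name is `execute-api`.

```python
import hashlib
import hmac


def _hmac_sha256(key, message):
    """HMAC-SHA256 of a text message under a bytes key, returned as raw bytes."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key, date_stamp, region, service):
    """Derive the Signature Version 4 signing key.

    date_stamp is the request date as YYYYMMDD. The key is scoped to a single
    day, region and service.
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")
```

Because the derived key is scoped to a date, region and service, and because roles issue temporary credentials, there is far less long-lived secret material sitting on anyone's laptop.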

Leaked credentials

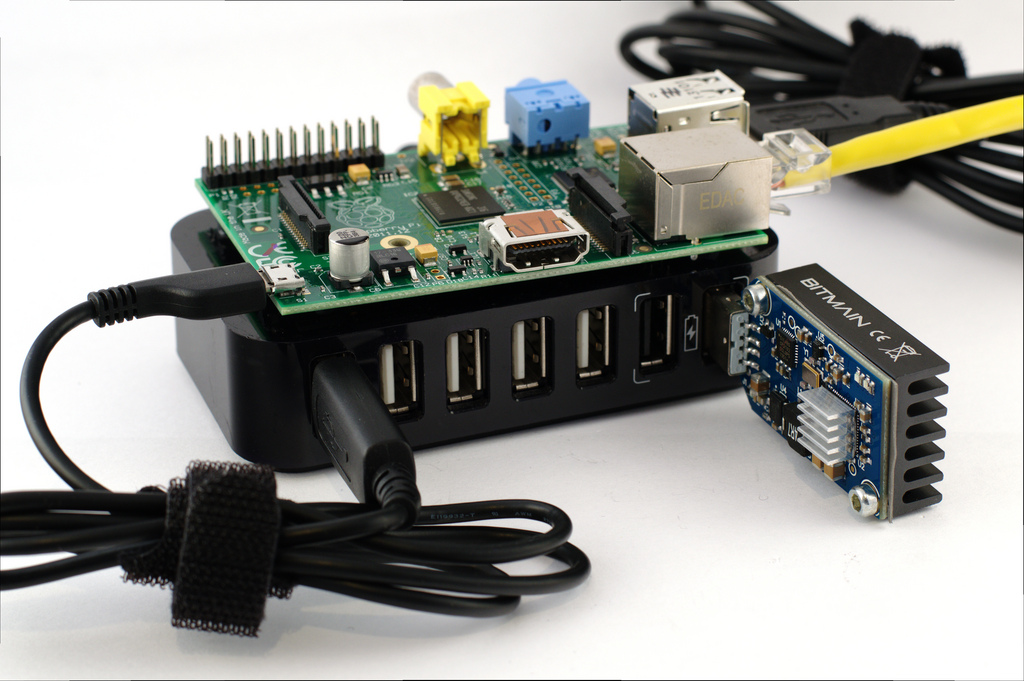

The internet is full of horror stories of developers racking up a massive AWS bill after their leaked credentials are used by cyber-criminals to mine bitcoins. For every such story, many more would have been affected but chose to stay silent (for the same reason many security breaches are not announced publicly: big companies do not want to appear incompetent).

Even within my small social circle (*sobs) I have heard of 2 such incidents; neither was made public and both resulted in over $100k worth of damages. Fortunately, in both cases AWS agreed to cover the cost.

I know for a fact that AWS actively scans public GitHub repos for active AWS credentials and tries to alert you as soon as possible. But as some of the above stories mentioned, even if your credentials were leaked only for a brief window of time, they will not escape the watchful gaze of attackers. (Plus, they still exist in the git commit history unless you rewrite the history too, so it’s best to deactivate the credentials if possible.)

A good approach to prevent AWS credential leaks is to use git pre-commit hooks as outlined by this post.
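As a rough idea of what such a hook does (this is not the script from the linked post), the sketch below scans the lines added in the staged diff for strings shaped like AWS access key IDs: 20 upper-case alphanumeric characters beginning with `AKIA` for long-lived keys or `ASIA` for temporary ones. The function names are hypothetical, and a purpose-built tool will cover more patterns, including secret keys.

```python
import re
import subprocess
import sys

# Access key IDs are 20 characters long; long-lived keys start with AKIA,
# temporary (STS) keys start with ASIA.
ACCESS_KEY_PATTERN = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")


def find_access_keys(text):
    """Return every substring of text that looks like an AWS access key ID."""
    return ACCESS_KEY_PATTERN.findall(text)


def main():
    """Return 1 (blocking the commit) if the staged changes add a likely key."""
    staged = subprocess.run(
        ["git", "diff", "--cached", "--unified=0"],
        capture_output=True, text=True, check=True,
    ).stdout
    # Only inspect lines being added, not removed lines or context.
    added = "\n".join(line for line in staged.splitlines() if line.startswith("+"))
    if find_access_keys(added):
        print("Refusing to commit: possible AWS access key ID found", file=sys.stderr)
        return 1
    return 0
```

To use it as a hook, an executable `.git/hooks/pre-commit` script would call `sys.exit(main())`; git aborts the commit whenever the hook exits with a non-zero status.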

Conclusions

We looked at a number of security threats to our serverless applications in this post; many of them are the same threats that have plagued the software industry for years. All of the OWASP top 10 still apply to us, including SQL, NoSQL and other forms of injection attacks.

Leaked AWS credentials remain a major issue and can potentially impact any organisation that uses AWS. Whilst there are quite a few publicly reported incidents, I have a strong feeling that the actual no. of incidents is much, much higher.

We are still responsible for securing our users’ data both at rest and in transit. API Gateway is always publicly accessible, so we need to take the necessary precautions to secure access to our internal APIs, preferably with IAM roles. IAM offers fine-grained control over who can invoke which actions on your API resources, and makes it easy to manage access when employees come and go.

On a positive note, having AWS take over the responsibility for the security of the host OS gives us a no. of security benefits:

- protection against OS attacks because AWS can do a much better job of patching known vulnerabilities in the OS

- the host OS is ephemeral, which means no long-lived compromised servers

- it’s much harder to accidentally leave sensitive data exposed by forgetting to turn off directory listing options in a web framework – because you no longer need such web frameworks!

DoS attacks have taken a new form in the serverless world. Whilst you’re probably able to scale your way out of an attack, it’ll still hurt you in the wallet, which is why DoS attacks have become known in the serverless world as Denial of Wallet attacks. Lambda costs incurred during a DoS attack are not covered by AWS Shield Advanced at the time of writing, but hopefully they will be in the near future.

Meanwhile, some new attack surfaces have emerged with AWS Lambda:

- functions are often given too much permission because they’re not given individual IAM policies tailored to their needs; a compromised function can therefore do more harm than it might otherwise

- unused functions are often left around for a long time as there is no cost associated with them, but attackers might be able to exploit them especially if they’re not actively maintained and therefore are likely to contain known vulnerabilities

Above all, the most worrisome threat for me is attacks against the package authors themselves. It has been shown that many authors do not take the security of their accounts seriously, and as such endanger themselves as well as the rest of the community that depends on them. It’s impossible to guard against such attacks, and they erode one of the strongest aspects of any software ecosystem – the community behind it.

Once again, people have proven to be the weakest link in the security chain.


AWS Macie can help here as well https://aws.amazon.com/macie/ it’s a brand new service for S3 but it’s expected to cover more services by the end of the year.

I haven’t played with it yet, but it sounds promising and has good reviews (e.g. Netflix). Another tool to the mix and more to come…

Hey Bruno, good shout, saw the announcement the other day, it looks really promising. Surprised by the no. of announcements so early this year, usually they save a lot of new service announcements for re:Invent. I’m going this year with some folks from Space Ape and Supercell, are you gonna be there by any chance?

Loved reading the article, and the slides were very informative. In addition to Macie, AWS has updated their console screens such that no human can accidentally leave the “Any Authenticated AWS user” option selected. It can only be done via CLI now.