Yan Cui


Note: don’t forget to check out the Benchmarks page to see the latest roundup of binary and JSON serializers.

A little while ago I put together a quick performance test comparing the BCL’s BinaryFormatter with Marc Gravell’s protobuf-net library (a .NET implementation of Google’s Protocol Buffers format). You can read more about my results here.

There’s another fast binary serialization library I had heard about before, MessagePack, which claims to be 4 times faster than Protocol Buffers. I find that a little hard to believe, so naturally I had to see for myself!

Tests

The test conditions are very similar to those outlined in my first post, except that MessagePack is now included.

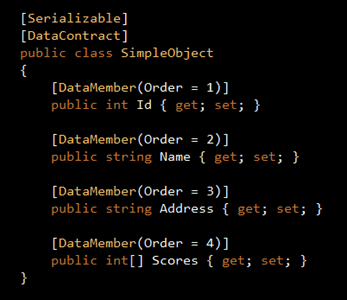

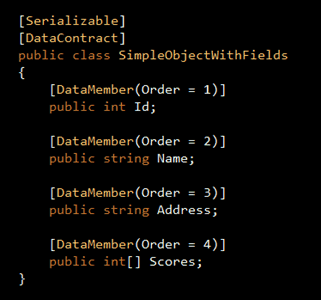

I defined two types to be serialized and deserialized. Both contain the same data; one exposes it as public properties whilst the other exposes it as public fields.

The reason for this arrangement is to try and get the best performance out of MessagePack (see Note 2 below).
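As a rough illustration, the two types might look something like the sketch below. Only the type names, the int-array Scores member and the use of [DataMember] come from this post; the Id and Name members, the Order values and the [Serializable]/[DataContract] attributes are assumptions added to make the sketch self-contained.

```csharp
using System;
using System.Runtime.Serialization;

// Property-based variant: the idiomatic .NET shape.
[Serializable]
[DataContract]
public class SimpleObject
{
    [DataMember(Order = 1)]
    public int Id { get; set; }          // hypothetical member

    [DataMember(Order = 2)]
    public string Name { get; set; }     // hypothetical member

    [DataMember(Order = 3)]
    public int[] Scores { get; set; }    // int array rather than List<int>
}

// Field-based variant: same data, exposed as public fields instead.
[Serializable]
[DataContract]
public class SimpleObjectWithFields
{
    [DataMember(Order = 1)]
    public int Id;                       // hypothetical member

    [DataMember(Order = 2)]
    public string Name;                  // hypothetical member

    [DataMember(Order = 3)]
    public int[] Scores;
}
```

Keeping the member names and types identical across the two classes means any difference in the measurements comes from properties versus fields rather than from the data itself.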

100,000 identical instances of the above types are serialized and deserialized over 5 runs. The best and worst results are excluded, and the remaining 3 runs are averaged to give the final results.
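The averaging scheme can be sketched as below. This is not the SimpleSpeedTester implementation, just a minimal stand-in; the TrimmedTimer class and its method names are hypothetical.

```csharp
using System;
using System.Diagnostics;
using System.Linq;

public static class TrimmedTimer
{
    // Drops the single fastest and single slowest run, then averages the rest.
    public static double TrimmedAverage(double[] runsMs)
    {
        if (runsMs == null || runsMs.Length < 3)
            throw new ArgumentException("Need at least 3 runs.", "runsMs");

        var sorted = runsMs.OrderBy(x => x).ToArray();
        return sorted.Skip(1).Take(sorted.Length - 2).Average();
    }

    // Times the action `runs` times and returns the trimmed average in milliseconds.
    public static double Measure(Action action, int runs = 5)
    {
        var timings = new double[runs];
        for (var i = 0; i < runs; i++)
        {
            var stopwatch = Stopwatch.StartNew();
            action();
            stopwatch.Stop();
            timings[i] = stopwatch.Elapsed.TotalMilliseconds;
        }
        return TrimmedAverage(timings);
    }
}
```

With 5 runs this leaves 3 timings in the average, so a single outlier (a GC pause or a cold start, say) in either direction does not skew the reported figure.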

Results

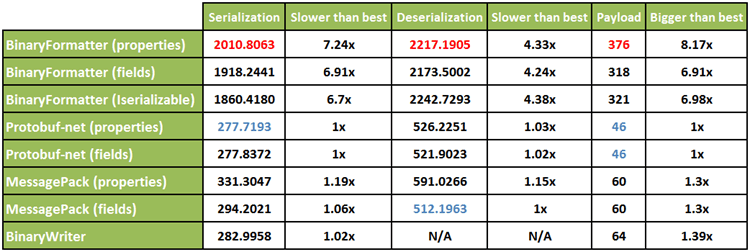

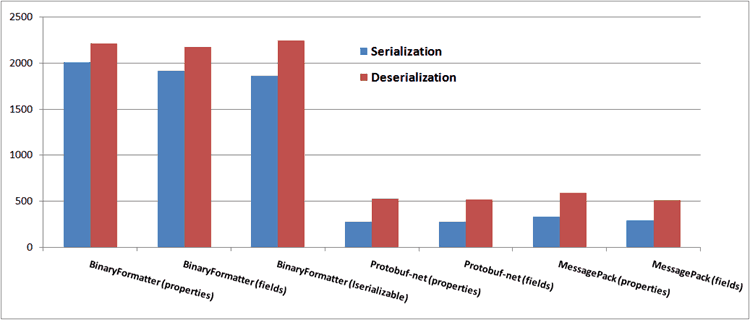

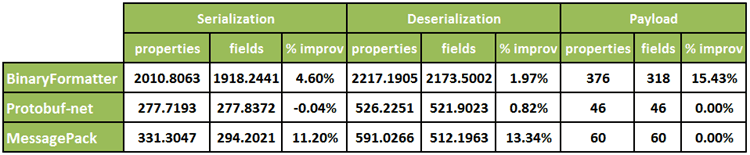

A couple of observations from these results:

1. BinaryFormatter performs better with fields – faster serialization and smaller payload!

2. Protobuf-net performs equally well with fields and properties – because it’s driven by the [DataMember] attributes.

3. MessagePack performs significantly better with fields – over 10% faster serialization and deserialization!

4. MessagePack is NOT 4x faster than Protocol Buffers – at least not with the two implementations (MsgPack and protobuf-net) I have tested here. Speed-wise there’s not much separating the two, and depending on the data being serialized you might find a slightly different result.

5. MessagePack generates a bigger payload than Protocol Buffers – again, depending on the data being serialized you might arrive at a slightly different result.

Source code

If you’re interested in running the tests yourself, you can find the source code here. It uses my SimpleSpeedTester framework to orchestrate test runs but you should be able to get the gist of it fairly easily.

Closing thoughts…

Although MessagePack couldn’t back up its claim to be 4x faster than Protocol Buffers in my test here, it’s still great to see that it offers comparable speed and payload size. That makes it a viable alternative to Protocol Buffers, and in my opinion having a number of different options is always a plus!

In addition to serialization, MessagePack also offers an RPC framework, and client implementations are available for quite a number of different languages and platforms, e.g. C#, JavaScript, Node.js, Erlang, Python and Ruby, so it’s definitely worth considering when you start a new project.

On a different note, although you’re able to get a good 10% improvement in performance when you use fields instead of properties to expose publicly available data in your type, it’s important to remember that the idiomatic way in .NET is to use public properties.

As much as I think performance is of paramount importance, maintainability of your code is a close second, especially in an environment where many developers will be working on the same piece of code. By following the ‘recognized’ way of doing things you can more easily communicate your intentions to other developers working on the same code.

Imagine you’ve intentionally used public fields in your class because you know it will be serialized by the MessagePack serializer, but then a new developer joins the team and, not knowing all the intricate details of your code, decides to convert them all to public properties instead…

So if you’re willing to step out of the idiomatic .NET way to get that performance boost, be sure to comment your code well in all the places where you’re diverging from the norm!

Note 1:

The SimpleObject class in this post is slightly different from the one I used in my previous post: the ‘Scores’ property is now an int array instead of a List<int>. Doing so drastically improved performance for ALL the serializers involved.

This is because List<T> uses an array internally and resizes it whenever capacity is reached. So even though you only have x items in a List<T>, the internal array has room for at least x items and usually more. BinaryFormatter serializes that internal array in full, which is why SimpleObject in my first post (where ‘Scores’ is of type List<int>) is serialized into 708 bytes by the BinaryFormatter, whereas the SimpleObject class defined in this post is serialized into 376 bytes.

Similarly, initializing an instance of Dictionary<TKey, TValue> with the intended capacity allows you to add items to it much more efficiently. For more details, see here.
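A small sketch of the capacity behaviour described in this note. The CapacityDemo class and its method names are hypothetical; the exact growth policy of List<T> is an implementation detail, so the code only relies on Capacity never being smaller than Count.

```csharp
using System;
using System.Collections.Generic;

public static class CapacityDemo
{
    // Adds items one at a time to a default-constructed list and returns its
    // final capacity, which is typically larger than the item count.
    public static int CapacityAfterAdds(int items)
    {
        var list = new List<int>();
        for (var i = 0; i < items; i++) list.Add(i);
        return list.Capacity;
    }

    // Same, but with the capacity requested up front, so the internal array
    // never has to be resized while the items are added.
    public static int CapacityWhenPresized(int items)
    {
        var list = new List<int>(items);
        for (var i = 0; i < items; i++) list.Add(i);
        return list.Capacity;
    }

    // Dictionary<TKey, TValue> accepts an initial capacity in the same way.
    public static Dictionary<int, string> BuildLookup(int items)
    {
        var lookup = new Dictionary<int, string>(items);
        for (var i = 0; i < items; i++) lookup[i] = i.ToString();
        return lookup;
    }
}
```

Comparing CapacityAfterAdds(5) with CapacityWhenPresized(5) shows the spare slots that a grown list carries around. Calling ToArray() on a list yields an array of exactly Count elements, which is in effect what switching ‘Scores’ to int[] achieves.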

Note 2:

One caveat I came across in the MessagePack test was that, if you instantiate CompiledPacker without any parameters, the default behaviour is to serialize public fields only, which meant none of the public properties I’ve defined on the SimpleObject type would get serialized. As a result, to serialize/deserialize instances of SimpleObject I needed to use an instance of CompiledPacker that serializes private fields too, which according to MessagePack’s GettingStarted guide is slower.

So in order to get the best performance (speed) out of MessagePack, I defined a second type (see the SimpleObjectWithFields type above) to see how well it does with a type that defines public fields instead of properties. In the interest of fairness, I repeated the same test for BinaryFormatter and protobuf-net, just to see if you get better performance out of them too.
